Artificial intelligence (AI) is reshaping industries and driving demand for more capable hardware. To stay competitive, organizations must plan hardware upgrades that keep pace with the growing compute and memory demands of neural networks.

As AI models grow larger, they need more powerful and more efficient hardware. Upgraded hardware shortens training and inference times and makes larger, often more accurate, models practical to run. Organizations that neglect hardware upgrades may find their AI capabilities constrained and miss opportunities for innovation and growth.

By understanding what their neural network workloads actually require, organizations can build a comprehensive strategy for AI hardware upgrades. This means assessing the compute, memory, and networking needs of their AI applications and evaluating candidate hardware for scalability and compatibility.

Furthermore, organizations must stay abreast of the latest advancements in AI hardware technologies. This involves investing in training and resources to ensure teams are equipped with the knowledge and skills needed to optimize AI hardware integration and performance. Collaborative partnerships can also offer access to expert advice and shared resources, further enhancing the planning and implementation of AI hardware upgrades.

Key Takeaways:

- AI hardware upgrades are vital for meeting the increasing demands of neural networks.

- Planning for AI hardware upgrades requires assessing compute, memory, and networking needs.

- Investing in training and resources helps teams optimize AI hardware integration and performance.

- Collaborative partnerships provide access to expert advice and shared resources for implementing hardware upgrades.

- Staying informed about the latest developments in AI hardware technologies is crucial for effective planning.

The Role of AI Hardware Components

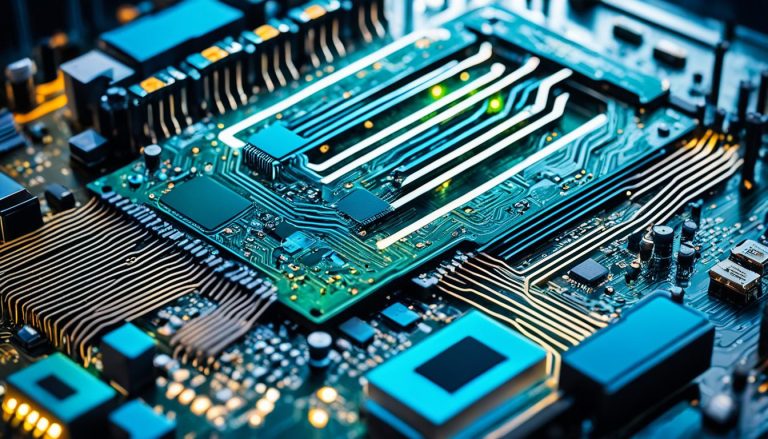

AI systems rely on five main classes of hardware: CPUs, GPUs, TPUs, FPGAs, and memory systems. Each serves a distinct function, and together they make it possible to process and store the vast amounts of data that AI workloads involve.

CPUs: Powering General Computing Tasks

Central Processing Units (CPUs) are the general-purpose workhorses of an AI system. They run the operating system, load and preprocess data, orchestrate accelerators, and execute sequential or branch-heavy code that does not parallelize well. Their relatively small number of powerful cores makes them flexible rather than fast at bulk arithmetic, which is why heavy neural network computation is usually offloaded to accelerators.

GPUs: Parallel Processing for Large Datasets

Graphics Processing Units (GPUs) contain thousands of simple cores that apply the same operation to many data elements at once. Neural network training and inference are dominated by matrix multiplications, which break down into many independent multiply-add operations, so GPUs handle the large datasets and models common in AI much faster than CPUs.
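To see why matrix multiplication suits a GPU, consider the minimal Python sketch below. It is illustrative only and runs sequentially on a CPU, but it shows that each output element is its own dot product that depends on no other output, which is exactly the independent work a GPU spreads across its cores. The helper names `matmul` and `matmul_flops` are hypothetical, and the FLOP count uses the common convention of one multiply and one add per inner-loop step.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Multiply an m x k matrix `a` by a k x n matrix `b`."""
    m, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    n = len(b[0])
    # No C[i][j] depends on any other C[i][j]: this independence is the
    # parallelism a GPU exploits by computing many outputs at the same time.
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
            for i in range(m)]


def matmul_flops(m: int, k: int, n: int) -> int:
    """FLOPs for an (m x k) @ (k x n) product: m*n outputs, each needing
    k multiply-add steps, counted by convention as 2*m*n*k."""
    return 2 * m * n * k


print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
print(matmul_flops(4096, 4096, 4096))  # 137438953472
```

Multiplying two 4096 x 4096 matrices already takes 2^37 (about 1.4e11) floating-point operations, which is why hardware that performs many of them in parallel matters.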

TPUs: Accelerating Machine Learning Workloads

Tensor Processing Units (TPUs) are Google's custom chips (application-specific integrated circuits, or ASICs) designed specifically to accelerate machine learning workloads. At their core is a large matrix multiply unit built as a systolic array, which passes intermediate results directly between neighboring arithmetic units instead of writing them back to memory. This makes training and inference efficient for the dense matrix operations that dominate neural networks.

FPGAs: Versatility and Customization

Field-Programmable Gate Arrays (FPGAs) are chips whose logic can be reconfigured after manufacturing. This versatility lets developers tailor the circuit itself to the specific requirements of an AI application, for example by building a custom data path for a particular model. FPGAs are particularly beneficial for tasks that require real-time processing or low, predictable latency.

Memory Systems: High-Speed Data Storage and Retrieval

Memory systems provide the high-speed data storage and retrieval that the rest of the AI hardware depends on. Memory capacity determines whether a model and its data fit on a device at all, and memory bandwidth determines how quickly the processors can be fed; many AI workloads are limited by bandwidth rather than by raw compute. Whether it is random access memory (RAM), the high-bandwidth memory (HBM) attached to accelerators, or storage-class memory (SCM), the performance of the memory system directly affects the overall efficiency of an AI system.
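Bandwidth limits can be estimated directly. When a neural network generates output one step at a time at batch size 1, as language models do when producing text, each step typically reads all of the model's weights from memory, so memory bandwidth sets a floor on latency. The Python sketch below computes that floor under those simplifying assumptions; the function name is hypothetical, and the 7-billion-parameter model and 1,000 GB/s bandwidth are hypothetical figures.

```python
def min_ms_per_token(num_params: float, bytes_per_param: float,
                     bandwidth_gb_s: float) -> float:
    """Rough lower bound on per-step latency for batch-size-1 generation.

    Assumes every weight is read from memory once per generated token and
    ignores compute time, caching, and all other memory traffic, so real
    latency will be higher.
    """
    weight_bytes = num_params * bytes_per_param
    # bytes / (GB/s * 1e9) gives seconds; bytes / (GB/s * 1e6) gives milliseconds.
    return weight_bytes / (bandwidth_gb_s * 1e6)


# Hypothetical: 7e9 parameters at 2 bytes each (16-bit), 1,000 GB/s bandwidth.
print(min_ms_per_token(7e9, 2, 1000))  # 14.0
```

Under these assumptions, no amount of extra compute pushes this model below about 14 ms per token on that device. Only more bandwidth or fewer bytes per parameter (for example, through quantization) lowers the floor; larger batches raise throughput by sharing each weight read across requests.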

Well-designed AI systems combine these components so that each handles the work it suits best: CPUs for control and data preparation, accelerators for bulk math, and memory systems that keep them supplied with data. Balancing them this way lets organizations get the most out of their AI investments.

The image above illustrates the different AI hardware components and their roles and interactions within AI systems.

| AI Hardware Component | Main Function |
|---|---|
| CPU | Executes general computing tasks |
| GPU | Enables parallel processing for large datasets |
| TPU | Accelerates machine learning workloads |
| FPGA | Offers versatility and customization |
| Memory Systems | Provides high-speed data storage and retrieval |

The table summarizes the main functions of each AI hardware component, highlighting their key roles in supporting AI applications.

Best Practices for AI Hardware Integration and Optimization

When it comes to integrating AI hardware into your infrastructure, following best practices is key to ensuring optimal performance and efficiency. Here are some essential guidelines to consider for successful AI hardware integration and optimization.

1. Prioritize Energy-Efficient Designs

Energy efficiency is crucial for AI systems, as they can consume significant amounts of power. When selecting AI hardware components, prioritize energy-efficient designs that can help reduce operational costs and environmental impact. Look for hardware solutions that offer power-saving features, such as low-power modes and intelligent power management capabilities.
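Comparing candidates by performance per watt, rather than by raw speed or power draw alone, makes this trade-off concrete. The Python sketch below uses made-up throughput and power figures for two hypothetical cards; substitute measured values from your own benchmarks.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    name: str
    throughput: float   # work units per second, e.g. inferences/s (hypothetical)
    power_watts: float  # average power draw under load (hypothetical)

    def perf_per_watt(self) -> float:
        """Work units per second delivered for each watt consumed."""
        return self.throughput / self.power_watts

    def energy_kwh(self, work_units: float) -> float:
        """Energy needed to finish `work_units` running at full throughput."""
        seconds = work_units / self.throughput
        joules = self.power_watts * seconds
        return joules / 3.6e6  # 1 kWh = 3.6 million joules


a = Accelerator("card A", throughput=2000, power_watts=400)
b = Accelerator("card B", throughput=1500, power_watts=200)
for acc in (a, b):
    print(acc.name, acc.perf_per_watt(), round(acc.energy_kwh(1e9), 1))
```

With these assumed numbers, card A is faster, but card B delivers 7.5 work units per second per watt versus 5.0 and needs about 37 kWh instead of about 56 kWh for the same billion work units.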

2. Embrace Software-Hardware Co-design

AI hardware integration should go hand in hand with software development. Embracing software-hardware co-design means tuning the two together: collaborating closely with software developers helps reveal whether a workload is limited by compute, memory bandwidth, or data movement, so hardware configurations can be tailored to its actual requirements. Aligning hardware and software optimization efforts yields better efficiency and performance than optimizing either in isolation.
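A common first step in locating such bottlenecks is the roofline model, sketched below in Python. It assumes a workload's throughput is capped either by the device's peak compute or by its memory bandwidth multiplied by the workload's arithmetic intensity (floating-point operations per byte moved). The function names, the device figures (100 TFLOP/s, 2 TB/s), and the two intensities are hypothetical placeholders, not measurements of any real product.

```python
def attainable_tflops(peak_tflops: float, bandwidth_tb_s: float,
                      intensity_flops_per_byte: float) -> float:
    """Roofline model: throughput is capped by peak compute or by
    memory bandwidth x arithmetic intensity, whichever is lower.
    (TB/s x FLOP/byte = TFLOP/s.)"""
    return min(peak_tflops, bandwidth_tb_s * intensity_flops_per_byte)


def limiting_resource(peak_tflops: float, bandwidth_tb_s: float,
                      intensity_flops_per_byte: float) -> str:
    # The "ridge point" is the intensity at which both limits are equal.
    ridge = peak_tflops / bandwidth_tb_s
    return "compute-bound" if intensity_flops_per_byte >= ridge else "memory-bound"


# Hypothetical device: 100 TFLOP/s peak compute, 2 TB/s memory bandwidth.
PEAK, BW = 100.0, 2.0
for op, intensity in [("elementwise op", 0.25), ("large matmul", 200.0)]:
    print(op, attainable_tflops(PEAK, BW, intensity),
          limiting_resource(PEAK, BW, intensity))
```

On this hypothetical device, the low-intensity operation reaches only 0.5 TFLOP/s regardless of available compute, so faster memory, or software changes such as fusing operations to raise intensity, would help it more than additional compute.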

3. Invest in Training and Resources

AI hardware technologies are rapidly evolving, and staying up to date with the latest advancements is crucial for successful integration and optimization. Invest in regular training programs and resources for your teams to equip them with the necessary knowledge and skills. This will enable them to make informed hardware choices, implement best practices, and efficiently manage AI workloads.

4. Align Hardware Choices with Project Needs

Each AI project may have unique hardware requirements. When selecting AI hardware components, consider the specific needs and constraints of your projects. Take into account factors such as computational power, memory capacity, data throughput, and storage capabilities. By aligning hardware choices with project needs, you can ensure that your infrastructure can effectively support your AI applications.
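Memory capacity is often the first constraint to check. The rule-of-thumb sketch below estimates how much memory a model's state needs: about 2 bytes per parameter for 16-bit inference, and about 16 bytes per parameter for mixed-precision training with the Adam optimizer (16-bit weights and gradients plus 32-bit master weights and two optimizer moments). It deliberately ignores activations and framework overhead, and the 7-billion-parameter model is a hypothetical example.

```python
BYTES_PER_PARAM = {
    # Weights only, 16-bit (FP16/BF16) inference.
    "inference_fp16": 2,
    # Mixed-precision training with Adam: FP16 weights (2) + FP16 gradients (2)
    # + FP32 master weights, momentum, and variance (4 + 4 + 4) = 16 bytes.
    "training_adam_mixed": 16,
}


def model_memory_gb(num_params: float, mode: str) -> float:
    """Memory for model state only; activations, caches, and framework
    overhead are excluded, so treat the result as a lower bound."""
    return num_params * BYTES_PER_PARAM[mode] / 1e9


for mode in BYTES_PER_PARAM:
    print(mode, model_memory_gb(7e9, mode), "GB")
```

A card that comfortably serves such a model (about 14 GB) may be far too small to train it (about 112 GB before activations), so the intended workload, not just the model, should drive the hardware choice.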

5. Consider Hardware Scalability

Scalability is a critical factor when integrating AI hardware. As AI workloads grow and evolve, your hardware infrastructure should be able to scale accordingly. Choose hardware solutions that offer flexibility and scalability, allowing you to easily expand your compute and storage capabilities. This will ensure that your AI system can handle increasing data volumes and complexity without sacrificing performance.
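Adding hardware rarely scales performance linearly. One standard way to estimate the limit is Amdahl's law: if a fraction p of a job can be spread across n devices and the rest runs serially, the speedup is at most 1 / ((1 - p) + p / n). The sketch below assumes p = 0.95, a hypothetical value; measure it for your own workload.

```python
def amdahl_speedup(parallel_fraction: float, n_devices: int) -> float:
    """Amdahl's law: speedup = 1 / ((1 - p) + p / n) for a fixed workload."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if n_devices < 1:
        raise ValueError("n_devices must be >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / n_devices)


for n in (1, 8, 64, 512):
    print(n, round(amdahl_speedup(0.95, n), 1))
```

With 95% of the work parallelizable, speedup can never exceed 20x; 512 devices give about 19.3x. Amdahl's law assumes a fixed workload. When the workload grows along with the cluster, as with larger datasets or models, scaling looks more favorable, but serial parts such as data loading and synchronization still deserve attention when planning for scale.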

6. Explore Partnerships and Collaborative Programs

Collaborating with industry partners and participating in collaborative programs can provide valuable insights and resources for AI hardware integration and optimization. Connect with hardware manufacturers, research institutions, and industry associations to access expert knowledge, joint development initiatives, and shared resources. Partnering with organizations that specialize in AI hardware can offer guidance and support in navigating the complexities of integration and optimization.

By following these best practices, you can maximize the potential of AI hardware integration and optimization, ensuring efficient and high-performing AI systems for your organization.

Common Mistakes to Avoid in AI Hardware Selection

Making the right choices is paramount when selecting AI hardware. Avoiding common mistakes saves organizations time and resources and helps ensure good performance. Let’s explore some of the most prevalent pitfalls and how to steer clear of them.

1. Overlooking Compatibility with Existing Systems

Compatibility is a crucial factor when choosing AI hardware. Neglecting to assess compatibility with existing systems can lead to integration challenges and inefficiencies. Before making any hardware decision, evaluate the current infrastructure thoroughly, including physical fit and free slots, power and cooling capacity, network interconnects, and driver and framework support, and confirm that the new hardware works with each.
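Part of this evaluation can be captured as an explicit checklist. The Python sketch below compares a hypothetical candidate card against a hypothetical existing server on expansion slots, physical length, spare power, and framework support; the class names, field names, and the `framework-x` label are illustrative placeholders, not a real vendor specification format.

```python
from dataclasses import dataclass, field


@dataclass
class ExistingServer:
    # Hypothetical description of a server already in the data center.
    free_expansion_slots: int
    max_card_length_mm: int
    spare_power_watts: int
    installed_frameworks: set[str] = field(default_factory=set)


@dataclass
class CandidateCard:
    # Hypothetical spec sheet for an accelerator under consideration.
    name: str
    expansion_slots_needed: int
    length_mm: int
    power_watts: int
    supported_frameworks: set[str] = field(default_factory=set)


def compatibility_issues(server: ExistingServer, card: CandidateCard) -> list[str]:
    """Return the failed checks; an empty list means no issues were found."""
    issues = []
    if card.expansion_slots_needed > server.free_expansion_slots:
        issues.append("not enough free expansion slots")
    if card.length_mm > server.max_card_length_mm:
        issues.append("card is too long for the chassis")
    if card.power_watts > server.spare_power_watts:
        issues.append("exceeds spare power budget")
    # Set intersection: at least one installed framework version must be supported.
    if not server.installed_frameworks & card.supported_frameworks:
        issues.append("installed ML framework version is not supported")
    return issues


server = ExistingServer(2, 300, 350, {"framework-x 2.1"})
card = CandidateCard("card A", 2, 267, 400, {"framework-x 2.1", "framework-x 2.2"})
print(compatibility_issues(server, card))  # ['exceeds spare power budget']
```

Returning every failed check, rather than stopping at the first, gives procurement and operations teams a complete list to resolve before purchase.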

2. Underestimating Scalability Needs

Scalability is a vital consideration in AI hardware selection. Failing to anticipate future growth and scalability needs can result in limitations and the need for premature upgrades. Organizations should carefully assess their long-term requirements and select hardware that can accommodate expanding workloads and evolving AI demands.
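A simple growth projection helps quantify long-term requirements. Assuming demand grows at a constant compound monthly rate g, solving current * (1 + g)^t = capacity for t gives the number of months until the hardware is saturated. The function name and the utilization and growth figures below are hypothetical.

```python
import math


def months_until_full(current_demand: float, capacity: float,
                      monthly_growth: float) -> float:
    """Months until demand, compounding at `monthly_growth` per month,
    reaches `capacity`: t = ln(capacity / current) / ln(1 + g)."""
    if current_demand >= capacity:
        return 0.0
    if monthly_growth <= 0:
        return math.inf  # demand never catches up with capacity
    return math.log(capacity / current_demand) / math.log1p(monthly_growth)


# Hypothetical: cluster at 40% utilization, demand growing 5% per month.
print(round(months_until_full(40, 100, 0.05), 1))  # 18.8
```

Here the cluster fills up in under 19 months; if the hardware is expected to stay in service longer than that, plan headroom or an expansion path before purchase.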

3. Ignoring Energy Efficiency

Energy efficiency is a critical aspect that should not be overlooked. AI systems can be power-intensive, and neglecting energy efficiency can lead to increased operating costs and environmental impact. When evaluating hardware options, organizations should prioritize energy-efficient designs to minimize power consumption without compromising performance.

4. Neglecting Software-Hardware Synergy

Software-hardware synergy is a key consideration in AI hardware selection. The hardware chosen should be capable of effectively supporting the software frameworks and tools required for the AI applications. Organizations must ensure compatibility and synergy between the chosen hardware and software ecosystem to optimize performance and minimize any potential bottlenecks.

5. Overfocusing on Cost

While cost is an important factor in any decision-making process, overfocusing on cost alone can lead to suboptimal choices. The selection of AI hardware should consider a balance between performance, scalability, energy efficiency, and cost. Prioritizing cost savings without evaluating the broader requirements may result in compromised performance, limited scalability, and increased operational costs in the long run.
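Comparing options by total cost per unit of work, rather than by purchase price, guards against this. The Python sketch below is a deliberately simplified total-cost-of-ownership model that adds electricity over the service life to the purchase price and divides by the work completed. It ignores cooling, space, maintenance, staffing, and idle power, and the function name and all prices and specifications in the example are hypothetical.

```python
def cost_per_million_units(purchase_price: float, power_watts: float,
                           throughput_per_s: float, years: float,
                           price_per_kwh: float, utilization: float = 1.0) -> float:
    """Simplified TCO per million work units: purchase price plus electricity
    while busy over the service life, divided by the work completed."""
    busy_hours = years * 365 * 24 * utilization
    energy_cost = power_watts / 1000 * busy_hours * price_per_kwh  # kW * h * price
    units_done = throughput_per_s * busy_hours * 3600
    return (purchase_price + energy_cost) / (units_done / 1e6)


# Hypothetical cards over a 3-year life at 0.15 per kWh, running continuously.
cheap = cost_per_million_units(5000, 300, 500, years=3, price_per_kwh=0.15)
premium = cost_per_million_units(15000, 400, 2000, years=3, price_per_kwh=0.15)
print(round(cheap, 3), round(premium, 3))  # 0.131 0.088
```

With these assumed figures, the card that costs three times as much up front works out to about 0.09 per million work units versus about 0.13 for the cheaper card, because it completes four times as much work over the same three years.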

By avoiding these common mistakes and following the best practices above, organizations can select AI hardware that is compatible, scalable, energy-efficient, and well matched to their software ecosystem. Defining clear AI goals, aligning software and hardware choices, planning data management, and evaluating hardware options thoroughly are the core steps in building a robust, future-proof AI infrastructure that delivers good performance at a reasonable cost.

Conclusion

Planning for AI hardware upgrades is crucial for organizations to keep up with the increasing demands of neural networks. By following best practices for AI hardware integration and optimization, companies can ensure optimal performance, scalability, and cost-efficiency in their AI infrastructure.

Avoiding common mistakes in AI hardware selection is essential for building a robust and future-proof AI system. It is important to consider factors such as compatibility, scalability, energy efficiency, and software-hardware synergy in order to make the right hardware choices.

With the right AI hardware upgrades and a strategic approach, organizations can harness the full potential of AI and stay ahead in the rapidly evolving tech industry. By staying updated on the latest technologies and best practices, companies can ensure that their AI systems perform at their best, both now and in the future.

FAQ

What is the importance of planning for AI hardware upgrades?

Planning for AI hardware upgrades is crucial for keeping up with the demands of neural networks and ensuring optimal performance, scalability, and cost-efficiency.

What are the different components of AI hardware?

AI hardware consists of CPUs, GPUs, TPUs, FPGAs, and memory systems. CPUs handle general computing tasks, while GPUs excel at parallel processing. TPUs are designed for machine learning workloads, FPGAs offer versatility and customization, and memory systems are crucial for high-speed data storage and retrieval.

What are the best practices for AI hardware integration and optimization?

Best practices for AI hardware integration and optimization include prioritizing energy-efficient designs, embracing software-hardware co-design, investing in training and resources, aligning hardware choices with project needs, considering hardware scalability, and exploring partnerships and collaborative programs.

What common mistakes should be avoided in AI hardware selection?

When selecting AI hardware, it is important to avoid common mistakes such as overlooking compatibility with existing systems, underestimating scalability needs, ignoring energy efficiency, neglecting software-hardware synergy, and overfocusing on cost.