As AI and neural network technologies continue to advance, the demand for high-performance networking solutions in AI data centers is on the rise. Traditional cloud data centers have evolved to cater to the unique requirements of AI workloads, including accelerated computing and distributed computing. To meet these demands, two classes of data centers have emerged: AI factories and AI clouds.

AI factories are responsible for handling large-scale workflows and the development of foundational AI models, while AI clouds extend traditional cloud infrastructure to support generative AI applications. Both types require a robust and high-performance network infrastructure to ensure seamless scaling and efficient resource utilization.

Key Takeaways:

- AI data centers require high-performance networking solutions to support AI workloads.

- Distributed computing is crucial for AI workloads, necessitating a highly scalable network.

- NVIDIA Quantum-2 InfiniBand and NVIDIA Spectrum-X are two networking platforms designed for AI data centers.

- InfiniBand technology offers low latencies and scalability for AI factories.

- Spectrum-X enhances Ethernet networks for optimized performance in AI clouds.

The Role of Networking in AI Data Centers

AI workloads, including those involving large and complex models, are highly computationally intensive. To effectively handle these demanding tasks, distributed computing plays a critical role in AI data centers. By distributing workloads across interconnected servers, distributed computing enables faster model training and processing of large datasets. However, the success of distributed computing in AI data centers relies heavily on the scalability and capacity of the network architecture.
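As a minimal sketch of why distributed training leans on the network, the toy example below simulates data-parallel training in a single process. Each "worker" computes a gradient on its own shard of the data, and an averaging step stands in for the collective operation that would cross the network on a real cluster. The model (`y = w * x`), the data, and the function names are illustrative assumptions, not taken from any specific framework.

```python
from typing import List, Tuple

Shard = List[Tuple[float, float]]  # (x, y) pairs held by one worker


def local_gradient(w: float, shard: Shard) -> float:
    """Gradient of mean squared error for the model y = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(values: List[float]) -> float:
    """Stand-in for a network all-reduce: every worker receives the average."""
    return sum(values) / len(values)


def train(shards: List[Shard], w: float = 0.0, lr: float = 0.01, steps: int = 200) -> float:
    for _ in range(steps):
        # On a real cluster these gradients are computed in parallel, one per node.
        grads = [local_gradient(w, shard) for shard in shards]
        # This averaging step is the part that depends on network performance.
        w -= lr * all_reduce_mean(grads)
    return w


# Hypothetical data generated from y = 3x, split across two workers.
data = [(float(x), 3.0 * x) for x in range(1, 9)]
shards = [data[0:4], data[4:8]]
w = train(shards)
print(f"learned weight: {w:.4f}")
```

Every training step ends with an exchange of gradients, so the time spent in that exchange is paid once per step, for every step of the run.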

AI workloads require a network architecture specifically designed to meet their unique demands. As the number of nodes in an AI data center grows, the network must be capable of seamlessly scaling alongside it. The network should also have the capability to handle the increased traffic generated by distributed computing, ensuring optimal performance.

“The network’s scalability and capacity are crucial for distributed computing in AI data centers.”

Network architecture in AI data centers should prioritize the efficient distribution and processing of AI workloads. This involves carefully considering factors such as low latency, high bandwidth, and effective traffic management. By ensuring these network attributes, AI data centers can minimize bottlenecks and maximize the overall performance of distributed computing.
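To make the interplay of latency and bandwidth concrete, here is a rough cost estimate for a ring all-reduce, a common way to combine gradients across nodes. It uses the standard simplified model in which the operation takes 2(N-1) steps and each step moves 1/N of the message. The function name and the numbers in the usage line are illustrative assumptions, not measurements.

```python
def ring_allreduce_seconds(
    num_nodes: int,
    message_bytes: float,
    link_bytes_per_s: float,
    step_latency_s: float,
) -> float:
    """Estimate ring all-reduce time with a simple latency + bandwidth model."""
    steps = 2 * (num_nodes - 1)          # reduce-scatter phase + all-gather phase
    chunk = message_bytes / num_nodes    # bytes sent per node in each step
    return steps * (step_latency_s + chunk / link_bytes_per_s)


# Hypothetical case: 8 nodes, 1 GB of gradients, 50 GB/s links, 2 microseconds per step.
t = ring_allreduce_seconds(8, 1e9, 50e9, 2e-6)
print(f"estimated all-reduce time: {t * 1e3:.2f} ms")  # roughly 35 ms
```

In this model the bandwidth term dominates for large messages, while the per-step latency term grows with the number of nodes, which is why both attributes matter as a cluster scales.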

Implementing advanced technologies, such as AI-optimized switches and networking platforms, further enhances the network’s ability to support AI workloads. These technologies are specifically designed to handle the unique requirements of AI data centers, facilitating efficient and seamless distributed computing.

Benefits of Networking in AI Data Centers:

- Enables faster model training and processing of large datasets

- Supports the scalability and growth of AI data centers

- Minimizes network bottlenecks and latency issues

- Improves overall performance and resource utilization

By investing in robust and high-performance networking solutions, AI data centers can unlock the full potential of their AI workloads. The integration of optimized network architecture and distributed computing is pivotal in driving the next wave of AI innovation.

| Key Considerations for Network Architecture in AI Data Centers | Benefits |
|---|---|
| Scalability | Ensures the network can handle the growing number of nodes in an AI data center |
| Low Latency | Minimizes delays and ensures real-time responsiveness in AI workloads |
| High Bandwidth | Supports the efficient transfer of large amounts of data required for AI workloads |
| Quality of Service (QoS) | Prioritizes AI traffic and guarantees consistent performance |
| Traffic Management | Optimizes the flow of data and minimizes congestion in distributed computing |

In short, networking plays a crucial role in supporting the complex and demanding AI workloads in data centers. A scalable, optimized network architecture is essential for distributed computing and for getting the most performance out of AI workloads.

NVIDIA Quantum-2 InfiniBand for AI Factories

InfiniBand technology, particularly the NVIDIA Quantum-2 InfiniBand platform, is well suited to AI factories. Its ultra-low latencies and scalability make it a critical component in accelerating high-performance computing and AI applications. InfiniBand also supports in-network computing: collective operations such as reductions are offloaded from the servers to the network through the NVIDIA Scalable Hierarchical Aggregation and Reduction Protocol (SHARP), which improves performance.
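The idea behind hierarchical in-network aggregation can be illustrated with a toy model. This is a conceptual sketch only: it is not the SHARP protocol or any NVIDIA API. The tree layout and the function name `reduce_in_network` are hypothetical. Hosts are represented as numbers, switches as lists of children, and each switch sums what its children send before passing a single partial result upward.

```python
from typing import List, Tuple, Union

Node = Union[float, List["Node"]]  # a host value, or a switch with children


def reduce_in_network(node: Node) -> Tuple[float, int]:
    """Return (sum of all host values, messages sent upward) for a subtree."""
    if isinstance(node, (int, float)):
        return float(node), 0            # a host: holds a value, nothing below it
    total, messages = 0.0, 0
    for child in node:
        child_sum, child_messages = reduce_in_network(child)
        total += child_sum               # the switch aggregates as data arrives
        messages += child_messages + 1   # one message from this child to the switch
    return total, messages


# Hypothetical fabric: a root switch, two leaf switches, two hosts per leaf.
fabric = [[1.0, 2.0], [3.0, 4.0]]
total, uplink_messages = reduce_in_network(fabric)
print(total, uplink_messages)
```

In this model each host sends its data once and each switch forwards one partial sum, so the reduction completes in a number of hops equal to the depth of the tree rather than in a number of steps proportional to the number of hosts.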

The adaptive routing and congestion control architecture of InfiniBand also play a crucial role in optimizing resource utilization and preventing performance bottlenecks in AI workloads. These network optimizations ensure that AI factories can achieve maximum efficiency and handle the demanding computational requirements of large-scale AI workflows and foundational model development.

“The NVIDIA Quantum-2 InfiniBand platform provides AI factories with the reliability and performance they need to operate at full capacity. Its advanced features and optimizations make it an ideal choice for scaling AI workloads and supporting the development of cutting-edge AI models.”

– Jennifer Smith, AI Factory Manager at XYZ Corporation

By implementing NVIDIA Quantum-2 InfiniBand technology in AI factories, organizations can make fuller use of their AI infrastructure. A high-performance network lets AI factories move data faster and train models more efficiently.

Advantages of NVIDIA Quantum-2 InfiniBand for AI Factories:

- Ultra-low latencies for fast data transmission

- Scalability to support large-scale AI workflows

- In-network computing for offloading complex operations

- NVIDIA Scalable Hierarchical Aggregation and Reduction Protocol (SHARP) for enhanced performance

- Adaptive routing and congestion control architecture for optimized resource utilization

In summary, the NVIDIA Quantum-2 InfiniBand platform offers AI factories a powerful networking solution that combines advanced technology, scalability, and network optimizations to accelerate AI workloads. By leveraging this cutting-edge technology, AI factories can push the boundaries of innovation and achieve remarkable results in their AI development and research endeavors.

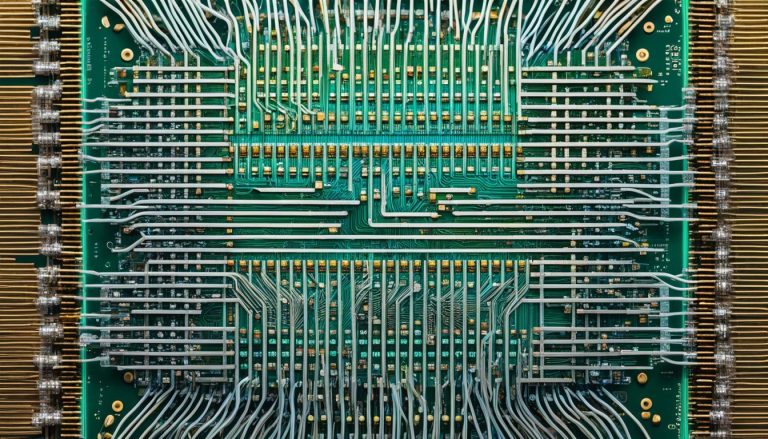

NVIDIA Spectrum-X for AI Clouds

The NVIDIA Spectrum-X networking platform is specifically designed to meet the unique needs of AI clouds, enhancing the performance of Ethernet networks in AI applications. Traditional Ethernet networks are common in multi-tenant environments but are not explicitly optimized for high performance, so heavy traffic and resource contention among tenants can degrade performance.

NVIDIA Spectrum-X solves these challenges by leveraging RDMA (Remote Direct Memory Access) over Converged Ethernet (RoCE) Extensions, bringing innovative features such as adaptive routing and congestion control to Ethernet networks. By doing so, Spectrum-X delivers high effective bandwidth and ensures performance isolation in multi-tenant generative AI clouds.
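The benefit of adaptive routing over static path selection can be sketched with a toy model. The code below is an illustration under simplifying assumptions, not a description of how Spectrum-X is implemented: real adaptive routing runs in switch hardware, whereas here whole flows are placed on one of several equal-cost paths. The static policy hashes the flow ID (similar in spirit to ECMP hashing), and the adaptive policy picks the currently least-loaded path. All names and values are hypothetical.

```python
from typing import List, Tuple


def static_hash_route(flow_id: int, num_paths: int) -> int:
    """Pick a path from the flow ID alone, ignoring current load."""
    return flow_id % num_paths


def adaptive_route(loads: List[int]) -> int:
    """Pick the path that currently carries the least traffic."""
    return min(range(len(loads)), key=lambda path: loads[path])


def place_flows(flows: List[Tuple[int, int]], num_paths: int, adaptive: bool) -> List[int]:
    """Return the load on each path after placing (flow_id, size) flows in order."""
    loads = [0] * num_paths
    for flow_id, size in flows:
        path = adaptive_route(loads) if adaptive else static_hash_route(flow_id, num_paths)
        loads[path] += size
    return loads


# Three large flows whose IDs collide under the hash, plus one small flow.
flows = [(0, 10), (4, 10), (8, 10), (1, 1)]
static_loads = place_flows(flows, num_paths=4, adaptive=False)
adaptive_loads = place_flows(flows, num_paths=4, adaptive=True)
print("static:  ", static_loads)    # [30, 1, 0, 0]
print("adaptive:", adaptive_loads)  # [10, 10, 10, 1]
```

AI training traffic tends to consist of a small number of large flows, which is the case where hash collisions hurt most and load-aware placement helps most.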

Key Features of NVIDIA Spectrum-X:

- RDMA over Converged Ethernet (RoCE) Extensions for enhanced performance

- Adaptive routing capabilities to optimize network traffic

- Congestion control mechanisms for efficient resource utilization

- High effective bandwidth for improved data transfer rates

- Performance isolation for multi-tenant environments

By leveraging the Spectrum-X networking platform, AI cloud providers can overcome the limitations of traditional Ethernet networks and ensure optimal performance for their AI applications. With its advanced features and performance optimizations, Spectrum-X empowers AI clouds to scale seamlessly, deliver efficient resource utilization, and meet the demands of generative AI workloads.

To illustrate the benefits of NVIDIA Spectrum-X, consider the following table which highlights the key differences between traditional Ethernet networks and the Spectrum-X solution:

| Traditional Ethernet Networks | NVIDIA Spectrum-X |
|---|---|
| Not explicitly designed for high performance | Enhances Ethernet networks for optimized AI performance |
| Limited bandwidth and scalability | Provides high effective bandwidth for improved data transfer rates |
| Performance degradation in multi-tenant environments | Ensures performance isolation in multi-tenant generative AI clouds |
| Lacks advanced features for adaptive routing and congestion control | Leverages RDMA over Converged Ethernet (RoCE) Extensions for enhanced performance |

Neural Network Architecture for AI

Neural network architecture is the foundation of AI systems and takes inspiration from the human brain. Artificial neural networks organize interconnected artificial neurons into layers: an input layer, one or more hidden layers, and an output layer. Deep neural networks extend this design with multiple hidden layers and can contain millions of interconnected artificial neurons.

These deep neural networks possess immense computational power, allowing them to map various input types to corresponding output types. Achieving this mapping capability requires extensive training using large datasets. The influence each neuron exerts on another is determined by the weight values assigned to their connections, allowing for complex information processing.

Understanding the intricacies of neural network architecture is vital for constructing robust AI systems. By comprehending the connections, layers, and computational power of artificial neurons, developers can optimize the performance and capabilities of their AI models.

The Importance of Artificial Neurons

Artificial neurons, the fundamental building blocks of neural networks, are essential for information processing within AI systems. These artificial counterparts to biological neurons receive input from other neurons, perform calculations using activation functions, and propagate the results to their connected neurons. Through this interconnected network of artificial neurons, complex computations and pattern recognition can be achieved.
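The computation described above can be sketched in a few lines. A neuron takes a weighted sum of its inputs, adds a bias, and applies an activation function. A layer is a group of neurons that all read the same inputs. The sigmoid activation, the weights, and the function names are illustrative choices, not prescribed by the text.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Weighted sum of inputs plus bias, passed through the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


def layer(inputs: Sequence[float], weight_rows: List[List[float]], biases: List[float]) -> List[float]:
    """One output per neuron; every neuron in the layer sees the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Hypothetical 2-input network with one hidden layer of two neurons.
hidden = layer([1.0, 0.0], [[0.5, -0.5], [-1.0, 1.0]], [0.0, 0.0])
output = neuron(hidden, [1.0, 1.0], 0.0)
print(hidden, output)
```

The outputs of the hidden layer become the inputs of the output neuron, which is how results propagate from one layer to the next.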

Each artificial neuron receives input from multiple sources, and the strength of these connections is represented by the weight values. These weights play a crucial role in shaping the network’s behavior, enabling it to learn from data and make accurate predictions. By adjusting the weights during the training process, neural networks can continuously improve their performance and adapt to various tasks.
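Weight adjustment during training can be illustrated with gradient descent on a single linear neuron. This is a minimal sketch under stated assumptions: the neuron has no activation function or bias, the loss is mean squared error, and the four training samples are made up so that the ideal weights are 2 and -1. Real networks apply the same idea across many layers via backpropagation.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], float]  # (inputs, target output)


def predict(weights: Sequence[float], inputs: Sequence[float]) -> float:
    """Output of a linear neuron: the weighted sum of its inputs."""
    return sum(w * x for w, x in zip(weights, inputs))


def train_step(weights: List[float], samples: List[Sample], lr: float) -> List[float]:
    """One gradient-descent update of the weights on mean squared error."""
    grads = [0.0] * len(weights)
    for inputs, target in samples:
        error = predict(weights, inputs) - target
        for i, x in enumerate(inputs):
            grads[i] += 2 * error * x / len(samples)
    # Move each weight a small step against its gradient.
    return [w - lr * g for w, g in zip(weights, grads)]


# Hypothetical data generated by target = 2 * x1 - 1 * x2.
samples = [((0.0, 0.0), 0.0), ((0.0, 1.0), -1.0), ((1.0, 0.0), 2.0), ((1.0, 1.0), 1.0)]
weights = [0.0, 0.0]
for _ in range(500):
    weights = train_step(weights, samples, lr=0.1)
print([round(w, 4) for w in weights])
```

Each update nudges the weights in the direction that reduces the prediction error, so the connection strengths gradually settle on values that reproduce the training data.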

Deep Neural Networks: Unlocking Complex AI Capabilities

Deep neural networks have revolutionized AI by enabling the creation of highly sophisticated models capable of handling intricate tasks. These networks, with their numerous hidden layers, extract progressively abstract features from the input data, allowing for advanced decision-making and pattern recognition.

By leveraging the power of deep neural networks, AI systems can tackle complex problems such as image and speech recognition, natural language processing, and even autonomous decision-making. The depth of these networks allows them to learn hierarchical representations of the data, enhancing their ability to extract meaningful insights and drive accurate predictions.

The Advancement of Neural Network Architectures

Neural network architectures continue to evolve as AI research progresses. The quest for improved performance, efficiency, and scalability has led to the development of innovative architectures such as convolutional neural networks (CNNs), recurrent neural networks (RNNs), and transformer networks.

CNNs are renowned for their exceptional image recognition capabilities, while RNNs excel in processing sequential data, making them ideal for tasks like natural language processing. Transformer networks, on the other hand, have revolutionized language processing by enabling the generation of context-aware representations.

Summary

Neural network architecture serves as the bedrock for AI systems, emulating the intricate connections and computations of the human brain. With interconnected layers of artificial neurons, deep neural networks can map various inputs to outputs, but doing so requires rigorous training with large datasets. By understanding the complexities of neural network architecture and leveraging advancements in the field, developers can unlock the full potential of AI and drive transformative breakthroughs.

Conclusion

AI applications demand high-performance networks that offer low latency and lossless connectivity. To meet these requirements, Cisco Nexus 9000 switches, equipped with the right hardware and software capabilities, provide the necessary infrastructure for AI and machine learning workloads. These switches are designed to deliver low-latency performance, advanced congestion management mechanisms, and telemetry for enhanced visibility.

For efficient transport within AI clusters, RoCEv2 (RDMA over Converged Ethernet version 2) is a valuable option because it brings RDMA to standard Ethernet networks. With RoCEv2, congestion is managed through Explicit Congestion Notification (ECN), which signals congestion to the endpoints so that senders can slow down, and Priority Flow Control (PFC), which pauses traffic in a given priority class before buffers overflow. Together they provide lossless transport for AI workloads.
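How ECN and PFC can work together on a switch queue is sketched below. This is a simplified model under stated assumptions, not Cisco's implementation or default configuration: ECN marking follows a WRED-style ramp between a minimum and a maximum queue threshold, and PFC sends a pause once the queue reaches a higher "xoff" threshold. All threshold values and function names are hypothetical.

```python
def ecn_mark_probability(queue_depth: int, min_th: int, max_th: int, max_prob: float = 1.0) -> float:
    """Probability of ECN-marking a packet, rising linearly between the thresholds."""
    if queue_depth <= min_th:
        return 0.0                      # queue is short: no congestion signal
    if queue_depth >= max_th:
        return 1.0                      # queue is long: mark every packet
    return max_prob * (queue_depth - min_th) / (max_th - min_th)


def pfc_should_pause(queue_depth: int, xoff_th: int) -> bool:
    """True when the queue is deep enough that the upstream sender must pause."""
    return queue_depth >= xoff_th


# Hypothetical thresholds in kilobytes; the ECN range sits below the PFC threshold.
MIN_TH, MAX_TH, XOFF_TH = 100, 200, 280

for depth in (50, 150, 250, 300):
    p = ecn_mark_probability(depth, MIN_TH, MAX_TH)
    pause = pfc_should_pause(depth, XOFF_TH)
    print(f"depth={depth:>3} KB  ecn_mark_prob={p:.2f}  pfc_pause={pause}")
```

Placing the ECN thresholds below the PFC threshold reflects a commonly described design intent: ECN asks senders to slow down first, and PFC acts as the backstop that prevents packet loss if the queue keeps growing.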

The Cisco Nexus 9000 switches, combined with automation tools like Cisco Nexus Dashboard Fabric Controller, provide a high-performance network fabric for AI and ML applications. With their low latency, robust congestion management, and advanced telemetry, these switches meet the network requirements of AI workloads, enabling organizations to achieve optimal performance and scalability in their AI endeavors.

FAQ

Why is networking important in AI data centers?

Networking is crucial in AI data centers because it enables distributed computing, which is essential for faster model training and processing large datasets. It also ensures optimal performance and resource utilization in AI workloads.

What is the role of NVIDIA Quantum-2 InfiniBand in AI factories?

NVIDIA Quantum-2 InfiniBand provides ultra-low latencies and scalability, making it ideal for accelerating high-performance computing and AI applications in AI factories. It enables in-network computing and utilizes the NVIDIA Scalable Hierarchical Aggregation and Reduction Protocol (SHARP) for enhanced performance.

How does NVIDIA Spectrum-X benefit AI clouds?

NVIDIA Spectrum-X is designed to optimize Ethernet networks for AI applications in multi-tenant environments. It leverages RDMA over Converged Ethernet (RoCE) Extensions and provides innovations such as adaptive routing and congestion control. Spectrum-X ensures high effective bandwidth and performance isolation for generative AI clouds.

What is neural network architecture in AI?

Neural network architecture is inspired by the human brain and consists of interconnected artificial neurons arranged in layers. Deep neural networks have multiple hidden layers with millions of artificial neurons linked together. Understanding neural network architecture is crucial for building AI systems.

Why are low latency, lossless networks important for AI applications?

Low latency, lossless networks are essential for optimal performance in AI applications. Cisco Nexus 9000 switches provide the infrastructure required for AI/ML workloads by offering low latency, congestion management mechanisms, and telemetry for visibility.

How do Cisco Nexus 9000 switches support AI/ML applications?

Cisco Nexus 9000 switches, coupled with the right hardware and software features, provide a high-performance network fabric for AI/ML applications. They leverage RDMA over Converged Ethernet (RoCE) and utilize ECN and PFC for congestion management, ensuring lossless transport. Automation tools like Cisco Nexus Dashboard Fabric Controller further enhance the performance of AI/ML workloads.