Managing the lifecycle of AI hardware is crucial for ensuring the longevity and performance of artificial intelligence systems. As the global private cloud services market continues to grow rapidly, with a forecasted value of USD 405.30 billion by 2033, organizations must optimize their AI hardware lifecycle to stay competitive.

Machine learning projects involve various steps, including planning, data preparation, model engineering, model evaluation, model deployment, and monitoring and maintenance. Following a structured approach, such as the Cross-Industry Standard Process for the development of Machine Learning applications with Quality assurance methodology (CRISP-ML(Q)), can help organizations build sustainable and cost-effective AI products.

Key Takeaways:

- Effective AI hardware lifecycle management is crucial for the longevity and performance of AI systems.

- A structured approach, like CRISP-ML(Q), can help organizations build sustainable AI products.

- Machine learning projects involve planning, data preparation, model engineering, evaluation, deployment, and maintenance.

- Organizations should optimize their AI hardware lifecycle to stay competitive in the evolving market.

- Proper planning and data preparation are essential for selecting the right hardware and training accurate AI models.

Planning for the AI Hardware Lifecycle

In the planning phase of AI hardware lifecycle management, it is essential to assess the scope, success metrics, and feasibility of the ML application. This involves understanding the business objectives, conducting a cost-benefit analysis, and defining clear and measurable success metrics for business, machine learning models, and economics.

When planning for AI hardware, various requirements and constraints must be considered. Factors such as data availability, applicability of the solution, legal constraints, robustness and scalability, explainability, and availability of resources play a crucial role in determining the success of the AI system.

Effective planning ensures that the right hardware is selected and the AI system meets the desired goals.
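To make the resource question concrete, the sketch below estimates how much accelerator memory a model needs for inference from its parameter count and numeric precision. It is a rough planning aid under stated assumptions: memory is taken to be weight storage times a flat overhead factor (the default of 1.2 is a hypothetical allowance for activations and runtime buffers, not a measured value), and training would need substantially more for gradients and optimizer state.

```python
import math

# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def inference_memory_gb(num_params: int, precision: str = "fp16",
                        overhead: float = 1.2) -> float:
    """Rough inference memory estimate in GB (1 GB = 1e9 bytes).

    `overhead` is an assumed multiplier for activations, caches and runtime
    buffers; real usage depends on batch size, sequence length and framework.
    """
    weight_bytes = num_params * BYTES_PER_PARAM[precision]
    return weight_bytes * overhead / 1e9


def devices_needed(num_params: int, device_memory_gb: float,
                   precision: str = "fp16", overhead: float = 1.2) -> int:
    """Smallest number of devices whose combined memory covers the estimate."""
    needed = inference_memory_gb(num_params, precision, overhead)
    return math.ceil(needed / device_memory_gb)


# A hypothetical 7-billion-parameter model served from 24 GB cards.
params = 7_000_000_000
print(round(inference_memory_gb(params, "fp16"), 1))  # 16.8
print(devices_needed(params, 24.0, "fp32"))           # 2
print(devices_needed(params, 24.0, "int8"))           # 1
```

The example also shows why numeric precision, usually decided during model engineering, feeds back into hardware selection: the same model fits on fewer devices at lower precision.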

To begin the planning process, a comprehensive feasibility report should be prepared. This report assesses the technical, economic, and operational aspects of implementing AI hardware. It helps identify potential risks, challenges, and opportunities, enabling informed decision-making.
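One small, concrete piece of the economic assessment is a break-even estimate for buying hardware versus renting comparable cloud capacity. The sketch below is deliberately simplified: it assumes constant monthly costs and ignores depreciation, financing, resale value and changes in utilization, and every figure in the example is a hypothetical placeholder.

```python
import math
from typing import Optional


def breakeven_months(upfront_cost: float, monthly_on_prem: float,
                     monthly_cloud: float) -> Optional[int]:
    """Months until owning hardware becomes cheaper than renting it.

    upfront_cost: purchase and installation cost of the hardware.
    monthly_on_prem: recurring cost of owning it (power, cooling, support).
    monthly_cloud: recurring cost of equivalent rented capacity.
    Returns None when owning never pays off under these assumptions.
    """
    monthly_saving = monthly_cloud - monthly_on_prem
    if monthly_saving <= 0:
        return None
    return math.ceil(upfront_cost / monthly_saving)


# Hypothetical figures: 250k upfront, 4k/month to run, 15k/month to rent.
print(breakeven_months(250_000, 4_000, 15_000))  # 23
```

If renting is cheaper month to month, the function returns None, signalling that ownership does not pay off under these assumptions.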

Defining success metrics is a key aspect of the planning phase. These metrics should align with the overall business objectives and provide a clear framework for evaluating the performance and effectiveness of the AI hardware.

Overall, thorough planning is crucial to ensure that AI hardware is successfully integrated into the organization, contributing to improved efficiency, productivity, and competitiveness.

Data Preparation for the AI Hardware Lifecycle

Data preparation plays a crucial role in the lifecycle of AI hardware, ensuring that the models are trained on high-quality data and can make accurate predictions. This phase involves several essential steps, including data collection and labeling, data cleaning, data processing, and data management.

Data Collection and Labeling

Accurate and well-labeled data is the foundation of successful AI models. During this phase, relevant data is collected from various sources, ensuring it aligns with the objectives of the AI project. The collected data is then labeled to provide clear annotations and categorizations that facilitate proper model training.

Data Cleaning

Before feeding the data into AI models, it undergoes a thorough cleaning process. This involves removing any inconsistencies, duplicates, or irrelevant data points that may adversely impact the model’s performance. Data cleaning aims to improve the quality and reliability of the dataset, ensuring that the models receive accurate and meaningful information.
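A minimal version of these cleaning steps in plain Python is sketched below. It assumes flat records (dictionaries with hashable values), treats trimming and lower-casing text as the fix for inconsistencies, and removes only exact duplicates; deciding which data points are irrelevant still requires domain knowledge and is not shown.

```python
from typing import Dict, Iterable, List, Tuple

Record = Dict[str, object]


def clean_records(records: Iterable[Record],
                  required: Tuple[str, ...]) -> List[Record]:
    """Normalize text values, drop incomplete rows, and remove duplicates.

    Assumes flat records whose values are hashable (str, int, float, None).
    """
    seen = set()
    cleaned: List[Record] = []
    for record in records:
        # Normalize text so " Cat" and "cat" count as the same value.
        normalized = {key: value.strip().lower() if isinstance(value, str) else value
                      for key, value in record.items()}
        # Skip rows where any required field is missing or empty.
        if any(normalized.get(field) in (None, "") for field in required):
            continue
        # A sorted tuple of items is a hashable key for exact-duplicate detection.
        key = tuple(sorted(normalized.items()))
        if key not in seen:
            seen.add(key)
            cleaned.append(normalized)
    return cleaned


raw = [
    {"id": 1, "label": "Cat"},
    {"id": 1, "label": " cat"},   # duplicate after normalization
    {"id": 2, "label": None},     # incomplete
    {"id": 3, "label": "dog"},
]
print(clean_records(raw, ("id", "label")))
# [{'id': 1, 'label': 'cat'}, {'id': 3, 'label': 'dog'}]
```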

Data Processing

Data processing involves transforming and structuring the collected data into a suitable format for AI model training. This may include feature selection, dealing with imbalanced classes, engineering new features, and normalizing and scaling the data. The goal is to enhance the data’s usefulness, making it more suitable for the specific AI algorithms or models being utilized.
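Two of the processing steps mentioned above, scaling and handling imbalanced classes, can be illustrated with short functions. This is a standard-library sketch rather than any particular framework's API; the class-weight formula is the common "balanced" heuristic, the same formula scikit-learn documents for `class_weight="balanced"`.

```python
from collections import Counter
from typing import Dict, Hashable, List, Sequence


def min_max_scale(values: Sequence[float]) -> List[float]:
    """Linearly rescale a feature into [0, 1]; a constant feature maps to 0."""
    low, high = min(values), max(values)
    span = high - low
    if span == 0:
        return [0.0] * len(values)
    return [(v - low) / span for v in values]


def balanced_class_weights(labels: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Weight each class by n_samples / (n_classes * count_of_that_class).

    Rare classes receive larger weights, so a weighted loss does not simply
    learn to predict the majority class.
    """
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


print(min_max_scale([10, 20, 30]))                          # [0.0, 0.5, 1.0]
print(balanced_class_weights(["ok"] * 8 + ["fault"] * 2))  # {'ok': 0.625, 'fault': 2.5}
```

In practice the minimum and maximum should be computed on the training data only and reused unchanged for validation, test and production inputs; otherwise information leaks from evaluation data into training.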

Data Management

Effective data management is essential for maintaining data integrity, accessibility, and version control. Organizations need to establish data pipelines for automation, ensure data quality verification, and implement robust data storage solutions. By establishing proper data management practices, organizations can ensure data reproducibility, easy collaboration, and accurate analysis.
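For version control and quality verification, a content hash gives each dataset snapshot a stable identifier, and a few automated counts can serve as a publish-time check. The functions below are a hypothetical, standard-library illustration of the idea, not the interface of any particular data-versioning tool.

```python
import hashlib
import json
from typing import Dict, List


def dataset_fingerprint(records: List[Dict]) -> str:
    """SHA-256 over the serialized records, usable as a dataset version tag.

    Keys are sorted so dict ordering does not matter, but record order does:
    re-ordering rows produces a new fingerprint.
    """
    digest = hashlib.sha256()
    for record in records:
        digest.update(json.dumps(record, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


def quality_report(records: List[Dict], required: List[str]) -> Dict[str, int]:
    """Count basic quality problems before a dataset version is published."""
    missing = sum(1 for r in records for field in required if r.get(field) is None)
    distinct = {json.dumps(r, sort_keys=True) for r in records}
    return {"rows": len(records), "missing_values": missing,
            "duplicate_rows": len(records) - len(distinct)}


data = [{"x": 1.0, "y": 0}, {"x": 1.0, "y": 0}, {"x": None, "y": 1}]
print(dataset_fingerprint(data)[:12])
print(quality_report(data, ["x", "y"]))  # {'rows': 3, 'missing_values': 1, 'duplicate_rows': 1}
```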

“Data preparation is vital for the ultimate success of AI hardware, as it ensures that the underlying models have access to high-quality data to generate accurate predictions and insights.”

By putting effort into AI hardware data preparation, organizations can significantly improve the performance and reliability of their AI models. The following table provides a summary of the key steps involved in data preparation for the AI hardware lifecycle:

| Data Preparation Steps | Description |
|---|---|
| Data Collection and Labeling | Collecting relevant data from various sources and labeling it for model training. |
| Data Cleaning | Removing inconsistencies, duplicates, and irrelevant data points from the dataset to improve its quality. |
| Data Processing | Transforming and structuring the data, selecting features, dealing with imbalanced classes, and normalizing the data. |
| Data Management | Setting up data pipelines, ensuring data quality verification, and establishing robust data storage solutions. |

Effective data preparation sets the stage for successful AI hardware implementation, ensuring that organizations can leverage high-quality data to develop accurate and reliable AI models for their specific needs.

Model Engineering for the AI Hardware Lifecycle

During the model engineering phase of the AI hardware lifecycle, the focus is on building and training AI models that will effectively perform the desired tasks. This phase involves several key steps and considerations that help ensure the quality and performance of the models.

Designing Effective Model Architectures

Designing the right model architecture is crucial for achieving optimal performance. Extensive research and analysis are conducted to determine the most suitable architecture for the specific AI application. Factors such as the type and complexity of the problem being solved, the availability of data, and the computational resources available are taken into account.

By understanding the intricacies of the problem at hand and leveraging domain knowledge, engineers can design architectures that effectively capture the relevant features and relationships in the data, leading to accurate predictions and insights.

Defining Model Metrics

Model metrics play a vital role in evaluating the performance of AI models. During the engineering phase, it is essential to define appropriate metrics that align with the objectives of the AI application. These metrics could include accuracy, precision, recall, F1 score, and others, depending on the nature of the problem.

Defining these metrics allows engineers to assess the model’s performance during training and validation, ensuring that it meets the desired level of accuracy and reliability.
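For a binary classification task, the metrics named above follow directly from counts of true positives, false positives and false negatives. The sketch assumes labels encoded as 1 (positive) and 0 (negative), and returns 0.0 when a ratio's denominator is zero, which is one common convention.

```python
from typing import Dict, Sequence


def classification_metrics(y_true: Sequence[int],
                           y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
print(classification_metrics(y_true, y_pred))
# {'accuracy': 0.75, 'precision': 0.75, 'recall': 0.75, 'f1': 0.75}
```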

Training and Validation

The training and validation process is critical for fine-tuning the AI models and ensuring they can make accurate predictions. During this phase, relevant datasets are used to train the models, iteratively adjusting the model’s weights and biases to minimize errors and improve performance.

Validation is performed using separate datasets to assess the model’s generalization capabilities and to identify any potential overfitting or underfitting issues. Proper training and validation help optimize the model’s performance and improve its ability to handle real-world data.
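The training and validation loop can be illustrated at toy scale: the sketch below fits a straight line by gradient descent on a training split and reports error on a held-out validation split. The synthetic data, learning rate and epoch count are arbitrary choices for the example, not recommendations.

```python
import random
from typing import List, Tuple

Point = Tuple[float, float]


def train_val_split(data: List[Point], val_fraction: float = 0.2,
                    seed: int = 0) -> Tuple[List[Point], List[Point]]:
    """Shuffle with a fixed seed, then hold out the last slice for validation."""
    shuffled = data[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - val_fraction))
    return shuffled[:cut], shuffled[cut:]


def mse(w: float, b: float, data: List[Point]) -> float:
    """Mean squared error of the line y = w * x + b on `data`."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(data: List[Point], lr: float = 0.1, epochs: int = 2000) -> Tuple[float, float]:
    """Fit y = w * x + b with full-batch gradient descent on squared error."""
    w = b = 0.0
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Synthetic data: y = 3x + 1 plus Gaussian noise.
rng = random.Random(42)
xs = [rng.random() for _ in range(100)]
data = [(x, 3 * x + 1 + rng.gauss(0, 0.1)) for x in xs]

train_set, val_set = train_val_split(data)
w, b = train(train_set)
print(f"w={w:.2f} b={b:.2f} "
      f"train_mse={mse(w, b, train_set):.4f} val_mse={mse(w, b, val_set):.4f}")
```

A validation error much higher than the training error points to overfitting, while high error on both splits points to underfitting.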

Model Compression and Ensembling

As AI models are deployed on various hardware platforms, their size, computational requirements, and memory constraints must be taken into account. Model compression techniques such as pruning, quantization, and knowledge distillation reduce a model’s size and computational cost, ideally with little loss in accuracy; the actual loss should be measured on validation data.
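Two of the compression techniques named above, magnitude pruning and post-training int8 quantization, can be sketched on a plain list of weights. This is a simplified, framework-free illustration that assumes a single weight tensor and one scale factor for the whole tensor; production toolchains typically quantize per channel, calibrate activations, and fine-tune after pruning. Knowledge distillation needs a training loop and is not shown.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    smallest_first = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(smallest_first[:k])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: each w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [scale * v for v in q]


weights = [0.8, -0.05, 0.3, -1.27, 0.01, 0.6]
print(magnitude_prune(weights, 0.5))  # [0.8, 0.0, 0.0, -1.27, 0.0, 0.6]
q, scale = quantize_int8(weights)
print(q, scale)
```

Each quantized weight takes one byte instead of four for fp32, and the rounding error per weight is at most half the scale factor.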

Ensembling techniques, which involve combining multiple models or predictions, can also improve the robustness and accuracy of the AI system. Through model compression and ensembling, engineers can create efficient and powerful models that can be effectively deployed on different AI hardware platforms.
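Majority voting is one of the simplest ensembling schemes: each model makes a prediction and the most common answer wins. In the sketch below the three threshold "models" are hypothetical stand-ins for trained classifiers.

```python
from collections import Counter
from typing import Callable, Hashable, List, Sequence

Model = Callable[[float], Hashable]


def majority_vote(models: Sequence[Model], x: float) -> Hashable:
    """Return the most common prediction; ties go to the earliest model's vote."""
    if not models:
        raise ValueError("need at least one model")
    votes: List[Hashable] = [model(x) for model in models]
    counts = Counter(votes)
    top = max(counts.values())
    # Walk votes in model order so tie-breaking is deterministic.
    return next(vote for vote in votes if counts[vote] == top)


# Hypothetical stand-ins for three trained binary classifiers.
models = [lambda x: int(x > 0.4), lambda x: int(x > 0.5), lambda x: int(x > 0.6)]
print(majority_vote(models, 0.55))  # 1 (votes: 1, 1, 0)
print(majority_vote(models, 0.45))  # 0 (votes: 1, 0, 0)
```

When models output calibrated probabilities, averaging those probabilities (soft voting) is a common alternative to counting hard votes.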

By incorporating these model engineering techniques into the AI hardware lifecycle, organizations can build AI systems that deliver accurate results and meet the specific needs of their applications.

Model Evaluation in the AI Hardware Lifecycle

Model evaluation plays a critical role in the AI hardware lifecycle, confirming that models are ready for production. This phase involves thorough testing on a held-out test dataset that was not used during training or validation, which helps identify errors and assess model robustness.

Subject matter experts are involved in the evaluation process, providing valuable insights and expertise. Testing the models on random and real-world data helps gauge their performance in different scenarios and validate their efficacy. By comparing the results against the planned success metrics, organizations can determine if the models meet the desired goals.

Model evaluation goes beyond performance metrics. It emphasizes adherence to industrial, ethical, and legal frameworks governing the development and deployment of AI solutions. Compliance with these frameworks ensures that AI solutions are aligned with industry standards and ethical considerations.

“Effective model evaluation is key to achieving quality, reproducibility, and compliance with the required standards.”

Comprehensive evaluation and proper documentation of the evaluation process are crucial. Recording the steps taken, the datasets used, and the decisions made during evaluation ensures accountability and supports future audits or reviews.

Adherence to Industrial and Legal Frameworks

Industrial and legal frameworks provide guidelines and regulations that govern the development and deployment of AI solutions. Following these frameworks is vital to address concerns around fairness, bias, privacy, accountability, and transparency in AI systems.

Industrial frameworks aim to promote responsible and ethical use of AI by addressing issues such as bias, discrimination, and the potential impact of AI applications on society. Compliance with these frameworks fosters trust and reliability in AI systems.

Legal frameworks, on the other hand, safeguard individual rights and protect against potential harm caused by AI deployments. Ensuring compliance with these regulations helps organizations avoid legal ramifications and potential damage to their reputation.

By considering industrial and legal frameworks, organizations can ensure that their AI models are developed and deployed responsibly, with due consideration of societal impact and legal requirements.

Data Governance and Privacy in Model Evaluation

Data governance and privacy are critical considerations in model evaluation. Organizations must have robust data management practices in place to protect sensitive information and comply with privacy regulations.

- Ensure data privacy: Organizations must anonymize and protect personal information while evaluating AI models. Applying data anonymization techniques, encrypting sensitive data, and implementing access controls are essential for safeguarding privacy.

- Establish data governance policies: Clear policies and procedures regarding data collection, storage, and usage should be established. Data governance frameworks help organizations manage data effectively and maintain its integrity throughout the evaluation process.
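One common technique for the privacy step is pseudonymization with a keyed hash, which replaces identifiers with stable tokens so evaluation results can still be grouped per user. The sketch assumes an HMAC-SHA256 key stored outside the dataset. Pseudonymization is weaker than true anonymization, and pseudonymized data may still be treated as personal data under regulations such as the GDPR.

```python
import hashlib
import hmac
from typing import Dict, Iterable


def pseudonymize(record: Dict[str, object], pii_fields: Iterable[str],
                 key: bytes) -> Dict[str, object]:
    """Return a copy of `record` with PII fields replaced by keyed tokens.

    The same value and key always give the same token, so records can still be
    joined, but tokens cannot be recomputed from raw values without the key.
    """
    out = dict(record)
    for field in pii_fields:
        if out.get(field) is not None:
            mac = hmac.new(key, str(out[field]).encode("utf-8"), hashlib.sha256)
            # Truncating to 16 hex characters (64 bits) is an assumed trade-off
            # between readability and collision risk.
            out[field] = mac.hexdigest()[:16]
    return out


# Hypothetical key; in practice load it from a secrets manager, never from the data.
KEY = b"example-key-do-not-use"
row = {"user_id": "alice@example.com", "prediction": 1, "label": 1}
print(pseudonymize(row, ["user_id"], KEY))
```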

Data governance and privacy considerations ensure that model evaluation processes are conducted ethically and in accordance with legal and regulatory requirements, protecting the rights and privacy of individuals.

| Metric | Planned Goal | Model A | Model B |
|---|---|---|---|
| Accuracy | 95% | 94.8% | 95.2% |
| Precision | 0.85 | 0.82 | 0.86 |
| Recall | 0.90 | 0.92 | 0.89 |

The table above illustrates example model evaluation metrics and results. The planned goals for accuracy, precision, and recall were 95%, 0.85, and 0.90, respectively. Model A fell short of the planned accuracy and precision but exceeded the recall goal. Model B, by contrast, exceeded the planned accuracy and precision but fell slightly short on recall.

These metrics provide valuable insights into the performance of the evaluated models, allowing organizations to make informed decisions about model deployment and further optimization.
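Comparing results against the planned goals is easy to automate. The sketch below encodes the example table (accuracy written as a fraction) and lists, for each model, the metrics that fall short. It assumes every goal is a "higher is better" minimum, which holds for these three metrics but not for metrics such as latency.

```python
from typing import Dict, List

Metrics = Dict[str, float]

# Values from the example evaluation table.
PLANNED: Metrics = {"accuracy": 0.95, "precision": 0.85, "recall": 0.90}
RESULTS: Dict[str, Metrics] = {
    "Model A": {"accuracy": 0.948, "precision": 0.82, "recall": 0.92},
    "Model B": {"accuracy": 0.952, "precision": 0.86, "recall": 0.89},
}


def missed_goals(results: Metrics, planned: Metrics) -> List[str]:
    """Names of metrics where the model falls below its planned goal."""
    return [name for name, goal in planned.items() if results[name] < goal]


for model, metrics in RESULTS.items():
    missed = missed_goals(metrics, PLANNED)
    print(model, "meets all goals" if not missed else f"misses: {', '.join(missed)}")
# Model A misses: accuracy, precision
# Model B misses: recall
```

Here neither model meets every goal, so the choice between them depends on whether precision or recall matters more for the application.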

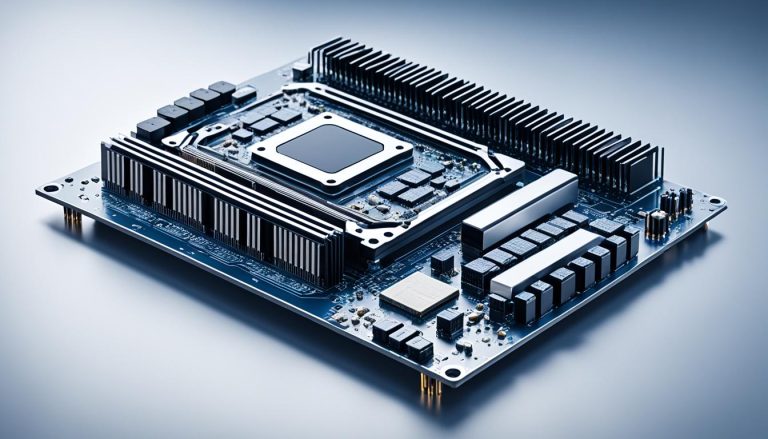

Model Deployment in the AI Hardware Lifecycle

Once the AI models have been trained and evaluated, the next step in the AI hardware lifecycle is model deployment: moving the trained models into the production environment, where they make real predictions and decisions.

During the model deployment phase, the deployment strategy needs careful consideration. It starts with choosing where the models will run (in the cloud, on local servers, in web browsers, or at the network edge) and selecting suitable hardware for that target. Each option has its own trade-offs in scalability, latency, cost, and infrastructure requirements.

Properly defining the inference hardware requirements is essential for successful model deployment. By understanding the computational resources and hardware specifications needed to support the deployed models, organizations can ensure that the AI system operates optimally and efficiently.
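A first-pass sizing of inference capacity can use Little's law, which states that the average number of requests in flight equals the arrival rate multiplied by the time each request spends in the system. The sketch assumes each replica serves a fixed number of concurrent requests and reserves a hypothetical 30% headroom; real sizing should be confirmed with load tests on the target hardware.

```python
import math


def replicas_needed(peak_rps: float, latency_s: float,
                    concurrency_per_replica: int, headroom: float = 0.3) -> int:
    """Estimate inference replicas from Little's law (in flight = rate * time).

    peak_rps: expected peak requests per second.
    latency_s: average seconds a request occupies a replica.
    concurrency_per_replica: requests one replica serves at once (assumed fixed).
    headroom: fraction of capacity kept free for spikes and failover (assumed).
    """
    in_flight = peak_rps * latency_s
    usable_per_replica = concurrency_per_replica * (1 - headroom)
    return math.ceil(in_flight / usable_per_replica)


# Hypothetical workload: 400 req/s at 50 ms, 8 concurrent requests per replica.
print(replicas_needed(400, 0.05, 8))  # 4
```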

To determine whether a newly deployed model actually improves on what it replaces, organizations can run A/B tests: a share of live traffic is routed to the new model while the rest continues to use the existing system or an alternative approach, and the two groups are compared on the same metrics. This shows how accurate and efficient the trained model is in real-world conditions.
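An A/B test needs two pieces: a stable way to assign users to variants and a statistical comparison of the outcomes. The sketch below hashes a user ID into a bucket so each user always sees the same model, and applies a two-proportion z-test to a binary success metric (for example, whether a prediction was accepted). The 10% treatment share and the counts in the example are hypothetical, and the z-test assumes reasonably large samples.

```python
import hashlib
import math
from typing import Tuple


def assign_variant(user_id: str, treatment_share: float = 0.1) -> str:
    """Deterministically route a user to 'treatment' (new model) or 'control'."""
    bucket = int(hashlib.sha256(user_id.encode("utf-8")).hexdigest(), 16) % 10_000
    return "treatment" if bucket < treatment_share * 10_000 else "control"


def two_proportion_z(success_a: int, n_a: int,
                     success_b: int, n_b: int) -> Tuple[float, float]:
    """Two-sided z-test for a difference in success rates (B relative to A)."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return z, p_value


print(assign_variant("user-42"))
# Hypothetical outcome: control 480/10,000 successes, new model 560/10,000.
z, p = two_proportion_z(480, 10_000, 560, 10_000)
print(f"z={z:.2f} p={p:.4f}")
```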

Seamless Deployment Strategy

A seamless deployment strategy is crucial for ensuring the smooth transition of AI models into the production environment. It involves developing a comprehensive plan that covers the entire deployment process, including data migration, model integration, and code deployment.

Having disaster management plans in place is also essential for minimizing any losses due to failures or disruptions during the deployment process. These plans ensure that organizations can quickly identify and address any issues that may arise, minimizing downtime and potential negative impacts on the AI system.

Additionally, continual monitoring of the deployed models is crucial for maintaining their effectiveness. Monitoring helps identify any performance issues, drifts in accuracy, or deviations from expected outcomes. By actively monitoring the models, organizations can take corrective actions promptly, such as retraining the models on new data or making adjustments to the architecture.

Minimizing Losses and Ensuring Effectiveness

Minimizing losses in the model deployment phase is essential for optimizing the performance of the AI system. Organizations should establish robust monitoring processes and implement proactive measures to address any potential failures or disruptions. This includes setting up alert systems, implementing automated monitoring tools, and utilizing anomaly detection techniques.
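A simple form of the anomaly detection described above is a rolling z-score: each new metric value, such as request latency, is compared with the mean and standard deviation of its recent history. The window size, the 3-standard-deviation threshold and the class name are assumptions for illustration; production systems usually express this as alerting rules in their monitoring stack instead.

```python
import statistics
from collections import deque
from typing import Deque, Optional


class MetricAlert:
    """Flag a metric value that deviates strongly from its recent history."""

    def __init__(self, window: int = 50, threshold: float = 3.0,
                 min_history: int = 10) -> None:
        self.history: Deque[float] = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def observe(self, value: float) -> Optional[str]:
        """Record a value; return an alert message if it is anomalous."""
        alert = None
        if len(self.history) >= self.min_history:
            mean = statistics.fmean(self.history)
            stdev = statistics.pstdev(self.history)
            if stdev > 0:
                z = abs(value - mean) / stdev
                if z > self.threshold:
                    alert = f"anomaly: {value:.1f} vs rolling mean {mean:.1f} (z={z:.1f})"
        self.history.append(value)
        return alert


monitor = MetricAlert()
for latency_ms in [100.0, 102.0] * 10 + [180.0]:
    message = monitor.observe(latency_ms)
    if message:
        print(message)
```

Anomalous values are still added to the history here, which keeps the code short but means a sustained shift eventually becomes the new normal; whether that is desirable depends on the metric.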

By closely monitoring the deployed models and promptly addressing any issues, organizations can ensure the ongoing effectiveness of the AI system. This continual optimization helps minimize losses due to inaccurate predictions, system failures, or suboptimal performance.

| Deployment Strategy Considerations | Benefits |
|---|---|
| Cloud-based deployment | Scalability, flexibility, and cost-effectiveness |
| Local deployment | Low latency, control over infrastructure, and data privacy |
| Web browser deployment | Easy access, platform independence, and user-friendly interface |
| Edge deployment | Reduced latency, real-time decision-making, and bandwidth optimization |

Table: Deployment Strategy Considerations and Benefits.

Monitoring and Maintenance in the AI Hardware Lifecycle

After the AI models are deployed, ongoing monitoring and maintenance are essential for sustaining performance. This phase covers continuous tracking of model metrics, hardware and software performance, and customer satisfaction. With these practices in place, organizations can identify and address potential issues before they escalate.

Alert systems and automated processes are configured to detect anomalies, negative feedback, and drops in performance that can affect the model and the wider system. These systems notify the relevant stakeholders and trigger remedial actions promptly. By keeping a close eye on the health and performance of AI hardware, organizations can ensure that their systems are running smoothly.

“Constant monitoring is key to ensuring that AI hardware maintains peak performance. By closely tracking model metrics, organizations can proactively identify any deviations and take necessary actions to rectify the issues.”

During the monitoring and maintenance phase, organizations may need to take various actions to ensure the long-term success of their AI hardware. This could include retraining the models on new data to improve accuracy or making changes to the architecture to optimize performance. Regular updates to both software and hardware, along with the implementation of a continuous integration framework, are crucial for keeping the AI hardware up-to-date.

By prioritizing constant monitoring and maintenance, organizations can ensure that their AI hardware continues to operate at peak performance levels. This proactive approach minimizes downtime, maximizes efficiency, and enhances the overall value and longevity of the AI system.

Benefits of AI Hardware Monitoring and Maintenance

A well-implemented AI hardware monitoring and maintenance strategy offers several benefits:

- Optimized System Performance: Constant monitoring allows organizations to quickly identify and resolve performance issues, ensuring that the AI hardware operates at its full potential.

- Enhanced Reliability and Availability: By keeping a vigilant eye on the system, organizations minimize the risk of downtime and maximize the availability of AI services.

- Improved Customer Satisfaction: Monitoring customer feedback and satisfaction levels enables organizations to address concerns promptly, resulting in better customer experiences.

- Continuous Improvement: Regular updates and maintenance keep the AI hardware in line with the latest industry developments and emerging technology trends, allowing organizations to continually enhance their AI capabilities.

Constant Monitoring for Model and System Performance

An effective monitoring strategy involves tracking several vital metrics to ensure optimal model and system performance. These metrics may include:

- Accuracy: Evaluating how often the model’s predictions are correct and whether it meets the desired accuracy levels.

- Computational Resources: Monitoring the utilization of hardware resources, such as CPU, GPU, and memory, to identify any bottlenecks or inefficiencies.

- Inference Latency: Measuring the time taken by the AI hardware to process and generate predictions, ensuring that it aligns with the desired latency thresholds.

- Error Rates: Keeping a close eye on error rates, false positives, false negatives, and overall model performance to identify any deviations from expected standards.

- Data Drift: Monitoring changes in input data distributions, ensuring that the AI models remain robust and accurate in the face of evolving data patterns.
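Data drift is often quantified with the Population Stability Index (PSI), which compares how a feature's values are distributed across bins at training time and in production. The sketch below uses equal-width bins taken from the reference sample; the thresholds in the comment are a widely quoted rule of thumb, not a standard.

```python
import math
import random
from typing import List, Sequence


def psi(reference: Sequence[float], live: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index of `live` relative to `reference`.

    Equal-width bin edges come from the reference (training-time) sample; live
    values outside that range are clamped into the first or last bin. A small
    floor on bin shares avoids log(0) for empty bins.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), 1e-6) for c in counts]

    ref_shares, cur_shares = shares(reference), shares(live)
    return sum((cur - ref) * math.log(cur / ref)
               for ref, cur in zip(ref_shares, cur_shares))


# Rule of thumb (convention, not a standard):
# < 0.1 stable, 0.1 to 0.25 moderate shift, > 0.25 significant shift.
rng = random.Random(7)
training = [rng.gauss(0, 1) for _ in range(5000)]
same = [rng.gauss(0, 1) for _ in range(5000)]
shifted = [rng.gauss(1, 1) for _ in range(5000)]
print(f"same: {psi(training, same):.3f}  shifted: {psi(training, shifted):.3f}")
```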

Implementing robust monitoring processes, leveraging advanced analytics and visualization tools, allows organizations to proactively detect and address performance degradation issues that may arise throughout the AI hardware lifecycle.

Regular Updates for Optimal Performance

Regular software and hardware updates are critical to maintaining the optimal performance and security of AI hardware. Software updates typically involve patching vulnerabilities, adding new features, and improving overall system stability.

Hardware updates, on the other hand, may include upgrading CPU or GPU units, increasing memory capacity, or improving cooling systems to handle higher computational requirements.

By regularly updating the software and hardware components, organizations can ensure that their AI hardware remains at the forefront of technological advancements, maximizing its capabilities and staying ahead in a rapidly evolving market.

Additionally, organizations should consider implementing a continuous integration framework, enabling seamless integration of updates and providing a structured and controlled environment for testing and deploying changes.
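As an illustration of what a continuous integration pipeline might check before promoting a retrained model, the sketch below compares candidate metrics against the current production baseline and blocks promotion on regressions. The metric names, the 1% relative tolerance and the example values are hypothetical.

```python
from typing import Dict, Iterable, List

Metrics = Dict[str, float]


def regression_failures(candidate: Metrics, baseline: Metrics,
                        rel_tol: float = 0.01,
                        lower_is_better: Iterable[str] = ("p95_latency_ms",)) -> List[str]:
    """List metrics on which the candidate is worse than the baseline.

    A metric fails when it moves in the wrong direction by more than `rel_tol`
    (relative), so accuracy (0-1) and latency (ms) can share one tolerance.
    """
    lower = set(lower_is_better)
    failures = []
    for name, base in baseline.items():
        cand = candidate[name]
        if name in lower:
            worse = cand > base * (1 + rel_tol)
        else:
            worse = cand < base * (1 - rel_tol)
        if worse:
            failures.append(f"{name}: {base} -> {cand}")
    return failures


def gate(candidate: Metrics, baseline: Metrics) -> int:
    """Return a process exit code: 0 lets the pipeline promote, 1 blocks it."""
    failures = regression_failures(candidate, baseline)
    for failure in failures:
        print("regression:", failure)
    return 1 if failures else 0


# Hypothetical metrics; a real pipeline would read them from evaluation artifacts.
baseline = {"accuracy": 0.948, "p95_latency_ms": 42.0}
candidate = {"accuracy": 0.951, "p95_latency_ms": 47.5}
print(gate(candidate, baseline))  # reports the latency regression, then prints 1
```

A CI job could pass the value returned by `gate` to `sys.exit`, so that a regression fails the build.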

Monitoring and Maintenance Table

| Monitoring and Maintenance Actions | Description |
|---|---|
| Constant Monitoring | Implement proactive monitoring tools and processes to track model metrics, system performance, and customer satisfaction. |
| Alert Systems | Set up alert systems to notify relevant stakeholders of anomalies, reduced performance, and negative feedback. |
| Actionable Insights | Analyze monitoring data to gain actionable insights and take necessary actions, such as retraining models or making architectural changes. |
| Regular Updates | Perform regular software and hardware updates to ensure performance optimization and security enhancements. |
| Continuous Integration Framework | Implement a continuous integration framework to seamlessly integrate updates and changes into the AI hardware ecosystem. |

Conclusion

Managing the lifecycle of AI hardware is crucial for organizations to optimize the performance and longevity of their artificial intelligence systems. By implementing effective strategies for planning, data preparation, model engineering, model evaluation, model deployment, and monitoring and maintenance, organizations can ensure the successful implementation and management of AI hardware.

A successful AI hardware lifecycle management approach brings numerous benefits to organizations. It enables cost savings by maximizing the utilization and efficiency of hardware resources. Additionally, it enhances security by ensuring proper implementation of security protocols and mitigating potential vulnerabilities. Improved compliance with industry regulations and standards is also achieved through effective lifecycle management of AI hardware.

Moreover, organizations experience reduced downtime by proactively monitoring and maintaining their AI hardware. Continuous monitoring allows for early detection of potential issues, enabling organizations to take preventive actions and minimize disruptions. By optimizing the usage of devices through the implementation of device lifecycle management strategies, such as mobile device lifecycle management and IoT device lifecycle management, organizations can achieve their business objectives more efficiently.

FAQ

Why is managing the lifecycle of AI hardware crucial?

Managing the lifecycle of AI hardware is crucial for ensuring the longevity and performance of artificial intelligence systems.

How can organizations optimize the performance of their AI hardware?

Organizations can optimize the performance of their AI hardware by implementing effective strategies for planning, data preparation, model engineering, model evaluation, model deployment, and monitoring and maintenance.

What are the benefits of AI hardware lifecycle management?

The benefits of AI hardware lifecycle management include cost savings, enhanced security, improved compliance, reduced downtime, and better employee experience.

What is involved in the planning phase of AI hardware lifecycle management?

The planning phase of AI hardware lifecycle management involves assessing the scope, success metrics, and feasibility of the ML application, understanding business objectives, conducting a cost-benefit analysis, and defining clear success metrics.

What is data preparation in the AI hardware lifecycle?

Data preparation in the AI hardware lifecycle involves collecting and labeling data, cleaning and analyzing the data, selecting relevant features, and normalizing and scaling the data.

What is model engineering in the AI hardware lifecycle?

Model engineering in the AI hardware lifecycle involves building and training AI models, designing effective model architectures, defining appropriate model metrics, and applying model compression and ensembling techniques.

Why is model evaluation important in the AI hardware lifecycle?

Model evaluation is important in the AI hardware lifecycle to ensure the readiness of the models for production, test the models on separate datasets, assess robustness, and ensure compliance with industrial and legal frameworks.

How is model deployment done in the AI hardware lifecycle?

In the AI hardware lifecycle, model deployment involves moving trained AI models into the production environment, defining inference hardware requirements, and evaluating model performance with A/B testing.

What is involved in monitoring and maintenance in the AI hardware lifecycle?

Monitoring and maintenance in the AI hardware lifecycle involve constant monitoring of model metrics, hardware and software performance, customer satisfaction, and taking necessary actions to improve performance and address issues.