The field of artificial intelligence (AI) has been rapidly evolving, driven by advancements in computing power and algorithms. At the forefront of this revolution are Graphics Processing Units (GPUs), which play a crucial role in neural network training and inference, the two key processes in machine learning.

GPUs are highly efficient at parallel processing, allowing for faster and more energy-efficient computations compared to Central Processing Units (CPUs). This capability has made GPUs the go-to choice for AI and machine learning tasks, as they can handle the massive amounts of data involved in training and inference with incredible speed.

Since 2003, the performance of NVIDIA GPUs in particular has seen a reported 7,000-fold improvement while remaining cost-effective. Epoch, an independent research group, has highlighted the significant contributions of GPUs to the recent progress in AI and machine learning.

With their parallel processing capabilities and advanced architecture, GPUs have become indispensable for training deep neural networks. They can run the many computations needed to process large datasets simultaneously, which shortens training times and makes it practical to train larger, more accurate models.

Moreover, GPUs continue to advance and enhance AI performance. Over the past decade, NVIDIA GPUs alone have increased AI inference performance by a staggering 1,000 times. This exponential growth has opened the doors to more complex and sophisticated AI applications across industries.
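To put these multiples in perspective, the short sketch below converts a cumulative improvement into the average yearly factor it implies. The helper name `annual_growth_factor` is hypothetical, and the 20-year span used for the 7,000-fold figure is an assumption made for illustration, since the text only says "since 2003".

```python
def annual_growth_factor(total_multiple: float, years: float) -> float:
    """Return the constant yearly factor that compounds to total_multiple over years."""
    if total_multiple <= 0 or years <= 0:
        raise ValueError("total_multiple and years must be positive")
    return total_multiple ** (1.0 / years)


# 1,000x over a decade (figure quoted in the text).
inference_factor = annual_growth_factor(1_000, 10)

# 7,000x since 2003; the 20-year span is an assumption for illustration.
performance_factor = annual_growth_factor(7_000, 20)

print(f"Inference: ~{inference_factor:.2f}x per year")
print(f"Performance: ~{performance_factor:.2f}x per year")
```

Under these assumptions, a 1,000-fold gain over ten years works out to roughly a doubling every year.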

Key Takeaways:

- GPUs excel in neural network training and inference due to their parallel processing capabilities.

- GPUs have witnessed a significant increase in performance and cost-effectiveness over the years.

- Deep neural network training heavily relies on GPUs for efficient and rapid computations.

- NVIDIA GPUs have achieved remarkable advancements in AI inference performance.

- GPUs continue to drive the progress of AI and machine learning.

The Role of GPUs in AI and Machine Learning

GPUs play a crucial role in accelerating the advancement of artificial intelligence (AI) and machine learning. With their parallel processing capabilities, GPUs enable rapid processing and analysis of complex data, making them an ideal choice for AI algorithms that involve extensive data processing.

When it comes to deep learning, GPUs are essential for training complex neural networks. Their ability to handle large datasets and perform simultaneous calculations greatly enhances the efficiency of deep learning algorithms. As AI models and datasets continue to grow in size and complexity, the computational speed and efficiency provided by GPUs become increasingly important.

The architectural features of GPUs, such as highly tuned tensor cores and advanced memory architectures, further contribute to their effectiveness in AI and machine learning workloads. These features allow for efficient processing of AI algorithms, ensuring optimal performance and accuracy.

To illustrate the significance of GPUs in the field of AI and machine learning, here are some key points:

- GPUs excel at parallel processing, enabling faster computations and more efficient analysis of complex data.

- They are particularly well-suited for training deep neural networks, thanks to their ability to handle large datasets and perform simultaneous calculations.

- GPU architectural features, such as highly tuned tensor cores and advanced memory architectures, enhance the efficiency of AI and machine learning workloads.

- As AI models and datasets continue to grow in size and complexity, GPUs provide the necessary computational speed and efficiency for these advancements.

To put the impact of GPUs on AI and machine learning in perspective, consider the following quote from Dr. Andrew Ng, the co-founder of Coursera and former chief scientist at Baidu:

“GPUs have been absolutely instrumental in creating a new wave of AI products and services because they are one of the enabling technologies behind deep learning. They allow researchers and developers to train neural networks more quickly, enabling us to tackle more complex problems and make exciting advancements in AI and machine learning.”

As AI continues to push the boundaries of what is possible, the role of GPUs in driving innovation and efficiency in machine learning applications cannot be overstated. They pave the way for faster, more accurate analysis of complex data and empower researchers and developers to tackle increasingly challenging problems.

Fueling the Future of AI

The future of AI and machine learning relies heavily on the ongoing development and optimization of GPUs. As AI models and datasets continue to evolve, GPUs will continue to play a pivotal role in providing the necessary computational power and efficiency. Researchers and developers can leverage the parallel processing capabilities of GPUs to accelerate training and inference, unlocking new possibilities and driving further advancements in the field of AI.

With the ever-increasing demand for AI-powered solutions across various industries, the importance of GPUs in enabling advanced machine learning applications should not be underestimated. As organizations strive to leverage the full potential of AI, GPUs will remain a key component in achieving optimal performance, accuracy, and efficiency in AI and machine learning.

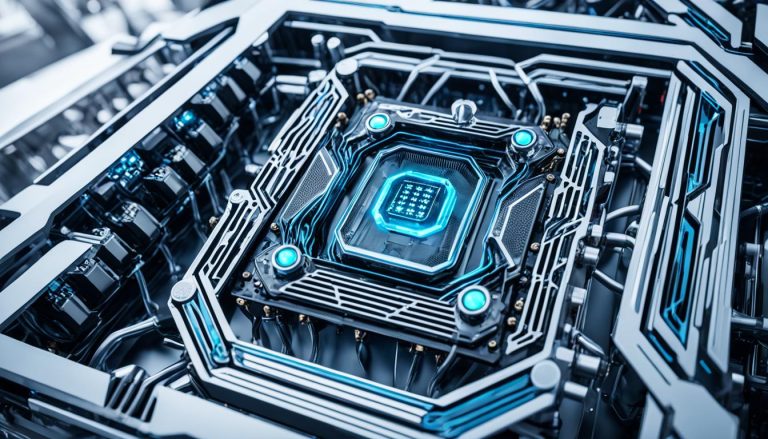

GPU Architecture and its Importance in AI Advancements

GPU architecture plays a crucial role in driving advancements in artificial intelligence (AI) by delivering unmatched computational speed and efficiency. GPUs excel at handling large datasets and complex data structures commonly found in AI and machine learning applications, making them indispensable for processing the information-intensive tasks required in these domains.

“The fusion of GPU architecture and AI has propelled the computational boundaries, allowing for faster, more sophisticated, and real-time applications.”

One of the key features that sets GPU architecture apart is its parallel processing capabilities. GPUs are structured with thousands of cores that work simultaneously to perform calculations, resulting in significantly faster computations compared to traditional CPUs. This parallel processing power is especially beneficial for AI applications that involve training and inference tasks.
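The idea of many cores working simultaneously can be sketched with a matrix multiplication, where every output element can be computed independently of the others. This is a minimal illustration only: the function names are hypothetical, and Python threads merely model how the work is split up. They do not reproduce the speed of real GPU cores.

```python
from concurrent.futures import ThreadPoolExecutor


def dot_row_col(a, b, i, j):
    """Compute one output element; it depends on no other output element."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def parallel_matmul(a, b, workers=4):
    """Multiply matrices by handing each output element to a worker.

    Python threads only model the decomposition; they do not reproduce
    the speed of real GPU cores.
    """
    rows, cols = len(a), len(b[0])
    cells = [(i, j) for i in range(rows) for j in range(cols)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        values = list(pool.map(lambda ij: dot_row_col(a, b, ij[0], ij[1]), cells))
    # Reassemble the flat list of results into rows.
    return [values[r * cols:(r + 1) * cols] for r in range(rows)]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
result = parallel_matmul(a, b)
print(result)
```

Because no output element waits on another, the same decomposition scales from four workers to thousands, which is the property GPUs exploit.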

Moreover, GPUs boast high-bandwidth memory, allowing for efficient data transfer and retrieval. This enables GPUs to handle the massive amounts of information commonly associated with AI tasks, such as processing large datasets or training complex neural networks.
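A rough way to see why memory bandwidth matters is to divide the amount of data by the rate at which it can be moved. The sketch below does that arithmetic; the workload size and both bandwidth values are hypothetical numbers chosen only to show the scaling, not specifications of any real product.

```python
def transfer_time_seconds(num_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Lower bound on the time to move num_bytes at a given sustained bandwidth."""
    if bandwidth_bytes_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return num_bytes / bandwidth_bytes_per_s


GB = 1e9

# Hypothetical workload: 1 billion parameters stored as 2-byte values.
weights_bytes = 1_000_000_000 * 2

# Hypothetical bandwidths chosen only to show the scaling, not product specs.
low_bandwidth = 50 * GB      # bytes per second
high_bandwidth = 1_000 * GB  # bytes per second

slow = transfer_time_seconds(weights_bytes, low_bandwidth)
fast = transfer_time_seconds(weights_bytes, high_bandwidth)
print(f"Reading all weights once: {slow * 1e3:.1f} ms vs {fast * 1e3:.1f} ms")
```

With these made-up figures, a twentyfold increase in bandwidth cuts the time to read the weights once by the same factor, regardless of how fast the cores themselves are.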

“The architectural features of GPUs, including highly tuned tensor cores and advanced memory architectures, enable more efficient processing of AI and machine learning workloads.”

Specialized cores within GPUs are another essential aspect of GPU architecture. These cores are optimized to perform specific tasks commonly found in machine learning algorithms, such as matrix operations and vector calculations. By dedicating specific hardware for these operations, GPUs can accelerate AI tasks and achieve higher computational performance.
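The kind of matrix operation such cores accelerate can be sketched as a multiply-accumulate on a small tile, D = A × B + C. The article does not describe how these cores work internally, so this is an assumed, simplified model written in plain Python, and `tile_multiply_accumulate` is a hypothetical name.

```python
def tile_multiply_accumulate(a, b, c):
    """Return a @ b + c for small square tiles given as nested lists."""
    n = len(a)
    return [
        [c[i][j] + sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
        for i in range(n)
    ]


identity = [[1, 0], [0, 1]]
a_tile = [[1, 2], [3, 4]]
accumulator = [[10, 10], [10, 10]]

# Multiplying by the identity leaves a_tile unchanged, so the result is
# a_tile with the accumulator added element by element.
d_tile = tile_multiply_accumulate(a_tile, identity, accumulator)
print(d_tile)
```

Larger matrix products can be built by repeating this step tile by tile and carrying the accumulator forward, which is why dedicating hardware to it pays off across so many machine learning workloads.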

Large-scale integration further contributes to the efficiency of GPU architecture. Combining multiple cores, memory units, and specialized tensor cores allows for efficient communication between different parts of the GPU, resulting in reduced latency and improved performance.

The Importance of GPU Architecture in Handling Large Datasets

Large datasets are a fundamental part of AI and machine learning. GPUs are specifically designed to handle these massive volumes of data efficiently and effectively. With GPUs, data can be processed in parallel, allowing for faster analysis and model training. This enables researchers and data scientists to work with more extensive and complex datasets, ultimately driving advancements in AI.

The Evolution of GPU Architecture and AI

Over the years, GPU architecture has evolved significantly, aligning with the growing demands of AI. The increasing computational power and efficiency of GPUs have enabled faster and more accurate AI algorithms, leading to groundbreaking advancements in various fields, including computer vision, natural language processing, and autonomous systems.

(Image: the structure of GPU architecture and its relationship with AI advancements.)

The Future of GPU Architecture in AI

As AI models and datasets continue to expand in size and complexity, the importance of GPU architecture will only continue to grow. Researchers and technology companies are constantly pushing the boundaries of AI, requiring increasingly powerful hardware to support their innovations. GPU manufacturers, such as NVIDIA and AMD, are continuously investing in research and development, further advancing GPU architecture to meet the evolving needs of the AI industry.

To summarize, GPU architecture plays a crucial role in driving AI advancements by providing unmatched computational speed and efficiency. Through their parallel processing capabilities, high-bandwidth memory, specialized cores, and large-scale integration, GPUs enable faster computations, reduced training times for neural networks, and more efficient processing of large datasets. As AI continues to progress, GPU architecture will remain at the forefront, pushing the boundaries of computational capabilities and enabling transformative applications across various industries.

Trade-offs and the Future of Inference Chipsets

In the inference phase of machine learning, speed, efficiency, and accuracy determine whether predictions are useful in real-world applications. GPUs and other specialized chipsets have dominated the AI acceleration market so far, and demand for inference chipsets is projected to reach $52 billion by 2025.

Traditionally, CPUs have been considered less viable for inference due to their lower performance compared to specialized hardware. However, recent advancements in CPU performance and optimization have started challenging this notion, opening up new possibilities for the future.

As machine learning models and datasets grow larger and more complex, there is an increasing need to unlock inference from the chipset, that is, to decouple it from specialized accelerators and leverage the computational power of CPUs. This approach offers several advantages, including cost savings, scalability, and the ability to deploy models on a broader range of devices and systems.

“The future of inference chipsets lies in striking the right balance between speed, efficiency, and performance. By harnessing the computational capabilities of CPUs, we can expand the reach of AI applications and drive further innovation.”

To better understand the trade-offs between inference chipsets and CPUs, let’s compare their key attributes:

| Attribute | Inference Chipsets | CPUs |
|---|---|---|
| Speed | Faster | Slower |
| Efficiency | High | Varies |
| Accuracy | High | High |
| Performance | Superior | Competitive |
| Cost | Expensive | Affordable |

As seen in the table above, inference chipsets typically offer faster speeds and higher efficiency compared to CPUs. However, CPUs can still deliver competitive performance and high accuracy while being more affordable. With advancements in CPU optimization and growing datasets, CPUs are becoming increasingly attractive for inference tasks.
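The trade-off described here can be turned into a simple selection rule: among the options that meet a latency budget, pick the one that serves requests most cheaply. The sketch below is illustrative only. The `HardwareOption` class, the helper functions, and every latency and price figure are made up for the example and do not describe real hardware.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HardwareOption:
    name: str
    latency_ms: float         # time per inference request
    cost_per_hour_usd: float  # hypothetical hourly price


def cost_per_million_requests(option: HardwareOption) -> float:
    """Cost of serving one million requests one after another on one device."""
    hours = option.latency_ms * 1_000_000 / 1000 / 3600
    return hours * option.cost_per_hour_usd


def cheapest_within_budget(options, latency_budget_ms):
    """Pick the lowest-cost option whose latency fits the budget, or None."""
    eligible = [o for o in options if o.latency_ms <= latency_budget_ms]
    return min(eligible, key=cost_per_million_requests, default=None)


# All numbers are made up for illustration.
options = [
    HardwareOption("inference chipset", latency_ms=5.0, cost_per_hour_usd=3.00),
    HardwareOption("cpu", latency_ms=40.0, cost_per_hour_usd=0.20),
]

relaxed = cheapest_within_budget(options, latency_budget_ms=100.0)
strict = cheapest_within_budget(options, latency_budget_ms=10.0)
print(relaxed.name, strict.name)
```

With these invented figures, the CPU wins when the latency budget is loose and the specialized chipset wins when it is tight, which mirrors the balance the table points to.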

The future of inference chipsets lies in finding the right balance between specialized hardware and optimized CPUs. By capitalizing on the strengths of both, we can achieve optimal performance, cost-effectiveness, and scalability in AI and machine learning applications.

Conclusion

In conclusion, GPUs have played a pivotal role in revolutionizing the field of AI and machine learning. With their parallel processing capabilities and specialized architecture, GPUs have become indispensable for handling the immense computational demands of AI algorithms and large datasets. The dominance of specialized chipsets in the AI acceleration market has been widely acknowledged, but recent advancements in CPU performance and optimization have sparked a renewed recognition of the potential of CPUs for inference tasks.

By unlocking inference from the chipset, organizations can gain a range of benefits. Cost savings, scalability, and broader deployment possibilities are just a few of the advantages of leveraging the computational power of CPUs. As we look to the future of AI, the optimization of CPUs and the synergy between GPUs and CPUs will continue to shape the field, paving the way for even greater innovation and efficiency in machine learning applications.

In essence, the future of AI hinges on the careful balance of harnessing the power of GPUs and optimizing CPUs. These technologies working together will unlock the full potential of machine learning and pave the way for new advancements in the field. As the demand for AI continues to grow, organizations must embrace CPU optimization and leverage the strengths of both GPUs and CPUs to propel AI-driven innovation forward.

FAQ

What is the role of GPUs in AI and machine learning?

GPUs play a crucial role in AI and machine learning by enabling rapid processing and analysis of complex data. Their parallel processing capabilities make them well-suited for accelerating machine learning algorithms that involve extensive data processing.

What is the importance of GPU architecture in AI advancements?

GPU architecture is essential for driving AI advancements due to its unmatched computational speed and efficiency. GPUs excel at handling large datasets and complex data structures common in AI and machine learning. Their parallel processing capabilities, high-bandwidth memory, specialized cores, and large-scale integration all contribute to faster computations, reduced training times for neural networks, and more efficient processing of large datasets.

What are the benefits of unlocking inference from the chipset and leveraging CPUs?

Unlocking inference from the chipset and leveraging CPUs allows for cost savings, scalability, and the ability to deploy models on a broader range of devices and systems. As machine learning models and datasets become larger and more complex, the need to harness the computational power of CPUs becomes increasingly important.

How are GPUs revolutionizing the field of AI and machine learning?

GPUs have revolutionized the field of AI and machine learning by driving advancements in neural network training and inference. Their parallel processing capabilities and specialized architecture make them essential for handling the computational demands of AI algorithms and large datasets.

What is the future of AI and CPU optimization?

As the future of AI unfolds, the optimization of CPUs and the synergy between GPUs and CPUs will continue to shape the field, enabling even greater innovation and efficiency in machine learning applications.
