Neural networks are computational models loosely inspired by the structure and function of the human brain. These models have transformed the field of artificial intelligence (AI) by enabling new applications in computer vision, natural language processing, and more. To get good performance from them, however, it is crucial to understand the hardware that runs them.

Hardware plays a vital role in powering up AI projects, and different components are used to facilitate neural network operations. This article will delve into the basics of neural network hardware and explore its applications in AI projects.

Key Takeaways:

- Neural networks are computational models inspired by the human brain.

- Hardware forms the foundation of neural network implementation.

- Understanding the different hardware components is essential for optimizing neural network performance.

- Hardware choices depend on specific AI project requirements.

- Stay tuned to explore the nuances of neural network hardware in the upcoming sections.

Current Hardware for Deep Learning Computational Needs

When it comes to deep learning computational needs, specific types of hardware play a crucial role in optimizing performance. Understanding the different options available, such as GPUs, TPUs, FPGAs, and ASICs, is essential for powering AI projects and achieving desired outcomes.

Graphics Processing Units (GPUs)

GPUs are widely recognized for their processing power and ability to handle complex computations, making them a popular choice for deep learning. With their parallel processing architecture, GPUs can execute many arithmetic operations simultaneously, which suits the matrix and vector operations at the core of neural networks. Their ability to rapidly process large amounts of data makes them well-suited for both training and inference in deep learning applications.
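To make the parallelism concrete, the following is a minimal Python sketch, not GPU code. It shows that each output of a dense layer is an independent multiply-accumulate over the same input, so the outputs can be computed in any order or all at once. The function names, the toy weights, and the use of a thread pool are assumptions made for illustration; Python threads do not reproduce GPU speedups, they only demonstrate that the work splits cleanly.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vec):
    """Multiply-accumulate one weight row against the input vector."""
    return sum(w * x for w, x in zip(row, vec))


def dense_layer_sequential(weights, vec):
    """Compute the output neurons one after another."""
    return [dot(row, vec) for row in weights]


def dense_layer_parallel(weights, vec, workers=4):
    """Compute the output neurons concurrently; no row depends on another."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, vec), weights))


# Toy layer with 4 output neurons and 3 inputs (illustrative values only).
weights = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [0, 1, 0]]
inputs = [1, 0, -1]

seq = dense_layer_sequential(weights, inputs)
par = dense_layer_parallel(weights, inputs)
print(seq == par)  # both strategies produce the same outputs
```

A GPU applies the same idea at a much larger scale, assigning independent pieces of the computation to many hardware threads.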

Tensor Processing Units (TPUs)

TPUs, developed by Google as AI accelerator application-specific integrated circuits (ASICs), are designed to excel in tensor operations. These specialized hardware units can optimize the performance of deep learning models by efficiently executing tensor computations. TPUs offer impressive speed and efficiency in handling complex neural network computations, making them a valuable resource for large-scale deep learning projects.

Field-Programmable Gate Arrays (FPGAs)

FPGAs are another important type of hardware used in deep learning applications due to their flexibility and customizability. These integrated circuits can be reprogrammed to meet specific application requirements, making them suitable for neural network implementation. FPGAs can efficiently parallelize computations and leverage the sparsity and redundancy present in neural networks, reducing computation and memory requirements.

Application-Specific Integrated Circuits (ASICs)

ASICs are specialized hardware chips designed for specific purposes, including neural network implementation. These dedicated circuits optimize performance and minimize power consumption, allowing for fast and efficient handling of specific computations required in deep learning. ASICs support various optimization techniques like quantization and pruning, enhancing their capabilities in accelerating neural network operations.

Each type of hardware—GPUs, TPUs, FPGAs, and ASICs—offers unique advantages in addressing deep learning computational needs. The choice of hardware depends on the specific requirements of the project, taking into consideration factors such as processing power, energy efficiency, flexibility, and cost.

“The intelligent use of GPU accelerated computing can speed up deep learning algorithms by an order of magnitude or more”. – Yann LeCun, Chief AI Scientist, Facebook AI Research.

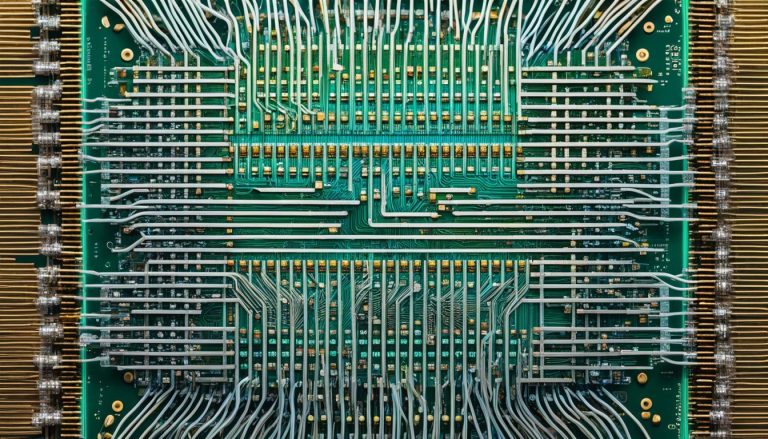

FPGAs: Flexible and Customizable

Field-Programmable Gate Arrays (FPGAs) are integrated circuits that offer high flexibility and customizability for neural network implementation. Unlike CPUs and GPUs, which have fixed architectures, FPGAs can be configured to implement almost any digital logic function that fits within the device's resources. This makes them well suited to adapting to the specific requirements of different neural network models and applications.

FPGAs support a wide range of data types and precision levels, allowing developers to optimize performance and memory usage. They can exploit the sparsity and redundancy inherent in neural networks, reducing the amount of computation and memory required. This capability not only improves efficiency but also enables the deployment of neural networks on resource-constrained devices.
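As a rough illustration of how exploiting sparsity reduces computation, the sketch below compares a dot product that performs every multiply-accumulate (MAC) with one that skips zero weights. It is plain Python rather than FPGA logic, and the function names and sample values are assumptions chosen for the example.

```python
def dense_dot(weights, activations):
    """Dot product that performs every multiply-accumulate (MAC)."""
    total, macs = 0.0, 0
    for w, a in zip(weights, activations):
        total += w * a
        macs += 1
    return total, macs


def sparse_dot(weights, activations):
    """Dot product that skips every MAC whose weight is exactly zero."""
    total, macs = 0.0, 0
    for w, a in zip(weights, activations):
        if w == 0:
            continue  # a zero weight contributes nothing, so no work is needed
        total += w * a
        macs += 1
    return total, macs


# Illustrative weight vector in which 5 of the 8 entries are zero.
weights = [0.0, 0.5, 0.0, 0.0, -1.0, 0.0, 0.0, 2.0]
activations = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]

dense_result, dense_macs = dense_dot(weights, activations)
sparse_result, sparse_macs = sparse_dot(weights, activations)
print(dense_result, dense_macs)
print(sparse_result, sparse_macs)
```

Both versions return the same result, but the sparse version performs 3 MACs instead of 8. Custom logic on an FPGA can build this kind of skipping directly into the datapath.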

Although FPGAs require low-level programming skills and have limited resources compared to ASICs (Application-Specific Integrated Circuits), they have been successfully used to implement various types of neural networks. Their flexibility and customizability make them suitable for a wide range of applications, from image recognition to natural language processing.

Let’s take a look at a comparison table illustrating the key features and advantages of FPGAs compared to other neural network hardware:

| Hardware | Flexibility | Customizability | Resources |
|---|---|---|---|
| FPGAs | High | High | Limited |
| CPU | Low | Low | High |
| GPU | Low | Low | High |
| ASIC | Low | Low | High |

In conclusion, FPGAs provide a flexible and customizable hardware solution for neural network implementation. Their ability to adapt to various logic functions, support different data types, and reduce computation and memory requirements makes them suitable for a wide range of applications. While they may require specialized programming skills and have limited resources compared to ASICs, FPGAs offer a cost-effective and versatile option for implementing neural networks.

ASICs: Fast and Efficient

Application-Specific Integrated Circuits (ASICs) are dedicated hardware chips designed and manufactured for specific purposes. These specialized architectures optimize performance, minimize power consumption, and reduce size for neural network implementation.

ASICs offer fast and efficient hardware acceleration, making them ideal for high-performance computing tasks. They can implement circuits tailored to specific neural network operations like matrix multiplication and convolution, enhancing overall computational efficiency.
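Matrix multiplication and convolution are closely related, which is one reason a single multiply-accumulate array can serve both. The sketch below, in plain Python, shows one common lowering (often called im2col) in which a 1D convolution is rewritten as a matrix-vector product. The function names and sample values are assumptions for illustration, and real accelerators may use different dataflows.

```python
def conv1d_direct(signal, kernel):
    """Valid-mode 1D convolution as used in neural networks (no kernel flip)."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def im2col_1d(signal, k):
    """Unroll each sliding window of length k into one row of a matrix."""
    return [signal[i:i + k] for i in range(len(signal) - k + 1)]


def matvec(matrix, vec):
    """Plain matrix-vector product built from multiply-accumulates."""
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


signal = [1, 2, 3, 4, 5]
kernel = [1, 0, -1]

direct = conv1d_direct(signal, kernel)
lowered = matvec(im2col_1d(signal, len(kernel)), kernel)
print(direct == lowered)  # the two formulations agree
```

Because the convolution reduces to the same multiply-accumulate pattern as a matrix product, hardware optimized for one operation can usually be reused for the other.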

Moreover, ASICs support various optimization techniques, including quantization and pruning, which help further improve performance and reduce memory requirements. Quantization allows for reduced precision computations, while pruning eliminates unnecessary connections, reducing the overall model size.
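The two techniques can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions: symmetric 8-bit quantization with a single scale per weight list, and magnitude-based pruning with a fixed threshold. The function names, threshold, and weights are hypothetical, and production toolchains use more elaborate schemes.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats onto integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in quantized]


def prune_by_magnitude(weights, threshold):
    """Zero out weights whose absolute value is below the threshold."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]


# Illustrative weights; the two smallest are removed by pruning.
weights = [0.02, -1.27, 0.64, -0.01, 0.9]

pruned = prune_by_magnitude(weights, threshold=0.05)
q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)
print(pruned)
print(q)
```

After these steps each surviving weight is stored as a small integer plus one shared scale, and the pruned weights are zeros that the hardware can skip. The restored values differ from the pruned originals by at most half a quantization step.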

ASICs provide unparalleled speed and efficiency in neural network implementation. Their specialized architectures and optimization techniques make them a reliable choice for accelerating AI workloads.

Despite their advantages, ASICs require a significant investment and have limited reusability and upgradability. The design process for creating ASICs can be complex, involving intricate circuit designs and manufacturing processes. However, their robust performance and efficiency make them a valuable choice for applications that demand high computational performance.

Implementing neural networks on ASICs requires deep hardware knowledge and expertise. While ASICs provide substantial benefits, they may not be the most practical solution for all use cases. Researchers and developers need to carefully consider their specific needs and evaluate whether ASICs are the right choice for their neural network implementation.

Overall, ASICs play a vital role in the field of neural network hardware, offering fast and efficient hardware acceleration through specialized architectures and optimization techniques.

Advantages of ASICs in Neural Network Implementation

Using ASICs for neural network implementation offers several advantages:

- Fast and efficient hardware acceleration

- Specialized architectures for specific neural network operations

- Support for optimization techniques like quantization and pruning

- Enhanced computational efficiency and reduced memory requirements

These advantages make ASICs an attractive choice for applications that require high-performance computing and efficient neural network implementation.

Limitations of ASICs

While ASICs offer significant advantages, they also have certain limitations:

- Significant investment and limited reusability

- Complex design and manufacturing processes

- Need for deep hardware knowledge and expertise

Despite these limitations, ASICs remain a powerful solution for fast and efficient neural network implementation, particularly in applications where performance is critical.

Neuromorphic Chips: Bio-Inspired and Energy-Efficient

Neuromorphic chips are a fascinating development in neural network implementation. These chips, using integrated circuits, aim to emulate the structure and function of the biological brain. By employing both analog and digital circuits, neuromorphic chips can process and store information in a distributed and parallel manner.

One of the key advantages of neuromorphic chips is their high energy efficiency. Unlike conventional hardware platforms, these chips eliminate the separation between processing and memory, reducing data movement overhead. This design approach not only improves energy efficiency but also enables scalability in implementing large-scale neural networks.
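A simple way to picture processing and memory living in the same place is a spiking neuron model. The sketch below implements a leaky integrate-and-fire neuron, a model commonly associated with neuromorphic designs; the article does not name a specific neuron model, so this choice, along with the class name, leak factor, threshold, and inputs, is an assumption for illustration.

```python
class LIFNeuron:
    """Leaky integrate-and-fire neuron that keeps its own state locally."""

    def __init__(self, leak=0.5, threshold=1.0):
        self.leak = leak            # fraction of potential kept each step
        self.threshold = threshold  # potential at which the neuron fires
        self.potential = 0.0        # local memory: the membrane potential

    def step(self, input_current):
        """Advance one time step; return 1 if the neuron spikes, else 0."""
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0    # reset after spiking
            return 1
        return 0


neuron = LIFNeuron(leak=0.5, threshold=1.0)
inputs = [0.6, 0.6, 0.6, 0.0, 0.6, 0.6, 0.6]
spikes = [neuron.step(x) for x in inputs]
print(spikes)
```

The neuron's only state is its own potential, updated where it is stored, and it produces output only when it fires. A chip built from many such units can update them in parallel without moving data to and from a separate memory.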

Although neuromorphic chips present exciting possibilities, they do come with certain design challenges. When compared to traditional hardware platforms, neuromorphic chips may exhibit lower accuracy. However, ongoing research and advancements are continuously addressing these limitations, bringing them closer to the standards set by conventional hardware.

Despite the design challenges, neuromorphic chips show promise in advancing neural network implementation. Their bio-inspired architecture and energy efficiency make them an attractive option for various AI projects. As researchers and developers continue to explore the potential of these chips, the future holds great possibilities for scaling up neural networks and achieving breakthroughs in AI applications.

Implementation Challenges and Considerations

Implementing neural networks on specialized hardware comes with its fair share of challenges and considerations. The design complexity and difficulty of such implementations often require individuals with low-level programming skills and a solid understanding of hardware. It’s crucial to overcome these challenges to ensure successful implementation.

One of the primary challenges is the design complexity associated with neural network hardware. The intricate nature of neural networks demands careful attention to detail, with various interconnected layers and nodes. This complexity requires expertise in low-level programming and extensive hardware knowledge to develop efficient and optimized hardware architectures.

“Designing and implementing neural networks on specialized hardware demands expertise in low-level programming and hardware knowledge to overcome the design complexity.”

Moreover, hardware limitations in terms of resources, performance, and cost must be taken into account during implementation. Specialized hardware platforms may have limited resources, such as memory and storage capacity, which must be carefully managed to optimize performance. Performance considerations include the efficiency of data handling, processing speed, and power consumption.
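A quick back-of-the-envelope check helps when judging whether a model fits within a device's memory. The sketch below estimates parameter storage at different numeric precisions. The parameter count and the 8 MiB capacity are hypothetical numbers, and the estimate covers weights only, ignoring activations and other runtime buffers.

```python
# Storage cost per value for a few common numeric formats.
BYTES_PER_VALUE = {"float32": 4, "float16": 2, "int8": 1}


def parameter_memory_bytes(num_parameters, dtype):
    """Bytes needed to store the weights alone at the given precision."""
    return num_parameters * BYTES_PER_VALUE[dtype]


def fits_in_memory(num_parameters, dtype, capacity_bytes):
    """True if the weights alone fit within the given memory capacity."""
    return parameter_memory_bytes(num_parameters, dtype) <= capacity_bytes


num_parameters = 5_000_000   # hypothetical model size
capacity = 8 * 1024 * 1024   # hypothetical 8 MiB of on-chip memory

for dtype in BYTES_PER_VALUE:
    size_mib = parameter_memory_bytes(num_parameters, dtype) / (1024 * 1024)
    fits = fits_in_memory(num_parameters, dtype, capacity)
    print(f"{dtype}: {size_mib:.1f} MiB, fits={fits}")
```

With these hypothetical numbers, only the int8 version of the model fits in the available memory, which is why precision is often one of the first settings to revisit on constrained hardware.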

Cost is also an important consideration. Specialized hardware can be expensive to develop and deploy, requiring a significant investment. Therefore, it’s crucial to evaluate the cost-effectiveness of the hardware solution while considering the specific requirements of the neural network project.

Compatibility and interoperability with existing neural network frameworks and tools are additional considerations for successful implementation. Ensuring seamless integration with established frameworks and tools is vital for efficient development and deployment, minimizing compatibility issues that can hinder progress.

By understanding and addressing these challenges and considerations, researchers and developers can navigate the complexities of neural network hardware implementation more effectively.

Guidelines for Successful Implementation

When tackling neural network hardware implementation, it’s important to adhere to certain guidelines to maximize effectiveness and efficiency. These guidelines include:

- Conducting thorough research and analysis to choose the most suitable hardware platform based on project requirements.

- Collaborating with hardware experts and consultants to leverage their specialized knowledge and experience.

- Developing a detailed implementation plan, outlining the necessary steps, timeline, and resource allocation.

- Considering the scalability of the chosen hardware platform to accommodate potential future growth and expansion of the project.

- Regularly monitoring and evaluating the performance of the implemented hardware solution, making necessary adjustments and optimizations.

By following these guidelines and remaining aware of the challenges and considerations involved, successful implementation of neural networks on specialized hardware can be achieved, unlocking the full potential of artificial intelligence.

Comparison of Design Complexity

Below is a table comparing the design complexity of different neural network hardware:

| Hardware Platform | Design Complexity |
|---|---|
| GPUs | Medium |
| TPUs | Low |
| FPGAs | High |
| ASICs | High |

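As shown in the table, GPUs and TPUs have lower design complexity than FPGAs and ASICs. The ratings reflect the effort required of the developer: GPUs and TPUs are programmed through existing software frameworks, whereas FPGAs and custom ASICs require hardware design work. Although TPUs are themselves ASICs, they are used as ready-made accelerators rather than designed for each project.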

Applications and Future Developments

Neural network hardware plays a crucial role in various fields, such as computer vision, natural language processing, and speech recognition. These applications leverage the power of neural networks and their ability to analyze and interpret complex data. By utilizing specialized hardware, researchers and developers can enhance the performance and efficiency of these applications.

As technology continues to advance, the future holds promising developments in hardware architectures. Ongoing research focuses on improving hardware efficiency, scalability, and compatibility with existing frameworks, paving the way for more robust neural network implementations. These advancements aim to address current limitations and open up new possibilities for AI applications.

One of the key areas of future development is hardware efficiency. By optimizing the hardware for neural network computations, researchers aim to reduce power consumption and improve overall performance. This is particularly important in applications where energy efficiency is a critical factor, such as mobile and edge computing.

Scalability is another focus for future developments. As neural networks become larger and more complex, hardware architectures need to support the increased computational and memory requirements. Scalable hardware designs will enable the implementation of large-scale neural networks and facilitate the analysis of massive datasets.

Additionally, compatibility with existing frameworks is a priority for future neural network hardware developments. Ensuring seamless integration and interoperability with popular deep learning frameworks, such as TensorFlow and PyTorch, will enable researchers and developers to leverage the advantages of both software and hardware to achieve optimal results.

With these future developments in neural network hardware, the possibilities for AI applications are expanding. The potential for advancements in computer vision, natural language processing, and speech recognition is vast, offering opportunities for innovation and breakthroughs in various industries.

As we look ahead, it is important to consider the impact of these developments on the future of AI. Neural network hardware advancements will continue to push the boundaries of what AI systems can achieve, enabling more sophisticated and powerful applications that can revolutionize the way we interact with technology.

Conclusion

Understanding neural network hardware basics is crucial for powering up AI projects. When it comes to deep learning computational needs, different hardware options have their advantages and are essential for various applications. FPGAs offer flexibility and customizability, allowing researchers to tailor the hardware to their specific needs. ASICs provide fast and efficient performance by implementing specialized architectures and optimization techniques. Neuromorphic chips offer bio-inspired energy efficiency, making them a promising choice for energy-conscious implementations.

Implementing neural networks on hardware platforms does come with its challenges and considerations. Design complexity and hardware limitations need to be taken into account, as well as compatibility with existing frameworks. However, advancements in hardware architectures continue to drive future developments, opening up new possibilities for improving neural network performance.

By carefully choosing the right hardware for neural network implementation, researchers and developers can unlock the full potential of AI. Whether it’s GPUs, TPUs, FPGAs, ASICs, or neuromorphic chips, each option has its strengths and should be considered based on the specific computational requirements of the project. With the right hardware in place, AI projects can benefit from increased performance, efficiency, and scalability, driving innovation in the field and pushing the boundaries of what neural networks can achieve.

FAQ

What are neural network hardware basics?

Neural network hardware basics refer to the fundamental components used in implementing neural networks, such as GPUs, TPUs, FPGAs, and ASICs.

Which hardware components are essential for deep learning computational needs?

The essential hardware components for deep learning computational needs include GPUs, TPUs, FPGAs, and ASICs.

What is the difference between GPUs and TPUs?

GPUs (Graphics Processing Units) are widely used for their processing power, while TPUs (Tensor Processing Units) are application-specific integrated circuits developed by Google for fast tensor operations.

What is an FPGA and how does it support neural network implementation?

Field-Programmable Gate Arrays (FPGAs) are integrated circuits that offer high flexibility and customizability for neural network implementation. They can support different data types and precision levels, and exploit the sparsity and redundancy of neural networks to reduce computation and memory requirements.

What are ASICs and how do they optimize neural network performance?

Application-Specific Integrated Circuits (ASICs) are dedicated hardware chips designed and manufactured for specific purposes. ASICs optimize neural network performance by minimizing power consumption, reducing size, and implementing specialized architectures and circuits tailored to specific neural network operations.

What are neuromorphic chips and how do they differ from conventional hardware platforms?

Neuromorphic chips emulate the structure and function of the biological brain using integrated circuits. They offer high energy efficiency and scalability by eliminating the separation between processing and memory. However, they may have design challenges and lower accuracy compared to conventional hardware platforms.

What are some challenges and considerations in implementing neural networks on hardware platforms?

Implementing neural networks on hardware platforms requires low-level programming skills and hardware knowledge. Additionally, hardware limitations in terms of resources, performance, and cost must be considered. Compatibility and interoperability with existing neural network frameworks and tools are also important considerations.

What are the applications of neural network hardware?

Neural network hardware finds applications in various fields such as computer vision, natural language processing, and speech recognition.

What are the future developments in neural network hardware?

Ongoing research focuses on improving hardware efficiency, scalability, and compatibility with existing frameworks. Future developments in hardware architectures hold the potential to further enhance neural network performance.

How do I choose the right hardware for neural network implementation?

Choosing the right hardware for neural network implementation requires considering the specific computational needs, design complexity, performance requirements, and compatibility with existing frameworks and tools.