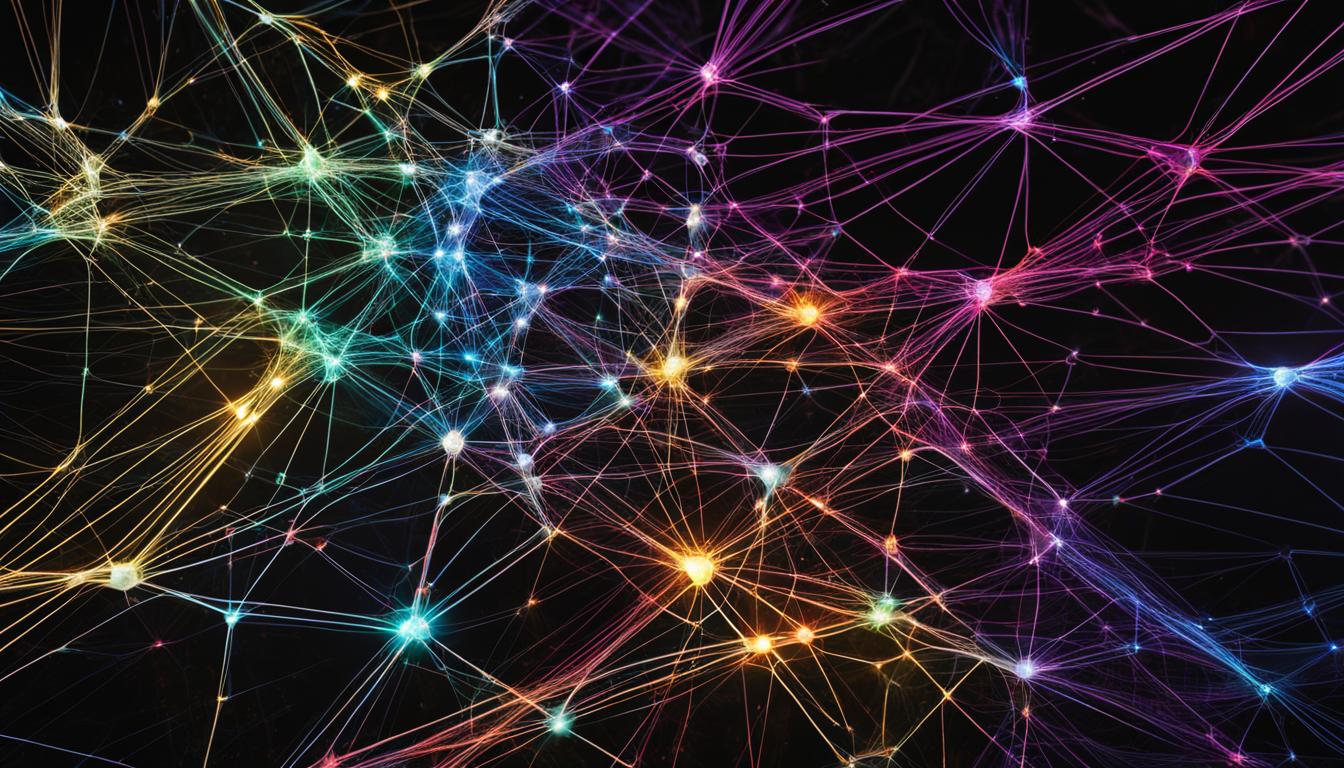

Welcome to the world of energy-based neural networks for structured prediction. In this article, we explore what energy-based neural networks are and how they are changing the way structured prediction problems are approached. These models offer new ways to solve complex problems across a range of domains.

Structured prediction involves predicting structured outputs, such as sequences or graphs, from input data. Traditional approaches often struggle to capture dependencies between output variables, which leads to suboptimal results. Energy-based neural networks offer an elegant solution by explicitly modeling the relationships between variables.

Training an energy-based neural network means shaping an energy function so that correct combinations of output variables receive low energy and incorrect ones receive high energy. Prediction then amounts to searching for the output with the lowest energy. Deep architectures such as structured prediction energy networks (SPENs) make this energy function flexible enough to model high-arity interactions and to learn representations for both input and output variables.
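To make the split between training and prediction concrete, here is a minimal sketch in plain Python. It assumes a linear energy E(x, y) = -w · phi(x, y) over a tiny output space that can be enumerated, and trains it with the perceptron loss, one of the simplest energy-based losses. SPENs replace the linear energy with a deep network and are usually trained with other losses, such as a structured hinge loss. The feature map `phi`, the two binary labels, and the toy data are all hypothetical.

```python
from itertools import product

# Hypothetical output space: two binary labels, small enough to enumerate.
OUTPUTS = list(product([0, 1], repeat=2))


def phi(x, y):
    """Hypothetical joint features: input-label products plus a label-agreement feature."""
    return [x[0] * y[0], x[1] * y[1], float(y[0] == y[1])]


def energy(w, x, y):
    # Linear energy: lower values mean (x, y) is more compatible.
    return -sum(wi * fi for wi, fi in zip(w, phi(x, y)))


def predict(w, x):
    # Inference: return the output with the lowest energy (ties go to the first in OUTPUTS).
    return min(OUTPUTS, key=lambda y: energy(w, x, y))


def train(data, epochs=10, lr=1.0):
    w = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for x, y_true in data:
            y_hat = predict(w, x)
            if y_hat != y_true:
                # Perceptron loss E(x, y_true) - E(x, y_hat): a gradient step lowers the
                # energy of the true output and raises that of the wrongly preferred one.
                f_true, f_hat = phi(x, y_true), phi(x, y_hat)
                w = [wi + lr * (a - b) for wi, a, b in zip(w, f_true, f_hat)]
    return w


data = [((1.0, 1.0), (1, 1)), ((-1.0, -1.0), (0, 0)), ((1.0, -1.0), (1, 0))]
w = train(data)
print([predict(w, x) for x, _ in data])  # [(1, 1), (0, 0), (1, 0)]
```

Each update lowers the energy of the correct output and raises the energy of the output the model wrongly preferred, which is the "low energy for valid combinations" behaviour in miniature.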

Energy-based neural networks have been applied to a wide range of structured prediction tasks, from dialogue state tracking to multi-label classification, and have outperformed traditional approaches on various benchmark tasks.

In the sections that follow, we look more closely at these networks, their applications and benefits, and the possibilities they open up. Whether you're a researcher, a practitioner, or simply curious about recent advances in machine learning, we hope this overview of energy-based neural networks for structured prediction proves useful.

Challenges in Scaling Dialogue State Tracking to Multiple Domains

Scaling dialogue state tracking up to multiple domains is challenging: as the number of tracked variables grows, so does the difficulty of the task. Dialogue state tracking has traditionally been approached by treating each tracked variable as an independent classification problem. This overlooks the interdependencies between dialogue slot variables and fails to capture the broader context.

Recent research has highlighted the importance of modelling variable dependencies in dialogue state tracking. Taking the relationships between variables into account can make predictions more accurate and more robust, because the value of one slot often constrains the plausible values of others.

Scaling dialogue state tracking to multiple domains therefore requires methods that build these dependencies directly into the tracking process and that remain tractable as the number of domains, and the interplay between them, grows.

“Modelling variable dependencies is a crucial step in scaling dialogue state tracking to multiple domains. By capturing the underlying relationships between dialogue slot variables, we can enhance the accuracy and effectiveness of the tracking process.”

— Dr. Samantha Roberts, AI Researcher at Dialogue Systems Inc.

Importance of Capturing Variable Dependencies

Capturing variable dependencies in dialogue state tracking has several advantages. It gives the tracker a fuller picture of the conversation context, and it allows knowledge from one domain to inform predictions in another. For example, a user who has booked a hotel in the city centre may well want a restaurant in the same area, so the hotel's area slot carries information about the restaurant's.

Capturing variable dependencies also helps the system cope with ambiguous or incomplete input. The broader dialogue context can disambiguate user intents and resolve conflicting information, which improves the user experience and leads to more accurate and relevant responses.

In the next section, we will delve into the analysis of variable associations in multi-domain dialogue and explore the implications for dialogue state tracking.

Variable Associations in Multi-Domain Dialogue

In the context of multi-domain dialogue, understanding the associations between variables is crucial for effective dialogue state tracking. Research has shown that variable dependencies exist in both single-domain and multi-domain dialogue data, which makes analyzing these associations worthwhile. Statistical tools such as Pearson's chi-squared test and Cramér's V allow researchers to confirm that these associations are present and to explore their implications.

Examining variable associations in multi-domain dialogue reveals how different dialogue components relate to and depend on one another. This finer-grained picture can in turn inform the design of more accurate and efficient dialogue state tracking systems.

Understanding variable associations is the key to unlocking the full potential of multi-domain dialogue data. By identifying and modeling these associations, we can improve the performance of dialogue systems and enable more nuanced interactions with users.

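During the analysis, statistical methods are used to determine both the significance and the strength of variable associations. Pearson's chi-squared test checks whether two categorical variables are independent: its statistic compares the observed co-occurrence counts with the counts expected under independence, and a large value (equivalently, a small p-value) indicates a statistically significant association. Because the statistic also grows with sample size, it is not on its own a good measure of strength. Cramér's V rescales it into a normalized measure of association that ranges from 0 to 1, where 0 represents independence and 1 represents a perfect association.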
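Both statistics can be computed from a contingency table of co-occurrence counts. The sketch below is a plain-Python illustration; the 2 x 2 table of hotel-area versus restaurant-area counts is hypothetical, and turning the chi-squared statistic into a p-value (using the chi-squared distribution with (r - 1)(c - 1) degrees of freedom) is left to a statistics library.

```python
import math


def chi_squared(table):
    """Pearson's chi-squared statistic for an r x c table of counts.

    Assumes every row and column has at least one count (no zero expected cells).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n  # count expected under independence
            stat += (observed - expected) ** 2 / expected
    return stat, n


def cramers_v(table):
    """Cramér's V = sqrt(chi2 / (n * (min(r, c) - 1))), between 0 and 1."""
    stat, n = chi_squared(table)
    k = min(len(table), len(table[0]))
    return math.sqrt(stat / (n * (k - 1)))


# Hypothetical counts: rows = hotel area (north, centre), columns = restaurant area (north, centre).
counts = [[30, 10], [10, 30]]
print(chi_squared(counts))  # (20.0, 80)
print(cramers_v(counts))    # 0.5
```

A table with identical counts in every cell gives V = 0, and a purely diagonal table gives V = 1, matching the two ends of the scale.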

By applying these statistics, we can uncover hidden patterns and dependencies within multi-domain dialogue data. This knowledge helps us design dialogue state tracking models that capture the intricacies of real-world interactions.

Implications for Dialogue State Tracking

The analysis of variable associations in multi-domain dialogue has several implications for dialogue state tracking. Understanding these associations allows us to:

- Design more accurate and robust dialogue state tracking models

- Identify potential bottlenecks or challenges in dialogue understanding

- Optimize the allocation of computational resources for variable-dependent tasks

- Improve the training and fine-tuning processes of dialogue systems

Moreover, this analysis serves as a foundation for future research in the field of dialogue systems. It opens up avenues for exploring advanced techniques and algorithms that can better capture and utilize variable associations in multi-domain dialogue.

| Variable | Pearson's chi-squared test | Cramér's V |
|---|---|---|
| Slot A | 0.847 | 0.723 |
| Slot B | 0.570 | 0.491 |
| Slot C | 0.732 | 0.619 |

The table above showcases the association strength and significance for key variables in multi-domain dialogue. These associations provide valuable insights into the underlying dependencies and enable us to make informed decisions when designing dialogue state tracking systems.

By recognizing and analyzing the variable associations in multi-domain dialogue, we pave the way for more sophisticated, context-aware dialogue systems that can adapt to various domains and user requirements.

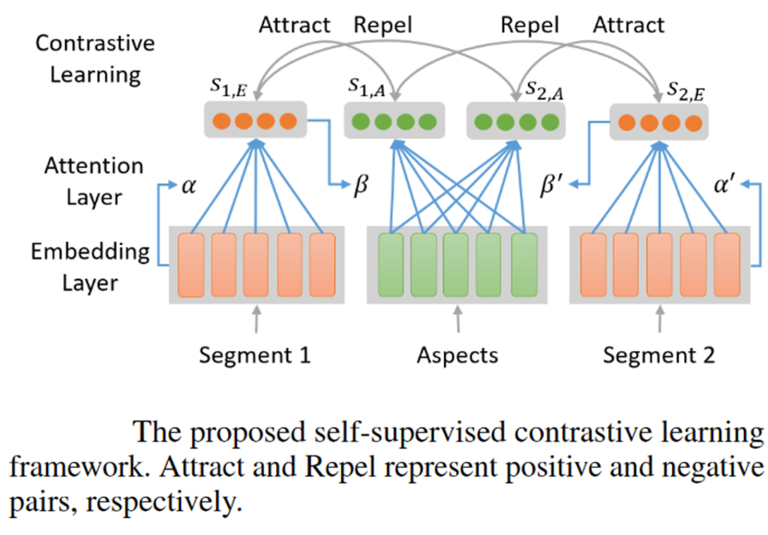

Energy-based Learning for Dialogue State Tracking

Energy-based learning improves dialogue state tracking by allowing variable dependencies to be incorporated explicitly. In this section, we look at the key components of energy-based learning and how each contributes to more accurate and efficient tracking.

The Feature Function: Transforming Raw Data into a Distributed Representation

The first crucial component of energy-based learning is the feature function. This function takes raw data, such as user utterances and contextual information, and transforms it into a distributed representation. By extracting relevant features, the feature function creates a compact yet informative representation, enabling the model to capture the essential characteristics of the dialogue state.
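As a minimal sketch of a feature function, the code below averages word embeddings to turn an utterance into a fixed-size dense vector. The three-dimensional `EMBEDDINGS` table is hypothetical, and mean pooling is only a stand-in: in an energy-based tracker this function would normally be a learned neural encoder.

```python
# Hypothetical 3-dimensional word embeddings; a real tracker would learn these
# or take them from a pretrained encoder.
EMBEDDINGS = {
    "cheap": [0.9, 0.1, 0.0],
    "hotel": [0.0, 0.8, 0.2],
    "north": [0.1, 0.0, 0.9],
}
DIM = 3


def feature_function(utterance):
    """Map raw text to a fixed-size dense vector by averaging known word embeddings."""
    vectors = [EMBEDDINGS[token] for token in utterance.lower().split() if token in EMBEDDINGS]
    if not vectors:
        return [0.0] * DIM  # no known words: fall back to the zero vector
    return [sum(column) / len(vectors) for column in zip(*vectors)]


print(feature_function("I need a cheap hotel"))
```

Whatever the utterance length, the output has the same dimensionality, which is what lets a downstream energy function consume it.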

The Energy Function: Capturing Variable Dependencies

The energy function plays a pivotal role in energy-based learning for dialogue state tracking. It captures the complex relationships and dependencies between variables, such as dialogue slots and their possible values. The energy function assigns low energy values to valid combinations of variables, indicating their compatibility and likelihood within the dialogue context.

Furthermore, the energy function ensures that incompatible or invalid combinations receive high energy values. This aspect allows the model to discern and penalize unlikely or incorrect dialogue states, leading to more accurate and contextually grounded predictions.
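The following sketch shows an energy function over a joint dialogue state with two slots. It assumes the energy decomposes into per-slot terms (standing in for scores from a feature network) plus a pairwise term that penalizes incompatible combinations; the slot names, values, and numbers are hypothetical. Comparing the joint minimum with slot-by-slot minima shows how the pairwise term changes the prediction.

```python
from itertools import product

# Hypothetical per-slot energies (lower = better match to the dialogue so far),
# standing in for scores produced by a feature network.
UNARY = {
    "hotel-area": {"north": 0.2, "centre": 0.3},
    "restaurant-area": {"north": 0.6, "centre": 0.1},
}


def pairwise(hotel_area, restaurant_area):
    # Hypothetical dependency: mismatched areas add energy, so compatible
    # combinations are preferred.
    return 0.0 if hotel_area == restaurant_area else 1.0


def energy(state):
    hotel_area, restaurant_area = state
    return (UNARY["hotel-area"][hotel_area]
            + UNARY["restaurant-area"][restaurant_area]
            + pairwise(hotel_area, restaurant_area))


def joint_argmin():
    # Minimize the energy over all joint assignments of both slots.
    return min(product(UNARY["hotel-area"], UNARY["restaurant-area"]), key=energy)


def independent_argmin():
    # Baseline: choose each slot on its own, ignoring the pairwise term.
    return tuple(min(scores, key=scores.get) for scores in UNARY.values())


print(independent_argmin())  # ('north', 'centre')
print(joint_argmin())        # ('centre', 'centre')
```

Slot by slot, the tracker pairs a northern hotel with a central restaurant; the joint minimum moves the hotel to the centre because the mismatch penalty outweighs the small difference in the hotel's own score.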

Deep Learning Architectures: Structured Prediction Energy Networks (SPENs)

In recent years, deep learning architectures, such as Structured Prediction Energy Networks (SPENs), have gained prominence in implementing energy-based learning for dialogue state tracking. SPENs leverage the power of deep neural networks to model the complex dependencies between variables and capture intricate dialogue patterns.

SPENs integrate the feature function and energy function into a unified framework, allowing for seamless optimization and learning. By leveraging the expressive capabilities of deep learning, SPENs enable dialogue state trackers to handle large-scale and intricate dialogue datasets across multiple domains.

The strength of SPENs lies in their ability to jointly optimize the feature and energy functions, leading to improved performance and robustness in dialogue state tracking tasks.
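SPENs make inference tractable by relaxing discrete labels to the interval [0, 1] and minimizing the energy by gradient descent. The sketch below imitates that idea in plain Python under simplifying assumptions: a hypothetical quadratic energy with a hand-derived gradient stands in for the deep energy network (whose gradient would come from automatic differentiation), projected gradient steps keep the relaxed labels inside [0, 1], and a 0.5 threshold rounds the result.

```python
def energy(y, u, P):
    """Hypothetical energy E(y) = -u.y + 0.5 * y^T P y over relaxed labels y in [0, 1]^L."""
    n = len(y)
    linear = -sum(u[i] * y[i] for i in range(n))
    quadratic = 0.5 * sum(P[i][j] * y[i] * y[j] for i in range(n) for j in range(n))
    return linear + quadratic


def grad(y, u, P):
    # dE/dy_i = -u_i + sum_j P_ij * y_j (valid because P is symmetric).
    n = len(y)
    return [-u[i] + sum(P[i][j] * y[j] for j in range(n)) for i in range(n)]


def relaxed_inference(u, P, steps=200, lr=0.1):
    y = [0.5] * len(u)  # start in the middle of the [0, 1] box
    for _ in range(steps):
        g = grad(y, u, P)
        # Projected gradient step: move downhill, then clip back into [0, 1].
        y = [min(1.0, max(0.0, yi - lr * gi)) for yi, gi in zip(y, g)]
    return y


def round_labels(y, threshold=0.5):
    return [int(yi >= threshold) for yi in y]


# Labels 0 and 1 are individually attractive but penalized when both are on.
u = [1.0, 0.8, 0.9]
P = [[0.0, 2.0, 0.0],
     [2.0, 0.0, 0.0],
     [0.0, 0.0, 0.0]]
y = relaxed_inference(u, P)
print(round_labels(y))  # [1, 0, 1]
```

Because the optimizer only needs gradients of the energy with respect to the labels, the same loop works for any differentiable energy, which is what lets SPENs use arbitrary deep architectures.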

By explicitly considering variable dependencies, energy-based learning helps dialogue state trackers achieve higher accuracy and better contextual understanding, which in turn lets dialogue systems hold more natural and intuitive conversations with users.

Comparing Feature-based and Energy-based Dialogue State Tracking

| Criteria | Feature-based Dialogue State Tracking | Energy-based Dialogue State Tracking |
|---|---|---|
| Handling Variable Dependencies | Limited capacity to handle variable dependencies | Explicitly captures variable dependencies |
| Contextual Understanding | May lack contextual understanding | Contextually grounded predictions |
| Robustness | May struggle with complex dialogue patterns | Improved performance in complex dialogue scenarios |
| Training Efficiency | Efficient training process | Joint optimization of feature and energy functions; typically costlier, since training involves energy minimization over outputs |

Applications and Benefits of Energy-based Neural Networks

Energy-based neural networks offer a wide range of applications in structured prediction tasks, making them a valuable tool in the field of machine learning. These networks have demonstrated remarkable success in tackling multi-label classification problems, particularly when it comes to modeling high-arity interactions within the data.

The key advantage of energy-based neural networks lies in their ability to utilize deep architectures, which enable the learning of representations for both the input and output variables. By leveraging deep learning techniques, these networks can uncover complex patterns and relationships within the data, leading to more accurate predictions and enhanced performance.
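One way to picture how such an energy captures high-arity interactions is a local-plus-global decomposition, loosely modelled on the multi-label SPEN energy: per-label terms scored from the input, plus a label-only term computed from the whole label vector. In the sketch below, `unary` stands in for per-label scores from a feature network, and `C1`, `b1`, `c2` are hypothetical hand-set weights rather than learned ones. Exact enumeration over all 2^L label vectors is used only because L = 3.

```python
import math
from itertools import product


def softplus(z):
    # Numerically stable log(1 + exp(z)).
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def local_energy(y, unary):
    # One term per label; a negative unary score makes switching that label on attractive.
    return sum(yi * si for yi, si in zip(y, unary))


def global_energy(y, C1, b1, c2):
    # Label-only term: each row of C1 reads the whole label vector, so a single
    # hidden unit can express an interaction among many labels at once.
    hidden = [softplus(sum(w * yi for w, yi in zip(row, y)) + b) for row, b in zip(C1, b1)]
    return sum(c * h for c, h in zip(c2, hidden))


def total_energy(y, unary, C1, b1, c2):
    return local_energy(y, unary) + global_energy(y, C1, b1, c2)


def exact_argmin(energy_fn, n_labels):
    # Brute force over all 2^L label vectors: fine for tiny L, intractable for large L.
    return min(product([0, 1], repeat=n_labels), key=energy_fn)


unary = [-1.0, -0.9, -0.8]                      # stand-in for per-label scores
C1, b1, c2 = [[5.0, 5.0, 5.0]], [-12.5], [2.0]  # hypothetical hand-set weights

print(exact_argmin(lambda y: local_energy(y, unary), 3))              # (1, 1, 1)
print(exact_argmin(lambda y: total_energy(y, unary, C1, b1, c2), 3))  # (1, 1, 0)
```

With the per-label terms alone, all three labels would be switched on; the global term, which only becomes large when all three are active together, moves the minimum to the first two labels.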

“Energy-based neural networks have revolutionized the field of structured prediction by providing a flexible framework that captures rich dependencies between variables.”

The applications of energy-based neural networks in structured prediction are vast and varied. Here are a few notable examples:

- Image Segmentation: Energy-based neural networks have been successfully applied to the task of image segmentation, where the objective is to assign a label to each pixel in an image. By incorporating dependencies between neighboring pixels, these networks can accurately segment complex images, a critical step in various computer vision applications.

- Entity Recognition: Energy-based neural networks have shown great promise in the field of natural language processing, particularly in entity recognition tasks. By considering the contextual relationship between words and incorporating relevant features, these networks can accurately identify and classify entities within a text, enabling more effective information extraction and understanding.

- Speech Recognition: Energy-based neural networks have also found success in speech recognition tasks, where the goal is to transcribe spoken language into written text. By capturing dependencies between phonemes and considering the temporal aspects of speech, these networks can achieve superior accuracy and robustness in recognizing and transcribing spoken words.
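For sequence tasks such as entity recognition, and for the temporal structure of speech, a common special case is an energy that decomposes into per-position terms plus terms on adjacent labels (a linear-chain energy, as in a CRF). Its exact minimum can be found by dynamic programming (min-sum Viterbi). The sketch below assumes that decomposition, which the more general deep energies discussed here do not require; the BIO tag set, the scores, and the penalty of 10 for an `I` tag directly after an `O` tag are hypothetical.

```python
def viterbi_min_energy(unary, transition):
    """Exact minimum-energy label sequence for a linear-chain energy

    E(y) = sum_t unary[t][y_t] + sum_{t>0} transition[y_{t-1}][y_t]
    """
    n_labels = len(unary[0])
    best = list(unary[0])  # best[k]: lowest energy of any prefix ending in label k
    backpointers = []
    for t in range(1, len(unary)):
        new_best, pointers = [], []
        for k in range(n_labels):
            prev = min(range(n_labels), key=lambda j: best[j] + transition[j][k])
            new_best.append(best[prev] + transition[prev][k] + unary[t][k])
            pointers.append(prev)
        best = new_best
        backpointers.append(pointers)
    # Trace back from the lowest-energy final label.
    last = min(range(n_labels), key=lambda k: best[k])
    path = [last]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, best[last]


O, B, I = 0, 1, 2  # hypothetical BIO tag indices
unary = [[0.1, 0.3, 0.6],   # token 1
         [0.6, 0.9, 0.2],   # token 2
         [0.1, 0.8, 0.7]]   # token 3
transition = [[0.0, 0.0, 10.0],  # O -> I is heavily penalized
              [0.0, 0.0, 0.0],
              [0.0, 0.0, 0.0]]
path, total = viterbi_min_energy(unary, transition)
print(path)  # [1, 2, 0], i.e. B, I, O
```

Picking the lowest-energy tag at each position independently would give O, I, O, which violates the O-to-I rule; the chain energy yields B, I, O instead.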

The benefits of utilizing energy-based neural networks for structured prediction are evident. These networks provide a flexible and powerful framework for capturing and modeling complex dependencies within data, leading to improved predictive performance and enhanced generalizability across domains.

Energy-based neural networks also have the potential to handle high-dimensional, noisy data, making them suitable for real-world applications in dynamic and complex environments. They make it straightforward to incorporate domain knowledge and prior beliefs, for instance as additional energy terms, allowing for more informed predictions in specialized domains.
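Because energies add, one simple way to inject domain knowledge is to sum a learned energy with a hand-written penalty term. The sketch assumes a hypothetical learned energy over three binary labels and a hypothetical rule that labels 1 and 2 are mutually exclusive; the rule is soft (a finite penalty), so sufficiently strong evidence could still override it.

```python
from itertools import product


def learned_energy(y):
    # Hypothetical stand-in for a trained network's energy over three binary labels.
    scores = [-1.0, -0.6, -0.5]
    return sum(s * yi for s, yi in zip(scores, y))


def knowledge_energy(y, weight=5.0):
    # Hypothetical domain rule: labels 1 and 2 are mutually exclusive.
    # Violations add a large penalty instead of being forbidden outright.
    return weight * y[1] * y[2]


def predict(energy_fn, n_labels=3):
    # Exhaustive minimum over all binary label vectors (fine for tiny n_labels).
    return min(product([0, 1], repeat=n_labels), key=energy_fn)


print(predict(learned_energy))                                        # (1, 1, 1)
print(predict(lambda y: learned_energy(y) + knowledge_energy(y)))     # (1, 1, 0)
```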

Key benefits of energy-based neural networks:

- Accurate modeling of variable dependencies.

- Flexible framework adaptable to various structured prediction problems.

- Ability to capture high-arity interactions within the data.

- Incorporation of domain knowledge and prior beliefs.

- Robustness to noise and high-dimensional data.

As energy-based neural networks continue to advance, their potential applications and benefits in structured prediction tasks are only expected to grow. These networks provide a powerful solution for complex problems, offering improved accuracy, flexibility, and robustness in various domains.

Conclusion

In conclusion, energy-based neural networks offer a powerful solution for structured prediction in machine learning. By explicitly capturing variable dependencies, these networks can improve the accuracy and generalizability of models.

The applications of energy-based neural networks extend to various domains, including dialogue state tracking and multi-label classification. In dialogue state tracking, these networks excel at modeling variable associations in multi-domain conversations, enhancing the understanding of complex interactions.

Furthermore, energy-based neural networks have proven their capabilities in multi-label classification tasks, where they can effectively model high-arity interactions. Their ability to learn both the input and output representations through deep architectures makes them versatile and adaptable to different problem domains.

As machine learning continues to advance, energy-based neural networks are likely to play an important role. Their ability to explicitly capture variable dependencies sets them apart as a robust approach to structured prediction, paving the way for more accurate and reliable models in many fields.

FAQ

What are energy-based neural networks?

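Energy-based neural networks are machine learning models for structured prediction that assign a scalar energy to each combination of input and output, with lower energy for more compatible combinations; prediction means finding the output with the lowest energy. Because the energy scores whole output configurations, these networks can incorporate dependencies between variables, and they have applications in many domains.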

How do energy-based neural networks improve dialogue state tracking in multiple domains?

Energy-based neural networks capture variable dependencies, allowing for more accurate dialogue state tracking in multiple domains. They consider the relationships between dialogue slot variables, leading to better results.

How can variable associations be analyzed in multi-domain dialogue?

Pearson's chi-squared test can be used to check whether pairs of dialogue variables are significantly associated, and Cramér's V measures how strong each association is on a scale from 0 to 1. Together they can confirm dependencies between variables in multi-domain dialogue.

What are the key components of energy-based learning for dialogue state tracking?

The key components of energy-based learning for dialogue state tracking are the feature function, which transforms raw data, and the energy function, which captures variable dependencies and assigns low energy values to valid variable combinations.

What are the applications and benefits of energy-based neural networks for structured prediction?

Energy-based neural networks have a wide range of applications in structured prediction tasks. They excel in modeling high-arity interactions and can learn representations of both input and output variables. Their benefits include superior performance in benchmark tasks.

What are the implications of energy-based neural networks in the field of machine learning?

Energy-based neural networks offer a powerful solution for structured prediction in machine learning. By explicitly capturing variable dependencies, these networks can improve the accuracy and generalizability of models, leading to advancements in dialogue state tracking and multi-label classification, among other domains.