Artificial intelligence (AI) is transforming industries with advanced analytics, automation, and personalized experiences. To unlock the full potential of AI technologies, organizations must optimize their infrastructure for AI workloads. One part of that is using high-speed networks to move data efficiently between AI systems, so that compute resources are not left waiting on data.

AI data transmission requires high-speed networks that can handle the massive volume of data generated by AI workloads. These networks ensure that data flows smoothly and quickly between different components of the AI ecosystem, including data centers, edge devices, and cloud platforms. By optimizing the infrastructure for AI workloads, businesses can enhance the speed, accuracy, and reliability of their AI systems.
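To make the relationship between data volume and network speed concrete, here is a minimal back-of-the-envelope sketch. The function name `transfer_seconds`, the 1 TB dataset, and the 80% usable-bandwidth figure are illustrative assumptions, not measurements from any real deployment.

```python
def transfer_seconds(dataset_gb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Estimate the seconds needed to move `dataset_gb` gigabytes over a link.

    `link_gbps` is the nominal link speed in gigabits per second.
    `efficiency` is an assumed fraction of that speed usable for payload.
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link_gbps must be > 0 and efficiency must be in (0, 1]")
    dataset_gigabits = dataset_gb * 8  # 1 byte = 8 bits
    return dataset_gigabits / (link_gbps * efficiency)


# Compare a hypothetical 1 TB (1000 GB) training set across three link speeds.
for gbps in (1, 10, 100):
    minutes = transfer_seconds(1000, gbps) / 60
    print(f"{gbps:>3} Gbps link: about {minutes:.1f} minutes")
```

Under these assumptions, each tenfold increase in link speed cuts the transfer time by a factor of ten, which is why network capacity can become the bottleneck before compute does.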

Key Takeaways:

- Optimizing infrastructure for AI workloads includes optimizing data transmission with high-speed networks.

- High-speed networks enable seamless communication between AI systems and maximize performance.

- Efficient data transmission is crucial for handling the massive volume of data generated by AI workloads.

- By optimizing infrastructure for AI workloads, businesses can enhance the speed, accuracy, and reliability of their AI systems.

- Investing in high-speed networks is essential for unleashing the full potential of AI technologies.

Investing in High-Performance Computing Systems for AI Workloads

When it comes to optimizing AI workloads, investing in high-performance computing systems is essential. These systems are specifically designed to accelerate model training and inference tasks, enabling businesses to extract insights faster and make informed decisions. Two popular AI accelerators in the market are Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs).

GPUs are known for their exceptional parallel processing capabilities, making them ideal for AI applications. They excel at handling complex mathematical computations, a fundamental requirement for AI algorithms. Equipped with thousands of cores, GPUs can perform calculations in parallel, significantly reducing the time required for model training and inference.

TPUs, on the other hand, are Google’s custom-built AI accelerators, specifically designed to optimize machine learning workloads. They are highly efficient at handling matrix operations, making them particularly suitable for deep learning tasks. TPUs deliver impressive performance gains by offering specialized hardware acceleration for AI workloads.

Both GPUs and TPUs offer substantial speedups compared to traditional Central Processing Units (CPUs). Their ability to handle large amounts of data in parallel allows for more efficient computation, resulting in faster time-to-insight and increased productivity in AI-driven workflows.

“Investing in high-performance computing systems, such as GPUs and TPUs, is an intelligent move for organizations looking to harness the power of AI. These accelerators provide the computational power required to tackle complex AI algorithms, unlocking new possibilities and driving innovation.” – AI Solutions Consultant

By integrating high-performance computing systems, businesses can take full advantage of cutting-edge AI technologies. These systems enable faster model training and inference, empowering organizations to extract valuable insights from data and deliver impactful AI-driven solutions.

The Importance of High-Performance Computing Systems for AI Workloads

High-performance computing systems play a crucial role in AI workloads due to their ability to handle the immense computational demands of AI algorithms. They enable the processing of large datasets and complex calculations required for training and inference, ensuring efficient and accurate results.

Furthermore, the advancements in GPU and TPU technologies have made high-performance computing systems more accessible and affordable. Organizations of all sizes can now leverage these powerful hardware accelerators to supercharge their AI capabilities and gain a competitive edge in their respective industries.

As businesses continue to embrace AI-driven solutions, investing in high-performance computing systems equipped with AI accelerators like GPUs and TPUs becomes not only a strategic advantage but a necessity to stay ahead in today’s fast-paced digital landscape.

Comparing GPUs and TPUs for AI Workloads

| GPU | TPU |
|---|---|
| Designed for parallel processing | Specialized hardware for matrix operations |
| Excellent performance for a wide range of AI tasks | Optimized for deep learning workloads |
| More widely available and supported by various frameworks | Custom-built for Google Cloud’s machine learning ecosystem |
| Ideal for developers and researchers experimenting with AI | Specially tailored for large-scale production deployments |

Both GPUs and TPUs have their unique strengths and are suitable for different AI use cases. GPUs offer versatility and broad compatibility with popular AI frameworks, making them a popular choice among developers and researchers. TPUs, on the other hand, are designed to deliver exceptional performance for Google Cloud’s machine learning ecosystem, making them an excellent choice for large-scale production deployments.

High-performance computing systems with AI accelerators like GPUs and TPUs are a strategic investment for organizations looking to unlock the full potential of AI technologies. They provide the computational power that AI workloads need, helping businesses gain a competitive advantage in today’s data-driven world.

Scalability with Cloud Platforms and Container Orchestration

Scalability is paramount for handling AI workloads that vary in complexity and demand over time. To optimize infrastructure for AI, businesses need flexible, elastic infrastructure in which compute, storage, and networking resources can be allocated dynamically based on workload requirements. This is where cloud platforms and container orchestration technologies come into play.

Cloud platforms like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud offer scalable resources that can be easily provisioned and managed. With cloud platforms, businesses can scale their AI infrastructure up or down based on demand, ensuring optimal performance without over-provisioning or underutilization.

Container orchestration technologies like Kubernetes provide a powerful framework for managing and scaling containerized applications. Containers allow for efficient resource utilization by leveraging lightweight, isolated environments. By using container orchestration platforms, businesses can easily scale their AI workloads by adding or removing containers as needed, ensuring that resources are utilized efficiently.
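As an illustration of how adding or removing containers can follow demand, the sketch below reproduces the core scaling rule documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count scaled by the ratio of the observed metric to its target, rounded up. The function name `desired_replicas` and the sample numbers are hypothetical, and the sketch deliberately omits the tolerance band, min/max bounds, and stabilization behavior that a real autoscaler applies.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Return ceil(current_replicas * current_metric / target_metric), at least 1.

    `current_metric` and `target_metric` must use the same unit,
    for example average CPU utilization in percent.
    """
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    return max(1, math.ceil(current_replicas * current_metric / target_metric))


# Four inference pods averaging 90% utilization against a 60% target: scale out.
print(desired_replicas(4, 90, 60))
# The same pods averaging 30% utilization: scale in.
print(desired_replicas(4, 30, 60))
```

Rounding up rather than down is a conservative choice: it favors having slightly too much capacity over missing the target.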

Benefits of Scalability with Cloud Platforms and Container Orchestration

- Efficient Resource Allocation: Cloud platforms and container orchestration technologies allow businesses to dynamically allocate resources, ensuring that AI workloads have the necessary compute power, storage, and networking capabilities.

- Cost Optimization: With scalable resources, businesses can avoid over-provisioning and paying for unused capacity. This helps optimize costs by only utilizing the resources that are needed, reducing infrastructure expenses.

- Improved Performance: Scalability ensures that AI workloads can handle spikes in demand without experiencing performance degradation. This results in faster processing times, quicker time-to-insights, and improved overall user experience.

- Flexibility and Elasticity: Scalable resources provide the flexibility to scale up or down rapidly, enabling businesses to adapt to changing AI workload requirements. This elasticity allows for greater agility and responsiveness, ensuring that infrastructure is aligned with business needs.

Scalability with cloud platforms and container orchestration technologies is crucial for optimizing infrastructure for AI workloads. By leveraging these technologies, businesses can ensure efficient resource allocation, cost optimization, improved performance, flexibility, and elasticity. This enables organizations to handle AI workloads effectively and deliver transformative AI-driven solutions.

With the right scalable infrastructure in place, businesses can unlock the full potential of their AI technologies. The ability to dynamically allocate resources based on workload demands ensures optimal performance and cost-efficiency. By embracing scalability with cloud platforms and container orchestration, businesses can take their AI capabilities to new heights.

Efficient Data Processing Pipelines for AI Workflows

Leveraging Distributed Storage and Processing Frameworks

Efficient data processing pipelines are critical for AI workflows, particularly those dealing with large datasets. To optimize data processing, organizations can leverage distributed storage and processing frameworks such as Apache Hadoop, Apache Spark, or Dask. These frameworks enable accelerated data ingestion, transformation, and analysis, empowering businesses to derive valuable insights from their data.
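The processing model these frameworks share can be sketched in a few lines: split the data into partitions, process each partition independently, then merge the partial results. The example below is a toy word count using only the Python standard library. The names `map_partition` and `word_count` are hypothetical, and a thread pool stands in for a cluster purely to show the structure; it is not how Hadoop, Spark, or Dask are implemented, and threads in CPython do not give real CPU parallelism for this kind of work.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def map_partition(lines):
    """Map step: count words within a single partition."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def word_count(lines, n_partitions=4):
    """Partition the input, run the map step per partition, then reduce."""
    partitions = [lines[i::n_partitions] for i in range(n_partitions)]
    with ThreadPoolExecutor(max_workers=n_partitions) as pool:
        partials = list(pool.map(map_partition, partitions))
    # Reduce step: merge the per-partition counters into one result.
    return reduce(lambda left, right: left + right, partials, Counter())


logs = ["error disk full", "warning disk slow", "error network down"]
print(word_count(logs).most_common(2))
```

Because each partition is processed without reference to the others, the same structure scales from one machine to many once a real framework handles data placement and scheduling.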

Minimizing Latency and Improving Data Access Speeds

In addition to distributed storage and processing, in-memory data stores and caching mechanisms play a crucial role in optimizing data processing pipelines. In-memory stores, such as Redis or Memcached, keep data in main memory, which makes retrieval much faster than reading from disk. Caching layers, such as CDNs or proxy servers, further improve performance by keeping frequently accessed data closer to where it is consumed, minimizing latency and improving data access speeds.
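The effect of a cache can be shown with Python's built-in `functools.lru_cache`, which keeps recent results in process memory. This is a minimal single-process sketch, not a substitute for Redis or Memcached; `load_from_storage`, `get_features`, and the `BACKEND_CALLS` counter are hypothetical names used only to show how repeated lookups avoid the slow path.

```python
from functools import lru_cache

BACKEND_CALLS = {"count": 0}  # tracks how often the slow path is taken


def load_from_storage(key: str) -> str:
    """Stand-in for a slow read from disk or a remote service (assumption)."""
    BACKEND_CALLS["count"] += 1
    return f"features-for-{key}"


@lru_cache(maxsize=1024)
def get_features(key: str) -> str:
    """Serve from the in-memory cache when possible, else hit storage."""
    return load_from_storage(key)


for key in ["user-1", "user-2", "user-1", "user-1"]:
    get_features(key)

print(get_features.cache_info())  # hit and miss counters for the cache
print(BACKEND_CALLS["count"])     # number of slow reads actually performed
```

Four lookups here trigger only two slow reads, because the repeated key is served from memory. A shared cache applies the same idea across many processes and machines.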

Streamlining Data Pipelines with Workflow Orchestration

To ensure the efficient execution of data processing pipelines, organizations can utilize workflow orchestration tools like Apache Airflow or Luigi. These tools provide a centralized platform for managing and monitoring data workflows, facilitating seamless coordination between different stages of the pipeline. By streamlining the workflow, organizations can enhance the productivity, reliability, and scalability of their AI data processing.
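At the core of such tools is a dependency graph: each stage runs only after the stages it depends on have finished. The sketch below shows that idea with the standard-library `graphlib` module (Python 3.9 or later). It is not Airflow or Luigi code; `run_pipeline`, the stage names, and the `upstream` mapping are hypothetical, and real orchestrators add scheduling, retries, and monitoring on top.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def run_pipeline(tasks, upstream):
    """Run every task after all of its upstream tasks; return the order used.

    `tasks` maps a stage name to a zero-argument callable.
    `upstream` maps a stage name to the set of stages it depends on.
    """
    order = list(TopologicalSorter(upstream).static_order())
    for name in order:
        tasks[name]()
    return order


log = []
stage_names = ("ingest", "transform", "train", "evaluate")
# Each stage just records that it ran; real stages would do actual work.
tasks = {name: (lambda n=name: log.append(n)) for name in stage_names}
upstream = {
    "transform": {"ingest"},
    "train": {"transform"},
    "evaluate": {"train"},
}

print(run_pipeline(tasks, upstream))
```

Declaring dependencies rather than hard-coding an execution order is what lets an orchestrator run independent stages concurrently and restart only the stages downstream of a failure.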

Comparison of Distributed Storage and Processing Frameworks

| Framework | Key Features | Use Cases |
|---|---|---|
| Apache Hadoop | Distributed file system (HDFS); MapReduce processing model; fault-tolerant and scalable; batch processing | Large-scale batch processing; data-intensive analytics; log processing |
| Apache Spark | In-memory distributed processing; interactive analytics; real-time streaming; graph processing; machine learning | Real-time analytics; iterative machine learning; stream processing |
| Dask | Dynamic task scheduling; parallel computing; integration with Python ecosystem; scalable data processing | Scalable data manipulation; large-scale machine learning; interactive computing |

Improving Scalability and Performance

Incorporating efficient data processing pipelines into AI workflows not only enhances scalability but also boosts overall performance. By leveraging distributed storage and processing frameworks, utilizing in-memory databases and caching mechanisms, and streamlining data pipelines with workflow orchestration tools, organizations can unlock the full potential of their data and accelerate the development and deployment of AI-driven solutions.

Parallelizing AI Algorithms for Faster Time-to-Insight

Parallelizing AI algorithms is a powerful technique that accelerates model training and inference, reducing time-to-insight and enabling faster decision-making. By distributing computation tasks across multiple compute nodes in a cluster, organizations can leverage the power of distributed computing to handle complex AI workloads more efficiently. This approach utilizes the collective resources of the cluster, maximizing utilization and significantly improving performance.

Frameworks like TensorFlow, PyTorch, and Apache Spark MLlib provide robust support for distributed computing paradigms, making it easier to parallelize AI algorithms and harness the power of distributed computing. These frameworks enable seamless integration with distributed computing platforms, allowing organizations to scale their AI workloads across multiple machines.
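One common way to distribute training is data parallelism: each worker computes gradients on its own shard of the data, the gradients are averaged, and every worker applies the same update. The pure-Python sketch below shows this for a one-parameter linear model. It does not use TensorFlow, PyTorch, or Spark APIs; `gradient` and `data_parallel_step` are hypothetical names, the shards are processed in a plain loop rather than on separate machines, and equal-sized shards are assumed so that the averaged gradient matches the full-batch gradient.

```python
def gradient(w, shard):
    """Gradient of mean squared error for the model y = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def data_parallel_step(w, shards, lr=0.01):
    """One training step: per-shard gradients, averaged, then applied."""
    # In a real cluster, each gradient would be computed on a different worker.
    grads = [gradient(w, shard) for shard in shards]
    # Stand-in for the all-reduce step that averages gradients across workers.
    avg_grad = sum(grads) / len(grads)
    return w - lr * avg_grad


# Toy data generated from y = 3x, split into two equal shards.
shards = [
    [(x, 3.0 * x) for x in range(1, 5)],
    [(x, 3.0 * x) for x in range(5, 9)],
]

w = 0.0
for _ in range(100):
    w = data_parallel_step(w, shards)
print(round(w, 4))
```

The communication cost of the averaging step is what ties this technique back to network performance: the more workers involved, the more gradient traffic each step generates.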

Benefits of Parallelizing AI Algorithms:

- **Faster Training and Inference**: Parallelizing AI algorithms reduces computational bottlenecks, enabling faster model training and inference. This is particularly beneficial when working with large datasets or complex deep learning models.

- **Scalability**: By distributing computation tasks, parallelization facilitates scalability, allowing organizations to handle increasing volumes of data and growing AI workloads without sacrificing performance.

- **Optimized Resource Utilization**: Parallelization ensures efficient utilization of computational resources within a cluster. Tasks are distributed across multiple machines, making the most effective use of available CPUs, GPUs, and memory.

- **Improved Fault Tolerance**: Distributed computing frameworks such as Apache Spark, on which MLlib runs, provide built-in fault tolerance mechanisms, for example by recomputing lost data partitions, so that jobs can recover from the failure of individual workers.
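How much faster a parallelized workload runs depends on how much of it can actually be parallelized. Amdahl's law gives a standard upper-bound estimate: with a parallelizable fraction p and n workers, the speedup is 1 / ((1 - p) + p / n). The sketch below evaluates it; the function name `amdahl_speedup` and the 90% parallel fraction are illustrative assumptions, and the formula ignores communication overhead.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Upper bound on speedup when only part of a job can run in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0 or workers < 1:
        raise ValueError("parallel_fraction must be in [0, 1] and workers >= 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


# A job that is 90% parallelizable, run on a growing number of workers.
for n in (2, 8, 64):
    print(f"{n:>2} workers: at most {amdahl_speedup(0.9, n):.2f}x faster")
```

With 10% of the work serial, no number of workers can push the speedup past 10x, which is why reducing serial steps such as data loading matters as much as adding nodes.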

“Parallelizing AI algorithms across a distributed computing cluster empowers organizations to unlock the full potential of their AI workloads, delivering faster insights and enabling data-driven decision-making at scale.” – Industry Expert

With the ability to parallelize AI algorithms using popular frameworks like TensorFlow, PyTorch, and Apache Spark MLlib, organizations can expedite their AI workflows and achieve faster time-to-insight. By harnessing the computational power of distributed computing, businesses can stay ahead in the competitive landscape and unlock new possibilities for innovation.

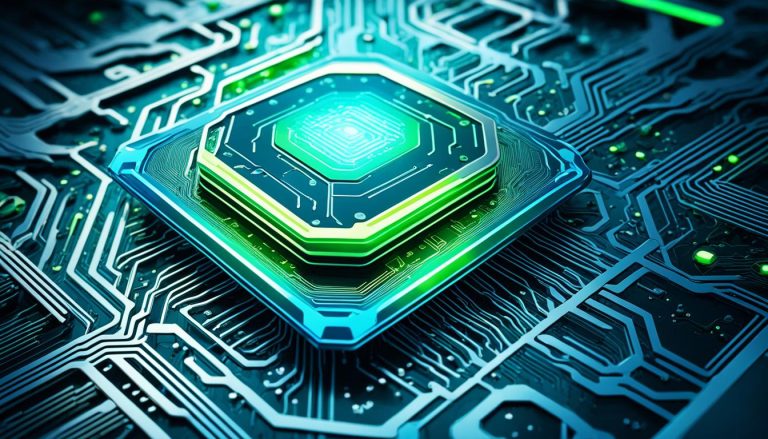

Optimized Hardware Acceleration for Specific AI Tasks

When it comes to maximizing performance and energy efficiency for specific AI tasks, hardware accelerators play a crucial role. Field-programmable gate arrays (FPGAs) and application-specific integrated circuits (ASICs) are two common choices for optimizing computational workloads; the TPUs discussed earlier are themselves ASICs. By offloading well-defined tasks from general-purpose CPUs or GPUs, these specialized processors can deliver significant speedups.

One of the key advantages of hardware accelerators like FPGAs and ASICs is how well they suit inferencing, natural language processing, and image recognition tasks. These processors can be designed or configured around the specific requirements of these AI workloads, enabling faster processing and better energy efficiency.

For inferencing, where AI models make predictions based on input data, hardware accelerators can rapidly process vast amounts of information, enabling real-time decision-making and responsiveness. This capability is critical across various industries, such as finance, healthcare, and autonomous vehicles.

Natural language processing (NLP) is another area where hardware acceleration shines. With the increasing demand for voice assistants, chatbots, and language translation services, efficient NLP processing is essential. FPGAs and ASICs can handle the complex algorithms and intensive linguistic computations required for NLP, enabling faster and more accurate responses.

Similarly, image recognition tasks, including object detection, facial recognition, and image classification, benefit greatly from optimized hardware acceleration. FPGAs and ASICs excel in extracting meaningful features from images, enabling quick and accurate analysis across industries like retail, security, and healthcare.

Unlocking the Full Potential of Hardware Accelerators

“Hardware accelerators, such as FPGAs and ASICs, play a critical role in optimizing AI workloads by delivering superior performance and energy efficiency.”

As organizations continue to harness the power of AI, integrating hardware accelerators into their infrastructure becomes crucial. By leveraging the capabilities of FPGAs and ASICs, businesses can unlock the full potential of their AI systems. From accelerating inferencing and NLP tasks to enhancing image recognition capabilities, hardware accelerators provide the necessary performance boost that can drive transformative outcomes.

It’s important to note that hardware accelerators are not a one-size-fits-all solution. The selection of the right accelerator depends on specific requirements, workload characteristics, and cost considerations. Proper evaluation and understanding of the trade-offs between FPGAs and ASICs are paramount to make informed decisions.

Additionally, integrating hardware accelerators into AI systems requires expertise and proper implementation. Collaborating with hardware and software specialists and utilizing optimized frameworks and libraries can help organizations harness the full potential of these accelerators.

In conclusion, hardware accelerators, such as FPGAs and ASICs, are essential components for optimizing AI tasks like inferencing, natural language processing, and image recognition. These specialized processors deliver superior performance and energy efficiency, enabling organizations to unlock the full potential of their AI workloads.

Conclusion

Optimizing infrastructure for AI workloads is a multifaceted endeavor that requires a holistic approach encompassing hardware, software, and architectural considerations. By embracing high-performance computing systems, organizations can ensure efficient execution of AI tasks, unleashing the full potential of AI technologies.

Scalable resources provided by cloud platforms and container orchestration enable businesses to dynamically allocate computing power and storage, accommodating growing AI workloads without compromising performance. This scalability fosters innovation and drives business growth.

Accelerated data processing pipelines, parallel computing frameworks, and optimized hardware accelerators further enhance AI workloads, leading to faster time-to-insight and more accurate results. Leveraging these technologies empowers organizations to unlock new insights, deliver transformative solutions, and stay ahead in today’s competitive landscape.

By implementing comprehensive monitoring and optimization practices, organizations can continuously fine-tune their AI infrastructure for efficiency and performance. This ongoing optimization helps businesses fully harness the power of AI technologies, drive innovation, and achieve their objectives.

FAQ

What is AI data transmission and how does it relate to high-speed networks?

AI data transmission refers to the transfer of large volumes of data required for AI workloads. High-speed networks enable fast and efficient data transmission, ensuring that data is processed and analyzed in a timely manner.

Why is investing in high-performance computing systems important for AI workloads?

High-performance computing systems, such as GPUs and TPUs, are specifically designed to handle the complex mathematical computations involved in AI algorithms. Investing in these systems accelerates model training and inference tasks, providing significant speedups compared to traditional CPUs.

How can scalability with cloud platforms and container orchestration benefit AI workloads?

Scalability is crucial for handling AI workloads that vary in complexity and demand over time. Cloud platforms and container orchestration technologies provide scalable, elastic resources that dynamically allocate compute, storage, and networking resources based on workload requirements. This ensures optimal performance without over-provisioning or underutilization.

What are efficient data processing pipelines, and why are they important for AI workflows?

Efficient data processing pipelines involve the use of distributed storage and processing frameworks like Apache Hadoop, Spark, or Dask to accelerate data ingestion, transformation, and analysis. Additionally, using in-memory databases and caching mechanisms minimizes latency and improves data access speeds, which are crucial for AI workflows dealing with large datasets.

How can parallelizing AI algorithms accelerate time-to-insight?

By parallelizing AI algorithms across multiple compute nodes, computation tasks are distributed across a cluster of machines. This accelerates model training and inference and reduces the time required to gain insights from AI models. Frameworks like TensorFlow, PyTorch, and Apache Spark MLlib support distributed computing paradigms, enabling efficient utilization of resources.

What are hardware accelerators and how do they optimize performance for AI tasks?

Hardware accelerators, such as FPGAs and ASICs, are specialized processors that optimize performance and energy efficiency for specific AI tasks. They offload computational workloads from general-purpose CPUs or GPUs, delivering significant speedups for tasks like inferencing, natural language processing, and image recognition.

How can optimizing infrastructure for AI workloads unleash the full potential of AI technologies?

Optimizing infrastructure for AI workloads involves a holistic approach encompassing hardware, software, and architectural considerations. By embracing high-performance computing systems, scalable resources, accelerated data processing, distributed computing paradigms, hardware acceleration, optimized networking infrastructure, and continuous monitoring and optimization practices, organizations can unlock new insights, deliver transformative AI-driven solutions, and drive innovation in today’s competitive landscape.