As the use of artificial intelligence (AI) and edge computing continues to grow, the demand for efficient cooling strategies for edge AI devices is increasing. Edge AI devices enable computation near the data source, enhancing responsiveness, security, scalability, and cost efficiency. However, these devices generate heat that can affect their performance and reliability. In this section, we will explore the importance of cooling for edge AI devices and discuss various cooling strategies that can ensure optimal neural network performance and reliability.

Key Takeaways:

- Efficient cooling strategies are crucial for optimizing the performance and reliability of edge AI devices.

- Edge AI devices enable computation near the data source, improving responsiveness, security, scalability, and cost efficiency.

- Heat generated by edge AI devices can impact their performance and reliability.

- Implementing effective cooling strategies is essential to ensure the successful deployment of neural networks at the edge.

- Various cooling strategies can mitigate heat and maintain optimal neural network performance.

The Impact of Concept Drift on Edge AI Systems

Concept drift, a phenomenon where the statistical properties of target data change over time, can have significant repercussions on the performance and reliability of edge AI systems. Noise and environmental changes introduce concept drift, creating a disparity between the trained dataset and the deployed environment. Consequently, this disparity leads to performance degradation and system failures in edge AI systems, jeopardizing their effectiveness and functionality.

To minimize the impact of concept drift, retraining neural network models becomes imperative. Triggered by concept drift detection, the retraining process bridges the gap between the trained dataset and the evolving environment. However, it poses a challenge due to the limited compute resources of edge devices. The computational cost and memory utilization required for frequent retraining can strain these devices, making it necessary to find efficient solutions that strike a balance between computational demands and accurate model performance.

One promising approach to mitigating concept drift in edge AI systems is the implementation of a sequential concept drift detection method. This method reduces computation cost and memory utilization by adapting the retraining process to focus on the most relevant data instances affected by concept drift. By prioritizing critical data points, the method ensures that edge AI systems remain accurate and efficient while minimizing the strain on compute resources.

Sequential Concept Drift Detection Method

The sequential concept drift detection method employs a step-by-step approach to identify and address concept drift in edge AI systems. Here is an overview of the process:

- Collect and label data instances during the deployment phase.

- Periodically evaluate the performance of the neural network model using collected instances.

- If a significant drop in performance is detected, trigger concept drift detection.

- Select a subset of the instances from the incoming data stream.

- Apply a drift detection algorithm to determine whether concept drift has occurred.

- If concept drift is detected, retrain the neural network model using the selected subset of instances.

- Update the trained model and continue processing the data stream.

This sequential concept drift detection method reduces the computational burden by focusing on detecting and addressing concept drift when necessary, rather than retraining the model continuously. By prioritizing critical instances, it optimizes the utilization of compute resources in edge AI systems.
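The steps above can be sketched in a few lines of Python. This is a minimal illustration, not the method's reference implementation: the class name `SequentialDriftMonitor`, its parameters, the toy threshold classifier, and the simple error-rate comparison used as the drift test are all assumptions made for the example, since the text does not name a specific detection algorithm.

```python
from collections import deque
from typing import Callable, List, Tuple

Instance = Tuple[float, int]          # (feature, label); toy 1-D data for illustration
Model = Callable[[float], int]


class SequentialDriftMonitor:
    """Hypothetical monitor: cheap accuracy check first, drift test only on demand."""

    def __init__(self, model: Model, retrain: Callable[[List[Instance]], Model],
                 window: int = 20, min_accuracy: float = 0.75,
                 subset_size: int = 20, baseline_error: float = 0.05,
                 drift_margin: float = 0.20) -> None:
        self.model = model
        self.retrain = retrain
        self.recent = deque(maxlen=window)   # 1 = correct prediction, 0 = error
        self.min_accuracy = min_accuracy
        self.subset_size = subset_size
        self.baseline_error = baseline_error
        self.drift_margin = drift_margin
        self.subset: List[Instance] = []
        self.subset_errors = 0
        self.collecting = False
        self.retrain_count = 0

    def observe(self, x: float, y: int) -> bool:
        """Process one labeled instance; return True if the model was retrained."""
        correct = int(self.model(x) == y)
        if not self.collecting:
            # Rolling evaluation: a full window below the accuracy floor triggers detection.
            self.recent.append(correct)
            window_full = len(self.recent) == self.recent.maxlen
            if window_full and sum(self.recent) / len(self.recent) < self.min_accuracy:
                self.collecting = True
            return False
        # Gather a bounded subset of the incoming stream.
        self.subset.append((x, y))
        self.subset_errors += 1 - correct
        if len(self.subset) < self.subset_size:
            return False
        # Stand-in drift test: compare the subset error rate to the assumed baseline.
        error_rate = self.subset_errors / len(self.subset)
        drifted = error_rate > self.baseline_error + self.drift_margin
        if drifted:
            # Retrain on the subset only, then swap in the updated model.
            self.model = self.retrain(self.subset)
            self.retrain_count += 1
        self.recent.clear()
        self.subset, self.subset_errors, self.collecting = [], 0, False
        return drifted


def threshold_model(flip: bool) -> Model:
    """Toy classifier: predicts 1 when x > 0.5, or the opposite if flip is set."""
    return lambda x: int((x > 0.5) != flip)


def retrain_on(subset: List[Instance]) -> Model:
    """Toy retraining: keep whichever orientation agrees with most of the subset."""
    agree = sum(int(x > 0.5) == y for x, y in subset)
    return threshold_model(flip=agree < len(subset) / 2)


xs = [(i % 10) / 10 for i in range(100)]
# The labeling rule inverts at index 40 to simulate an abrupt drift.
stream = [(x, int(x > 0.5)) for x in xs[:40]] + [(x, int(x <= 0.5)) for x in xs[40:]]
monitor = SequentialDriftMonitor(threshold_model(False), retrain_on)
retrained_at = [i for i, (x, y) in enumerate(stream) if monitor.observe(x, y)]
print(retrained_at, monitor.retrain_count)
```

In this toy run the monitor retrains once, shortly after the simulated drift. Memory stays bounded by the window and subset sizes, which is the property that matters on a resource-constrained edge device. A real deployment would likely replace the error-rate comparison with an established drift detector.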

Implementing effective concept drift detection and retraining strategies is crucial for maintaining the accuracy and efficiency of edge AI systems. By combining lightweight detection methods with retraining that works within the limited resources of edge devices, organizations can overcome the challenges posed by concept drift and ensure the long-term success of their edge AI deployments.

The Energy Consumption Challenge of Generative AI

Generative AI, driven by deep learning models, has revolutionized content creation but comes with significant energy consumption challenges. These models rely heavily on large datasets and powerful GPUs to generate content, which increases energy consumption. Because the power drawn by IT equipment is ultimately dissipated as heat, this heightened energy demand directly raises cooling requirements, particularly for small data centers and edge sites. With the rising adoption of generative AI and organizations ramping up their investments in AI technologies, addressing the energy consumption challenge becomes imperative.

In this section, we explore the energy consumption implications of generative AI and delve into cooling strategies to mitigate the heat generated by these systems. Efficient cooling solutions are vital to ensure optimal performance, prolong the lifespan of IT equipment, and minimize the environmental impact of generative AI.

The Impact of Generative AI on Energy Consumption

The energy consumption of generative AI models stems from the intensive computation they perform. These models train on large datasets and require substantial processing power, resulting in a significant carbon footprint. Demand for generative AI continues to grow across industries such as healthcare, entertainment, and design, and this surge in popularity creates a pressing need for sustainable energy consumption practices in AI-powered systems.

It is crucial to address the energy consumption challenge in generative AI to curb the strain on limited energy resources and mitigate the associated environmental impact. By implementing energy-efficient cooling strategies, we can optimize the performance of generative AI systems while minimizing the cooling demand and reducing energy costs.
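To make the link between power draw and cooling demand concrete, the sketch below converts an IT load into a heat load. It assumes that essentially all electrical power drawn by the equipment ends up as heat, and it uses the standard conversions of about 3.412 BTU/hr per watt and 12,000 BTU/hr per ton of refrigeration. The rack configuration is a hypothetical example, not a figure from any vendor.

```python
WATTS_TO_BTU_PER_HR = 3.412     # 1 W is approximately 3.412 BTU/hr
BTU_PER_HR_PER_TON = 12_000     # 1 ton of refrigeration = 12,000 BTU/hr


def cooling_load(it_power_watts: float) -> dict:
    """Estimate the heat a cooling system must remove for a given IT load."""
    btu_per_hr = it_power_watts * WATTS_TO_BTU_PER_HR
    return {
        "watts": it_power_watts,
        "btu_per_hr": btu_per_hr,
        "tons": btu_per_hr / BTU_PER_HR_PER_TON,
    }


# Hypothetical edge rack: four GPU servers drawing 2.5 kW each.
load = cooling_load(4 * 2500)
print(f"{load['btu_per_hr']:.0f} BTU/hr, {load['tons']:.2f} tons of cooling")
```

An estimate like this covers only the IT equipment. Lighting, power distribution losses, and heat entering from outside the room add to the real load.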

Cooling Strategies for Generative AI

To effectively manage the cooling demand of generative AI systems, organizations can adopt the following strategies:

- **Optimize Data Center Cooling Infrastructure**: Implementing advanced cooling techniques, such as liquid cooling or direct-to-chip cooling, can significantly enhance cooling efficiency while reducing energy consumption. These solutions dissipate heat more effectively, reducing the overall cooling demand for generative AI systems.

- **Efficient Airflow Management**: Proper airflow management is crucial for maintaining optimal operating temperatures in data centers and edge sites. By ensuring adequate airflow and utilizing hot aisle/cold aisle containment, organizations can minimize cooling requirements and achieve energy-efficient cooling.

- **Intelligent Temperature Monitoring**: Deploying intelligent temperature monitoring systems allows organizations to identify hotspots and thermal inefficiencies in real time. By proactively addressing temperature imbalances, cooling resources can be allocated more efficiently, reducing overall cooling demand.

- **Renewable Energy Integration**: Embracing renewable energy sources, such as solar or wind power, for data center operations can significantly reduce the environmental footprint of generative AI systems. Combining energy-efficient cooling strategies with renewable energy sources creates a more sustainable AI ecosystem.

- **Collaboration with Cooling Solution Providers**: Partnering with cooling solution providers, such as Schneider Electric or Vertiv, can offer access to innovative cooling technologies specifically designed to address the cooling demand of AI systems. These companies provide tailored cooling solutions, taking into account the unique requirements of generative AI workloads.

By adopting these cooling strategies, organizations can optimize energy consumption, achieve cost savings, and contribute to a greener AI ecosystem.
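As an illustration of the temperature monitoring strategy, the following sketch flags hotspots from a set of inlet temperature readings. The function name `find_hotspots`, the sensor names, the 35 °C absolute limit, and the z-score cutoff are hypothetical values chosen for the example. A real site would take its thresholds from the equipment specifications.

```python
from statistics import mean, pstdev
from typing import Dict, List


def find_hotspots(readings_c: Dict[str, float],
                  abs_limit_c: float = 35.0,
                  z_limit: float = 2.0) -> List[str]:
    """Return sensors that exceed an absolute limit or stand out from their peers."""
    temps = list(readings_c.values())
    avg = mean(temps)
    spread = pstdev(temps)          # population standard deviation of all sensors
    hot = []
    for sensor, temp in readings_c.items():
        too_hot = temp > abs_limit_c
        # Relative check: a sensor far above the site average suggests an airflow problem.
        outlier = spread > 0 and (temp - avg) / spread > z_limit
        if too_hot or outlier:
            hot.append(sensor)
    return sorted(hot)


# Hypothetical inlet temperatures in degrees Celsius.
readings = {
    "rack1-inlet": 24.0,
    "rack2-inlet": 25.0,
    "rack3-inlet": 24.5,
    "rack4-inlet": 38.0,
    "rack5-inlet": 23.5,
}
print(find_hotspots(readings))
```

Combining an absolute limit with a relative check is a design choice. The absolute limit protects the hardware, while the relative check can surface uneven airflow before any sensor reaches that limit.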

“Efficient cooling solutions are crucial to mitigate the energy consumption challenges posed by generative AI. By implementing sustainable cooling strategies, organizations can strike a balance between AI system performance and environmental responsibility.”

– Industry expert

Effective cooling is integral to ensure the smooth operation of generative AI systems while minimizing the impact on energy consumption and the environment. The next section will explore enabling efficient cooling for edge AI devices, emphasizing the importance of strategic partnerships in delivering optimal cooling solutions.

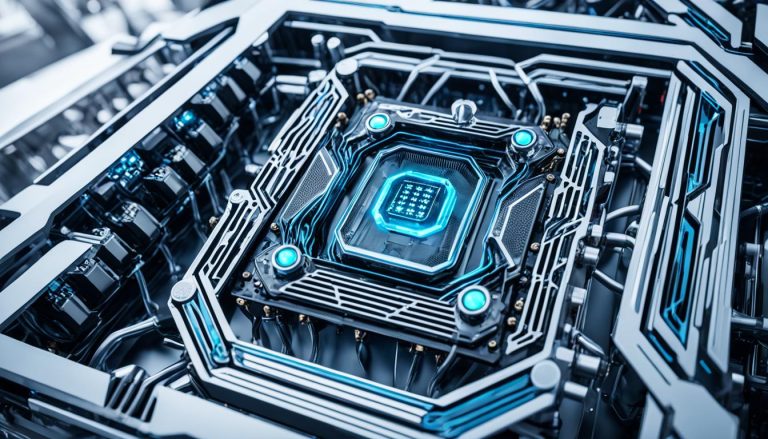

Enabling Efficient Cooling for Edge AI Devices

Effective cooling solutions are crucial for ensuring optimal performance and reliability of edge AI devices. In this section, we will explore the importance of efficient cooling and the role of partners in providing cooling solutions for edge AI devices.

Partnerships with cooling solution providers like Eaton can help organizations select IT-grade cooling solutions that lower energy costs and increase the lifespan of IT equipment. Eaton’s expanded cooling capabilities offer comprehensive solutions to meet the cooling requirements of edge AI devices, including those running generative AI workloads.

In-row precision cooling solutions and rack-mounted air conditioners are ideal options for small to midsize data centers, server rooms, edge computing data centers, and high-density zones. These cooling solutions provide efficient and targeted cooling, optimizing the performance of edge AI devices in various deployment scenarios.

The Importance of Efficient Cooling

Efficient cooling is essential for edge AI devices as they generate heat during intense computing operations. Without proper cooling, these devices can experience thermal throttling, reduced performance, and decreased lifespan. By implementing efficient cooling solutions, organizations can ensure that edge AI devices operate at their full potential, delivering reliable and high-performing neural network deployments.
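A rough way to see when thermal throttling sets in is the steady-state relation between chip temperature, ambient temperature, dissipated power, and the thermal resistance of the cooling path: T_chip = T_ambient + P × θ. The sketch below applies it. The 85 °C throttle point, the 30 W module, and both thermal resistance values are hypothetical numbers for illustration, not specifications of any real device.

```python
def steady_state_temp_c(ambient_c: float, power_w: float, theta_c_per_w: float) -> float:
    """Steady-state chip temperature for a given power and thermal resistance."""
    return ambient_c + power_w * theta_c_per_w


def max_sustained_power_w(ambient_c: float, throttle_c: float, theta_c_per_w: float) -> float:
    """Highest power the device can dissipate without reaching the throttle point."""
    return max(0.0, (throttle_c - ambient_c) / theta_c_per_w)


THROTTLE_C = 85.0   # hypothetical throttle threshold
AMBIENT_C = 45.0    # hypothetical temperature inside a sealed outdoor enclosure
POWER_W = 30.0      # hypothetical AI module under full inference load

passive = steady_state_temp_c(AMBIENT_C, POWER_W, theta_c_per_w=1.5)  # heatsink only
active = steady_state_temp_c(AMBIENT_C, POWER_W, theta_c_per_w=1.0)   # heatsink plus fan

print(f"passive: {passive:.0f} C, throttles: {passive > THROTTLE_C}")
print(f"active:  {active:.0f} C, throttles: {active > THROTTLE_C}")
print(f"passive power budget: {max_sustained_power_w(AMBIENT_C, THROTTLE_C, 1.5):.1f} W")
```

Under these assumed numbers the passively cooled module would exceed its throttle point at full load, while the lower thermal resistance of the actively cooled one leaves headroom. The model ignores transients, so it describes sustained workloads rather than short bursts.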

Partnerships in Providing Cooling Solutions

Cooling solution providers like Eaton play a significant role in enabling efficient cooling for edge AI devices. Through partnerships, organizations can draw on the expertise and experience of these providers to identify the most suitable cooling solutions for their specific edge AI deployments.

Eaton’s reference architectures for generative AI systems extend these cooling capabilities. The architectures describe cooling solutions tailored to the requirements of generative AI workloads, promoting efficient operation and minimizing cooling demand.

The Benefits of Efficient Cooling

Implementing efficient cooling for edge AI devices brings numerous benefits. Firstly, it reduces energy costs by optimizing cooling processes and ensuring energy-efficient operation. Secondly, efficient cooling extends the lifespan of IT equipment, reducing maintenance and replacement costs and contributing to a more sustainable IT infrastructure.

Moreover, efficient cooling solutions enable edge AI devices to deliver consistent performance, even under demanding workloads. This reliability is crucial for critical applications that require real-time processing and decision-making, such as autonomous vehicles, smart cities, and industrial automation.
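The energy-cost benefit can be estimated with power usage effectiveness (PUE), the ratio of total facility energy to IT equipment energy. The sketch below compares annual electricity cost at two PUE values. The 10 kW load, the PUE figures, and the electricity price are hypothetical. Attributing the whole PUE improvement to cooling is also an assumption, since PUE includes other overheads such as power distribution losses.

```python
HOURS_PER_YEAR = 8760


def annual_energy_cost(it_load_kw: float, pue: float, price_per_kwh: float) -> float:
    """Yearly electricity cost for a site running a constant IT load."""
    return it_load_kw * pue * HOURS_PER_YEAR * price_per_kwh


# Hypothetical edge site: 10 kW of IT load at 0.12 per kWh.
before = annual_energy_cost(10.0, pue=2.0, price_per_kwh=0.12)
after = annual_energy_cost(10.0, pue=1.5, price_per_kwh=0.12)
savings = before - after
print(f"before: {before:.0f}, after: {after:.0f}, savings: {savings:.0f} per year")
```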

Conclusion

Cooling strategies are essential for optimizing the performance and reliability of edge AI devices in neural network deployments. As discussed in this article, concept drift and the energy demands of generative AI both strain edge AI systems: the first requires lightweight detection and retraining, while the second raises the cooling load at edge sites. By implementing efficient cooling solutions, organizations can keep these systems operating at their full potential while reducing energy costs and increasing the lifespan of IT equipment.

As the demand for edge AI devices continues to grow, it is crucial to prioritize efficient cooling. By partnering with cooling solution providers like Eaton, organizations can select IT-grade cooling solutions that meet the specific needs of edge AI deployments. In-row precision cooling solutions and rack-mounted air conditioners offer effective cooling options for small to midsize data centers, server rooms, edge computing data centers, and high-density zones.

Eaton’s comprehensive cooling capabilities, including reference architectures for generative AI systems, enable the successful deployment of neural networks at the edge. By investing in efficient cooling strategies, organizations can optimize the performance of edge AI devices, ensure reliability, and support the continued growth of AI-driven applications in various industries.

FAQ

Why is cooling important for edge AI devices?

Cooling is important for edge AI devices because they generate heat during operation, which can affect their performance and reliability. Efficient cooling strategies help maintain optimal neural network performance and ensure the longevity of edge AI devices.

What is concept drift, and how does it impact edge AI systems?

Concept drift refers to a phenomenon where the statistical properties of target data change over time, leading to a degradation in performance and potential system failures in edge AI systems. It occurs due to noise and environmental changes, creating a gap between the trained dataset and the deployed environment. Concept drift detection and retraining of neural network models are necessary to address this gap.

How does generative AI impact energy consumption, and why is cooling important for these systems?

Generative AI, which uses deep learning models to generate content, consumes significant energy due to its reliance on large datasets and powerful GPUs. This increased energy consumption leads to a higher cooling demand, especially in small data centers and edge sites. Efficient cooling solutions are crucial to mitigate the heat generated by generative AI systems.

What are some effective cooling solutions for edge AI devices?

In-row precision cooling solutions and rack-mounted air conditioners are suitable options for small to midsize data centers, server rooms, edge computing data centers, and high-density zones. Partnering with cooling solution providers like Eaton can help organizations select IT-grade cooling solutions that lower energy costs and increase the lifespan of IT equipment.

How do cooling strategies optimize the performance and reliability of edge AI devices?

By implementing effective cooling strategies, organizations can ensure that edge AI systems operate at their full potential while reducing energy costs and increasing the lifespan of IT equipment. Cooling strategies help maintain optimal neural network performance and reliability in edge AI devices, enabling successful deployments of neural networks at the edge.