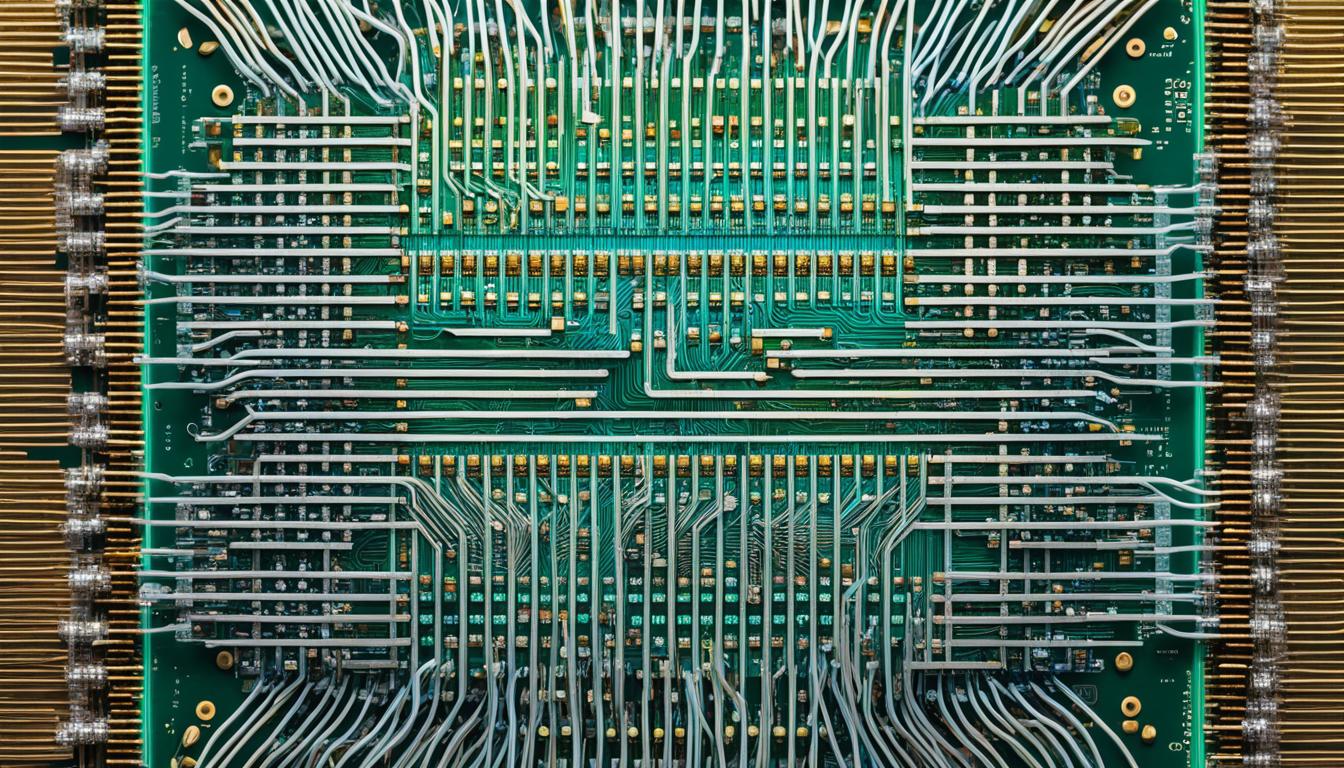

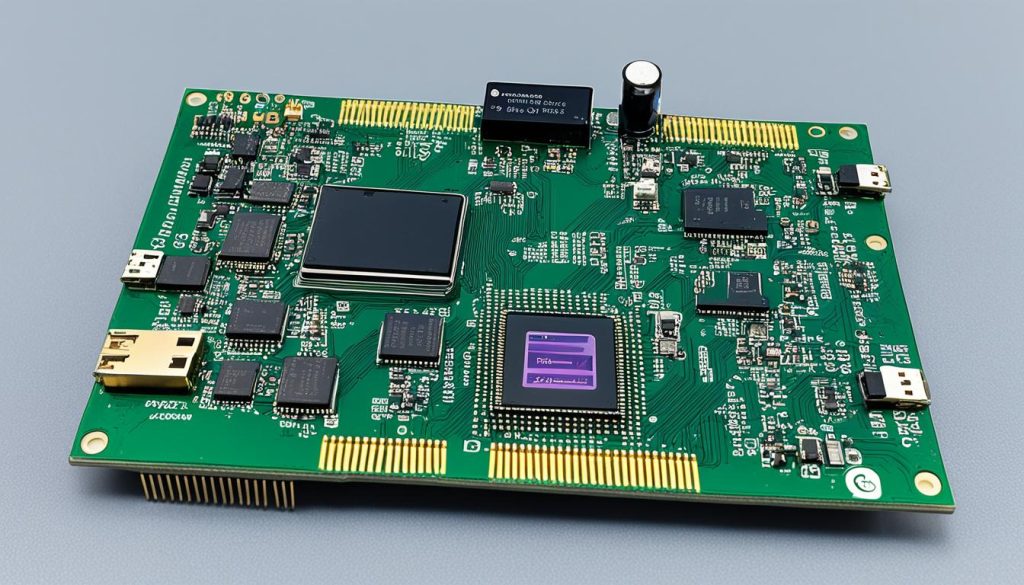

FPGAs (Field-Programmable Gate Arrays) are hardware circuits with reprogrammable logic gates that let users create custom circuits in the field, after the chip has been manufactured. They are used in a variety of applications, including space exploration, defense systems, telecommunications, and networking. FPGAs provide flexibility and parallelism, and for many workloads they consume less power than CPUs and GPUs. They are well suited to embedded applications and can be used to accelerate deep learning workloads.

When comparing FPGAs to GPUs for machine learning, FPGAs can offer similar or greater compute power and better energy efficiency. However, programming FPGAs requires expertise and can be costly, and there is a lack of libraries for FPGA-based deep learning. Despite these challenges, FPGAs are a promising option for implementing AI compute on embedded devices.
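Energy efficiency is usually compared as work done per joule rather than as raw speed. The sketch below assumes you have measured throughput and average board power for the same model on each device, and computes inferences per joule and energy per inference. The `Accelerator` class, the device names, and all numbers are hypothetical placeholders, not benchmarks.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    """One device running one fixed model (values are hypothetical)."""
    name: str
    inferences_per_second: float  # measured throughput for the model
    average_power_watts: float    # measured average board power while running it


def energy_per_inference_joules(acc: Accelerator) -> float:
    # Energy = power x time, and time per inference = 1 / throughput.
    return acc.average_power_watts / acc.inferences_per_second


def inferences_per_joule(acc: Accelerator) -> float:
    # Performance per watt: (inferences/s) / (J/s) = inferences/J.
    return acc.inferences_per_second / acc.average_power_watts


# Hypothetical placeholder figures, NOT measurements.
gpu = Accelerator("gpu", inferences_per_second=2000.0, average_power_watts=200.0)
fpga = Accelerator("fpga", inferences_per_second=1000.0, average_power_watts=40.0)

for acc in (gpu, fpga):
    print(f"{acc.name}: {inferences_per_joule(acc):.1f} inferences/J, "
          f"{energy_per_inference_joules(acc) * 1000:.1f} mJ/inference")
# gpu: 10.0 inferences/J, 100.0 mJ/inference
# fpga: 25.0 inferences/J, 40.0 mJ/inference
```

With these made-up figures the FPGA delivers half the throughput yet 2.5 times the inferences per joule, which is the sense in which a device can be slower but still more energy efficient.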

Key Takeaways:

- FPGAs are hardware circuits with reprogrammable logic gates that provide flexibility and parallelism.

- They have lower power consumption compared to CPUs and GPUs, making them suitable for embedded applications.

- FPGAs can offer similar or greater compute power and better energy efficiency compared to GPUs in machine learning.

- Programming FPGAs requires expertise and can be costly.

- There is a lack of libraries for FPGA-based deep learning.

Benefits of FPGA Technology for AI

Using FPGA technology in AI systems brings a range of advantages that make it an appealing choice for integrating AI capabilities into embedded systems. The key benefits are as follows:

- Highly Reconfigurable Logic: FPGAs have fully reprogrammable logic, so the hardware can be optimized for AI inference and training, and its logic architecture can be updated as requirements change. This provides flexibility and customization in AI implementation.

- Minimized Power Consumption: Because an FPGA design contains only the logic the application needs, unused interfaces and repetitive operations can be eliminated to reduce power usage. This improves energy efficiency and makes FPGAs a more environmentally friendly choice than traditional CPUs and GPUs for many workloads.

- Enhanced Parallelism: FPGAs can perform many operations, such as multiply-accumulates, in the same clock cycle. This parallelism speeds up AI inference, even on large datasets, and improves the overall performance of AI systems.

- High-Speed Interfaces: Vendor Intellectual Property (IP) cores can be used to instantiate high-speed interfaces for receiving sensor data. This simplifies integration with other components and supports efficient data transfer and processing in AI applications.

- Embedded System Support: SoC (System on Chip) FPGAs combine programmable logic with standard processor cores on the same device, so they can run embedded operating systems (OS) and user applications alongside the custom AI logic. This compatibility enables AI capabilities to be added to a wide range of embedded systems.

- Configurability and System Logic Integration: Because FPGAs are configurable, functions that would otherwise require separate external components can be instantiated within the system logic, which significantly reduces supply chain risk. This flexibility also promotes adaptability and mitigates limitations tied to proprietary interfaces and specific hardware requirements.
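The parallelism benefit can be made concrete with a simplified model of a dot product, the core operation of neural network layers. The sketch below assumes an idealized, fully pipelined datapath with no memory stalls; `lanes` (the number of multipliers instantiated side by side) and the helper names are hypothetical, and real cycle counts depend on the design and the device.

```python
import math


def adder_tree_sum(values: list[int]) -> tuple[int, int]:
    """Sum values pairwise, level by level, as a hardware adder tree does.

    Returns (total, number_of_adder_levels).
    """
    level = list(values)
    depth = 0
    while len(level) > 1:
        if len(level) % 2:
            level.append(0)  # pad an odd-length level with a zero input
        level = [level[i] + level[i + 1] for i in range(0, len(level), 2)]
        depth += 1
    return (level[0] if level else 0), depth


def sequential_mac_cycles(n: int) -> int:
    # A single multiply-accumulate unit handles one element per clock cycle.
    return n


def parallel_mac_cycles(n: int, lanes: int) -> int:
    # `lanes` multipliers consume `lanes` elements per cycle; a pipelined adder
    # tree adds ceil(log2(lanes)) cycles of latency before the last sum emerges.
    # Accumulating the per-pass partial sums is assumed to be pipelined too.
    passes = math.ceil(n / lanes)
    tree_depth = math.ceil(math.log2(lanes)) if lanes > 1 else 0
    return passes + tree_depth


# Functional check: 8 products computed side by side, then reduced by the tree.
a = list(range(8))
b = [2] * 8
products = [x * y for x, y in zip(a, b)]
print(adder_tree_sum(products))       # (56, 3)

# Idealized cycle counts for a 1024-element dot product.
print(sequential_mac_cycles(1024))    # 1024
print(parallel_mac_cycles(1024, 64))  # 22
```

In this model, widening the datapath from one multiplier to 64 cuts the cycle count from 1024 to 22, at the cost of more logic resources on the chip.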

With its highly customizable and adaptable nature, FPGA technology offers a comprehensive solution for incorporating AI capabilities into embedded systems, providing advantages such as reconfigurability, optimal logic architectures, parallelization, and support for high-speed interfaces.

Challenges and Limitations of FPGA Technology for AI

FPGAs offer numerous advantages for AI applications; however, there are also challenges and limitations that must be considered.

1. Programming Expertise in HDL

Programming FPGAs requires specific expertise in a Hardware Description Language (HDL) such as VHDL or Verilog. The shortage of engineers proficient in HDL can make it difficult to adopt FPGAs reliably for AI projects.

2. Relatively Untested for Deep Learning

Implementing FPGAs for deep learning is relatively untested compared to other technologies. This may deter conservative organizations from adopting FPGAs due to the perceived risks and uncertainties associated with this emerging technology.

3. Cost of Implementation

The cost of using FPGAs, including implementation and programming costs, can be a significant investment. This makes FPGA technology better suited to larger projects where the potential benefits outweigh the costs.

4. Lack of Compatible Deep Learning Libraries

There is currently a lack of deep learning libraries that support FPGAs natively, without modification. Research projects such as LeFlow have worked on bridging this gap by making models from popular frameworks like TensorFlow compatible with FPGAs.

These challenges and limitations highlight the importance of careful implementation planning and consideration of Return on Investment (ROI) when utilizing FPGA technology for AI applications.

FPGAs in Embedded AI Systems

The electronics industry is increasingly focused on integrating AI compute capabilities into embedded systems. This shift toward embedded AI requires specialized chipsets and optimized models that meet the specific needs of these systems. In this context, FPGAs have emerged as a prominent choice for implementing AI compute on embedded devices, thanks to their customizable and adaptable nature. Unlike custom silicon solutions, FPGAs provide a flexible hardware platform that can be reprogrammed to accommodate different AI models and algorithms.

Embedded AI systems often encounter challenges related to compute intensity and low latency requirements. The computational demands of AI processing can strain traditional processors and architectures, making it impractical to rely solely on them for embedded AI tasks. Additionally, relying on cloud connectivity for inference tasks can introduce unacceptable latency, especially in applications that require real-time processing. This is where FPGAs shine, offering the necessary computing power and real-time processing capabilities for edge AI applications.
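The latency argument is essentially a budget check: every stage on the path from sensor to result must fit inside the application's deadline. The sketch below compares a cloud path and an on-device path against a 30 frames-per-second deadline; the `LatencyPathMs` class, its fields, and all millisecond values are illustrative assumptions to be replaced with real measurements.

```python
from dataclasses import dataclass


@dataclass
class LatencyPathMs:
    """Hypothetical per-frame latency components, in milliseconds."""
    network_round_trip: float  # 0.0 when inference runs on the device
    queueing: float            # waiting for a free accelerator
    inference: float           # model execution time
    pre_post_processing: float

    def total(self) -> float:
        return (self.network_round_trip + self.queueing
                + self.inference + self.pre_post_processing)


def meets_deadline(path: LatencyPathMs, deadline_ms: float) -> bool:
    return path.total() <= deadline_ms


deadline_ms = 1000.0 / 30  # one frame period at 30 frames per second (~33.3 ms)

# Illustrative values only, not measurements.
cloud = LatencyPathMs(network_round_trip=60.0, queueing=5.0,
                      inference=5.0, pre_post_processing=3.0)
edge_fpga = LatencyPathMs(network_round_trip=0.0, queueing=0.0,
                          inference=20.0, pre_post_processing=3.0)

print(cloud.total(), meets_deadline(cloud, deadline_ms))          # 73.0 False
print(edge_fpga.total(), meets_deadline(edge_fpga, deadline_ms))  # 23.0 True
```

Even with slower inference on the device, the on-device path fits the frame budget here because the network round trip dominates the cloud path.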

The separation of inference and training tasks is a crucial consideration for embedded AI systems. Inference tasks, which involve the deployment of trained AI models on embedded processors, are typically less computationally intensive and can be efficiently executed on FPGAs. On the other hand, training tasks often require significant compute resources and are commonly performed in the cloud. By leveraging FPGAs for inference, embedded AI systems can strike a balance between performance and resource allocation.
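One common way to obtain the optimized models used for embedded inference is to train in floating point and then quantize weights and activations to 8-bit integers, so the device only needs integer multiply-accumulate logic. The article does not name a specific method; the sketch below assumes simple symmetric per-tensor int8 quantization, and the helper names and values are hypothetical.

```python
def quantize_symmetric_int8(values: list[float]) -> tuple[list[int], float]:
    """Map floats to int8 using one per-tensor scale (symmetric, zero-point 0)."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def int8_dot(qa: list[int], qb: list[int]) -> int:
    # Integer multiply-accumulate, as a fixed-point datapath would compute it.
    return sum(x * y for x, y in zip(qa, qb))


weights = [0.5, -1.0, 0.25, 0.75]     # trained in floating point, e.g. in the cloud
activations = [1.0, 0.5, -2.0, 1.0]   # input to the layer at inference time

qw, sw = quantize_symmetric_int8(weights)
qa, sa = quantize_symmetric_int8(activations)

approx = int8_dot(qw, qa) * sw * sa   # rescale the integer result back to float
exact = sum(w * a for w, a in zip(weights, activations))
print(f"exact={exact:.4f} approx={approx:.4f}")
```

The integer result differs slightly from the floating-point one; that small accuracy loss is the usual trade-off for cheaper, lower-power arithmetic.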

FPGAs excel in providing AI capabilities on end-user devices and embedded systems. Their reconfigurable nature enables continuous optimization of AI inference and training processes, allowing for efficient utilization of computing resources. In addition, FPGAs offer benefits in terms of reduced power consumption and improved parallelization, both of which are critical for accelerating AI workloads. Their ability to handle large input datasets efficiently makes FPGAs an ideal choice for embedded systems that process high volumes of data.
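To size how much data an FPGA front end must absorb, it helps to compute the raw sensor data rate. The sketch below assumes an uncompressed 1080p camera at 60 frames per second with 24 bits per pixel and a hypothetical 100 MHz fabric clock, and it ignores blanking intervals and protocol overhead; the function names are illustrative.

```python
import math


def stream_bits_per_second(width: int, height: int,
                           bits_per_pixel: int, fps: float) -> float:
    # Raw, uncompressed pixel data rate (blanking and protocol overhead ignored).
    return width * height * bits_per_pixel * fps


def pixels_per_clock(width: int, height: int, fps: float, clock_hz: float) -> int:
    # Minimum pixels the datapath must accept each cycle to keep up with the stream.
    return math.ceil(width * height * fps / clock_hz)


bits = stream_bits_per_second(1920, 1080, 24, 60)
print(f"{bits / 1e9:.2f} Gbit/s")              # 2.99 Gbit/s
print(pixels_per_clock(1920, 1080, 60, 100e6))  # 2
```

Needing two pixels per clock at this clock rate is one reason such datapaths are built to process several pixels in parallel rather than one at a time.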

The following table summarizes the key features and benefits of FPGAs in embedded AI systems:

| Feature | Benefit |
|---|---|
| Customizability | Allows for the optimization of AI models and algorithms specific to the embedded system |
| Real-time processing | Enables low-latency inference for time-critical applications |
| Power efficiency | Reduces energy consumption compared to traditional processors, ideal for edge computing |
| Parallelization | Accelerates AI workloads, enabling faster inference and improved performance |
| Scalability | Offers the ability to scale AI capabilities to match the evolving requirements of the embedded system |

With their inherent benefits and capabilities, FPGAs present a versatile and powerful solution for implementing AI in embedded systems. Whether it’s edge computing or other resource-constrained environments, FPGAs provide the necessary flexibility, performance, and efficiency to enable AI applications on a wide range of devices.

FPGA as a Versatile Solution for Embedded AI

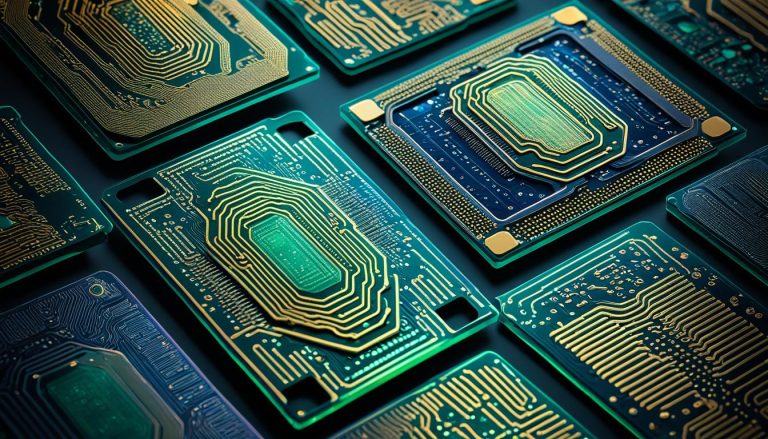

When it comes to embedded AI chipsets, FPGAs (Field-Programmable Gate Arrays) offer a high degree of adaptability and customization. They allow custom compute architectures to be tailored specifically to AI tasks, which makes them a go-to solution for embedded AI.

One of the standout features of FPGAs is their fully reconfigurable logic, which allows for continuous optimization of AI inference and training. By dynamically updating the logic architecture, FPGAs enable developers to fine-tune performance while minimizing power consumption, resulting in more efficient AI acceleration.

The highly parallelizable nature of FPGAs also makes them ideal for handling large input datasets and speeding up AI inference. With their ability to process multiple tasks simultaneously, FPGAs excel at delivering high-performance computing capabilities for embedded AI systems.

Moreover, FPGAs can be paired with standard System-on-Chip (SoC) processor cores, often on the same device, which makes them compatible with embedded operating systems and user applications. This integration lets developers leverage the power of FPGAs while keeping support for a wide range of system functions.

In terms of physical footprint, an FPGA can be comparable in size to, or even smaller than, a traditional processor or AI accelerator. This compact form factor is especially advantageous in embedded systems where space is limited but computational power is crucial.

FPGAs also provide a unique advantage in terms of their universal configurability. This allows for the reduction of supply chain risk and the easy instantiation of external components within the system logic. The flexibility provided by FPGAs enables developers to create highly customized embedded AI solutions tailored to specific application requirements.

Overall, FPGAs offer a versatile and powerful solution for incorporating AI capabilities into embedded systems. With their unmatched adaptability, reprogrammable logic, parallel processing capabilities, and universal configurability, FPGAs are at the forefront of AI acceleration in embedded applications.

Conclusion

In conclusion, Field-Programmable Gate Arrays (FPGAs) offer a highly customizable and flexible hardware solution for AI and neural network models. They provide the advantages of parallelism, lower power consumption, and the ability to create custom circuits in the field. While there are challenges in terms of programming expertise and implementation costs, FPGAs show great promise in implementing AI compute on embedded devices.

One of the key strengths of FPGAs is their ability to provide AI capabilities on end-user devices and embedded systems. They enable real-time processing and low latency, making them well-suited for edge AI applications. FPGAs also offer significant benefits such as reconfigurability, optimized logic architecture, parallelization, and support for high-speed interfaces.

With their versatility and universal configurability, FPGAs provide a powerful solution for incorporating AI capabilities into embedded systems. Despite the challenges and limitations, FPGAs stand out as a promising option, offering the potential to accelerate AI workloads and optimize AI inference and training.

FAQ

What is an FPGA?

An FPGA, or Field-Programmable Gate Array, is a hardware circuit with reprogrammable logic gates that allow users to create custom circuits in the field.

Where are FPGAs used?

FPGAs are used in a variety of applications, including space exploration, defense systems, telecommunications, and networking.

How do FPGAs compare to CPUs and GPUs for AI?

FPGAs provide flexibility and parallelism, with lower power consumption compared to CPUs and GPUs. They can offer similar or greater compute power and better energy efficiency for machine learning.

What are the benefits of using FPGAs in AI systems?

FPGAs offer fully reconfigurable logic for optimizing AI inference and training, minimize power consumption, provide faster AI inference, support high-speed interfaces, and can be implemented on top of standard SoC cores.

What are the challenges and limitations of using FPGAs for AI?

Programming FPGAs requires specific expertise, implementing FPGAs for deep learning is relatively untested and costly, and there is a lack of deep learning libraries for FPGA-based AI.

Why are FPGAs suitable for embedded AI systems?

FPGAs provide the computing power and real-time processing capabilities needed for edge AI tasks, allowing for low latency and minimizing reliance on cloud connectivity.

What makes FPGAs a versatile solution for embedded AI?

FPGAs offer customizability, optimized logic architecture, parallelization, support for high-speed interfaces, and reduced supply chain risk, making them ideal for incorporating AI capabilities into embedded systems.

What are some considerations when using FPGA technology for AI?

Considerations include the need for programming expertise, the cost of implementation, and the lack of deep learning libraries that support FPGAs without modification.