Artificial Intelligence (AI) and High-Performance Computing (HPC) are two technologies reshaping industries across the globe. Integrating AI into HPC environments opens new possibilities for data processing, analytics, research, and innovation. HPC, the high-speed parallel processing of complex computations, is typically implemented with clusters of computing servers connected through a network. These systems can run on-premises, at the edge, or in the cloud.

High-performance data analytics (HPDA) applies HPC resources to process vast amounts of data and enable advanced AI capabilities. However, the convergence of AI and HPC requires adjustments in programming languages and the adoption of virtualization and containers. HPC applications have traditionally been written in Fortran, C, or C++, while AI frameworks are usually driven from Python and, increasingly, Julia. Making code in these languages interoperate is crucial for seamless integration.

As the demand for AI continues to grow, HPC can provide the necessary infrastructure to build better AI applications. With its increased processing power, storage, and memory capacity, HPC systems offer a significant advantage in handling the computational requirements of AI algorithms. Moreover, HPC systems often incorporate GPUs, which excel at processing AI workloads through parallelism and co-processing.

The industry recognizes the potential of integrating AI and HPC. Companies such as Run:AI offer platforms for running AI on HPC infrastructure that aim to maximize resource utilization. Such integration can accelerate AI workflows, simplify resource management, and improve cost-efficiency.

Key Takeaways

- The convergence of AI and HPC enables advanced data processing, analytics, research, and innovation.

- Compatibility between programming languages is important when integrating AI into HPC environments.

- HPC systems provide increased processing power, storage, and memory capacity for building better AI applications.

- Run:AI offers a platform for running AI on HPC infrastructure, optimizing resource utilization.

- The integration of AI and HPC presents numerous benefits and opportunities for industry advancements.

How AI is Affecting HPC

The integration of AI into high-performance computing (HPC) environments is reshaping scientific research, data processing, and technology development. As AI advances across industries, it is also changing HPC itself, requiring adjustments in programming languages, virtualization, containers, and memory management.

Adjustments in Programming Languages

Traditional HPC programs have predominantly been written in Fortran, C, and C++. With the rise of AI, languages such as Python and Julia are gaining prominence. This shift requires existing HPC applications to interoperate with AI frameworks and languages, typically by exposing compiled HPC code to Python through bindings. Developers must make this integration seamless to combine the benefits of AI and HPC in one environment.
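One common way to make compiled HPC code usable from Python is to call it through bindings. As a minimal sketch, Python’s standard `ctypes` module can call an existing compiled C library directly, without rewriting it. Here the C math library’s `cos` stands in for a compiled HPC kernel; the library lookup assumes a Linux or macOS system, and the wrapper name `c_cos` is purely illustrative. Larger projects commonly use dedicated binding tools such as pybind11 (for C++) or NumPy’s f2py (for Fortran) instead.

```python
import ctypes
import ctypes.util
import math

# Locate the compiled C math library. If the lookup fails, CDLL(None) exposes
# the symbols already loaded into the interpreter process (POSIX systems only).
_libm_path = ctypes.util.find_library("m")
libm = ctypes.CDLL(_libm_path) if _libm_path else ctypes.CDLL(None)

# Declare the C prototype `double cos(double)` so ctypes converts the
# argument and the return value correctly.
libm.cos.argtypes = [ctypes.c_double]
libm.cos.restype = ctypes.c_double


def c_cos(x: float) -> float:
    """Call the C library's cos() directly from Python."""
    return libm.cos(x)


if __name__ == "__main__":
    x = 0.5
    print("C cos:", c_cos(x), "| Python math.cos:", math.cos(x))
```

Declaring the signature matters: without `restype`, `ctypes` assumes the C function returns an `int`, and without `argtypes` it cannot convert a Python float argument.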

The Role of Virtualization and Containers

Virtualization and containerization offer significant benefits to both AI and HPC applications. Virtualization creates virtual machines, allowing multiple operating systems to run on a single physical machine. Containers, by contrast, package an application together with its dependencies while sharing the host’s kernel, which makes AI applications lightweight, portable, and isolated. Together, these technologies improve flexibility, resource allocation, and workload management in HPC environments.

Memory Capacity for AI Processing

As AI algorithms process and analyze ever larger datasets, sufficient memory capacity becomes crucial: memory-intensive AI workloads slow down or fail when model state and data batches do not fit in memory. Emerging technologies such as non-volatile memory (NVM), including non-volatile RAM (NVRAM), support larger memory capacities in HPC systems, helping researchers and data scientists run larger AI workloads.
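To make "sufficient memory" concrete, the sketch below applies a commonly cited rule of thumb: mixed-precision training with the Adam optimizer keeps roughly 16 bytes of state per model parameter (FP16 weights and gradients, plus FP32 master weights and two FP32 Adam moments). Activations, data batches, and framework buffers are deliberately excluded, so real requirements are higher. The 80 GB device size in the example is an assumption, not a recommendation.

```python
# Bytes per parameter for mixed-precision training with the Adam optimizer,
# following a commonly cited breakdown (activations and buffers excluded).
BYTES_PER_PARAM = {
    "fp16_weights": 2,
    "fp16_gradients": 2,
    "fp32_master_weights": 4,
    "adam_momentum_fp32": 4,
    "adam_variance_fp32": 4,
}


def training_state_bytes(num_params: int) -> int:
    """Estimate memory for weights, gradients, and optimizer state."""
    return num_params * sum(BYTES_PER_PARAM.values())


def min_devices(num_params: int, device_memory_gb: float) -> int:
    """Smallest device count whose combined memory holds the training state,
    assuming the state is sharded evenly and ignoring activations."""
    total = training_state_bytes(num_params)
    per_device = int(device_memory_gb * 1e9)
    return -(-total // per_device)  # ceiling division on integers


if __name__ == "__main__":
    params = 7_000_000_000  # hypothetical 7-billion-parameter model
    print(training_state_bytes(params) / 1e9, "GB of training state")
    print(min_devices(params, 80), "devices of 80 GB (hypothetical size)")
```

Under these assumptions, a 7-billion-parameter model needs about 112 GB for training state alone, more than a single 80 GB device holds, which is one reason large models are sharded across many GPUs and nodes.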

| Factor | Impact on HPC |
|---|---|
| AI programming languages | Requires compatibility with traditional HPC applications |
| Virtualization and containers | Enhances flexibility and workload management |
| Memory capacity | Necessary for processing large AI datasets |

The convergence of AI and HPC presents immense potential for driving groundbreaking discoveries and advancements across various domains. By harnessing the power of AI within HPC environments, researchers and data practitioners can unlock new insights, accelerate data analysis, and push the boundaries of scientific knowledge.

How HPC Can Help You Build Better AI Applications

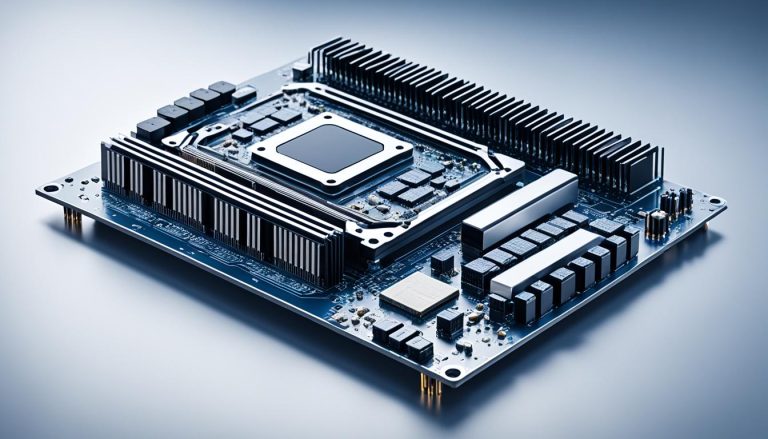

When building AI applications, High-Performance Computing (HPC) systems can make a substantial difference. Because they aggregate the compute of many networked nodes, HPC systems offer far more processing power than a single workstation or server, making them well suited to complex AI algorithms and data-intensive workloads.
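A back-of-the-envelope estimate shows why such workloads outgrow ordinary systems. The sketch uses the widely cited approximation that training a dense transformer model costs about 6 × N × D floating-point operations (FLOPs) for N parameters and D training tokens. The per-GPU peak throughput and the 40% sustained utilization in the example are hypothetical inputs chosen only for illustration.

```python
def training_flops(num_params: float, num_tokens: float) -> float:
    """Approximate training compute with the ~6 * N * D rule of thumb
    (about 2 FLOPs per parameter per token forward, 4 backward)."""
    return 6.0 * num_params * num_tokens


def training_days(total_flops: float, num_gpus: int,
                  peak_flops_per_gpu: float, utilization: float) -> float:
    """Wall-clock days at a sustained fraction of peak, assuming perfect scaling."""
    sustained_flops_per_s = num_gpus * peak_flops_per_gpu * utilization
    return total_flops / sustained_flops_per_s / 86_400  # seconds per day


if __name__ == "__main__":
    flops = training_flops(1e9, 20e9)  # hypothetical: 1B params, 20B tokens
    for gpus in (8, 256):
        # Hypothetical accelerator: 1e14 FLOP/s peak, 40% sustained.
        days = training_days(flops, gpus, 1e14, 0.4)
        print(f"{gpus} GPUs: {days:.2f} days")
```

With these inputs, a 1-billion-parameter model trained on 20 billion tokens needs about 1.2 × 10^20 FLOPs: roughly 4.3 days on 8 GPUs but only a few hours on 256, assuming the work scales perfectly, which real jobs rarely do.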

In an HPC system, nodes are typically equipped with fast memory and connected to high-throughput storage, which reduces the memory and I/O bottlenecks that often slow AI workloads. As a result, you can process and analyze large datasets faster, accelerating research, innovation, and data-driven decision-making.

One of the key advantages of using HPC systems for AI applications is the inclusion of Graphics Processing Units (GPUs). GPUs are specialized hardware components that excel at parallel processing and are particularly well-suited to handle the computational demands of AI algorithms. By utilizing GPUs in HPC systems, you can significantly enhance the performance and speed of your AI applications.
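The reason GPUs suit AI so well shows up in matrix multiplication, the core operation of most neural networks. In the deliberately naive Python sketch below, every output element is an independent dot product; a GPU exploits exactly that independence by computing many elements at once across thousands of threads. The helper names are illustrative, and production code would call an optimized BLAS or GPU library rather than loop in Python.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Naive matrix multiply. Each output element C[i][j] is an independent
    dot product of row i of `a` and column j of `b`, so all of them could be
    computed at the same time on parallel hardware."""
    m, k = len(a), len(a[0])
    k2, n = len(b), len(b[0])
    if k != k2:
        raise ValueError("inner dimensions must match")
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
            for i in range(m)]


def matmul_flops(m: int, k: int, n: int) -> int:
    """Conventional count: one multiply and one add per term, 2*m*k*n FLOPs."""
    return 2 * m * k * n


if __name__ == "__main__":
    print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
    print(matmul_flops(4096, 4096, 4096))
```

Multiplying two 4096 × 4096 matrices takes 2 × 4096³, or about 1.4 × 10^11, floating-point operations, all organized as independent dot products that parallel hardware can spread across its cores.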

Parallelism and co-processing are two key features of HPC systems that greatly benefit AI applications. Parallelism allows for the simultaneous execution of multiple tasks, enabling faster computations and reducing overall processing time. Co-processing, on the other hand, involves using specialized hardware (such as GPUs) alongside the main processor to accelerate specific calculations and improve overall performance.
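How much parallelism helps depends on how much of a job can actually run in parallel. Amdahl’s law captures this: if a fraction p of the work parallelizes perfectly across N workers, the speedup is S(N) = 1 / ((1 - p) + p / N), so the serial remainder caps the speedup at 1 / (1 - p). The sketch below simply evaluates that formula; the 95% parallel fraction in the example is hypothetical.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Amdahl's law: S(N) = 1 / ((1 - p) + p / N)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if workers < 1:
        raise ValueError("workers must be >= 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


if __name__ == "__main__":
    for n in (1, 8, 64, 1024):
        # Hypothetical job that is 95% parallelizable.
        print(f"{n:>5} workers: {amdahl_speedup(0.95, n):.2f}x")
```

With p = 0.95, even unlimited workers cannot exceed a 20× speedup, which is why removing serial bottlenecks such as data loading, synchronization, and I/O matters as much as adding nodes.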

The greater storage and memory capacity of HPC systems also benefits AI model training. Models trained on vast amounts of data need substantial resources to store and process that data. Ample storage and memory let you train larger models on larger datasets, which can improve the accuracy and reliability of your models and lead to more robust applications.

HPC systems also let you distribute workloads across multiple nodes, improving resource utilization and reducing processing time. Scaling workloads out efficiently is particularly valuable for large-scale AI projects and computationally demanding experiments.
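Distributing a workload usually starts with partitioning the data so that each process owns a slice. A minimal sketch of contiguous block partitioning follows. The `rank` and `world_size` parameters mirror MPI’s convention of numbering processes 0 through size - 1; in a real job they would come from MPI or a job launcher, and `block_range` is a hypothetical helper name.

```python
def block_range(num_items: int, rank: int, world_size: int) -> range:
    """Return the contiguous slice of item indices owned by `rank`.

    The first `num_items % world_size` ranks receive one extra item,
    so shard sizes differ by at most one."""
    if world_size < 1 or not 0 <= rank < world_size:
        raise ValueError("need world_size >= 1 and 0 <= rank < world_size")
    base, extra = divmod(num_items, world_size)
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return range(start, stop)


if __name__ == "__main__":
    # Hypothetical job: 10 work items spread over 4 processes.
    for rank in range(4):
        print(rank, list(block_range(10, rank, 4)))
```

Splitting 10 items across 4 processes yields shards of 3, 3, 2, and 2 items, so no process is stuck with a much larger share than the others.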

It’s worth noting that HPC systems don’t have to come with exorbitant costs. With the availability of cloud-based HPC solutions, you can access cost-effective supercomputing resources without the need for expensive hardware investments. Cloud-based HPC combines the power of HPC systems with the convenience and scalability of cloud computing, providing a flexible and affordable solution for your AI applications.
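Whether cloud HPC is actually cheaper depends on utilization. The sketch below computes a simple break-even point: the number of hours per year at which renting cloud capacity costs the same as owning equivalent hardware. Every price in it is a hypothetical placeholder, not a quote from any provider.

```python
def breakeven_hours_per_year(onprem_capex: float, amortization_years: float,
                             onprem_opex_per_year: float,
                             cloud_rate_per_hour: float) -> float:
    """Annual usage (hours) at which cloud rental cost equals on-prem cost."""
    # Spread the purchase price over its service life, then add running costs.
    annual_onprem_cost = onprem_capex / amortization_years + onprem_opex_per_year
    return annual_onprem_cost / cloud_rate_per_hour


if __name__ == "__main__":
    hours = breakeven_hours_per_year(200_000, 4, 30_000, 20.0)  # hypothetical prices
    print(f"break-even: {hours:.0f} h/year ({hours / 8760:.0%} utilization)")
```

With $200,000 of hardware amortized over 4 years, $30,000 per year in operating costs, and a $20-per-hour cloud rate, the break-even point is 4,000 hours per year, about 46% of the 8,760 hours in a year; below that usage, renting is cheaper under these assumptions.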

In summary, by harnessing the capabilities of HPC systems, you can significantly enhance your AI applications’ performance, accelerate data processing, and improve model accuracy. Whether you choose to utilize on-premises HPC clusters, edge computing infrastructure, or cloud-based HPC services, integrating HPC with AI holds immense potential for driving innovation and advancing the field of artificial intelligence.

Convergence of AI and HPC

In the rapidly evolving HPC industry, integrating AI and HPC has become a major focus. As AI use cases grow quickly in fields such as cosmology, astrophysics, and high-energy physics, efficient data management and processing capabilities have become paramount.

HPC has already proven effective at supporting large-scale AI models across many industries. However, optimizing AI training on HPC platforms still relies heavily on experimentation, and benchmark results do not always reflect how real-world AI workloads perform, so further development is needed.

Realizing the full potential of AI and HPC convergence requires progress in several key areas. These include robust mathematical frameworks that bridge the interdisciplinary work involved in AI and HPC, and comprehensive commercial solutions tailored to the demands of integrating these technologies.

“The convergence of AI and HPC presents incredible opportunities for scientific advancements in disciplines like astrophysics and high-energy physics. However, it’s crucial to acknowledge that this integration comes with its own set of challenges and requirements.” – Dr. Jane Thompson, renowned astrophysicist

Open source tools and platforms play a crucial role in driving the adoption of AI in HPC. By enabling collaboration and providing standardized support, these resources facilitate the seamless integration of AI capabilities into existing HPC environments.

AI and HPC Convergence Table

| Benefits | Challenges |
|---|---|
| Enhanced data management and processing capabilities | Experimental optimization of AI training on HPC platforms |
| Support for large-scale AI models | Potential mismatch between benchmark results and real-world AI workloads |
| Opportunities for scientific advances in cosmology, astrophysics, and high-energy physics | Need for robust mathematical frameworks and interdisciplinary collaboration |
| Increased adoption through open source tools and platforms | Need for comprehensive commercial solutions for seamless integration |

Running AI on HPC with Run:AI

Integrating artificial intelligence (AI) capabilities into high-performance computing (HPC) environments has become a critical goal for organizations seeking to apply AI in their operations. Run:AI offers a platform for running AI workloads on HPC infrastructure that bridges the two worlds.

“Applying Kubernetes to HPC infrastructure for AI workloads utilizing GPUs, Run:AI provides an innovative approach to resource management and orchestration in HPC clusters,” explains Dr. Emma Johnson, a senior data scientist at Run:AI. “This enables organizations to fully harness the potential of their HPC systems while leveraging the benefits of AI.”

The Run:AI platform automates resource management and orchestration in HPC clusters, simplifying the deployment and execution of AI workloads. With advanced visibility into resource usage, organizations can efficiently share resources among different AI projects, optimizing their utilization.
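Run:AI builds on Kubernetes, where GPU workloads are described declaratively. As a hedged illustration of the underlying Kubernetes mechanism (not Run:AI’s own API), the sketch below builds a minimal Pod manifest that requests GPUs through the `nvidia.com/gpu` resource advertised by NVIDIA’s device plugin. The pod, container, and image names are hypothetical, and `scheduler_name` marks where a cluster-specific scheduler, such as one provided by a Run:AI installation, would be named; consult that platform’s documentation for the exact value.

```python
import json
from typing import Any, Dict


def gpu_pod_manifest(name: str, image: str, gpus: int,
                     scheduler_name: str = "default-scheduler") -> Dict[str, Any]:
    """Build a minimal Kubernetes Pod manifest that requests `gpus` GPUs.

    GPUs are an extended resource requested through `limits`; the
    `nvidia.com/gpu` name assumes NVIDIA's device plugin is installed."""
    if gpus < 1:
        raise ValueError("request at least one GPU")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "schedulerName": scheduler_name,  # cluster-specific scheduler goes here
            "restartPolicy": "Never",         # run-to-completion training job
            "containers": [{
                "name": "trainer",            # hypothetical container name
                "image": image,
                "resources": {"limits": {"nvidia.com/gpu": gpus}},
            }],
        },
    }


if __name__ == "__main__":
    manifest = gpu_pod_manifest("train-job", "example.org/trainer:latest", 2)
    print(json.dumps(manifest, indent=2))
```

Kubernetes accepts JSON as well as YAML manifests, so the printed output could be saved to a file and submitted with `kubectl apply -f`.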

One key feature of Run:AI is its guaranteed quota system, which reserves resources for each project so that its AI jobs are not starved by other workloads. This reduces resource contention and bottlenecks and helps optimize billing, improving cost-efficiency.

Dynamic resource allocation is another key capability of Run:AI. It intelligently allocates resources to each AI job based on its requirements, ensuring that compute-intensive tasks, such as deep learning, get the necessary computing power to run efficiently.
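The guaranteed-quota and dynamic-allocation ideas can be illustrated with a toy allocator. This is a simplified two-phase model written for illustration, not Run:AI’s actual scheduling algorithm: each project first receives GPUs up to its guaranteed quota, and any idle GPUs are then lent round-robin to projects whose demand is still unmet. The project names and numbers are hypothetical.

```python
from typing import Dict


def allocate_gpus(total_gpus: int, quotas: Dict[str, int],
                  demands: Dict[str, int]) -> Dict[str, int]:
    """Toy two-phase GPU allocator (illustrative; not Run:AI's algorithm).

    Phase 1: each project gets min(demand, guaranteed quota).
    Phase 2: idle GPUs are lent one at a time, round-robin, to projects
    whose demand is still unmet. Projects without a quota are ignored."""
    if sum(quotas.values()) > total_gpus:
        raise ValueError("guaranteed quotas exceed cluster capacity")
    alloc = {p: min(demands.get(p, 0), q) for p, q in quotas.items()}
    idle = total_gpus - sum(alloc.values())
    while idle > 0:
        hungry = [p for p in quotas if alloc[p] < demands.get(p, 0)]
        if not hungry:
            break  # nobody needs more; leftover GPUs stay idle
        for p in hungry:
            if idle == 0:
                break
            alloc[p] += 1
            idle -= 1
    return alloc


if __name__ == "__main__":
    print(allocate_gpus(8, {"vision": 4, "nlp": 4}, {"vision": 2, "nlp": 7}))
```

On an 8-GPU cluster where `vision` and `nlp` each hold a 4-GPU quota but `vision` needs only 2, the allocator lends the 2 idle GPUs to `nlp`, which ends up with 6. Production schedulers typically also reclaim borrowed GPUs, by preemption, when the owning project needs its quota back; this sketch does not model that.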

The Run:AI platform is particularly well-suited for accelerating deep learning and compute-intensive workloads within HPC environments. By seamlessly integrating with HPC systems, organizations can leverage the computing power, memory, and storage capabilities of their infrastructure to drive AI innovation.

“Resource optimization is a critical aspect of running AI on HPC infrastructure, and Run:AI simplifies this process,” says Dr. Johnson. “By automating and streamlining resource management, organizations can focus on developing and deploying cutting-edge AI models without worrying about the underlying infrastructure.”

With Run:AI, organizations can make fuller use of their HPC infrastructure and accelerate their AI initiatives. By bringing AI workloads into HPC clusters, building on Kubernetes, and optimizing resource management, Run:AI helps organizations achieve higher computational performance and efficiency.

| Benefits of Running AI on HPC with Run:AI |
|---|
| Automated resource management and orchestration in HPC clusters |
| Efficient resource sharing and improved utilization |
| Guaranteed quotas to avoid bottlenecks and optimize billing |
| Dynamic resource allocation for optimal performance |
| Accelerated deep learning and compute-intensive workloads |
| Simplified resource optimization and improved cost-efficiency |

Understand the Challenges of AI in HPC

The integration of AI capabilities into High-Performance Computing (HPC) environments comes with its fair share of challenges. Configuring systems for AI in HPC requires weighing the differing requirements of the two kinds of workloads. AI training typically favors high throughput at reduced numerical precision (such as FP16 or BF16), whereas many traditional HPC simulations depend on double-precision (FP64) accuracy, so a configuration tuned for one can be suboptimal for the other. Striking the right balance is essential for performance and efficiency.
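One concrete source of conflicting requirements is numerical precision: AI training often runs in reduced precision such as FP16 or BF16, while many HPC simulations depend on double precision (FP64). The sketch below adds 0.1 one million times, once in ordinary double precision and once rounding the running total to single precision (FP32) after every step, by round-tripping through Python’s standard `struct` module. It is a deliberately naive accumulation chosen to make rounding error visible, not a model of how any particular AI framework computes.

```python
import struct


def to_float32(x: float) -> float:
    """Round a Python float (IEEE 754 double) to the nearest float32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def accumulate(value: float, steps: int, single_precision: bool) -> float:
    """Add `value` to a running total `steps` times, optionally rounding
    the total to float32 after every addition."""
    total = 0.0
    for _ in range(steps):
        total += value
        if single_precision:
            total = to_float32(total)
    return total


if __name__ == "__main__":
    n = 1_000_000  # exact answer: 100,000
    print("float64 total:", accumulate(0.1, n, single_precision=False))
    print("float32 total:", accumulate(0.1, n, single_precision=True))
```

The FP64 total stays within a tiny fraction of the exact 100,000, while the FP32 total drifts away by hundreds, because once the sum is large each addition of 0.1 is rounded to a coarse FP32 grid. AI frameworks commonly mitigate such drift with mixed precision, for example by accumulating low-precision products in FP32, whereas simulations that need FP64 throughout cannot trade precision away.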

One of the major challenges of integrating AI into HPC is increased complexity. AI workloads, especially those involving large-scale data processing, can create I/O and memory bottlenecks that degrade overall system performance, so managing and optimizing these data-intensive workloads is crucial.

Another challenge is finding talent skilled in both AI and HPC. Working at the intersection of the two fields requires a rare combination of skills and expertise, and this scarcity can hold back organizations that want to bring AI into their HPC environments.

Collaboration with the HPC community is essential for overcoming the challenges associated with integrating AI into HPC. By fostering partnerships and knowledge sharing, organizations can leverage the expertise of the HPC community to navigate the complexities and maximize the benefits of AI-driven HPC.

Adoption of AI in HPC can also be hindered by friction between the two domains. HPC systems have traditionally been built around batch schedulers, MPI-based parallel programs, and double-precision numerical codes, while AI workloads often expect container-based orchestration, Python framework stacks, and accelerators optimized for low-precision tensor math. Bridging these gaps and managing the complexity of the convergence is crucial for widespread adoption.

Expertise, Innovation, and Leading HPC Technologies Address Challenges

Overcoming these challenges requires innovative solutions and leading-edge HPC technologies. Organizations need to build expertise in AI-driven HPC and keep up with advances in the field. By addressing these challenges directly and leveraging the capabilities of HPC, organizations can unlock the full potential of AI in their computational workflows.

Conclusion

Integrating AI capabilities into HPC environments brings many benefits, letting organizations harness large-scale data processing and analytics while driving innovation. Successful AI-accelerated HPC projects start with a comprehensive deployment plan that accounts for the key technology decisions.

Choosing the right hardware and software is essential for maximizing performance and scalability. Intel offers a range of HPC and AI technologies aimed at AI-accelerated HPC, and developers can use Intel’s open-source software tools to optimize HPC workloads and use resources efficiently.

Intel’s hardware options, such as Xeon processors and Gaudi AI accelerators, further strengthen AI-accelerated HPC environments, while Intel’s oneAPI Toolkits simplify application development and optimization.

With a robust ecosystem and strong community connections, Intel supports AI-accelerated HPC projects with expertise and collaboration opportunities. By using these technologies, organizations can make informed technology decisions and realize more of the potential of AI in HPC environments.

FAQ

What is High-Performance Computing (HPC) and how does it integrate with AI?

HPC refers to the high-speed parallel processing of complex computations across multiple servers. HPC systems provide increased processing power, storage, and memory, making them well suited to running AI applications and analyzing big data.

What are HPC clusters and how do they work?

HPC clusters can consist of hundreds or thousands of computing servers connected through a network. Workloads are distributed across these servers to optimize resource utilization, and the cluster provides fast memory and storage resources.

Where can HPC solutions be deployed?

HPC solutions can run in on-premises data centers, at the edge, or in the cloud, providing flexibility and scalability for various computing needs.

How does High Performance Data Analytics (HPDA) apply to HPC and AI?

HPDA applies HPC resources to big data and AI, enabling efficient processing and analysis of large datasets, contributing to the development of AI models and applications.

How is AI affecting HPC, and what adjustments are required?

AI is impacting HPC, necessitating adjustments in programming languages and the use of technologies such as virtualization and containers to support AI workloads on HPC infrastructure.

How does HPC help in building better AI applications?

HPC systems provide increased processing power, storage, and memory capacity, which speeds up AI workloads and makes it practical to train larger models on more data, which can improve model accuracy.

What is the focus of the industry with regard to AI and HPC convergence?

The industry is focused on integrating AI and HPC to better support AI use cases and leverage the capabilities of HPC infrastructure for AI-related tasks.

What is Run:AI and how does it enable running AI on HPC infrastructure?

Run:AI is a platform that facilitates running AI on HPC infrastructure. It applies Kubernetes to HPC for AI workloads and automates resource management and orchestration in HPC clusters.

What changes are required in HPC workload management and tools for integrating AI?

The integration of AI in HPC environments necessitates modifications in workload management and tools to support AI frameworks and languages, such as Python and Julia, alongside traditional HPC programming languages.

What are the benefits of virtualization and containers for HPC and AI?

Virtualization and containers offer benefits for both HPC and AI applications, including scalability, isolation, and efficient resource utilization, contributing to improved performance and flexibility.

How does increased memory capacity support AI in HPC?

Increased memory capacity, supported by technologies such as non-volatile memory (NVM), including NVRAM, lets AI applications hold and process larger datasets and models, improving performance and making larger, potentially more accurate models feasible.

How does the processing power of HPC systems compare to traditional systems?

HPC systems provide significantly higher processing power compared to traditional systems, enabling faster computations and more efficient execution of AI algorithms.

What role do GPUs play in HPC systems for AI?

GPUs execute the dense matrix and tensor operations at the heart of most AI algorithms in a massively parallel fashion, accelerating both the training and the inference of AI workloads.

How do parallelism and co-processing speed up computations in HPC?

Parallelism and co-processing in HPC systems expedite computations for large datasets and experiments, enhancing the efficiency and speed of AI-related tasks.

How does storage and memory capacity in HPC systems impact AI model accuracy?

HPC systems with increased storage and memory capacity let AI applications train on more data and with larger models, which can lead to more accurate and better-performing AI models.

Why is HPC considered a cost-effective solution for supercomputing, especially in the cloud?

HPC offers cost-effective supercomputing capabilities, particularly in the cloud, where users can leverage on-demand resources and pay for usage, reducing upfront costs and infrastructure maintenance.

How has HPC been successfully used for large-scale AI models in various fields?

HPC has been successfully employed to train and analyze large-scale AI models in fields such as cosmology, astrophysics, and high-energy physics, contributing to significant advancements in research and innovation.

What challenges need to be addressed for the convergence of AI and HPC?

The complexity of AI in HPC environments poses challenges for adoption, while the scarcity of talent skilled in both AI and HPC presents a barrier. Collaboration with the HPC community and innovative solutions can help overcome these obstacles.

How can integrating AI capabilities into HPC environments benefit organizations?

Integrating AI capabilities into HPC environments offers numerous benefits, including enhanced data processing, advanced analytics, and innovative research opportunities, leading to transformative insights and outcomes.

What considerations are important when planning an AI-accelerated HPC project?

Creating a comprehensive deployment plan is crucial for successful AI-accelerated HPC projects. Key considerations include making informed technology decisions, selecting appropriate hardware and software, and ensuring compatibility between AI frameworks and existing HPC applications.

How can Intel technologies support AI-accelerated HPC projects?

Intel offers a range of HPC and AI technologies to maximize performance and scalability. Developers can leverage Intel’s open-source software tools for HPC optimization, utilize hardware options such as Xeon processors and Gaudi AI accelerators, and benefit from Intel’s oneAPI Toolkits for simplified application development and optimization.

Is there support available for AI-accelerated HPC projects?

Intel provides a robust ecosystem and community connections to support AI-accelerated HPC projects, offering expertise, innovative solutions, and leading HPC technologies for addressing challenges and driving successful outcomes.