High-performance computing (HPC) clusters have become central to training deep neural networks (DNNs). By combining multiple computers in a networked system, an HPC cluster can spread the heavy computation of DNN training across many machines, allowing researchers and developers to train models faster than a single machine would permit. This article explores the role of HPC clusters in neural network training and their impact on accelerating AI research.

Key Takeaways:

- High-performance computing clusters are instrumental in optimizing neural network training.

- By combining multiple computers in a networked system, HPC clusters can handle the high computational complexity of DNN training.

- HPC clusters provide increased computing power, facilitating the training of larger and more complex models.

- They enable the processing and analysis of big data, leading to more robust and accurate AI models.

- HPC clusters enable parallel processing, reducing the training time of neural networks and fostering faster research advancements.

The Benefits of High-Performance Computing for Neural Network Training

High-performance computing (HPC) offers a multitude of benefits for neural network training. From increasing computing power to enabling parallel processing, HPC clusters have become an invaluable tool for researchers and developers in the field of AI.

Increased Computing Power for Larger and More Complex Models

One of the primary benefits of HPC clusters in neural network training is access to more computing power. Deep neural networks (DNNs) are computationally expensive to train, and a single workstation often lacks the memory and processing capacity for larger and more complex models. HPC clusters provide the computing resources and parallel processing capabilities needed to train these models efficiently, which lets researchers explore larger architectures and improve the accuracy and performance of their networks.

Facilitating the Processing and Analysis of Big Data

In the field of AI, big data plays a crucial role in training robust and accurate models. HPC clusters excel in processing and analyzing large datasets, bringing a significant advantage to neural network training. With the ability to store and manipulate vast amounts of data, HPC clusters allow researchers to train models on diverse and comprehensive datasets, capturing a wider range of patterns and nuances. This comprehensive training data leads to more accurate AI models with improved performance in real-world applications.
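One common way to put a large dataset to work on a cluster is to give each worker its own slice of the samples. The sketch below is a minimal illustration in plain Python and is not taken from any particular framework; the function name `shard_indices` and the strided assignment scheme are assumptions made for the example.

```python
def shard_indices(num_samples, num_workers, rank):
    """Return the sample indices assigned to worker `rank`.

    Hypothetical helper: uses strided sharding, so worker r gets
    samples r, r + num_workers, r + 2 * num_workers, and so on.
    """
    if not 0 <= rank < num_workers:
        raise ValueError("rank must be in [0, num_workers)")
    return list(range(rank, num_samples, num_workers))


# Split 10 samples across 4 workers.
shards = [shard_indices(10, 4, rank) for rank in range(4)]
for rank, shard in enumerate(shards):
    print(f"worker {rank}: {shard}")
```

Every sample lands on exactly one worker, so no data is skipped or processed twice within an epoch. Real data loaders typically also shuffle the indices each epoch.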

Accelerating Training Time through Parallel Processing

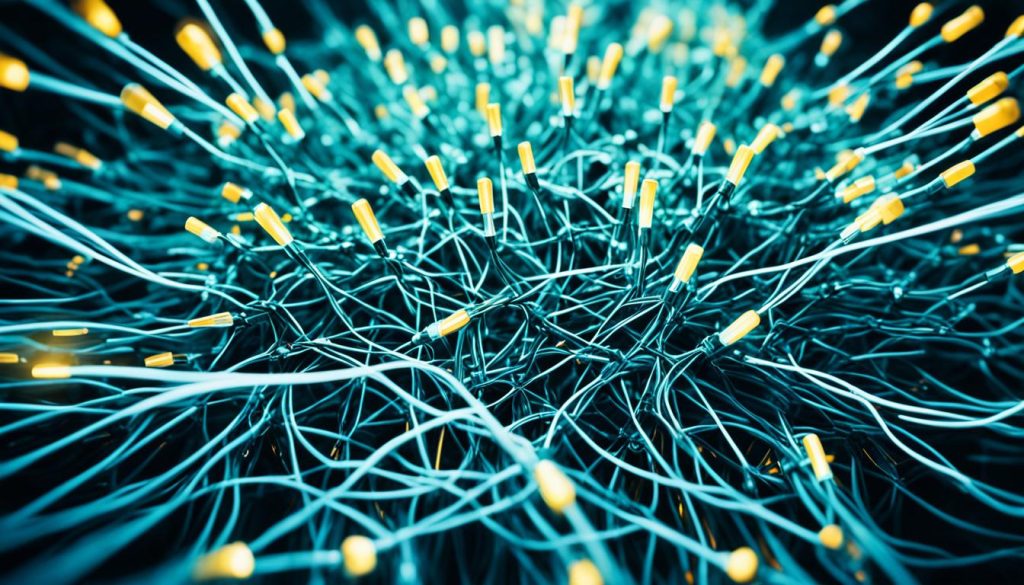

One of the most significant benefits of HPC clusters for neural network training is parallel processing. By distributing the computational workload across multiple nodes, HPC clusters can reduce training time substantially, although the gain depends on how much time the nodes spend communicating with each other. With faster training, researchers can iterate and experiment with models more quickly and tackle problems that would be impractical on a single machine.
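To make the idea of distributing the workload concrete, here is a minimal single-process sketch of data parallelism, assuming a toy linear model trained with mean squared error. Each simulated worker computes the gradient on its own equal-sized shard of the batch, and the per-worker gradients are then averaged. The function names and the toy data are illustrative assumptions, not part of any specific framework.

```python
import math


def mse_gradient(w, b, xs, ys):
    """Gradient of mean((w*x + b - y)^2) with respect to w and b."""
    n = len(xs)
    dw = sum(2.0 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    db = sum(2.0 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return dw, db


def data_parallel_gradient(w, b, xs, ys, num_workers):
    """Simulate data parallelism: one gradient per shard, then average."""
    shard = len(xs) // num_workers
    if shard * num_workers != len(xs):
        raise ValueError("batch must divide evenly across workers")
    grads = [
        mse_gradient(w, b, xs[i * shard:(i + 1) * shard], ys[i * shard:(i + 1) * shard])
        for i in range(num_workers)
    ]
    dw = sum(g[0] for g in grads) / num_workers
    db = sum(g[1] for g in grads) / num_workers
    return dw, db


# Toy batch: y = 2x + 1, evaluated at an arbitrary starting point (w, b).
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.0 * x + 1.0 for x in xs]

full = mse_gradient(0.5, 0.1, xs, ys)
parallel = data_parallel_gradient(0.5, 0.1, xs, ys, num_workers=4)

# The two results should agree up to floating-point rounding.
print(math.isclose(full[0], parallel[0]), math.isclose(full[1], parallel[1]))
```

With equal-sized shards, the averaged gradient matches the full-batch gradient, which is why splitting a batch across nodes does not change the update the model receives.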

Through increased computing power, enhanced processing of big data, and parallel processing capabilities, high-performance computing clusters offer significant benefits for neural network training. These benefits empower researchers and developers to push the boundaries of AI, achieve breakthroughs in AI applications, and drive advancements in various fields, from image recognition to natural language processing.

Implementing High-Performance Computing Clusters for Neural Network Training

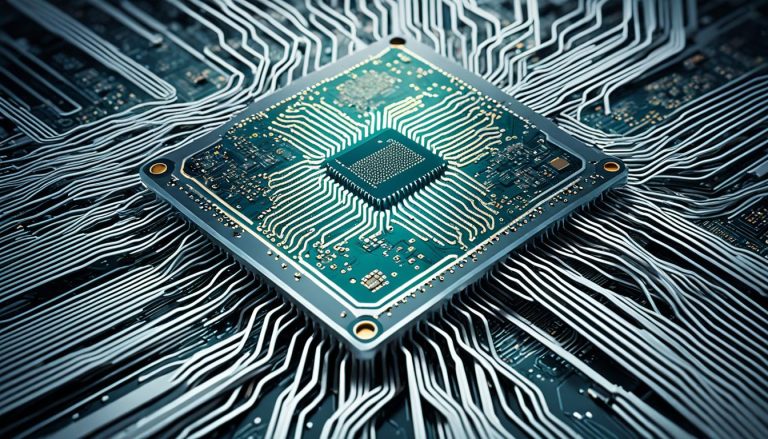

To implement high-performance computing clusters for neural network training, several key components and considerations are involved. Firstly, the hardware infrastructure of the HPC cluster needs to be carefully designed and optimized to handle the computational demands of neural network training. This includes selecting appropriate processors, GPUs, and network connections to ensure efficient and scalable computing power.

Secondly, software frameworks and libraries that support distributed training are used to enable parallel processing and communication between the nodes in the cluster. TensorFlow and Keras are deep learning frameworks that can run distributed training, while Horovod is a library dedicated to distributing training across workers. These tools provide the means to distribute the training workload and synchronize the gradients of the neural network across the cluster.
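Gradient synchronization is usually carried out with an all-reduce collective, and Horovod in particular is built around ring all-reduce. The following is a single-process simulation of a ring all-reduce in plain Python, intended only to show the data movement. It does not use any framework API, and the function name `ring_allreduce` and the list-of-lists representation of workers are assumptions made for the example.

```python
def ring_allreduce(worker_grads):
    """Simulate a ring all-reduce (sum) over equal-length gradient lists.

    Returns one list per worker; every list holds the element-wise sum.
    """
    n = len(worker_grads)
    length = len(worker_grads[0])
    # Split each gradient vector into n contiguous chunks.
    bounds = [(k * length) // n for k in range(n + 1)]
    data = [list(g) for g in worker_grads]

    def chunk(k):
        return range(bounds[k], bounds[k + 1])

    # Phase 1, reduce-scatter: after n - 1 steps, worker i holds the
    # complete sum for chunk (i + 1) % n.
    for step in range(n - 1):
        # Snapshot outgoing chunks first so all sends happen "at once".
        outgoing = []
        for i in range(n):
            k = (i - step) % n
            outgoing.append((k, [data[i][j] for j in chunk(k)]))
        for i, (k, values) in enumerate(outgoing):
            dest = (i + 1) % n
            for j, v in zip(chunk(k), values):
                data[dest][j] += v

    # Phase 2, all-gather: pass the completed chunks around the ring.
    for step in range(n - 1):
        outgoing = []
        for i in range(n):
            k = (i + 1 - step) % n
            outgoing.append((k, [data[i][j] for j in chunk(k)]))
        for i, (k, values) in enumerate(outgoing):
            dest = (i + 1) % n
            for j, v in zip(chunk(k), values):
                data[dest][j] = v

    return data


# Three simulated workers, each with its own local gradient.
local_grads = [
    [1, 2, 3, 4],
    [10, 20, 30, 40],
    [100, 200, 300, 400],
]
summed = ring_allreduce(local_grads)
averaged = [[v / len(local_grads) for v in row] for row in summed]
print(summed[0])
print(averaged[0])
```

Each worker only ever talks to its neighbor in the ring, and the amount of data a worker sends does not grow with the number of workers, which is the main reason this pattern is popular for synchronizing gradients.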

Lastly, optimizing the communication and synchronization process among nodes, such as implementing improved gradient synchronization strategies, further enhances the performance of the HPC cluster for neural network training. By carefully considering and implementing these components, researchers and developers can leverage the power of high-performance computing clusters to train neural networks more effectively and efficiently.
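A rough cost model can help when judging whether synchronization is likely to be the bottleneck. The sketch below assumes a ring all-reduce with no overlap between computation and communication, a fixed per-worker batch, and a simple latency-plus-bandwidth cost per transfer. The function names and all numbers in the example are hypothetical and chosen only for illustration.

```python
def ring_allreduce_seconds(grad_bytes, num_workers, bandwidth_bytes_per_s, latency_s=0.0):
    """Estimate the time for one ring all-reduce of `grad_bytes` bytes.

    Assumed model: 2 * (N - 1) transfer steps, each moving grad_bytes / N
    bytes and paying a fixed per-message latency.
    """
    if num_workers == 1:
        return 0.0
    steps = 2 * (num_workers - 1)
    per_step_bytes = grad_bytes / num_workers
    return steps * (latency_s + per_step_bytes / bandwidth_bytes_per_s)


def scaling_efficiency(compute_s, grad_bytes, num_workers, bandwidth_bytes_per_s, latency_s=0.0):
    """Fraction of each training step spent on useful computation.

    Assumes communication is not overlapped with computation.
    """
    comm_s = ring_allreduce_seconds(grad_bytes, num_workers, bandwidth_bytes_per_s, latency_s)
    return compute_s / (compute_s + comm_s)


# Hypothetical setup: 100 MB of gradients, 8 workers, a 10 Gb/s link
# (1.25e9 bytes per second), and 0.2 s of computation per step.
comm = ring_allreduce_seconds(1e8, 8, 1.25e9)
eff = scaling_efficiency(0.2, 1e8, 8, 1.25e9)
print(f"communication per step: {comm:.3f} s, efficiency: {eff:.2f}")
```

Under these assumptions, a faster interconnect, smaller gradients (for example through compression), or overlapping communication with the backward pass would all raise the efficiency figure.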

Components for Implementing High-Performance Computing Clusters

| Components | Considerations |
|---|---|
| Hardware infrastructure | Selecting appropriate processors, GPUs, and network connections; designing for efficient and scalable computing power |
| Software frameworks and libraries | Using frameworks and libraries that support distributed training, such as TensorFlow, Keras, and Horovod; enabling parallel processing and communication between nodes |
| Communication and synchronization | Implementing improved gradient synchronization strategies; optimizing communication processes among nodes |

Case Studies: High-Performance Computing Clusters in Neural Network Training

Several case studies highlight the successful utilization of high-performance computing clusters in neural network training. These real-world examples demonstrate the effectiveness of HPC clusters in handling large datasets, complex models, and parallel processing, leading to significant improvements in AI applications.

Researchers have used HPC clusters to train convolutional neural networks (CNNs) for weed classification in agriculture. By combining the storage and processing capabilities of HPC clusters, they achieved faster and more accurate classification of weed species, aiding the automation and optimization of crop field management.

HPC clusters have also been used to train deep neural networks for image recognition and natural language processing tasks. Through parallel processing and efficient distribution of computational workload, these studies have showcased the power of HPC clusters in enabling faster and more accurate AI model training.

By leveraging high-performance computing clusters, researchers have been able to overcome the limitations imposed by computational complexity and data size. These case studies provide evidence of the value that HPC brings to neural network training, demonstrating its potential in accelerating AI advancements and revolutionizing various industries.

Conclusion

High-performance computing clusters have proven to be indispensable in the field of neural network training. These clusters provide the increased computing power required to handle the high computational complexity of training large and complex neural networks. By leveraging the capabilities of HPC clusters, researchers and developers can optimize the training process, leading to faster and more efficient AI advancements.

The benefits of HPC clusters extend beyond computing power. These clusters enable the processing and analysis of big data, allowing researchers to train models on diverse and comprehensive datasets, resulting in more accurate and robust AI models. Additionally, HPC clusters support parallel processing by distributing the computational workload across multiple nodes, significantly reducing training time and enabling faster iterations and experimentation.

Implementing high-performance computing clusters for neural network training requires careful consideration of hardware infrastructure, specialized software frameworks, and communication strategies. By making the right choices in these areas, researchers and developers can unlock the full potential of HPC clusters and further enhance the efficiency and effectiveness of neural network training.

With the continuous evolution of high-performance computing technology, the future holds even greater possibilities for advancing neural network training and driving further breakthroughs in AI research and applications. High-performance computing clusters will continue to play a crucial role in this journey, fueling innovation and pushing the boundaries of what is possible in the field of AI.

FAQ

What is the role of high-performance computing clusters in neural network training?

High-performance computing clusters optimize the training process of deep neural networks by providing increased computing power, facilitating big data processing, and enabling parallel processing.

How do high-performance computing clusters benefit neural network training?

High-performance computing clusters allow for the training of larger and more complex models, facilitate the processing and analysis of big data, and significantly reduce the training time of neural networks.

What components are involved in implementing high-performance computing clusters for neural network training?

Implementing high-performance computing clusters requires carefully designing and optimizing the hardware infrastructure, utilizing software frameworks for distributed computing, and optimizing communication and synchronization strategies among nodes.

Can you provide examples of successful utilization of high-performance computing clusters in neural network training?

High-performance computing clusters have been used for weed classification in agriculture, image recognition, and natural language processing, leading to faster and more accurate results in these applications.

What role do high-performance computing clusters play in accelerating AI advancements and research breakthroughs?

High-performance computing clusters play a critical role in optimizing neural network training, enabling researchers to train larger models, process big data, and iterate on their models more quickly, leading to faster advancements in AI research and applications.
