AI accelerators speed up artificial intelligence workloads, above all the training and inference of neural networks. They combine specialized hardware with massive parallelism, so they run these workloads far more efficiently than general-purpose processors.

In data centers, AI accelerators such as Cerebras’ Wafer-Scale Engine (WSE) are unlocking new possibilities for AI research. With increased compute power, enhanced memory, and improved communication bandwidth, data center AI accelerators enable faster and more scalable AI experiments and analysis.

At the edge, AI accelerator IP is integrated into edge System-on-Chip (SoC) devices, delivering near-instantaneous results for real-time applications like interactive programs and industrial robotics. The energy efficiency and low power consumption of these edge AI accelerators make them ideal for edge computing scenarios.

Key Takeaways:

- AI accelerators enhance the performance of neural network tasks in artificial intelligence.

- Data center AI accelerators like Cerebras’ WSE offer increased compute power and scalability for AI research.

- Edge AI accelerators integrated into SoC devices provide near-instantaneous results for real-time applications.

- Edge AI accelerators are energy-efficient and draw little power, making them well suited to edge computing.

- The integration of AI accelerators in both data centers and edge devices is driving innovation in various industries.

How AI Accelerators Work

AI accelerators speed up machine learning algorithms through parallel processing and specialized hardware. Most neural network computation consists of many independent multiply-accumulate operations, so an accelerator can run thousands of them at once and finish complex computations quickly.
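To make the idea of parallel processing concrete, here is a minimal Python sketch of a single dense layer. Each output value is a dot product that depends on no other output, which is what lets hardware compute them side by side. The function names and the tiny weight matrix are made up for illustration, and Python threads only stand in for hardware lanes: they show the structure of the parallelism, not a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(weights_row, inputs):
    """Dot product for one output neuron; it depends on no other neuron."""
    return sum(w * x for w, x in zip(weights_row, inputs))


def dense_layer_sequential(weights, inputs):
    """Compute every output one after another, as a single core would."""
    return [dot(row, inputs) for row in weights]


def dense_layer_parallel(weights, inputs, workers=4):
    """Hand each independent dot product to a worker.

    Threads are only a stand-in for parallel hardware lanes here.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map keeps results in the same order as the input rows.
        return list(pool.map(lambda row: dot(row, inputs), weights))


# Hypothetical 4x3 weight matrix and 3-element input vector.
weights = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [0, 1, 0]]
inputs = [1, 0, -1]

print(dense_layer_sequential(weights, inputs))
print(dense_layer_parallel(weights, inputs))
```

Both calls return the same list, because the order in which the rows are processed does not affect the result.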

In data centers, AI accelerators provide the computational power, memory, and communication bandwidth required for extensive AI research. These powerful machines, such as Cerebras’ Wafer-Scale Engine (WSE), are designed to handle immense workloads and support the development and training of neural networks.

On the other hand, AI accelerators for edge computing prioritize energy efficiency and low power consumption while delivering fast and accurate results. These accelerators are integrated into edge System-on-Chip (SoC) devices, enabling real-time inference and decision-making without relying on cloud-based processing.

There are different types of AI accelerators, each with its own unique strengths and applications. Graphics Processing Units (GPUs) offer versatility and programmability, making them suitable for a wide range of AI workloads. Tensor Processing Units (TPUs) excel in matrix operations, ensuring optimal performance for neural networks. Field-Programmable Gate Arrays (FPGAs) provide flexibility, allowing developers to customize the hardware for specific tasks. Meanwhile, Application-Specific Integrated Circuits (ASICs) are specialized chips designed for specific AI workloads, providing task-specific hardware for enhanced performance and energy efficiency.
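Matrix-oriented accelerators generally work on a matrix product one fixed-size block at a time, so that each block fits into fast on-chip memory. The sketch below shows that tiling pattern in plain Python. The tile size of 2 and the function names are assumptions chosen for readability; real hardware uses much larger tiles and runs the inner work in parallel.

```python
def matmul_naive(a, b):
    """Reference matrix product using the textbook triple loop."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def matmul_tiled(a, b, tile=2):
    """Same product, computed one tile-by-tile block at a time."""
    n, inner, m = len(a), len(b), len(b[0])
    out = [[0] * m for _ in range(n)]
    for i0 in range(0, n, tile):              # block of output rows
        for j0 in range(0, m, tile):          # block of output columns
            for k0 in range(0, inner, tile):  # block of the shared dimension
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = out[i][j]
                        for k in range(k0, min(k0 + tile, inner)):
                            acc += a[i][k] * b[k][j]  # multiply-accumulate
                        out[i][j] = acc
    return out


a = [[1, 2, 3], [4, 5, 6]]
b = [[7, 8], [9, 10], [11, 12]]
print(matmul_tiled(a, b))
```

The tiled version produces the same result as the naive one. Only the order of the multiply-accumulate steps changes, which is what gives hardware the chance to reuse data already held close to the compute units.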

The integration of AI accelerators in both data centers and edge devices significantly improves the processing capabilities and efficiency of neural networks. These powerful components pave the way for advancements in AI technology and drive innovation across various industries.

With their parallel processing capabilities and specialized hardware, AI accelerators are transforming the landscape of machine learning. They enable the efficient execution of neural network tasks, propelling AI research and applications to new heights.

Benefits of AI Accelerators

AI accelerators offer several benefits in machine learning applications. Their energy efficiency is particularly noteworthy: on suitable workloads they can be 100 to 1,000 times more efficient than general-purpose compute machines. This means that AI accelerators can perform complex neural network tasks while consuming significantly less power, making them ideal for energy-conscious deployments.
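Efficiency comparisons like the one above usually come down to work done per unit of energy. The short sketch below shows the arithmetic. All throughput and power figures are hypothetical and chosen only to make the calculation easy to follow; they are not measurements of any real device.

```python
def inferences_per_joule(throughput_per_second, power_watts):
    """Inferences per second divided by joules per second (watts)."""
    return throughput_per_second / power_watts


def energy_per_inference_joules(throughput_per_second, power_watts):
    """Energy spent on a single inference."""
    return power_watts / throughput_per_second


# Hypothetical devices: numbers are illustrative, not benchmarks.
general_purpose = {"throughput_per_second": 100.0, "power_watts": 200.0}
accelerator = {"throughput_per_second": 1000.0, "power_watts": 10.0}

baseline = inferences_per_joule(**general_purpose)
accelerated = inferences_per_joule(**accelerator)
efficiency_gain = accelerated / baseline

print(f"general purpose: {baseline} inferences per joule")
print(f"accelerator:     {accelerated} inferences per joule")
print(f"efficiency gain: {efficiency_gain}x")
```

With these made-up figures, the accelerator is ten times faster and draws one twentieth of the power, so the efficiency gain is the product of the two factors.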

Another key advantage of AI accelerators is their ability to reduce latency. By processing data and running algorithms more quickly, AI accelerators can provide faster and more responsive results. This is especially important in real-time applications such as advanced driver assistance systems (ADAS) where timely decision-making is critical for safety.
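One way to reason about latency in a real-time system is as a per-frame time budget. The sketch below checks whether a processing pipeline fits that budget. The 30 frames per second rate, the stage names, and every latency value are hypothetical and serve only to illustrate the calculation.

```python
def frame_budget_ms(frames_per_second):
    """Time available to process one frame, in milliseconds."""
    return 1000.0 / frames_per_second


def meets_deadline(stage_latencies_ms, frames_per_second):
    """True if the stages together finish within one frame period."""
    total = sum(stage_latencies_ms.values())
    return total <= frame_budget_ms(frames_per_second)


# Hypothetical pipelines; only the inference stage differs.
without_accelerator = {"capture": 5.0, "inference": 40.0, "actuation": 3.0}
with_accelerator = {"capture": 5.0, "inference": 8.0, "actuation": 3.0}

print(meets_deadline(without_accelerator, frames_per_second=30))
print(meets_deadline(with_accelerator, frames_per_second=30))
```

At 30 frames per second the budget is about 33 ms, so in this example only the pipeline with the faster inference stage keeps up.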

The scalability of AI accelerators is also a significant benefit. With their ability to handle large neural networks and process vast quantities of data, AI accelerators enable applications to efficiently handle the increasing demands of machine learning tasks.

Furthermore, AI accelerators fit into a heterogeneous architecture, in which one system combines several specialized processors and assigns each the tasks it handles best. This lets the system deliver the computational performance that AI applications require and improves the effectiveness of machine learning algorithms.

To visualize the benefits of AI accelerators, consider the following table:

| Benefit | Description |
|---|---|
| Energy Efficiency | AI accelerators are significantly more power-efficient than general-purpose compute machines, reducing energy consumption. |
| Latency Reduction | AI accelerators process data quickly, leading to faster and more responsive results, which is invaluable in real-time applications. |
| Scalability | AI accelerators can handle large neural networks and process voluminous data quantities, accommodating the needs of complex machine learning tasks. |
| Heterogeneous Architecture | AI accelerators have specialized processors designed for specific tasks, optimizing computational performance and enhancing overall system efficiency. |

In summary, AI accelerators offer numerous benefits such as energy efficiency, reduced latency, scalability, and advanced heterogeneous architecture. These advantages position AI accelerators as indispensable tools for accelerating machine learning applications and driving innovation in various industries.

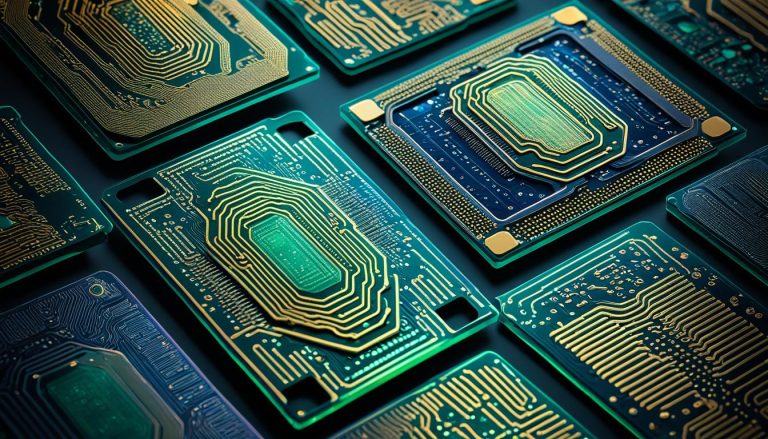

Different Types of AI Accelerators

When it comes to AI accelerators, there are several options available, each with its own strengths and use cases. Let’s explore some of the different types:

1. GPUs (Graphics Processing Units)

GPUs are widely used for their parallel processing capabilities, which make them a natural fit for AI workloads. They excel at applying the same operation to many data elements at once, which is the pattern most neural network computations follow. GPUs are commonly used in various AI applications, including image and speech recognition, natural language processing, and deep learning.

2. TPUs (Tensor Processing Units)

Developed by Google, TPUs specialize in matrix operations, making them highly efficient for AI tasks. They strike a balance between performance and energy efficiency, delivering faster processing speeds while minimizing power consumption. TPUs are designed to excel in AI workloads and are particularly well-suited for large-scale machine learning tasks.

3. FPGAs (Field-Programmable Gate Arrays)

FPGAs are programmable hardware devices that can be customized to perform specific tasks. They offer flexibility and versatility, making them popular for prototyping and experimentation. FPGAs can be reprogrammed to adapt to different AI workloads, allowing for efficient and customized processing.

4. ASICs (Application-Specific Integrated Circuits)

ASICs are specialized integrated circuits designed for specific AI workloads. They provide enhanced performance and energy efficiency by tailoring the hardware specifically to the task at hand. Examples include AWS Inferentia, which is optimized for high-performance inference, and Intel Habana Gaudi, which targets deep learning training.

Each type of AI accelerator offers unique advantages and is suitable for different applications. Here’s a summary of their key characteristics:

| Type | Advantages | Use Cases |
|---|---|---|
| GPUs | Parallel processing capabilities, versatility, programmability | Image recognition, natural language processing, deep learning |
| TPUs | Specialized for matrix operations, performance, energy efficiency | Large-scale machine learning tasks, AI research |
| FPGAs | Flexibility, programmability, customization | Prototyping, experimentation, adaptable AI workloads |
| ASICs | Task-specific design, enhanced performance, energy efficiency | Inference in machine learning models, specialized AI workloads |

Choosing the right AI accelerator depends on the specific requirements of the application, such as the scale of the workload, the need for customization, and the desired balance between performance and energy efficiency.
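As a rough illustration, the criteria above can be written down as a small rule-of-thumb function. This is a deliberately simplified sketch: the function name, its parameters, and the order of the checks are assumptions made for this example, and a real decision would also weigh cost, availability, and software support.

```python
def suggest_accelerator(needs_reprogrammable_hardware=False,
                        fixed_workload=False,
                        large_scale_matrix_training=False):
    """Map coarse requirements to an accelerator type (illustrative only)."""
    if needs_reprogrammable_hardware:
        return "FPGA"  # hardware can be customized and reprogrammed
    if fixed_workload:
        return "ASIC"  # task-specific design for a stable workload
    if large_scale_matrix_training:
        return "TPU"   # specialized for matrix operations at scale
    return "GPU"       # versatile, programmable default


print(suggest_accelerator(needs_reprogrammable_hardware=True))
print(suggest_accelerator(fixed_workload=True))
print(suggest_accelerator(large_scale_matrix_training=True))
print(suggest_accelerator())
```

The order of the checks encodes a priority: the need for customization is treated as the strongest constraint, and the versatile GPU is the fallback when no special requirement applies.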

Programming AI Accelerators

Programming an AI accelerator means mapping high-level programs written in popular frameworks like TensorFlow, PyTorch, MXNet, or Keras onto the hardware so that they run efficiently. The goal is to let users write high-level code without having to deal with the intricate details of the underlying hardware architecture.

Even so, hardware-specific features and optimizations still matter. For example, some AI accelerators support low-precision arithmetic, such as 8-bit integers or 16-bit floating point, which can significantly improve performance and energy efficiency at a small cost in numerical accuracy.
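To show what low-precision arithmetic involves, here is a minimal sketch of symmetric 8-bit quantization: floating-point values are scaled into the integer range -127 to 127 and later scaled back. This is one common scheme among several, and the function names and sample weights are assumptions for illustration rather than the method of any particular accelerator.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the integers back to approximate floating-point values."""
    return [q * scale for q in quantized]


# Hypothetical weights for illustration.
weights = [0.5, -1.27, 0.25, 0.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

print(quantized)
print(restored)
```

Each restored value differs from the original by at most half a quantization step (`scale / 2`), which is the accuracy traded for smaller, cheaper arithmetic.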

One widely used framework is TensorFlow, which can target several kinds of AI accelerators. This lets users train deep neural networks and run inference on the chosen hardware with little or no change to their model code. This flexibility makes TensorFlow a common choice among AI developers and researchers.

TensorFlow: Simplifying AI Development

TensorFlow, an open-source framework developed by Google, offers a high-level programming interface that allows developers to define and train machine learning models efficiently. Through TensorFlow’s abstraction layer, developers can write code that focuses on the logic and algorithms of their AI models instead of dealing with the intricacies of hardware optimization.

With TensorFlow, one can write code that is largely hardware-agnostic, which makes it easier to deploy and run models on different AI accelerators and platforms and to make good use of the available computational power. CPUs, GPUs, and TPUs are supported directly, while FPGAs and other ASICs generally rely on vendor-provided toolchains or plugins. TensorFlow’s extensive ecosystem and community support also contribute to its popularity among AI practitioners.
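The idea behind hardware-agnostic code can be sketched as a dispatch layer: the user calls one high-level operation, and the framework picks an implementation for whatever device is available. The toy example below is not TensorFlow's API. The registry, the backend names, and the `relu` operation are all hypothetical, and the "accelerator" backend is ordinary Python standing in for a vendor kernel.

```python
BACKENDS = {}


def register_backend(name):
    """Decorator that records an implementation under a device name."""
    def decorator(fn):
        BACKENDS[name] = fn
        return fn
    return decorator


@register_backend("cpu")
def relu_cpu(values):
    return [v if v > 0 else 0 for v in values]


@register_backend("accelerator")
def relu_accelerator(values):
    # Stand-in for a call into a vendor kernel: same result, different path.
    return [max(v, 0) for v in values]


def relu(values, available_devices):
    """High-level op: use the first preferred backend that is available."""
    for name in ("accelerator", "cpu"):
        if name in available_devices and name in BACKENDS:
            return name, BACKENDS[name](values)
    raise RuntimeError("no usable backend")


print(relu([-1, 2, 0], available_devices={"cpu"}))
print(relu([-1, 2, 0], available_devices={"cpu", "accelerator"}))
```

The calling code is identical in both cases. Only the set of available devices changes which implementation runs, which is the property that lets model code stay the same across hardware.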

“TensorFlow’s compatibility with different AI accelerators simplifies the process of deploying machine learning models on specialized hardware. It enables researchers and developers to focus on the model’s design and functionality, helping them bring their ideas to life more quickly and efficiently.” – Dr. Jane Martinez, AI Researcher

While TensorFlow is a popular choice, other frameworks like PyTorch, MXNet, and Keras also offer similar capabilities and compatibility with various AI accelerators. Developers have the flexibility to choose the framework that best suits their needs and preferences.

Overall, programming AI accelerators using frameworks like TensorFlow enables efficient utilization of hardware resources, simplifies the development process, and accelerates the deployment of AI models across different platforms and architectures.

Evolution of AI Accelerators

AI accelerators have evolved in response to the increasing demands of machine learning tasks. With the aim of better supporting AI applications and enhancing machine learning performance, existing CPU and GPU architectures have undergone significant modifications. These modifications include the implementation of low-precision arithmetic and specialized compute paths tailored to common deep neural network tasks.

The line between GPUs and AI accelerators is becoming increasingly blurred as GPU architectures become more specialized for AI workloads. This enables GPUs to deliver enhanced performance and efficiency in machine learning tasks. Meanwhile, architectures built specifically for machine learning have emerged, such as Tensor Processing Units (TPUs), and long-established Field-Programmable Gate Arrays (FPGAs) have been adopted for AI workloads.

FPGAs provide programmable hardware devices that can be customized for various tasks. They offer flexibility and are commonly used in prototyping and experimentation. On the other hand, TPUs, developed by Google, excel at matrix operations and strike a balance between performance and energy efficiency.

Ongoing research and development efforts continue to propel advancements in AI accelerator technology. Each type of hardware, whether it be CPUs, GPUs, FPGAs, or TPUs, has its distinct advantages and considerations, allowing for a diverse range of options to accommodate different machine learning requirements.

“The integration of specialized compute paths and the blurring line between GPUs and AI accelerators demonstrate the commitment to improving machine learning performance. As the field advances, we can expect continued innovation in AI accelerator technology, further enhancing the efficiency and effectiveness of machine learning tasks.”

The Future of AI Accelerators

As technology progresses, AI accelerators are becoming indispensable tools in the realm of artificial intelligence. The evolution of hardware trends and CPU/GPU architectures demonstrates the industry’s dedication to optimizing machine learning performance. With ongoing enhancements in neural network processing capabilities, AI accelerators will continue to play a pivotal role in the advancement of machine learning, driving innovation across diverse industries.

| AI Accelerator | Advantages | Considerations |
|---|---|---|
| GPUs | Versatility and programmability | Lower energy efficiency compared to specialized architectures |
| FPGAs | Flexibility and customization | Higher power consumption in certain use cases |
| TPUs | High performance in matrix operations | Specifically designed for Google’s ecosystem |
| CPU/GPU Architectures | Improved support for AI applications | May require modifications for efficient AI tasks |

Conclusion

AI accelerators are instrumental in driving advancements in machine learning efficiency. With their ability to boost the performance and efficiency of neural network tasks, AI accelerators offer significant advantages in terms of energy efficiency, latency reduction, and scalability. These accelerators play a crucial role in various industries by enabling faster processing and real-time results.

By choosing among different types of AI accelerators, such as GPUs, TPUs, FPGAs, and ASICs, organizations can match the hardware to their specific needs and optimize their machine learning workflows. Frameworks like TensorFlow make it easier to run high-level code on the targeted hardware, simplifying the development process.

As AI accelerators continue to evolve, we can expect further advancements in machine learning efficiency and performance. The ongoing research and development in this field will drive innovation, enabling organizations to leverage the power of AI for solving complex problems, making data-driven decisions, and unlocking new possibilities.

FAQ

What are AI accelerators?

AI accelerators are high-performance parallel computation machines specifically designed for efficient processing of AI workloads like neural networks. They leverage parallel processing capabilities and specialized hardware features tailored for AI tasks.

How do AI accelerators work?

In data centers, AI accelerators like Cerebras’ Wafer-Scale Engine (WSE) offer more compute, memory, and communication bandwidth, enabling faster and more scalable AI research. At the edge, AI accelerator IP is integrated into edge System-on-Chip (SoC) devices, delivering near-instantaneous results for applications like interactive programs and industrial robotics.

What are the benefits of AI accelerators?

AI accelerators enhance the efficiency and efficacy of machine learning algorithms by leveraging parallel processing and specialized hardware. They are highly energy-efficient, reduce latency, enable scalability, and support specialized processors for specific tasks.

What are the different types of AI accelerators?

There are various types of AI accelerators available, including GPUs, tensor processing units (TPUs), FPGAs, and ASICs. Each has its own advantages and use cases, such as GPUs being versatile and programmable, TPUs excelling at matrix operations, FPGAs offering flexibility, and ASICs providing task-specific hardware.

How can AI accelerators be programmed?

Programming AI accelerators involves mapping high-level programs written in frameworks like TensorFlow, PyTorch, MXNet, or Keras to run on the hardware. TensorFlow can target several kinds of AI accelerators, making it easier to train deep neural networks and run inference on the chosen hardware.

How have AI accelerators evolved?

AI accelerators have evolved to meet the growing demands of machine learning tasks. Existing CPU and GPU architectures have been modified to better support AI applications, new architectures like TPUs have been developed specifically for machine learning, and established FPGAs have been adopted for AI workloads.