Welcome to our article on Multilayer Perceptrons (MLPs), a classic architecture in the field of supervised learning. In the realm of AI and machine learning, MLPs have played a vital role in shaping neural network models.

MLPs are artificial neural networks that consist of multiple layers of interconnected neurons. Each neuron utilizes a non-linear activation function, allowing MLPs to effectively learn complex patterns in data. This makes MLPs powerful models for various tasks, including classification, regression, and pattern recognition.

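MLPs have found extensive applications in diverse fields such as image recognition, natural language processing, and speech recognition. According to the universal approximation theorem, an MLP with at least one hidden layer and a suitable non-linear activation function can approximate any continuous function on a closed, bounded input region to arbitrary accuracy, provided it has enough hidden neurons. This property has positioned MLPs as a fundamental building block in deep learning and neural network research.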

In this article, we will explore the basics of neural networks, discuss different types of neural networks, and delve into the architectural components of MLPs. We will provide you with a comprehensive understanding of MLPs and their significance in the world of AI and machine learning.

Basics of Neural Networks

Neural networks, also known as artificial neural networks (ANNs), are fundamental tools in machine learning. They are inspired by the structure and function of the human brain and consist of interconnected nodes called neurons. Neurons receive input signals, perform computations using an activation function, and produce output signals that are passed to other neurons in the network. The network is organized into layers, including an input layer, hidden layers where computations are performed, and an output layer for making predictions or decisions. Neurons in adjacent layers are connected by weighted connections, which transmit signals from one layer to the next. During the training process, the network adjusts its weights based on examples provided in a training dataset.
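
To make the computation inside a single neuron concrete, here is a minimal Python sketch. It assumes a sigmoid activation function and an optional bias term; the helper names `sigmoid` and `neuron_output` and the numeric values are illustrative choices, not something this article prescribes.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias=0.0):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Illustrative values only: three input signals and their connection weights.
signal = neuron_output([0.5, -1.0, 2.0], [0.4, 0.3, 0.1])
print(signal)
```

The returned value is the output signal that would be passed on to neurons in the next layer.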

Neural networks, with their interconnected nodes and activation functions, form the backbone of modern machine learning. By mimicking the behavior of neurons in the human brain, these networks can process and analyze complex data, enabling them to perform a wide range of tasks such as image recognition, natural language processing, and predictive modeling.

The neural network structure consists of layers, each playing a specific role in the network’s computations. The input layer receives initial input data, whether it’s numerical features, image pixels, or text representations. This data is then passed through the network’s hidden layers, where computations are performed using activation functions. The hidden layers allow the network to extract higher-level features and representations from the input data. Finally, the output layer produces the network’s predictions or decisions based on the computations performed.
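
The flow from input layer through hidden layers to output layer can be sketched as a simple loop. This example assumes sigmoid activations and a hypothetical 2-3-1 network whose weights are hand-picked rather than trained; `dense_layer` and `forward` are illustrative names.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases):
    """One fully connected layer: every neuron sees every input."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, neuron_weights)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the data through each layer in turn, from input to output."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations

# Hypothetical 2-3-1 network with hand-picked (untrained) weights.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[0.7, -0.5, 0.2]], [0.05]),                                # output layer
]
prediction = forward([1.0, 2.0], layers)
print(prediction)
```

Each entry in `layers` holds one row of weights per neuron, so the hidden layer here turns two input values into three intermediate features before the output layer combines them into a single prediction.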

Neurons in adjacent layers are interconnected through weighted connections. These connections determine the strength of the signal transmitted from one neuron to the next. The weights are adjusted during the training process to optimize the network’s performance on a specific task. By updating the weights based on the examples provided in a training dataset, the network learns to make accurate predictions or decisions.

Overall, neural networks serve as powerful models for machine learning, enabling computers to learn and make decisions based on examples. The interconnected nodes, activation functions, layers, and weighted connections form the building blocks of these networks, allowing them to process and analyze complex data effectively.

Advantages of Neural Networks

- Neural networks can learn complex patterns and relationships in data, making them suitable for a wide range of tasks.

- They can handle noisy and incomplete data by learning from examples and making predictions based on patterns.

- With enough representative training data and appropriate regularization, neural networks can generalize well to unseen data, allowing them to make predictions or decisions in real-world scenarios.

Disadvantages of Neural Networks

- Training neural networks can require significant computational resources and time, especially for large models and datasets.

- They can be prone to overfitting, where the network becomes too specialized in the training data and performs poorly on unseen data.

- Interpretability can be a challenge with neural networks, as the complex computations and numerous parameters make it difficult to understand how decisions are made.

Types of Neural Networks

Neural networks come in several types, each designed for specific tasks and architectural requirements. Let’s explore the different types:

Feedforward Neural Networks (FNN)

Feedforward Neural Networks (FNN) are the simplest form of artificial neural networks (ANNs). In FNNs, information flows in one direction, from the input layer to the output layer. These networks are commonly used for tasks such as classification and regression.

Recurrent Neural Networks (RNN)

Recurrent Neural Networks (RNN) are designed to process sequential data. They have connections that form directed cycles, allowing them to capture temporal dependencies in the data. RNNs have been successful in applications such as speech recognition and natural language processing (NLP).
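
As a rough sketch of how the recurrent connection carries information from one step to the next, the following Python example uses a single hidden unit with a tanh activation. The function names and weight values are assumptions chosen for illustration; real RNNs use weight matrices and learn them from data.

```python
import math

def rnn_step(x_t, h_prev, w_x, w_h, b):
    """New hidden state from the current input and the previous hidden state."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.8, w_h=0.5, b=0.0):
    """Feed the sequence one element at a time, carrying the state forward."""
    h = 0.0  # initial hidden state
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, w_x, w_h, b)
        states.append(h)
    return states

# The same values in a different order lead to a different final state,
# because each step depends on what came before.
forward_states = run_rnn([1.0, 0.0, -1.0])
reversed_states = run_rnn([-1.0, 0.0, 1.0])
print(forward_states[-1], reversed_states[-1])
```

The dependence of the final state on input order is what lets an RNN capture temporal structure that a purely feedforward network would ignore.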

Convolutional Neural Networks (CNN)

Convolutional Neural Networks (CNN) are specialized for efficiently processing grid-like data, such as images. They use convolutional layers to automatically learn hierarchical representations from the input data, enabling them to excel in tasks like image recognition and computer vision.
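
The core operation of a convolutional layer can be sketched in a few lines of plain Python. This example assumes no padding and a stride of 1, and uses a hand-picked kernel; in a real CNN the kernel values are learned during training. The name `conv2d` is illustrative.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum products.

    Like most deep learning libraries, this computes a cross-correlation:
    the kernel is not flipped.
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]

# A 4x4 "image" with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked kernel that responds to left-to-right increases in brightness.
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map is non-zero only where the kernel overlaps the edge, which shows how the same small set of weights detects a local pattern anywhere in the grid.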

Long Short-Term Memory Networks (LSTM) and Gated Recurrent Units (GRU)

Long Short-Term Memory Networks (LSTM) and Gated Recurrent Units (GRU) are advanced types of RNNs that mitigate the vanishing gradient problem, in which the training signal shrinks as it is propagated back through many time steps. They do so with gates that control what information is kept, updated, or discarded at each step, which allows long-term dependencies in sequential data to be modeled. LSTMs and GRUs have been widely used in tasks involving time series data and natural language processing.
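
The vanishing gradient problem itself can be illustrated numerically. The sketch below does not implement an LSTM or GRU; it assumes a plain one-unit recurrence `h_t = tanh(w * h_{t-1})` with an illustrative weight of 0.9 and measures how strongly the final state still depends on the initial state as the number of steps grows.

```python
import math

def gradient_through_time(steps, w=0.9, h0=0.5):
    """Derivative of h_T with respect to h_0 for h_t = tanh(w * h_{t-1})."""
    h, grad = h0, 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        grad *= w * (1.0 - h * h)  # chain rule: d tanh(w*h) / dh
    return grad

for steps in (1, 10, 50):
    print(steps, gradient_through_time(steps))
```

Every factor in the product is smaller than 1 here, so the gradient shrinks roughly exponentially with the number of steps. Gated architectures are designed to keep this signal from decaying so quickly.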

Autoencoders

Autoencoders are unsupervised learning models used for dimensionality reduction and feature extraction. They aim to reconstruct the input data at the output layer, learning a compressed representation in the hidden layers. Autoencoders have applications in anomaly detection, denoising, and data visualization.
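
The idea of compressing and then reconstructing the input can be sketched with a deliberately tiny example. It assumes two-dimensional data that lies close to the line y = 2x, and it uses hand-picked encoder and decoder weights instead of learned ones; a real autoencoder would find such weights by training.

```python
def encode(point):
    """Compress a 2-D point to a single number (the hidden representation)."""
    x, y = point
    return (x + 2.0 * y) / 5.0

def decode(code):
    """Expand the 1-D code back to a 2-D point."""
    return (code, 2.0 * code)

def reconstruction_error(point):
    """Squared distance between a point and its reconstruction."""
    rx, ry = decode(encode(point))
    return (point[0] - rx) ** 2 + (point[1] - ry) ** 2

typical = (3.0, 6.0)  # lies on the line y = 2x
unusual = (3.0, 0.0)  # far from that line
print(reconstruction_error(typical), reconstruction_error(unusual))
```

Points that match the structure the model has captured are reconstructed almost perfectly, while points that do not are reconstructed poorly. A large reconstruction error is the signal commonly used for anomaly detection.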

Generative Adversarial Networks (GAN)

Generative Adversarial Networks (GAN) consist of a generator and a discriminator that are trained simultaneously in a competitive setting. The generator aims to generate synthetic data that is indistinguishable from real data, while the discriminator tries to differentiate between real and fake data samples. GANs have achieved significant success in generating realistic images, videos, and other types of synthetic data.

Multilayer Perceptrons (MLPs)

Multilayer Perceptrons (MLPs) are a type of feedforward neural network that has played a crucial role in various fields such as image recognition, natural language processing, and speech recognition. MLPs consist of fully connected neurons with a non-linear activation function, making them powerful models for complex pattern recognition tasks.
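
Why the non-linear activation matters can be shown with a small sketch. Without it, two stacked fully connected layers collapse into a single linear layer. The example below assumes bias-free layers, hand-picked weights, and ReLU as the non-linearity, all chosen purely for illustration.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols_b = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols_b] for row in a]

def relu(vector):
    return [max(0.0, v) for v in vector]

W1 = [[1.0, -1.0], [-1.0, 1.0]]  # first layer: 2 inputs -> 2 hidden neurons
W2 = [[1.0, 1.0]]                # second layer: 2 hidden neurons -> 1 output
x = [2.0, 0.5]

stacked_linear = matvec(W2, matvec(W1, x))   # two layers, no activation
single_linear = matvec(matmul(W2, W1), x)    # one equivalent linear layer
with_relu = matvec(W2, relu(matvec(W1, x)))  # two layers with ReLU between
print(stacked_linear, single_linear, with_relu)  # [0.0] [0.0] [1.5]
```

With ReLU between the layers, this particular network computes the absolute difference of its two inputs, which no single linear layer can do.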

One of the key advantages of MLPs is their flexibility in architecture. They can be designed with multiple hidden layers, each containing numerous neurons. This allows MLPs to learn intricate relationships and capture nuanced features in the data. The interconnectedness of fully connected neurons within MLPs ensures that information flows efficiently through the network, enabling accurate predictions and classifications.
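
One practical consequence of this flexibility is that the number of trainable parameters grows quickly with the layer sizes. The following sketch counts weights and biases for a fully connected network; the layer sizes are hypothetical examples, and `count_parameters` is an illustrative helper name.

```python
def count_parameters(layer_sizes):
    """Weights plus biases for a fully connected network.

    Each neuron has one weight per neuron in the previous layer, plus one bias.
    """
    return sum(
        (n_in + 1) * n_out
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )

# Hypothetical architectures: 784 inputs, 10 outputs, varying hidden layers.
print(count_parameters([784, 32, 10]))       # 25450
print(count_parameters([784, 128, 64, 10]))  # 109386
```

More parameters give the network more capacity to fit intricate relationships, at the cost of more computation and a higher risk of overfitting.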

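MLPs have been applied to image recognition, particularly on small, fixed-size inputs such as images of handwritten digits, where each pixel is fed to one input neuron. For larger images, convolutional networks are usually preferred, because fully connected layers do not exploit the spatial structure of the image. With advancements in deep learning and neural network research, MLPs have nonetheless contributed significantly to the field’s progress by serving as a fundamental building block for developing more complex and sophisticated models.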

Furthermore, MLPs have also been extensively used in natural language processing tasks, such as sentiment analysis, text classification, and language translation. By leveraging their ability to recognize patterns and extract meaningful information from textual data, MLPs have facilitated advancements in various language-related applications.

In the domain of speech recognition, MLPs have played a crucial role in transforming spoken words into digital representations. By training on large datasets of spoken language, MLPs can recognize and interpret speech patterns, laying the foundation for technologies like voice assistants, transcription services, and voice-controlled devices.

Overall, Multilayer Perceptrons (MLPs) have proven to be a versatile and powerful architecture in the field of neural networks. Their fully connected neurons and non-linear activation functions, together with their use in image recognition, natural language processing, and speech recognition, have established them as an essential component of deep learning and neural network research.

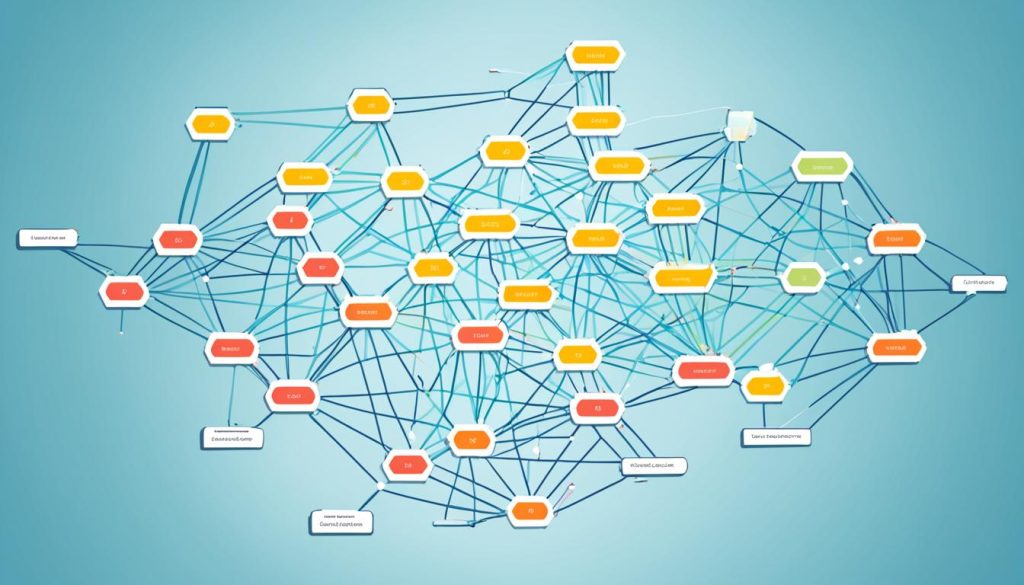

Architectural Components of MLPs

When it comes to multilayer perceptrons (MLPs), understanding their architectural components is key to grasping their functionality and power. MLPs consist of several crucial elements that contribute to their ability to learn complex patterns in data. Let’s explore these components in detail:

Input Layer

The input layer is where MLPs receive initial input data. Each neuron in the input layer represents a feature or dimension of the input data. This layer acts as the gateway for information to enter the neural network.

Hidden Layers

Between the input and output layers of MLPs, there can be one or more layers known as hidden layers. Each hidden layer is made up of interconnected neurons that perform computations and transformations on the input data. The neurons in a hidden layer receive inputs from all the neurons in the previous layer and produce an output that is passed to the next layer. Hidden layers play a crucial role in capturing and learning complex patterns within the data.
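
A classic illustration of what a hidden layer adds is the XOR function, which a single linear neuron cannot compute because its classes are not linearly separable. The sketch below assumes a 2-2-1 network with ReLU hidden neurons and hand-set weights; in practice these weights would be learned.

```python
def relu(z):
    return max(0.0, z)

def xor_mlp(x1, x2):
    """2-2-1 network with hand-set weights that computes XOR."""
    # Hidden layer: two neurons, each connected to both inputs.
    h1 = relu(1.0 * x1 + 1.0 * x2 + 0.0)
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)
    # Output layer: a linear combination of the hidden activations.
    return 1.0 * h1 - 2.0 * h2

for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print((x1, x2), xor_mlp(x1, x2))
```

The outputs are 0, 1, 1, and 0 for the four input pairs. The hidden neurons transform the inputs into features that the output neuron can then combine linearly.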

Output Layer

The output layer is where the final output of the MLP is produced. It consists of neurons that generate the network’s predictions or decisions based on the computations performed in the hidden layers. The number of neurons in the output layer depends on the specific task for which the MLP is being used.
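
For example, a regression task typically uses a single output neuron, while a classification task with several classes commonly uses one output neuron per class and converts the raw scores to probabilities with a softmax function. The following sketch assumes that convention and uses made-up scores for a three-class problem.

```python
import math

def softmax(scores):
    """Turn raw output-layer scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores from a three-neuron output layer (three classes).
probabilities = softmax([2.0, 1.0, 0.1])
predicted_class = probabilities.index(max(probabilities))
print(probabilities, predicted_class)
```

The predicted class is the index of the output neuron with the highest probability, which here is the first one.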

Weights

Neurons in adjacent layers of MLPs are connected by weighted connections. These weights determine the strength and influence one neuron’s output has on another neuron’s input. During the training process, MLPs adjust these weights to optimize the network’s performance and enhance its ability to make accurate predictions. The weights are updated using optimization algorithms such as gradient descent, with the required gradients computed by backpropagation.
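
The weight update can be sketched with gradient descent on the simplest possible case: a single linear neuron trained on a mean squared error. The toy data, learning rate, and number of epochs are illustrative assumptions. In a full MLP, the gradients for hidden layers would be obtained by backpropagation, which this sketch omits.

```python
def train_linear_neuron(data, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Step against the gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Toy data generated from y = 2x + 1 (illustrative only).
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_linear_neuron(data)
print(w, b)
```

After training, `w` and `b` are very close to 2 and 1, the values used to generate the data. Each update moves the weights a small step in the direction that reduces the error on the training examples.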

Bias Neurons

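In addition to its regular neurons, each layer that feeds another layer (the input layer and every hidden layer) typically includes a bias neuron: a unit with a constant output of 1 whose outgoing weights are learned like any other weights. Equivalently, each neuron in the next layer has its own bias term added to its weighted sum. The bias shifts the point at which a neuron activates, independently of its inputs. By adjusting the bias values during training, MLPs can fit offsets in the data that the weights alone could not capture.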
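
The threshold role of the bias is easiest to see with a step activation. In the sketch below, which assumes two binary inputs and fixed weights of 1, changing only the bias turns the same neuron from a logical OR into a logical AND. The function name and bias values are illustrative.

```python
def step_neuron(x1, x2, bias):
    """Fire (output 1) only when the weighted sum plus bias is positive."""
    return 1 if 1.0 * x1 + 1.0 * x2 + bias > 0 else 0

inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
# Same weights, different bias: the threshold for firing moves.
as_or = [step_neuron(x1, x2, bias=-0.5) for x1, x2 in inputs]
as_and = [step_neuron(x1, x2, bias=-1.5) for x1, x2 in inputs]
print(as_or, as_and)  # [0, 1, 1, 1] [0, 0, 0, 1]
```

With a bias of -0.5 one active input is enough to fire the neuron, while a bias of -1.5 requires both.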

Understanding the architectural components of MLPs provides valuable insights into how these neural networks function and learn from data. The input layer, hidden layers, output layer, weights, and bias neurons work together to make MLPs powerful tools for various AI and machine learning tasks.

Conclusion

In conclusion, Multilayer Perceptrons (MLPs) have been a classic architecture for supervised learning in artificial intelligence (AI) and machine learning. Their ability to learn complex patterns in data makes them powerful models for various tasks. MLPs have been widely used in image recognition, natural language processing, and speech recognition, among other fields.

What sets MLPs apart is their flexibility in architecture, allowing them to adapt to different problem domains and data types. This flexibility has contributed to their success and widespread adoption in the AI community. Moreover, MLPs serve as a fundamental building block in deep learning and neural network research, laying the foundation for more advanced and complex models.

As AI and machine learning continue to evolve, MLPs remain a significant neural network model, playing a pivotal role in advancing the field. Researchers and practitioners can benefit from harnessing the power of MLPs to solve real-world problems and unlock new possibilities in AI and machine learning applications.

FAQ

What are MLPs?

MLPs, or multilayer perceptrons, are a type of artificial neural network that has been fundamental in shaping the field of supervised learning in AI and machine learning.

How do MLPs work?

MLPs consist of multiple layers of interconnected neurons, with each neuron using a non-linear activation function. This allows MLPs to learn complex patterns in data, making them powerful models for classification, regression, and pattern recognition tasks.

What applications are MLPs used for?

MLPs have been widely used in various applications such as image recognition, natural language processing, and speech recognition.

What is the role of MLPs in deep learning and neural network research?

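MLPs can approximate any continuous function on a closed, bounded input region, provided they have a non-linear activation function and enough hidden neurons. This ability has made them a fundamental building block in deep learning and neural network research.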

How are MLPs structured?

MLPs consist of several architectural components, including an input layer, hidden layers, and an output layer. Neurons in adjacent layers are connected by weighted connections, and each layer typically includes a bias neuron that adjusts the threshold for activation.