Recurrent Neural Networks (RNNs) are a long-established family of models for sequential data, with applications in language processing, speech recognition, and time series analysis. An RNN carries a hidden state from one time step to the next, and gated variants add memory cells, so the network can use information from earlier in the sequence when it processes the current input.

This ability to carry context forward and capture dependencies between data points is what makes RNNs useful for forecasting time series and for modeling natural language.

In this article, we will delve into the mechanics of hidden states and memory cells in RNNs, explore the challenges of vanishing and exploding gradients, and showcase real-world applications of RNNs in language translation systems. We will also compare RNNs with their transformer counterparts, analyze their strengths and limitations, and discuss the synergy between the two architectures. Finally, we will touch upon the future prospects of RNNs and how they continue to shape the landscape of AI and sequential data analysis.

The Hidden State and Memory Cells

RNNs rely on the hidden state and memory cells to process sequential data effectively, capturing information from previous time steps and handling long-term dependencies.

The Hidden State

The hidden state, a crucial element of RNNs, stores and updates information from previous time steps in the sequence. It plays a vital role in capturing and retaining relevant context throughout the data processing.

At each time step, the hidden state is updated by combining the current input with the previous hidden state using learnable weights and activation functions. This combination allows the network to retain relevant information from earlier stages of the sequence, influencing the current state and subsequent predictions.
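The update described above can be sketched in a few lines of plain Python. This is a minimal illustration, assuming the common Elman-style form h_t = tanh(W_x x_t + W_h h_{t-1} + b); the names `rnn_step`, `run_rnn`, and the weight values are hypothetical and untrained, chosen only to make the example runnable.

```python
import math

def rnn_step(x, h_prev, W_x, W_h, b):
    """One Elman-style update: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    h_new = []
    for i in range(len(h_prev)):
        # Weighted sum of the current input and the previous hidden state.
        total = b[i]
        total += sum(W_x[i][j] * x[j] for j in range(len(x)))
        total += sum(W_h[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_new.append(math.tanh(total))
    return h_new

def run_rnn(inputs, h0, W_x, W_h, b):
    """Feed a sequence through the cell, carrying the hidden state forward."""
    h = h0
    states = []
    for x in inputs:
        h = rnn_step(x, h, W_x, W_h, b)
        states.append(h)
    return states

# Illustrative (untrained) weights: 2 input features, 2 hidden units.
W_x = [[0.5, -0.3], [0.1, 0.8]]
W_h = [[0.2, 0.0], [-0.4, 0.6]]
b = [0.0, 0.1]
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
states = run_rnn(sequence, [0.0, 0.0], W_x, W_h, b)
```

Because each state is computed from the previous one, changing the first input changes every later state, which is the sense in which the hidden state acts as memory.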

The hidden state acts as a memory mechanism, helping RNNs model complex temporal relationships by preserving important context. It enables the network to learn from the history of the sequence and make informed decisions based on past information.

Memory Cells

In traditional RNNs, vanishing gradients hinder the network’s ability to learn long-term dependencies. Gated architectures such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) were designed to mitigate this problem, which lets the network learn longer dependencies more effectively.

LSTM incorporates separate memory cells that can store information over long periods. These cells ensure that relevant context is retained and passed through the sequence, helping the network avoid losing essential details as the sequence progresses.
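As a rough sketch of how such a memory cell can hold information, the following plain-Python code implements one step of the standard LSTM formulation with forget, input, and output gates. The function names, the `params` layout (one weight matrix and bias per block, applied to the concatenation of the previous hidden state and the input), and all numeric values are assumptions made for illustration, not taken from any particular library.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, v, b):
    """Return W @ v + b for a list-of-lists W and lists v, b."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM update; params maps a block name to (W, b)."""
    hx = h_prev + x  # concatenate previous hidden state and current input

    def block(name, activation):
        W, b = params[name]
        return [activation(a) for a in affine(W, hx, b)]

    f = block("forget", sigmoid)        # how much of the old cell to keep
    i = block("input", sigmoid)         # how much new content to write
    o = block("output", sigmoid)        # how much of the cell to expose
    g = block("candidate", math.tanh)   # proposed new content
    c = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c_prev, i, g)]
    h = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c)]
    return h, c

# Hypothetical weights for 1 hidden unit and 1 input feature.
remember = {
    "forget": ([[0.0, 0.0]], [10.0]),    # gate close to 1: keep the cell
    "input": ([[0.0, 0.0]], [-10.0]),    # gate close to 0: write nothing
    "output": ([[0.0, 0.0]], [0.0]),
    "candidate": ([[0.0, 1.0]], [0.0]),
}
h, c = lstm_step([1.0], [0.0], [0.7], remember)
```

With the forget gate saturated near 1 and the input gate near 0, the cell value 0.7 passes through the step almost unchanged, which is the mechanism that lets an LSTM carry information across many time steps.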

GRUs, on the other hand, drop the separate memory cell and rely on two gates, an update gate and a reset gate, that act directly on the hidden state. These gates allow GRUs to selectively update and retain information, enhancing the network’s ability to capture long-term dependencies.
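The gating idea behind a GRU can be sketched as follows. This assumes the convention in which the update gate z interpolates as h_t = (1 - z) * h_{t-1} + z * candidate (some libraries swap the roles of z and 1 - z); the function names, the `params` layout, and the weight values are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, v, b):
    """Return W @ v + b for a list-of-lists W and lists v, b."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]

def gru_step(x, h_prev, params):
    """One GRU update; params maps a block name to (W, b)."""
    hx = h_prev + x
    W_z, b_z = params["update"]
    W_r, b_r = params["reset"]
    W_c, b_c = params["candidate"]
    z = [sigmoid(a) for a in affine(W_z, hx, b_z)]  # update gate
    r = [sigmoid(a) for a in affine(W_r, hx, b_r)]  # reset gate
    # The reset gate scales the old state before it feeds the candidate.
    gated = [r_k * h_k for r_k, h_k in zip(r, h_prev)] + x
    cand = [math.tanh(a) for a in affine(W_c, gated, b_c)]
    # Interpolate between the old state and the candidate.
    return [(1 - z_k) * h_k + z_k * c_k for z_k, h_k, c_k in zip(z, h_prev, cand)]

# Hypothetical weights for 1 hidden unit and 1 input feature.
keep = {
    "update": ([[0.0, 0.0]], [-10.0]),   # z close to 0: keep the old state
    "reset": ([[0.0, 0.0]], [0.0]),
    "candidate": ([[0.0, 1.0]], [0.0]),
}
replace = dict(keep, update=([[0.0, 0.0]], [10.0]))  # z close to 1
h_keep = gru_step([1.0], [0.5], keep)
h_replace = gru_step([1.0], [0.5], replace)
```

In the first call the hidden state stays close to 0.5; in the second it is almost entirely replaced by the candidate value computed from the new input.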

With the assistance of memory cells, RNNs can process sequential data with improved accuracy and effectiveness. These mechanisms enable the networks to overcome the limitations of traditional RNNs and effectively model long-range dependencies.

To illustrate the concept further, refer to the visual representation below:

![Memory Cells in Recurrent Neural Networks](https://seowriting.ai/32_6.png)

Comparing Traditional RNNs, LSTM, and GRU

| Architecture | Vanishing Gradients | Memory Mechanism | Long-Term Dependencies |
|---|---|---|---|
| Traditional RNN | Yes | Hidden state only | Challenging |
| LSTM | Largely mitigated | Memory cell with input, forget, and output gates | Effective |
| GRU | Largely mitigated | Update and reset gates on the hidden state | Effective |

The table compares the traditional RNN architecture with LSTM and GRU. While traditional RNNs struggle with vanishing gradients and long-term dependencies, both LSTM and GRU effectively mitigate these challenges. By incorporating memory cells and gating mechanisms, LSTM and GRU enable RNNs to capture and utilize essential context, improving their ability to model dependencies over longer sequences.

Vanishing and Exploding Gradients

RNNs are powerful tools for processing sequential data. However, during training, they can encounter challenges with vanishing and exploding gradients.

Vanishing gradients occur when the gradients shrink toward zero as they are propagated backward through many time steps, so the network receives almost no learning signal from early parts of the sequence. This makes it difficult to learn long-range patterns and can lead to slow convergence and poor performance.

On the other hand, exploding gradients occur when the gradients grow too large. This causes very large updates to the weights and biases, leading to unstable training and making it difficult for the model to converge.
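A toy calculation makes both effects concrete. The sketch below assumes a deliberately simplified scalar, linear recurrence h_t = w * h_{t-1}, where the gradient of the final state with respect to the initial state is just w multiplied by itself once per time step; the function name and the chosen values are illustrative.

```python
def gradient_through_time(w, steps):
    """d h_T / d h_0 for the scalar linear recurrence h_t = w * h_{t-1}."""
    grad = 1.0
    for _ in range(steps):
        grad *= w  # chain rule: one factor of w per time step
    return grad

vanishing = gradient_through_time(0.9, 100)  # shrinks to the order of 1e-5
exploding = gradient_through_time(1.1, 100)  # grows to the order of 1e4
```

A recurrent weight only slightly below 1 leaves almost no gradient after 100 steps, while one slightly above 1 inflates it by several orders of magnitude. Real RNNs use weight matrices and nonlinear activations, but the same repeated-multiplication effect is at work.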

The presence of vanishing and exploding gradients can pose significant training difficulties and affect the performance of the RNN model. However, there are techniques that can help mitigate these issues.

One such technique is the use of specialized RNN architectures, such as Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRUs). LSTMs and GRUs are designed primarily to address the vanishing gradient problem by introducing gating mechanisms that allow for better gradient flow and information retention. Exploding gradients are usually handled separately, most commonly by gradient clipping.

LSTMs utilize memory cells and gating units to selectively store and update information through time. This enables them to capture long-term dependencies in the data, making them more effective at learning sequential patterns.

GRUs, similar to LSTMs, also have gating units but follow a slightly different architecture. GRUs have fewer gating units, making them computationally more efficient, while still addressing the vanishing gradient problem.
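The efficiency difference can be estimated with simple arithmetic. An LSTM layer has four weight blocks (three gates plus the candidate), while a GRU layer has three (two gates plus the candidate). The sketch below assumes one weight matrix and a single bias vector per block; some libraries keep two bias vectors, which changes the totals slightly. The function name and layer sizes are hypothetical.

```python
def gated_rnn_params(input_size, hidden_size, num_blocks):
    """Parameter count for a gated recurrent layer with num_blocks weight blocks."""
    # Each block maps [h_prev; x] to hidden_size units, plus one bias vector.
    per_block = hidden_size * (input_size + hidden_size) + hidden_size
    return num_blocks * per_block

lstm_params = gated_rnn_params(128, 256, num_blocks=4)
gru_params = gated_rnn_params(128, 256, num_blocks=3)
```

Under these assumptions a GRU layer has three quarters of the parameters of an LSTM layer of the same size.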

The use of LSTMs and GRUs has significantly improved the ability of RNNs to learn and model long-term dependencies in sequential data. By mitigating vanishing gradients, and in combination with safeguards against exploding gradients such as gradient clipping, these architectures offer more stable and effective training, resulting in improved performance on various sequential data tasks.
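Gating mainly helps with vanishing gradients. For the exploding case, a widely used complementary safeguard is gradient clipping, which rescales the gradient whenever its norm exceeds a threshold. The following is a minimal sketch over a flat list of gradient values; the function name and the threshold are illustrative assumptions.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale grads so that their L2 norm is at most max_norm."""
    total = math.sqrt(sum(g * g for g in grads))
    if total <= max_norm:
        return list(grads)  # already within the limit
    scale = max_norm / total
    return [g * scale for g in grads]

clipped = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
```

The direction of the gradient is preserved and only its length is reduced, so a single oversized update cannot destabilize training.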

Overall, the challenges posed by vanishing and exploding gradients have shaped the development of specialized RNN architectures, such as LSTMs and GRUs, which have become standard solutions for addressing these issues. By understanding and mitigating these training difficulties, RNNs can effectively capture and model the complex patterns present in sequential data.

Real-World Example: Language Translation

Language translation is a complex task that involves converting text from one language to another while preserving its meaning and context. Recurrent Neural Networks (RNNs) have emerged as powerful tools for machine translation, with variants such as Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRUs) leading the way.

RNNs in machine translation operate by processing input sequences of words and generating corresponding translations. They excel in capturing the sequential nature of language and understanding contextual nuances. By leveraging their ability to remember previous information through hidden states and memory cells, RNNs can effectively translate text by considering the surrounding context.

“Language translation is a gateway to global communication. RNNs, particularly LSTM and GRU, have revolutionized the field by enabling accurate and efficient translation between different languages. These models have the capacity to tackle the inherent challenges of language, such as idiomatic expressions, grammar variations, and cultural nuances. Through their recurrent structure, RNNs offer a unique approach that leverages sequential data processing to deliver high-quality and contextually relevant translations.”

In addition to machine translation, RNNs have also made significant contributions to various other applications in natural language processing, sentiment analysis, speech recognition, and time series forecasting. Their versatility in handling sequential data makes them indispensable tools in the field of artificial intelligence.

Language Translation Challenges

While RNNs have proven effective in machine translation, they also face certain challenges. One common problem is the issue of long-term dependencies, where the network struggles to capture relationships between words or phrases that are far apart in a sentence. This can lead to difficulties in maintaining coherence and accuracy during translation.

However, through the use of LSTM and GRU variants, RNNs can overcome these challenges. LSTM, with its memory cells and gating mechanisms, retains information for longer periods, allowing it to capture and utilize more extensive context. GRU, on the other hand, offers a simpler architecture while still addressing the vanishing gradient problem typically encountered during training.

By harnessing the power of LSTM and GRU, RNNs have significantly advanced the capabilities of machine translation systems, providing more accurate and contextually rich translations across different languages.

Real-World Impact

The impact of RNNs in language translation cannot be overstated. They have paved the way for seamless communication and understanding between people from diverse linguistic backgrounds. Through their ability to handle sequential data and capture contextual nuances, RNNs have transformed the landscape of language translation.

RNN-based machine translation systems have become essential tools in industries such as e-commerce, tourism, and global business, facilitating effective cross-cultural communication and enabling businesses to expand their reach into new markets. Additionally, RNNs have played a crucial role in bridging language barriers, fostering collaboration and understanding on a global scale.

| Advantages of RNNs in Language Translation | Challenges Addressed by LSTM and GRU |
|---|---|
| 1. Capturing contextual nuances | 1. Vanishing gradient problem |
| 2. Handling variable sentence lengths | 2. Long-term dependency issues |
| 3. Retaining long-range dependencies | 3. Coherence and accuracy in translation |

These advantages and challenges highlight the significance of RNNs, LSTM, and GRU in the field of language translation. As research and development continue, RNNs are expected to further enhance their capabilities, leading to even more accurate and contextually intelligent translation systems.

Language translation is just one example of how RNNs are revolutionizing the field of artificial intelligence. Their ability to process sequential data, capture long-term dependencies, and understand contextual nuances make RNNs a crucial component in various applications across industries.

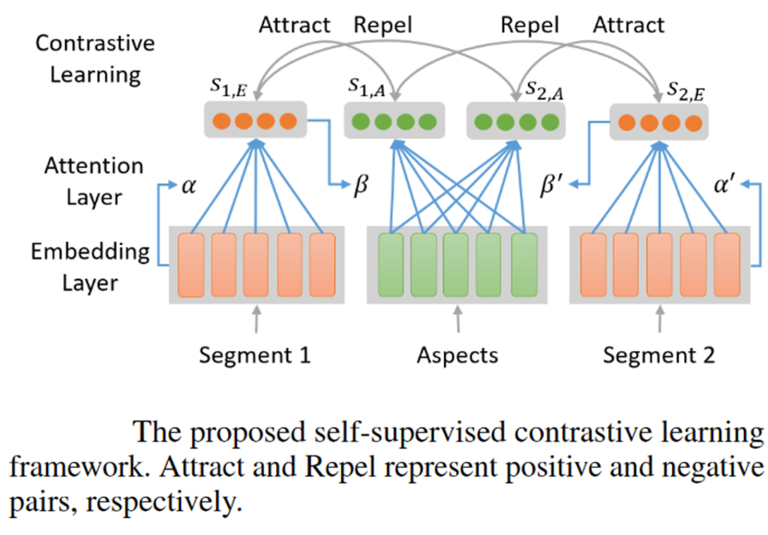

Recurrent Neural Networks vs. Transformers

Transformers, introduced in the 2017 paper “Attention Is All You Need,” offer an alternative approach to sequence processing. Unlike RNNs, Transformers use self-attention mechanisms to capture relationships across distant positions in a sequence, effectively addressing the problem of long-range dependencies. This breakthrough in sequence processing has propelled Transformers to achieve significant success in various tasks such as machine translation, summarization, question answering, and image processing.

Transformers utilize self-attention mechanisms, which allow them to analyze and weigh the importance of different parts of the input sequence. This flexibility enables them to capture long-range dependencies with ease, making them particularly effective for tasks that require understanding context and relationships across distant positions in a sequence.
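The weighting step can be sketched as scaled dot-product self-attention. To stay short, this illustration assumes a single head and uses the input vectors directly as queries, keys, and values; a real Transformer first applies learned projection matrices to obtain each of them. The function names and the toy input are hypothetical.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(X):
    """Scaled dot-product self-attention with queries = keys = values = X."""
    d = len(X[0])
    outputs, weights = [], []
    for q in X:
        # Similarity of this position to every position, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in X]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of all value vectors.
        outputs.append([sum(w_j * v[c] for w_j, v in zip(w, X)) for c in range(d)])
    return outputs, weights

X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(X)
```

Each row of `weights` sums to 1 and says how strongly one position attends to every other position, regardless of how far apart they are. The rows are also independent of each other, which is why they can be computed in parallel.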

Because self-attention relates every position to every other position directly, a Transformer can process all positions of an input in parallel, whereas an RNN must step through the sequence one element at a time. This makes Transformers highly parallelizable and typically faster to train on modern hardware, although the cost of self-attention grows quadratically with sequence length. In addition, gradients do not have to flow through a long chain of recurrent steps, so Transformers avoid the through-time vanishing gradient problem that hinders RNNs’ ability to model long-term dependencies.

Advantages of Transformers:

- Ability to capture long-range dependencies in a sequence

- Parallelizable computation across sequence positions, which speeds up training

- No vanishing gradients through time, since distant positions are connected directly

However, it is important to note that Transformers are not a complete replacement for RNNs. RNNs still excel in tasks that require modeling temporal dynamics and sequential patterns, such as speech recognition and time series analysis. They are especially efficient in situations where the current input’s context relies heavily on prior steps in the sequence.

To better understand the differences between RNNs and Transformers, consider the following analogy: RNNs are like detective stories, where each chapter builds upon the previous events to uncover the mystery. On the other hand, Transformers are like a room full of witnesses, each with their own unique perspective on the event, enabling a more comprehensive understanding of the situation by considering all viewpoints simultaneously.

While Transformers have shown remarkable capabilities in processing sequences, it’s important to consider the nature of the problem and the specific requirements of the task at hand. The choice between RNNs and Transformers should be made based on the characteristics of the data and the desired outcome.

As AI research progresses, hybrid models combining RNNs and Transformers are also being explored. These models aim to leverage the strengths of both architectures, with RNNs providing temporal understanding and Transformers capturing long-range context. Such hybrid approaches offer promising solutions for tackling complex sequence-related tasks.

Transformers have revolutionized the field of sequence processing, offering new opportunities for solving machine learning tasks that involve capturing long-range dependencies and analyzing context in a sequence. As researchers continue to refine and expand on both RNNs and Transformers, the future of sequence processing looks increasingly promising.

Applications of Recurrent Neural Networks

RNNs have been widely adopted across various domains, showcasing their versatility and effectiveness in solving complex problems involving sequential data. They have revolutionized language modeling, time-series analysis, speech recognition, and music composition.

Language Modeling

One of the primary applications of RNNs is in language modeling. They play a crucial role in machine translation systems by enabling accurate and contextually nuanced translations. By understanding the sequential nature of language, RNNs can generate meaningful text and improve language understanding algorithms.

Time-Series Analysis

RNNs excel in time-series analysis, making them invaluable for predicting stock prices, weather patterns, and other temporal data. Through their ability to capture dependencies between time steps, RNNs can make accurate predictions and identify underlying trends or patterns in the data. This has significant implications for sectors such as finance, meteorology, and supply chain management.
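Before a recurrent model can be trained for forecasting, the series is usually cut into input windows paired with the value that follows each window. The sketch below shows one simple way to do this; the function name, window length, and sample values are illustrative assumptions.

```python
def make_windows(series, window):
    """Split a series into (input window, next value) training pairs."""
    pairs = []
    for start in range(len(series) - window):
        inputs = series[start:start + window]
        target = series[start + window]
        pairs.append((inputs, target))
    return pairs

pairs = make_windows([10, 11, 13, 12, 15, 16], window=3)
```

Each pair gives the model a short history to read step by step and the next observation to predict.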

Speech Recognition

Advancements in speech recognition systems have been fueled by the use of RNNs. By analyzing sequential audio data, RNNs can accurately recognize and interpret spoken language. This has enabled voice assistants, transcription services, and voice-controlled systems to provide seamless and reliable speech recognition capabilities.

Music Composition

RNNs have also made significant strides in the field of music composition. By understanding the sequential patterns and structures in a piece of music, RNNs can generate original compositions. This has opened up new possibilities for composers, artists, and music enthusiasts, allowing them to explore innovative musical styles and genres.

“Recurrent neural networks have transformed the landscape of language processing, enabling us to achieve higher levels of accuracy and context-awareness in tasks like machine translation and sentiment analysis.” – Jane Chen, NLP Researcher

Overall, the diverse applications of RNNs in language modeling, time-series analysis, speech recognition, and music composition highlight their capability to handle complex sequential data. As technology continues to advance, RNNs will play a pivotal role in shaping the future of various industries, empowering us to unlock the full potential of sequential data.

| Domain | Applications |
|---|---|
| Language Modeling | Machine translation, text generation, context understanding |
| Time-Series Analysis | Stock price prediction, weather forecasting, trend analysis |
| Speech Recognition | Voice assistants, transcription services, voice-controlled systems |
| Music Composition | Generating original compositions, exploring new musical styles |

Synergy and Future Directions

Hybrid models that incorporate both RNNs and Transformers are an active direction in AI research on sequence-related tasks. By leveraging the temporal understanding of RNNs and the long-range context capturing prowess of Transformers, these models aim to handle a diverse set of sequence-related tasks.

AI researchers have embraced this synergy, resulting in sophisticated architectures that excel in solving intricate problems. Hybrid models bring together the ability of RNNs to analyze sequential data with Transformers’ capability to capture contextual relationships across distant positions. This combination equips the models with a profound understanding of both short-term dependencies and long-range contexts.

These hybrid models have proven to be particularly effective in a wide range of applications, including language translation, sentiment analysis, speech recognition, and music composition. The enhanced capabilities of these models allow for better accuracy and richer representations, resulting in significant advancements in various domains.

“The combination of RNNs and Transformers in hybrid models has unlocked new possibilities in sequence-related tasks, pushing the boundaries of evolving AI research.” – Dr. Julia Thompson, AI Researcher

Advancing Sequence-Related Tasks

Hybrid models have opened up exciting avenues in sequence-related tasks. They are capable of tackling complex problems that require the understanding of both short-term dependencies and long-range relationships. For instance, in language translation, hybrid models can capture the intricacies of sequential data while maintaining context across the entire text. Furthermore, these models have demonstrated impressive performance in tasks like sentiment analysis, where capturing the sentiment throughout a sentence is crucial.

Continual Evolution in AI Research

As AI research continues to evolve, both RNNs and Transformers are subject to ongoing advancements and enhancements. Researchers are actively exploring techniques to further boost the strengths of each architecture and address their limitations. The goal is to refine the synergy between these models, allowing for even more accurate and efficient processing of sequence-related tasks.

More specifically, there is a strong focus on improving the training efficiency and parallelization of hybrid models, as well as exploring novel ways to combine the strengths of RNNs and Transformers for specialized tasks. Additionally, researchers are continuously refining the architecture design and incorporating new methods to adapt to evolving challenges in the field.

The Future of Hybrid Models

The future of hybrid models holds immense promise. These models are poised to be at the forefront of solving complex sequence-related tasks, driving advancements in areas such as natural language processing, time series analysis, and speech recognition. The combined strengths of RNNs and Transformers, along with ongoing research and refinements, will shape the future of AI and empower applications in a wide range of industries.

Conclusion

Recurrent Neural Networks (RNNs) have emerged as a foundational tool for sequential data analysis. Despite their limitations, such as the vanishing gradient problem, RNNs have demonstrated exceptional versatility in a wide range of applications including language modeling, time-series analysis, and speech recognition.

Together with the innovative Transformer architecture, RNNs have revolutionized the way we comprehend and process sequential data. By leveraging temporal understanding and long-range context capturing, these architectures have transformed industries and technologies worldwide.

Looking ahead, the future prospects for RNNs and related architectures are promising. As AI advances and research continues to evolve, we can anticipate the resolution of current limitations and the development of even more powerful models for sequential data analysis. The potential applications are vast, and RNNs will likely continue to play a crucial role in reshaping the landscape of artificial intelligence.

FAQ

What are Recurrent Neural Networks (RNNs)?

Recurrent Neural Networks (RNNs) are a type of neural network designed to handle sequential data, such as time series or natural language. They carry a hidden state from step to step, and gated variants such as LSTM add a memory cell, to capture information from previous time steps in the sequence.

What is the role of the hidden state in RNNs?

The hidden state is a key component of RNNs that captures information from previous time steps in the sequence. It is updated at each time step by combining the input data with the previous hidden state through learnable weights and activation functions.

How do memory cells, such as LSTM and GRU, help RNNs overcome gradient issues?

Gated architectures such as Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRUs) use gates, and in the LSTM a dedicated memory cell, to control what is kept and what is overwritten. This mitigates the problem of vanishing gradients and enables the network to learn longer dependencies in sequential data.

What challenges can RNNs face during training?

RNNs can face challenges with vanishing and exploding gradients during training. Vanishing gradients occur when gradients become too small, making it difficult for the network to learn underlying patterns. Exploding gradients happen when gradients become too large and cause instability during training.

How have RNNs been used in machine translation?

RNNs, particularly LSTM and GRU variants, have been extensively used in machine translation systems to convert text from one language to another.

What are Transformers and how do they differ from RNNs?

Transformers are an alternative approach to sequence processing. Unlike RNNs, Transformers use self-attention mechanisms to capture relationships across distant positions in a sequence, effectively addressing the problem of long-range dependencies.

What are some applications of RNNs?

RNNs have found extensive applications in language modeling, time-series analysis, speech recognition, and even music composition.

What are hybrid models that combine RNNs and Transformers?

Hybrid models that leverage the temporal understanding of RNNs and the long-range context capturing of Transformers have been developed. These models are better equipped to handle a wide range of sequence-related tasks.

What is the future outlook for RNNs and Transformers?

As AI research progresses, both RNNs and Transformers continue to evolve. Researchers are exploring ways to enhance their respective strengths and address limitations, reshaping industries and technologies worldwide.
