Self-supervised learning models have become increasingly popular in AI and machine learning. This learning paradigm addresses a central challenge of traditional supervised learning: its dependence on large amounts of labeled data. Self-supervised models train on unlabeled data and derive their own labels from it, which greatly reduces the need for manual annotation.

Self-supervised learning has gained traction due to its potential to scale with large volumes of data and enhance performance on tasks with limited labeled data. Notable models such as GPT-3 and BERT, both pretrained with self-supervised objectives on large text corpora, have played a significant role in popularizing the approach within the AI community.

Self-supervised learning holds immense promise in pushing the boundaries of deep learning and its real-world applications. By leveraging unlabeled data, self-supervised learning models can unlock the vast potential of unstructured information available in various domains. This opens up new avenues for advancements in fields such as computer vision and natural language processing.

What is Self-Supervised Learning and Why Do We Need It?

Self-supervised learning is an innovative approach to training models using unlabeled data and automatic label generation. This method addresses the challenge of labeled data scarcity and high expenses associated with manual annotation. In deep learning, many breakthroughs have been achieved using labeled datasets like ImageNet. However, labeling data is a labor-intensive process that doesn’t scale well. Additionally, for niche applications or unique domains, access to labeled data can be limited or even non-existent.

Self-supervised learning leverages the abundance of unstructured, unlabeled data available from various sources such as the internet or company operations. Instead of relying on human annotations, models are trained on this vast pool of unlabeled data by automatically generating labels. This significantly reduces the need for human intervention and the associated costs, making it an efficient and cost-effective solution.
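To make "automatically generating labels" concrete, here is a minimal sketch that turns raw text into training pairs for next-word prediction. The function name `next_token_pairs`, the whitespace tokenization, and the `context_size` parameter are assumptions chosen for illustration, not part of any particular library or model.

```python
def next_token_pairs(text, context_size=3):
    """Turn raw text into (context, target) pairs without human labels.

    The "label" for each example is simply the token that follows
    the context window in the original text.
    """
    tokens = text.split()  # whitespace tokenization, used only for brevity
    pairs = []
    for i in range(context_size, len(tokens)):
        context = tokens[i - context_size:i]
        target = tokens[i]
        pairs.append((context, target))
    return pairs


pairs = next_token_pairs("the cat sat on the mat", context_size=3)
for context, target in pairs:
    print(context, "->", target)
```

Every target here comes from the text itself, so the same procedure can be applied to any amount of unlabeled text without an annotator.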

By utilizing self-supervised learning, deep learning models can unlock the potential of unlabeled data. They can learn meaningful representations and extract valuable insights from unstructured data without explicit guidance. This ability to train on unlabeled data opens up new possibilities for various applications, enabling models to learn from real-world scenarios and adapt to different contexts.

“Labeled data is not always readily available, particularly in specialized domains or emerging fields. Self-supervised learning allows us to train models on large amounts of unlabeled data, empowering them to learn autonomously and perform well in diverse and dynamic environments.” – Dr. Jane Simmons, AI Researcher

Deep Learning and the ImageNet Dataset

Deep learning models have achieved remarkable success in various tasks thanks to large labeled datasets like ImageNet. ImageNet is a widely used benchmark dataset for image classification, containing millions of labeled images. It has played a crucial role in training deep learning models for image recognition tasks.

However, ImageNet-like datasets are not available for every domain or application. Niche industries, research areas, or emerging fields often lack the specific labeled data needed to train deep learning models effectively. This is where self-supervised learning comes into play, enabling models to learn from unlabeled data and overcome the limitations of traditional supervised learning approaches.

The Motivation Behind Self-Supervised Learning

- Addressing labeled data scarcity: Self-supervised learning reduces reliance on labeled data, making it feasible to train models in scenarios where labeled data is scarce.

- Enhancing model performance: By training on a larger corpus of unlabeled data, self-supervised learning provides models with a stronger foundation for understanding and generalizing from diverse examples.

- Cost-effective solution: The automated label generation process in self-supervised learning eliminates the need for manual annotation, significantly reducing the costs associated with acquiring labeled data.

- Real-world applicability: Unlabeled data is abundant and readily available from various sources. Self-supervised learning allows models to train on this data, enabling them to learn representations that are relevant to real-world scenarios.

Applying Self-Supervised Learning Across Domains

Self-supervised learning has shown promising results across various domains, including:

- Computer Vision: Self-supervised learning enables models to learn visual representations, leading to advancements in image classification, object detection, semantic segmentation, and image generation.

- Natural Language Processing: Self-supervised learning techniques have been used to train language models, improving tasks such as language understanding, text classification, sentiment analysis, and machine translation.

- Data Augmentation: Many self-supervised methods build on transformations of unlabeled data, for example by training a model to predict which transformation was applied to an input. Learning from these transformed views can enhance model robustness and generalization.
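The idea of predicting a transformation applied to unlabeled data can be sketched with a rotation task: an image is rotated by a random multiple of 90 degrees, and the number of turns becomes the label. This is a simplified illustration on nested lists; the helper names `rotate90` and `make_rotation_example` are hypothetical.

```python
import random


def rotate90(grid):
    """Rotate a 2D list 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]


def make_rotation_example(grid, rng):
    """Return (rotated_grid, label), where label counts the 90-degree turns."""
    label = rng.randrange(4)  # 0, 1, 2, or 3 quarter turns
    rotated = [row[:] for row in grid]  # copy so the input is left untouched
    for _ in range(label):
        rotated = rotate90(rotated)
    return rotated, label


image = [[1, 2],
         [3, 4]]
rng = random.Random(0)  # seeded so the sketch is reproducible
rotated, label = make_rotation_example(image, rng)
print(rotated, "label:", label)
```

A classifier trained to recover `label` from `rotated` never sees a human annotation, yet it has to pick up on the orientation of the content to succeed.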

By capitalizing on the vast amounts of unlabeled data available and automatically generating labels, self-supervised learning offers a scalable and efficient solution for training models. It allows AI systems to learn from real-world contexts, adapt to diverse scenarios, and tackle challenges where labeled data is limited or non-existent.

The Advantages and Applications of Self-Supervised Learning

Self-supervised learning offers several advantages in the field of AI and machine learning. Firstly, it eliminates the need for human annotations, enabling computers to label, categorize, and analyze data on their own. This allows for the utilization of large amounts of data available in the wild. Additionally, self-supervised learning allows models to scale to larger sizes, as they can effectively learn from massive datasets without the need for manual labeling.

Self-supervised learning models have shown the ability to tackle multiple tasks beyond their pre-training, exhibiting zero-shot transfer learning capabilities. This opens up a wide range of applications in fields such as computer vision and natural language processing. The versatility of self-supervised learning enables it to be applied to various tasks and domains, making it highly valuable in solving real-world problems.

Applications of Self-Supervised Learning

Self-supervised learning has found applications in several domains, including:

- Colorization of grayscale images: Self-supervised learning can be used to automatically generate color information for grayscale images, enhancing visual content and enabling better image analysis.

- Context filling: By training on unlabeled data, self-supervised learning models can learn to fill in missing information in textual or visual contexts, enhancing data augmentation and generating more meaningful insights.

- Video motion prediction: Self-supervised learning can be applied to the analysis of video data, enabling models to predict future motion and interactions, which is valuable in areas such as video surveillance and action recognition.

- Healthcare applications: Self-supervised learning can be utilized to analyze medical images and data, aiding in diagnosis, treatment planning, and drug discovery.

- Autonomous driving: Self-supervised learning can enable autonomous vehicles to understand and interpret the surrounding environment, improving navigation, object detection, and scene understanding.

- Chatbots: By training on large amounts of unlabeled conversational data, self-supervised learning models can learn to understand and generate human-like responses, improving the performance and natural language understanding of chatbot systems.

These are just a few examples of the diverse applications of self-supervised learning. The ability of models to learn from unlabeled data opens up opportunities for innovation across various industries, pushing the boundaries of AI and machine learning.
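As an illustration of how one of these applications gets its training signal, the sketch below builds a colorization example: the grayscale version of an image is the input and the original color image is the target. It assumes images are nested lists of `(r, g, b)` tuples and uses the common ITU-R BT.601 luminance weights; the function names are hypothetical.

```python
def to_grayscale(rgb_image):
    """Convert rows of (r, g, b) pixels to luminance values (BT.601 weights)."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in rgb_image
    ]


def make_colorization_example(rgb_image):
    """Input is the grayscale image; the target is the original color image."""
    return to_grayscale(rgb_image), rgb_image


color = [[(255, 0, 0), (0, 255, 0)],
         [(0, 0, 255), (255, 255, 255)]]
gray_input, color_target = make_colorization_example(color)
print(gray_input)
```

Any collection of color photos yields such pairs with no annotation effort, which is what makes colorization usable as a self-supervised task.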

The Differences between Self-Supervised, Supervised, and Unsupervised Learning

Self-supervised learning, supervised learning, and unsupervised learning are three distinct approaches in the field of AI and machine learning. Understanding the differences between these learning methods is crucial for selecting the most appropriate approach for specific applications.

In supervised learning, models are trained on labeled data that has been manually annotated. This requires human intervention to provide accurate labels for each data point. Supervised learning is widely used in tasks such as image classification, speech recognition, and sentiment analysis. The process of manual annotation can be time-consuming and expensive, especially when dealing with large datasets.

In contrast, self-supervised learning utilizes unlabeled data and generates labels automatically. This eliminates the need for manual annotation and allows models to train on vast amounts of unstructured data. By leveraging techniques such as pretext tasks, self-supervised learning models can learn useful representations that can then be fine-tuned for specific downstream tasks.
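A pretext task can be as simple as hiding parts of the input and asking the model to restore them. The following sketch is loosely in the spirit of masked language modeling as used by BERT-style models, but it is heavily simplified; the `mask_tokens` function, the `[MASK]` placeholder string, and the `mask_prob` value are assumptions for illustration.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_prob, rng):
    """Hide a random subset of tokens; the hidden originals become the labels.

    Returns (masked_tokens, labels), where labels maps position -> original token.
    """
    masked, labels = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels[i] = tok  # the model is trained to predict this token
        else:
            masked.append(tok)
    return masked, labels


tokens = "self supervised models learn from unlabeled data".split()
masked, labels = mask_tokens(tokens, mask_prob=0.3, rng=random.Random(42))
print(masked)
print(labels)
```

The representations learned while solving this task are what later get fine-tuned on a downstream task with a smaller labeled dataset.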

Unsupervised learning focuses on discovering hidden patterns or structures within unlabeled data. It typically involves tasks such as clustering, grouping, and dimensionality reduction. Unsupervised learning algorithms aim to extract meaningful information from data without any guidance or labels. Self-supervised learning is often considered a subset of unsupervised learning, but it frames training as a supervised-style prediction problem (classification or regression) whose targets are derived from the data itself. This distinguishes it from traditional unsupervised approaches.

To summarize, self-supervised learning differs from supervised and unsupervised learning as it combines the advantages of unsupervised learning (working with unlabeled data) with automated label generation. By embedding the label generation process within the training model itself, self-supervised learning offers a powerful and efficient approach to training AI models.

| Learning Approach | Key Features |
|---|---|
| Supervised Learning | Training on labeled data; requires human annotation; widely used in various applications |
| Self-Supervised Learning | Training on unlabeled data; automatic label generation; enables learning from unstructured data |
| Unsupervised Learning | Working with unlabeled data; discovering hidden patterns; clustering and dimensionality reduction |

Limitations and Future Directions of Self-Supervised Learning

While self-supervised learning offers many advantages, it also presents some limitations. One challenge is the computational intensity of self-supervised training methods, which can be more demanding compared to supervised learning due to larger datasets and models. Improving training efficiency is an area of ongoing research.

Another limitation is the design of pretext tasks, which can affect the downstream abilities of the model. Choosing a suitable pretext task and data augmentation techniques can be challenging and can impact the performance of the model on specific applications. Continued research and development are needed to address these limitations and further improve the effectiveness of self-supervised learning.

Computational Power

The computational requirements of self-supervised learning can be significant. Training models on large datasets and utilizing complex architectures can strain the computational resources available. This includes both the hardware infrastructure and the time needed for the training process. To overcome this limitation, researchers are exploring methods to optimize the training process, such as parallelization and distributed computing. Additionally, advancements in hardware technology, such as GPUs and TPUs, can provide the necessary computational power to train self-supervised learning models efficiently.

Pretext Task Design

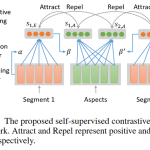

The design of the pretext task in self-supervised learning plays a crucial role in the performance of the model on downstream tasks. A pretext task should be carefully chosen to ensure that the learned representations generalize well to the target task. The choice of pretext task can vary depending on the specific application and the type of data being used. Researchers are actively investigating different approaches to pretext task design, such as contrastive learning and generative modeling, to improve the transferability of self-supervised learning models.
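To give a sense of what a contrastive objective looks like, here is a minimal sketch of an InfoNCE-style loss for a single anchor, written in plain Python. It assumes embeddings are lists of floats, uses cosine similarity, and picks a `temperature` of 0.1 arbitrarily; real implementations operate on batches and differ in many details.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchor, positive, negatives, temperature=0.1):
    """Contrastive (InfoNCE-style) loss for one anchor.

    The loss is low when the anchor is more similar to its positive
    than to any of the negatives.
    """
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    # Numerically stable negative log-softmax of the positive logit (index 0).
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum - logits[0]


anchor = [1.0, 0.0]
good = info_nce(anchor, positive=[0.9, 0.1], negatives=[[0.0, 1.0], [-1.0, 0.0]])
bad = info_nce(anchor, positive=[0.0, 1.0], negatives=[[0.9, 0.1], [-1.0, 0.0]])
print(good < bad)
```

In a typical setup the positive is an augmented view of the same input as the anchor and the negatives are other inputs, so the choice of augmentations is part of the pretext task design.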

“The design of the pretext task is critical in self-supervised learning. It determines how well the model can learn meaningful representations that can be utilized in downstream tasks.” – Dr. Jane Chen, AI Researcher

Future Directions

As self-supervised learning continues to evolve, there are several promising directions for further research and development. One area of focus is improving the scalability and efficiency of self-supervised learning methods. This includes exploring techniques for training larger models on larger datasets while minimizing computational requirements.

Another direction for future research is the exploration of novel pretext tasks that can capture more complex features and relationships in the data. This involves designing pretext tasks that are more aligned with the target tasks, enabling better transfer learning capabilities.

Furthermore, the combination of self-supervised learning with other learning paradigms, such as reinforcement learning or semi-supervised learning, holds great potential for enhancing the capabilities of AI systems in real-world applications.

Overall, addressing the limitations of self-supervised learning and pushing the boundaries of its applications require continued collaboration between researchers, engineers, and domain experts. By overcoming these challenges and exploring innovative approaches, self-supervised learning has the potential to significantly advance the field of AI and machine learning.

Conclusion

Self-supervised learning models have emerged as a powerful approach in the field of AI and machine learning. By training on unlabeled data and generating their own labels, these models address the scarcity and cost of labeled data. They greatly reduce the need for costly and time-consuming manual data labeling and allow for the utilization of large amounts of unstructured, unlabeled data.

Self-supervised learning has shown promising results in various applications, particularly in computer vision and natural language processing. These models have demonstrated their ability to understand complex patterns and extract meaningful representations from raw data. They have been successfully applied in tasks such as image classification, object detection, sentiment analysis, and language generation.

However, there are still limitations that need to be addressed in order to fully leverage the potential of self-supervised learning. One challenge is the computational intensity of training these models, which requires significant computational resources. Future research and development will focus on optimizing training methods and reducing computational requirements.

Another aspect to consider is the design of pretext tasks. The choice of task and the quality of the generated labels can have a significant impact on the performance of the model on downstream tasks. Future studies will explore new pretext tasks and data augmentation techniques to improve the overall effectiveness of self-supervised learning.

Overall, self-supervised learning has revolutionized the field of AI and machine learning by unlocking the power of unlabeled data. It holds tremendous potential for developing more intelligent and adaptive systems. With continued research and development, self-supervised learning will continue to drive advancements in AI and machine learning, enabling us to tackle complex real-world problems and unlock new possibilities.

FAQ

What is self-supervised learning?

Self-supervised learning is a learning approach where models train on unlabeled data using automatic label generation. This method reduces the need for human-labeled data, which is expensive and often scarce.

Why do we need self-supervised learning?

Self-supervised learning allows models to train on unlabeled data, leveraging the abundance of unstructured, unlabeled data available from sources like the internet or company operations. By automatically generating labels, self-supervised learning reduces the need for human annotation and the cost associated with it.

What are the advantages and applications of self-supervised learning?

Self-supervised learning eliminates the need for human annotations, enabling computers to label, categorize, and analyze data on their own. It allows for the utilization of large amounts of data available in the wild. Applications of self-supervised learning include colorization of grayscale images, context filling, video motion prediction, healthcare applications, autonomous driving, and chatbots.

How does self-supervised learning differ from supervised and unsupervised learning?

In supervised learning, models are trained on labeled data, which requires manual annotation. Self-supervised learning generates labels automatically, eliminating the need for human intervention. Unsupervised learning focuses on clustering, grouping, and dimensionality reduction, while self-supervised learning trains models on prediction tasks (classification or regression) whose labels are derived from the data itself.

What are the limitations and future directions of self-supervised learning?

One limitation is the computational intensity of self-supervised training methods, which can be more demanding than supervised learning because of the larger datasets and models involved. Another limitation is the design of pretext tasks, which can impact the model’s performance on specific applications. Continued research is needed to address these limitations and further improve the effectiveness of self-supervised learning.