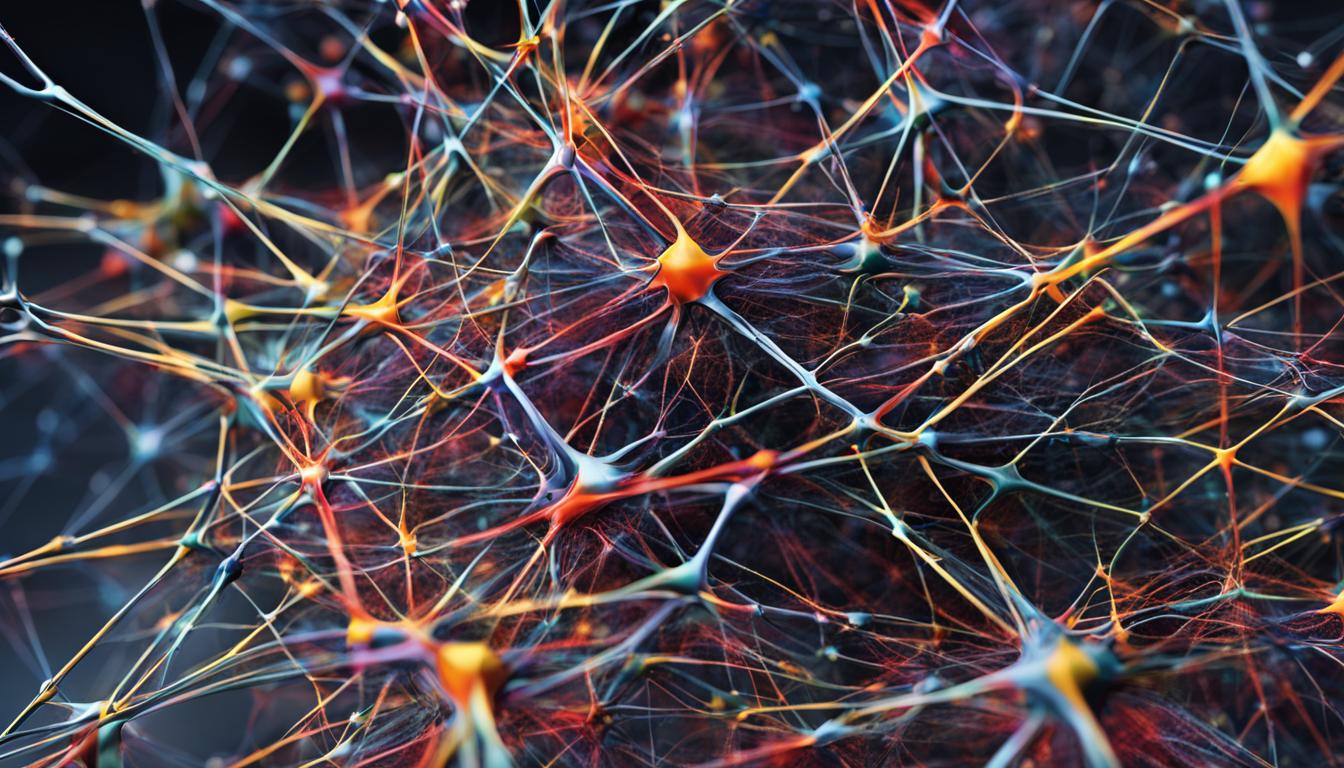

Spiking Neural Networks (SNNs) are an approach to artificial intelligence inspired by the structure and functioning of the human brain. Unlike traditional artificial neural networks, whose neurons exchange continuous values, SNNs communicate using discrete, all-or-nothing events called spikes, modeled on the electrical pulses of biological neurons. This event-driven communication offers several potential advantages, including low power consumption, real-time processing, and the ability to encode information in the timing and frequency of spikes. Many researchers see SNNs as a promising direction for the next generation of AI, because they offer a more energy-efficient and biologically realistic model of neural computation.

The Architecture of Spiking Neural Networks

Spiking Neural Networks (SNNs) are complex systems composed of multiple components that work together to process information and generate outputs. Understanding the architecture of SNNs is key to harnessing their full potential in artificial intelligence applications.

Input Layer

The input layer is the first component of an SNN and receives external stimuli or data. Each neuron in the input layer typically corresponds to a specific feature or dimension of the input (for example, one pixel of an image) and converts that feature's value into a train of spikes. Because all input neurons operate in parallel, the network receives every feature of the input at the same time.

Hidden Layers

SNNs may contain one or more hidden layers between the input and output layers. These layers process the input signals and extract meaningful patterns. Each hidden neuron receives spikes from the previous layer through weighted connections, integrates them into an internal state (its membrane potential), and emits a spike of its own when that state crosses a threshold, instead of applying a continuous activation function as a conventional network does.

Spiking Neurons

Unlike traditional artificial neurons, which output continuous activation values, spiking neurons in SNNs communicate through discrete spikes. A spiking neuron accumulates its weighted inputs over time in a membrane potential and emits a spike when that potential crosses a threshold, after which the potential is typically reset. The timing and frequency of spikes carry information in SNNs, enabling more nuanced encoding and decoding of data.
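To make the threshold behavior concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, one widely used spiking-neuron model. The article does not name a specific model, so the LIF choice, the discrete time step, the leak factor `beta`, and the threshold value are all assumptions for illustration.

```python
def simulate_lif(inputs, beta=0.9, threshold=1.0):
    """Simulate one discrete-time leaky integrate-and-fire (LIF) neuron.

    inputs: input current at each time step.
    beta: fraction of the membrane potential kept from one step to the next.
    threshold: potential at which the neuron fires.
    Returns (spikes, potentials); spikes[t] is 1 if the neuron fired at step t,
    and potentials[t] is the membrane potential after any reset at step t.
    """
    v = 0.0
    spikes, potentials = [], []
    for current in inputs:
        v = beta * v + current  # leak part of the old potential, add the new input
        if v >= threshold:
            spikes.append(1)
            v = 0.0  # reset the potential after firing
        else:
            spikes.append(0)
        potentials.append(v)
    return spikes, potentials


# A constant, weak input needs several steps to charge the neuron to threshold.
spikes, _ = simulate_lif([0.3] * 10)
print(spikes)  # [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
```

The leak means a weak input has to persist for several steps before the neuron fires, so the output spike rate reflects both the strength and the duration of the input.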

Synaptic Connections

Synaptic connections play a crucial role in the transmission of spikes between neurons in an SNN. Each connection between two neurons has a weight associated with it, which determines the influence of the presynaptic (input) neuron on the postsynaptic (output) neuron. These synaptic weights can be adjusted through learning mechanisms to optimize the network’s performance.
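A minimal sketch of how weighted synapses carry spikes from one layer to the next, assuming a dense weight matrix in which `weights[i][j]` is the synapse from presynaptic neuron `i` to postsynaptic neuron `j` (the layout and values are hypothetical). Only neurons that actually spiked contribute, and a negative weight acts as an inhibitory synapse.

```python
def synaptic_input(pre_spikes, weights):
    """Return the input current delivered to each postsynaptic neuron.

    pre_spikes: list of 0/1 values, one per presynaptic neuron.
    weights: weights[i][j] is the synapse from presynaptic i to postsynaptic j.
    """
    n_post = len(weights[0])
    current = [0.0] * n_post
    for i, fired in enumerate(pre_spikes):
        if fired:  # silent presynaptic neurons contribute nothing and are skipped
            for j in range(n_post):
                current[j] += weights[i][j]
    return current


weights = [
    [0.5, -0.2],  # presynaptic 0: excitatory onto post 0, inhibitory onto post 1
    [0.1, 0.4],
    [0.3, 0.3],
]
print(synaptic_input([1, 0, 1], weights))  # roughly [0.8, 0.1]
```

Learning rules such as STDP change the entries of `weights`, which changes how strongly each presynaptic spike pushes its targets toward threshold.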

Output Layer

The output layer is the final component of an SNN and produces the network’s ultimate output based on the activity of neurons within this layer. The output can be in various forms, such as classifications, predictions, or control signals. The output layer represents the culmination of information processing and decision-making in an SNN.

To summarize, the architecture of Spiking Neural Networks consists of an input layer that receives external stimuli, hidden layers that process information, spiking neurons that communicate through discrete spikes, synaptic connections that transmit these spikes, and an output layer that produces the final output of the network.

A summary of the components of a Spiking Neural Network:

| Component | Description |
|---|---|
| Input Layer | Receives external stimuli or data. |
| Hidden Layers | Process input spikes through weighted connections and threshold-based spiking neurons. |
| Spiking Neurons | Communicate through discrete spikes. |
| Synaptic Connections | Transmit spikes between neurons with adjustable weights. |
| Output Layer | Produces the final output based on neuron activity. |
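Putting these components together, the following is a minimal sketch of a forward pass through a tiny SNN with one hidden layer. It assumes LIF neurons, binary input spike trains, no synaptic delay, and hand-picked weights; all names and values are hypothetical, and no learning takes place.

```python
def run_snn(input_trains, w_in_hidden, w_hidden_out, beta=0.9, threshold=1.0):
    """Run a two-layer spiking network and return output spike counts.

    input_trains[t][i] is 1 if input neuron i spikes at step t.
    w_in_hidden[i][h] and w_hidden_out[h][o] are synaptic weights.
    Hidden and output neurons are leaky integrate-and-fire units.
    """
    v_hidden = [0.0] * len(w_hidden_out)
    v_out = [0.0] * len(w_hidden_out[0])
    out_counts = [0] * len(v_out)

    def step(v, pre_spikes, weights):
        # For each neuron: leak, add weights of presynaptic neurons that spiked,
        # then fire and reset if the potential reaches threshold.
        spikes = []
        for j in range(len(v)):
            current = sum(w[j] for w, s in zip(weights, pre_spikes) if s)
            v[j] = beta * v[j] + current
            if v[j] >= threshold:
                spikes.append(1)
                v[j] = 0.0
            else:
                spikes.append(0)
        return spikes

    for inputs in input_trains:
        hidden_spikes = step(v_hidden, inputs, w_in_hidden)
        out_spikes = step(v_out, hidden_spikes, w_hidden_out)
        out_counts = [c + s for c, s in zip(out_counts, out_spikes)]
    return out_counts


# Input 0 spikes at every step; input 1 stays silent.
w_ih = [[1.2, 0.0], [0.0, 1.2]]
w_ho = [[1.5, 0.0], [0.0, 1.5]]
print(run_snn([[1, 0]] * 5, w_ih, w_ho))  # [5, 0]
```

Activity flows input layer, then hidden layer, then output layer at every time step, and the output is read out here as a spike count per output neuron.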

Working Principle of Spiking Neural Networks

In Spiking Neural Networks (SNNs), the working principle revolves around the generation and propagation of spikes. Each neuron in an SNN integrates incoming spikes and, if the combined signal surpasses a certain threshold, generates its own spike. This spike generation process is crucial for information processing in SNNs.

The timing and frequency of spikes in SNNs encode information, providing a richer representation than a single activation value. Spike encoding is the conversion of continuous input data into spike trains that the network can process. The choice of encoding depends on the task: in rate coding, a stronger input produces more frequent spikes, while in temporal (latency) coding, a stronger input produces an earlier spike.
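Two common encoding schemes can be sketched as follows, assuming input values normalized to the range [0, 1] and a fixed number of discrete time steps. Rate coding here fires with probability equal to the value at each step (a Bernoulli approximation of Poisson spiking); latency coding emits a single spike whose time shrinks linearly as the value grows. Both the linear mapping and the function names are illustrative choices.

```python
import random


def rate_encode(value, n_steps, rng=None):
    """Rate coding: fire with probability `value` at every step (0 <= value <= 1)."""
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be in [0, 1]")
    if rng is None:
        rng = random.Random()
    return [1 if rng.random() < value else 0 for _ in range(n_steps)]


def latency_encode(value, n_steps):
    """Latency coding: emit one spike, earlier for stronger inputs.

    value = 1 fires at step 0, values near 0 fire near the last step,
    and value = 0 does not fire at all.
    """
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be in [0, 1]")
    train = [0] * n_steps
    if value > 0:
        train[round((1.0 - value) * (n_steps - 1))] = 1
    return train


# A bright pixel (0.8) spikes often under rate coding and early under latency coding.
print(rate_encode(0.8, 10, random.Random(0)))
print(latency_encode(0.8, 10))  # [0, 0, 1, 0, 0, 0, 0, 0, 0, 0]
```

Rate coding is robust to noise but needs many time steps to convey a value precisely; latency coding conveys the value with a single spike, which suits low-latency settings.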

Spike decoding is the reverse process: interpreting output spike patterns to derive a meaningful output or prediction. A common approach is to count the spikes emitted by each output neuron and select the most active one; another is to select the output neuron that fires first.
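A minimal sketch of two decoding strategies for a classification-style output layer, assuming one output neuron per class and 0/1 spike trains of equal length. Spike-count decoding picks the most active neuron; first-spike decoding picks the earliest one. The function names are hypothetical.

```python
def decode_by_count(output_trains):
    """Rate decoding: index of the output neuron with the most spikes (ties -> lowest index)."""
    counts = [sum(train) for train in output_trains]
    return max(range(len(counts)), key=lambda o: counts[o])


def decode_by_first_spike(output_trains):
    """Latency decoding: index of the output neuron that fires first, or None if none fire."""
    best, best_time = None, None
    for o, train in enumerate(output_trains):
        if 1 in train:
            t = train.index(1)  # time of this neuron's first spike
            if best_time is None or t < best_time:
                best, best_time = o, t
    return best


trains = [
    [0, 1, 1, 1, 0],  # neuron 0: most spikes, but starts at t=1
    [1, 0, 0, 0, 0],  # neuron 1: fires first, only once
    [0, 0, 1, 0, 0],
]
print(decode_by_count(trains))        # 0
print(decode_by_first_spike(trains))  # 1
```

The two readouts can disagree on the same spike trains, so the decoding rule should match the encoding and training scheme used by the network.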

SNNs also employ learning mechanisms to optimize network performance. One such mechanism is Spike-Timing-Dependent Plasticity (STDP), which adjusts synaptic weights based on the precise timing of pre- and postsynaptic spikes: a synapse is strengthened when the presynaptic spike arrives shortly before the postsynaptic spike (suggesting it helped cause it) and weakened when it arrives shortly after. This lets the network learn from temporal correlations between spikes without labeled data.
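The timing rule can be sketched with the classic pair-based STDP window, in which the weight change decays exponentially with the gap between the pre- and postsynaptic spikes. The amplitudes `a_plus`/`a_minus`, the time constants, the all-to-all pairing of spikes, and the clipping to [0, 1] are assumptions chosen for illustration, not values from any specific model.

```python
import math


def stdp_delta(t_pre, t_post, a_plus=0.01, a_minus=0.012,
               tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:   # pre fired before post: causal pairing, potentiate
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:   # post fired before pre: anti-causal pairing, depress
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0   # simultaneous spikes: no change in this sketch


def apply_stdp(w, pre_times, post_times, w_min=0.0, w_max=1.0, **params):
    """Sum STDP updates over all spike pairs and clip the weight to [w_min, w_max]."""
    total = sum(stdp_delta(tp, tq, **params)
                for tp in pre_times for tq in post_times)
    return min(w_max, max(w_min, w + total))


print(apply_stdp(0.5, pre_times=[10.0], post_times=[15.0]))  # slightly above 0.5
print(apply_stdp(0.5, pre_times=[15.0], post_times=[10.0]))  # slightly below 0.5
```

Because the update depends only on the spike times of the two neurons a synapse connects, the rule is local and can run online as spikes occur.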

Overall, the working principle of Spiking Neural Networks involves spike generation, spike encoding, spike decoding, and learning mechanisms such as STDP. Together, these components let SNNs process information in a biologically inspired manner across a range of applications.

Real-Time Use Cases of Spiking Neural Networks

Spiking Neural Networks (SNNs) are being explored for a range of real-time applications. Their event-driven architecture has opened up new possibilities in robotics, sensor processing, brain-computer interfaces, and pattern recognition.

1. Robotics

SNNs can support fast, low-latency control in robotic systems. Because their communication is event-driven, they can process sensor input as it arrives, helping robots react quickly to changing environments. SNNs are being investigated for object manipulation, autonomous navigation, and motor control, where low latency and low power consumption are especially valuable.

2. Sensor Processing

SNNs are well suited to event-driven data from sensors such as LiDAR or neuromorphic (event-based) cameras, which report changes as they happen rather than as full frames. Because SNNs process information through the timing and frequency of spikes, they can analyze such sensory streams efficiently. This makes them attractive for applications that require real-time sensor processing, such as environmental monitoring, industrial automation, and smart cities.
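To illustrate what event-driven sensor data looks like, here is a simplified, frame-based emulation of a neuromorphic camera. It assumes grayscale frames as nested lists and a fixed log-intensity contrast threshold. Real event cameras detect changes asynchronously per pixel in hardware, so this sketch only approximates their output; the function name and parameters are hypothetical.

```python
import math


def frames_to_events(prev_frame, next_frame, t, contrast=0.2, eps=1e-3):
    """Emit event-camera-style events from two grayscale frames (values >= 0).

    A pixel produces an ON (+1) or OFF (-1) event when its log intensity
    changes by at least `contrast`; unchanged pixels produce nothing.
    Returns a list of (x, y, t, polarity) tuples.
    """
    events = []
    for y, (row_a, row_b) in enumerate(zip(prev_frame, next_frame)):
        for x, (a, b) in enumerate(zip(row_a, row_b)):
            delta = math.log(b + eps) - math.log(a + eps)  # eps avoids log(0)
            if delta >= contrast:
                events.append((x, y, t, +1))
            elif delta <= -contrast:
                events.append((x, y, t, -1))
    return events


prev = [[0.5, 0.5], [0.5, 0.5]]
nxt = [[0.5, 1.0], [0.2, 0.5]]
print(frames_to_events(prev, nxt, t=5))  # [(1, 0, 5, 1), (0, 1, 5, -1)]
```

Only the two pixels that changed produce events, and each event can be fed directly to an SNN as a spike on the input neuron for that pixel and polarity.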

3. Brain-Computer Interfaces

Spiking Neural Networks offer a promising avenue for controlling devices directly with brain signals. Neural activity recorded by implanted electrodes consists of spikes, so SNNs can process it natively, which could let individuals control prosthetic limbs, computer interfaces, and assistive technologies by thought alone. This has profound implications for people with motor disabilities and could significantly improve their quality of life.

4. Pattern Recognition

Pattern recognition tasks, such as image and speech recognition, can benefit from the temporal coding capabilities of SNNs. Encoding information in the timing and frequency of spikes lets the network represent how a signal changes over time, which is useful for tasks such as facial recognition, speech transcription, and anomaly detection.

Spiking Neural Networks are driving research in real-time applications across robotics, sensor processing, brain-computer interfaces, and pattern recognition. Their distinctive computational principles make them a useful tool in many domains with demanding real-world constraints.

How to Use Spiking Neural Networks

Building and simulating Spiking Neural Networks is made easier with various libraries and frameworks. Researchers and developers can leverage these tools to harness the potential of SNNs in their applications. Let’s explore some of the key libraries and frameworks available for building and simulating SNNs.

Brian 2

Brian 2 is a free, open-source Python simulator for spiking neural networks. Models are defined by writing the neuron and synapse equations directly, which makes it flexible enough for complex and unconventional SNN models. It offers detailed documentation and tutorials, making it easier for users to understand and implement these networks and to experiment with advanced SNN architectures.

Nengo

Nengo is another popular Python library for building SNNs, with a focus on large-scale, biologically plausible models; it is based on the Neural Engineering Framework. It is approachable enough for beginners building simple SNNs, yet it also provides tools for online learning and control, enabling more sophisticated networks that mimic the behavior of real biological neural systems.

SpiNNaker

SpiNNaker takes a different approach: rather than a software library, it is a massively parallel hardware platform for running SNNs, built from a large number of ARM processor cores and developed at the University of Manchester. Its architecture is designed for the parallelism inherent in SNNs, allowing large-scale models to run in real time. Models are typically described with the PyNN interface, and the platform is suited to deploying large-scale SNNs in real-world scenarios such as robotics and sensor processing.

snnTorch

snnTorch is a PyTorch-based library for simulating and training SNNs. Because it builds on PyTorch, it runs on GPUs and can train SNNs with gradient-based methods (using surrogate gradients to work around the non-differentiable spike), while giving researchers access to PyTorch's extensive ecosystem.

Together, Brian 2, Nengo, snnTorch, and the SpiNNaker hardware platform provide essential tools for researchers and developers working with Spiking Neural Networks, in domains ranging from neuroscience research to real-time artificial intelligence applications.

Pros and Cons of Spiking Neural Networks

Spiking Neural Networks (SNNs) offer several advantages over traditional neural networks.

Low power consumption

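The event-driven nature of SNNs results in low power consumption, making them well suited to resource-constrained devices. A spiking neuron triggers computation only when it emits a spike, so when activity is sparse, far fewer operations are needed than in a conventional network that processes every value at every step. On dedicated neuromorphic hardware, this can translate into lower energy use and longer battery life.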
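A rough back-of-the-envelope sketch of why sparsity matters, counting operations for one fully connected layer. It assumes a dense ANN layer performs one multiply-accumulate per weight, while an event-driven SNN layer performs one addition per weight only for inputs that spiked. The layer sizes and the 2% activity level are hypothetical, and real energy use also depends on memory access and the hardware used.

```python
def dense_macs(n_in, n_out):
    """Multiply-accumulate operations for one evaluation of a dense ANN layer."""
    return n_in * n_out


def event_driven_ops(spike_trains, n_out):
    """Accumulate operations for an event-driven SNN layer over all time steps.

    Each input spike triggers one weight addition per postsynaptic neuron;
    time steps without spikes cost nothing.
    spike_trains[t][i] is 1 if input neuron i spikes at step t.
    """
    total_spikes = sum(sum(step) for step in spike_trains)
    return total_spikes * n_out


n_in, n_out, n_steps = 1000, 100, 10
# Hypothetical activity: 20 of 1000 inputs (2%) spike at each of 10 steps.
trains = [[1] * 20 + [0] * 980 for _ in range(n_steps)]
print(dense_macs(n_in, n_out))          # 100000
print(event_driven_ops(trains, n_out))  # 20000
```

The advantage shrinks if activity is dense or many time steps are needed, which is why low spike rates and short simulation windows are important for energy-efficient SNNs.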

Real-time processing

SNNs are well suited to real-time processing, allowing fast, dynamic responses to stimuli. Because they process input events as they arrive instead of waiting for complete frames or batches, SNNs can support low-latency decision-making in time-sensitive applications such as robotics and autonomous systems.

Bio-inspired learning

SNNs can use bio-inspired learning mechanisms, such as Spike-Timing-Dependent Plasticity, which are modeled on synaptic plasticity observed in biological brains. This enables adaptable, online learning, helping the network recognize patterns and adjust its behavior as new input arrives.

Scalability

However, scalability remains a challenge for SNNs. Spikes are discrete, non-differentiable events, so the standard backpropagation used for conventional networks cannot be applied directly, and simulating many time steps adds computational cost. Further research is needed to scale SNNs to larger datasets and more complex tasks, and this will be crucial for their widespread adoption.

“Spiking Neural Networks offer benefits such as low power consumption and real-time processing, making them well-suited for resource-constrained devices and time-sensitive applications. However, scalability is a key consideration that requires ongoing research and development.” – Dr. Sarah Cohen, AI Researcher

In summary, Spiking Neural Networks offer significant advantages in terms of low power consumption, real-time processing, and bio-inspired learning. While scalability remains a challenge, researchers are actively working to address this limitation and unlock the full potential of SNNs for various applications.

See Table 6.1 for a detailed comparison of the pros and cons of Spiking Neural Networks:

| Pros | Cons |
|---|---|
| Low power consumption | Scalability challenges |
| Real-time processing | |
| Bio-inspired learning | |

Future Improvements in Spiking Neural Networks

Ongoing research in the field of Spiking Neural Networks (SNNs) aims to overcome some of the existing challenges and enhance their capabilities. Advancements in scalability, explainability, and hardware development are key areas of focus for researchers and developers.

Scalability

One of the primary goals in SNN research is to improve scalability. New training algorithms and techniques, such as surrogate-gradient training and the conversion of trained conventional networks into SNNs, are being developed to handle larger datasets and more complex tasks. Addressing the scalability challenge would let SNNs be applied to a wider range of applications, including large-scale data processing.

Explainability

As SNNs become more complex, the need for explainability increases. Researchers are developing techniques to make SNNs more interpretable and transparent, allowing users to understand the decisions made by the network. By improving explainability, SNNs can gain more trust and acceptance in critical domains such as healthcare and finance.

Hardware Development

Specialized hardware for SNNs is advancing rapidly. Neuromorphic chips and platforms, such as Intel's Loihi, IBM's TrueNorth, and SpiNNaker, are designed specifically to run SNNs and enable faster, more energy-efficient computation.

Overall, the future of Spiking Neural Networks looks promising, with ongoing research focused on improving scalability, enhancing explainability, and advancing dedicated hardware. As these advancements continue, SNNs could significantly change how AI systems are built and help pave the way for more brain-like cognitive systems.

Conclusion

Spiking Neural Networks (SNNs) offer a promising approach to artificial intelligence that is closely inspired by the structure and functioning of the human brain. By using event-driven communication and encoding information in the timing and frequency of spikes, SNNs provide several advantages over traditional neural networks. One key benefit is their low power consumption, which makes them attractive for resource-constrained devices. SNNs are also well suited to real-time processing, enabling fast, dynamic responses to stimuli, which is crucial in many AI applications.

Encoding information in spike timing, not just in spike counts, also gives SNNs a natural way to represent temporal patterns, similar to how the human brain processes and encodes information. However, challenges in scalability and explainability still need to be addressed. Further advances in learning algorithms and hardware optimization are needed to make SNNs more scalable and easier for users to interpret.

Despite these challenges, ongoing research is making SNNs an increasingly important part of the future of artificial intelligence. As researchers develop new learning algorithms and optimize hardware, SNNs may bring AI closer to brain-like cognition. Because they mimic aspects of the brain's structure and functioning, SNNs hold great promise for advancing our understanding of artificial intelligence and for applications across many domains.

FAQ

What are Spiking Neural Networks?

Spiking Neural Networks (SNNs) are a cutting-edge approach to artificial intelligence that mimic the structure and functioning of the human brain. Unlike traditional artificial neural networks, SNNs communicate using discrete electrical pulses called spikes.

What is the architecture of Spiking Neural Networks?

Spiking Neural Networks consist of an input layer, hidden layers, and an output layer, built from spiking neurons linked by weighted synaptic connections. The input layer receives external stimuli, the hidden layers process the input spikes through weighted connections and threshold-based spiking neurons, and the output layer produces the network's final output based on the activity of its neurons.

How do Spiking Neural Networks work?

The working principle of Spiking Neural Networks revolves around the generation, propagation, and encoding of spikes. Each neuron integrates incoming spikes and generates its own spike if the combined signal surpasses a certain threshold. The timing and frequency of spikes encode information, offering a richer representation than simple activation levels. SNNs utilize various learning mechanisms to adjust synaptic weights based on spike timing and optimize network performance.

What are the real-time use cases of Spiking Neural Networks?

Spiking Neural Networks are being applied in robotics, sensor processing, brain-computer interfaces, and pattern recognition. In robotics, SNNs support fast, low-latency control. In sensor processing, they efficiently handle event-driven data from sensors such as LiDAR or neuromorphic cameras. In brain-computer interfaces, they offer a route to intuitive device control using brain signals. In pattern recognition, they use temporal coding to capture time-varying structure in tasks like image and speech recognition.

How can Spiking Neural Networks be used?

Building and simulating Spiking Neural Networks is made easier by libraries such as Brian 2, Nengo, and snnTorch, and by dedicated hardware platforms such as SpiNNaker. These tools enable researchers and developers to harness the potential of SNNs in their applications.

What are the pros and cons of Spiking Neural Networks?

Spiking Neural Networks offer advantages such as low power consumption, real-time processing, and bio-inspired learning mechanisms. However, scalability remains a challenge for SNNs.

What improvements are being made in Spiking Neural Networks?

Ongoing research aims to address challenges in scalability and explainability. Researchers are developing more scalable training methods, techniques for interpreting SNN decisions, and dedicated neuromorphic hardware, so that SNNs can handle larger datasets and more complex tasks while becoming more interpretable.

What is the future of Spiking Neural Networks?

Spiking Neural Networks offer a promising approach to artificial intelligence inspired by the structure and functioning of the human brain. With ongoing research and improvements, SNNs are becoming a key player in the future of AI, with the potential to bring artificial systems closer to human-like cognition.