At the heart of many AI models lies the concept of the “forward function” in feedforward neural networks. This fundamental process, also known as forward propagation or the forward pass, is how a neural network turns input data into predictions or classifications. This article defines the forward function, explains how it operates, and discusses its significance in AI and machine learning. The forward function transforms input data through weighted connections, biases, and activation functions. Once the weights have been trained, it is what produces predictions on new, unseen data.

The Core Mechanics of the Forward Function in Feedforward Neural Networks

The forward function in feedforward neural networks represents the pathway through which data flows from the input layer, through the hidden layers, to the output layer. This unidirectional flow allows the network to process the data and generate predictions or classifications.

Operationally, the forward function feeds the input data into the network, transforms it in each layer through weighted connections, biases, and activation functions, and produces the output. The same computation is used in two places. During training, its output is compared with the target to measure the error. At inference time, it applies the learned weights to new data. Its efficiency therefore directly affects how quickly a network can be trained and queried.
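The per-layer computation described above (weighted sum, plus bias, through an activation) can be sketched for a single fully connected layer. This is a minimal illustration in plain Python, assuming ReLU as the activation. `dense_forward` is a hypothetical helper, not a library function, and the weights, biases, and inputs are made-up values.

```python
def relu(z):
    """Rectified linear unit: max(0, z)."""
    return max(0.0, z)

def dense_forward(W, b, x, activation):
    """Forward step for one fully connected layer (hypothetical helper).

    W is a list of rows (one row of weights per neuron),
    b holds one bias per neuron, and x is the input vector.
    Each neuron computes activation(sum_j W[i][j] * x[j] + b[i]).
    """
    outputs = []
    for row, bias in zip(W, b):
        z = sum(w * xj for w, xj in zip(row, x)) + bias  # weighted sum plus bias
        outputs.append(activation(z))                     # nonlinearity
    return outputs

# Hypothetical example: 3 inputs feeding a layer of 2 neurons.
W = [[0.5, -1.0, 2.0],
     [1.0,  0.0, -0.5]]
b = [0.1, -0.2]
x = [1.0, 2.0, 3.0]
print(dense_forward(W, b, x, relu))
```

The second neuron's weighted sum is negative, so ReLU outputs 0 for it. This is how activation functions let a layer switch parts of its output on and off depending on the input.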

By understanding the core mechanics of the forward function, we gain insights into the inner workings of feedforward neural networks and their ability to process information. Let’s dive deeper into each component of the forward function:

Input Layer

The input layer is the first layer of the neural network and receives the raw data or feature inputs. It performs no computation of its own. Its purpose is to pass the input values on to the subsequent layers. In a fully connected (dense) network, each input is connected to every neuron in the next layer through weighted connections.
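Every input is connected to every neuron in the next layer, so the number of weights grows with the product of neighbouring layer sizes. The following is a small sketch that counts weights and biases for an assumed layer layout. `dense_param_count` is a hypothetical helper, and the layer sizes are illustrative.

```python
def dense_param_count(layer_sizes):
    """Count weights and biases in a fully connected feedforward network.

    layer_sizes lists the number of units per layer, input layer first.
    Because every unit in one layer connects to every neuron in the next,
    going from a layer of size n_in to a layer of size n_out needs an
    n_out x n_in weight matrix plus n_out biases.
    The input layer itself has no weights or biases.
    """
    weights = 0
    biases = 0
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        weights += n_in * n_out
        biases += n_out
    return weights, biases

# Hypothetical network: 3 inputs, two hidden layers of 4 neurons, 2 outputs.
print(dense_param_count([3, 4, 4, 2]))  # (36, 10)
```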

Hidden Layers

The hidden layers are intermediate layers located between the input and output layers. They play a critical role in transforming the input data into more useful representations. Each hidden layer consists of multiple neurons. Each neuron computes a weighted sum of the previous layer's outputs, adds a bias, and passes the result through an activation function (such as ReLU, sigmoid, or tanh) to produce its output.
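The activation function is what makes stacking hidden layers worthwhile. Without it, each layer is an affine map (Wx + b). A stack of affine maps is itself a single affine map, so extra hidden layers would add no expressive power. The sketch below checks this numerically, using hypothetical 2x2 weights and small helper functions written for the illustration.

```python
def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]

def matmul(A, B):
    """Multiply matrices A (m x k) and B (k x n), both given as lists of rows."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def add(u, v):
    """Element-wise vector addition."""
    return [a + b for a, b in zip(u, v)]

# Two hypothetical hidden layers with NO activation function (identity).
W1, b1 = [[1.0, 2.0], [0.0, -1.0]], [0.5, 1.0]
W2, b2 = [[2.0, 1.0], [-1.0, 3.0]], [0.0, -2.0]
x = [3.0, -1.0]

two_layers = add(matvec(W2, add(matvec(W1, x), b1)), b2)

# The same mapping as one linear layer: W = W2 W1, b = W2 b1 + b2.
W = matmul(W2, W1)
b = add(matvec(W2, b1), b2)
one_layer = add(matvec(W, x), b)

print(two_layers, one_layer)  # identical
```

Inserting a nonlinear activation such as ReLU between the two layers breaks this equivalence. That is why hidden layers apply one.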

Output Layer

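The output layer is the final layer of the neural network and produces the network’s outputs. The number of neurons in the output layer depends on the problem. Binary classification typically uses a single output neuron, while multiclass classification uses one neuron per class. The output layer’s activation function determines the format of the predictions. A sigmoid yields a probability for binary classification, a softmax yields a probability distribution over classes, and an identity (linear) output is common for regression.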
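The two classification cases differ only in how the output layer's raw scores are turned into probabilities. The following is a minimal sketch of the standard sigmoid and softmax functions. The class scores are hypothetical.

```python
import math

def sigmoid(z):
    """Squash a single score into (0, 1); common for binary classification."""
    return 1.0 / (1.0 + math.exp(-z))

def softmax(scores):
    """Turn one score per class into probabilities that sum to 1.

    Subtracting the max score first avoids overflow in math.exp
    without changing the result.
    """
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Binary classification: one output neuron.
print(sigmoid(0.0))  # 0.5

# Multiclass classification: one output neuron per class (hypothetical scores).
probs = softmax([2.0, 1.0, 0.1])
print(probs, sum(probs))
```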

Through the forward function, feedforward neural networks use the connections between their layers to process data and make predictions. The input data passes through each layer in turn, undergoing transformations until the final output is produced. Stacking layers with nonlinear activation functions allows a trained network to represent complex patterns and relationships. This makes it a powerful tool in many domains.

“The forward function in feedforward neural networks allows for seamless data processing from input to output, enabling accurate predictions and classifications.”

– Dr. Emily Johnson, AI Researcher

Feedforward neural networks have wide-ranging applications due to their ability to handle complex tasks such as pattern recognition, image and speech recognition, and natural language processing. These networks have revolutionized fields like computer vision, where they analyze visual data and detect objects, faces, and more. Additionally, feedforward neural networks have paved the way for advancements in natural language processing, enabling machines to understand and generate human-like text.

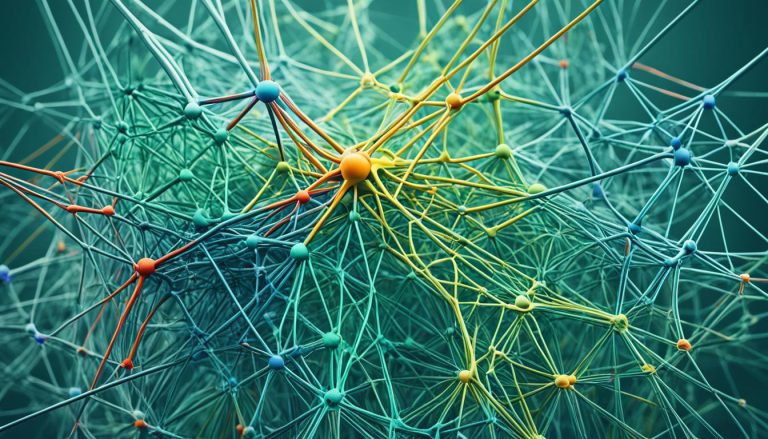

To visualize the flow of the forward function in feedforward neural networks, refer to the diagram below:

In the diagram, the input layer (Layer 1) receives the initial data, which then flows through the hidden layers (Layer 2 and Layer 3) before reaching the output layer (Layer 4).

As the data passes through each layer, it is transformed step by step into representations that are increasingly useful for the task. The forward function defines this flow. Training adjusts the weights so that the flow produces accurate predictions, and the trained network then applies the same flow to unseen data.
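The four-layer flow in the diagram can be sketched end to end by chaining one layer into the next. The layer sizes (2 inputs, 3 and 2 hidden neurons, 1 output) and all weights below are assumptions made for illustration; the diagram's actual sizes may differ. `dense` and `forward` are hypothetical helpers.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(W, b, x, activation):
    """One fully connected layer: activation(W x + b), computed per neuron."""
    return [activation(sum(w * xj for w, xj in zip(row, x)) + bias)
            for row, bias in zip(W, b)]

def forward(x, layers):
    """Run x through each (W, b, activation) layer in order; nothing loops back."""
    a = x
    for W, b, activation in layers:
        a = dense(W, b, a, activation)
    return a

# Hypothetical weights for the four-layer layout:
# Layer 1 (input, 2 features) -> Layer 2 (3 hidden) -> Layer 3 (2 hidden) -> Layer 4 (1 output).
layers = [
    ([[0.2, -0.4], [0.7, 0.1], [-0.5, 0.3]], [0.0, 0.1, 0.2], relu),  # Layer 2
    ([[0.6, -0.1, 0.4], [-0.3, 0.8, 0.5]], [0.05, -0.05], relu),     # Layer 3
    ([[1.0, -1.2]], [0.0], sigmoid),                                 # Layer 4
]

y = forward([1.0, 0.5], layers)
print(y)  # a single value in (0, 1)
```

With untrained, made-up weights the output has no meaning. Only training turns this same flow into useful predictions.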

Feedforward vs. Recurrent Neural Networks: Understanding the Distinction

While the forward function appears in many neural network architectures, it is especially central to feedforward neural networks. Recurrent neural networks contain loops, so earlier outputs can feed back in and influence later computations. Feedforward networks, by contrast, maintain a strict unidirectional flow of data from the input layer through the hidden layers to the output layer, without any cycles. This makes feedforward networks well suited to tasks that don’t require feedback loops.

Recurrent neural networks, on the other hand, utilize cycles, enabling them to process sequential data or data that has a temporal aspect. They can store information in the form of hidden states and use it to influence future predictions. The cycles in recurrent networks allow them to capture dependencies and patterns in the data over time, making them suitable for tasks such as speech recognition, video analysis, and natural language processing.
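The hidden-state mechanism can be illustrated with a minimal scalar recurrent layer using the common update h_t = tanh(w_x * x_t + w_h * h_{t-1} + b). The weights are hypothetical, and real recurrent layers use vectors and matrices rather than scalars. The dependence on h_{t-1} is the cycle that a feedforward network lacks.

```python
import math

def rnn_forward(sequence, w_x, w_h, b, h0=0.0):
    """Minimal scalar recurrent layer (hypothetical weights).

    At each time step the new hidden state depends on the current input
    AND the previous hidden state: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).
    """
    h = h0
    states = []
    for x_t in sequence:
        h = math.tanh(w_x * x_t + w_h * h + b)  # state carried to the next step
        states.append(h)
    return states

seq = [1.0, 0.0, -1.0]
forward_order = rnn_forward(seq, w_x=0.8, w_h=0.5, b=0.0)
reversed_order = rnn_forward(seq[::-1], w_x=0.8, w_h=0.5, b=0.0)

# The last hidden state summarizes the whole sequence,
# so reversing the order of the inputs changes it.
print(forward_order[-1], reversed_order[-1])
```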

However, the unidirectional flow of feedforward networks offers advantages in some scenarios. Feedforward networks are typically faster to train and cheaper to run than recurrent networks. A feedforward network carries no hidden state over from previous inputs, so each input can be processed independently and in parallel with the others. A recurrent network, by contrast, must process the steps of a sequence one after another. This makes feedforward networks a common choice for applications where real-time processing or low-latency predictions are critical.
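Because a feedforward prediction depends only on its own input, a batch can be split across workers in any order without changing the results. The sketch below demonstrates this with a hypothetical single-layer model `predict` and Python's standard `concurrent.futures`. In CPython, threads will not speed up this pure-Python arithmetic because of the global interpreter lock, so the sketch only shows that the work *can* be divided. Numerical libraries typically exploit the same independence by stacking a batch into a matrix and computing all outputs with one matrix multiplication.

```python
from concurrent.futures import ThreadPoolExecutor

def predict(x):
    """Hypothetical single-layer feedforward model: ReLU(w . x + b).

    The result depends only on x, never on other inputs or earlier calls.
    """
    w, b = [0.5, -0.25, 1.0], 0.1
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return max(0.0, z)

batch = [[1.0, 2.0, 3.0], [0.0, 4.0, -1.0], [2.0, 0.0, 0.5]]

# Sequential loop vs. the same work spread over a pool of workers.
sequential = [predict(x) for x in batch]
with ThreadPoolExecutor(max_workers=3) as pool:
    parallel = list(pool.map(predict, batch))  # map preserves input order

print(sequential == parallel)  # True
```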

The mathematics of the forward function is straightforward. Each layer multiplies its input vector by a weight matrix, adds a bias vector, and applies an activation function, and the result becomes the input to the next layer. The weights are not changed during the forward pass. They are adjusted during training, typically by backpropagation and gradient descent, so that the network learns to recognize patterns, make predictions, or perform classifications. This simple, repeated computation is the framework that lets feedforward networks transform input data into meaningful outputs.
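The same computation can be written compactly. The notation here is introduced for illustration: x is the input vector, W^(l) and b^(l) are the weight matrix and bias vector of layer l, f^(l) is that layer's activation function, and L is the number of weight layers.

$$
\begin{aligned}
\mathbf{a}^{(0)} &= \mathbf{x} \\
\mathbf{z}^{(l)} &= W^{(l)} \mathbf{a}^{(l-1)} + \mathbf{b}^{(l)}, \qquad l = 1, \dots, L \\
\mathbf{a}^{(l)} &= f^{(l)}\big(\mathbf{z}^{(l)}\big) \\
\hat{\mathbf{y}} &= \mathbf{a}^{(L)}
\end{aligned}
$$

Each layer uses only the previous layer's output a^(l-1), never a later one. This is the unidirectional flow expressed as a formula.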

Feedforward neural networks, with their unidirectional flow and mathematical underpinnings, offer a robust and efficient approach to solving various AI problems. Their simplicity and ability to generalize from training data to new data make them a popular choice in many applications.

The Distinction at a Glance:

- Feedforward Neural Networks:
  - Maintain a strict unidirectional flow of data
  - Do not allow cycles or loops in the network
  - Typically faster training and prediction times
  - Suitable for tasks that don’t require feedback loops
- Recurrent Neural Networks:
  - Utilize cycles to process sequential or temporal data
  - Can store information in hidden states for future predictions
  - Typically slower training and prediction times than feedforward networks
  - Suitable for tasks with temporal dependencies

Understanding the distinction between feedforward and recurrent neural networks provides valuable insights into their strengths and limitations. Both network architectures have their unique applications, and choosing the right one depends on the specific requirements of the task at hand. By comprehending the unidirectional flow, absence of cycles, and the mathematical underpinnings of feedforward neural networks, professionals can make informed decisions when designing AI models for various real-world scenarios.

The Structure and Applications of Feedforward Neural Networks

Feedforward neural networks are a key component of artificial intelligence systems, renowned for their simplicity and effectiveness. These networks possess a straightforward yet powerful architecture, comprising three primary components: an input layer, hidden layers, and an output layer.

The input layer serves as the initial stage of the network, receiving data and transmitting it to the subsequent layers for processing. Each unit in this layer corresponds to a feature or attribute of the input data.

The information from the input layer is then passed through one or more hidden layers. These intermediary layers form the neural network’s processing core, performing complex computations to extract relevant patterns and features from the input data. Each hidden layer consists of multiple neurons, which collectively contribute to the network’s ability to recognize patterns and make accurate classifications.

The final stage of the feedforward neural network is the output layer. This layer aggregates the processed information from the hidden layers and generates the network’s predicted output or classification.
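For classification, the output layer's values are usually turned into a single predicted class by picking the largest one (argmax). The following is a minimal sketch with hypothetical output values and class names. `predict_label` is an illustrative helper.

```python
def predict_label(probabilities, labels):
    """Pick the class whose output neuron has the highest value (argmax)."""
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return labels[best]

# Hypothetical output-layer values for a three-class problem.
print(predict_label([0.1, 0.7, 0.2], ["cat", "dog", "bird"]))  # dog
```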

Feedforward neural networks are particularly adept at tasks like pattern recognition and classification. Their structured architecture and ability to learn complex relationships through activation functions and hidden layers enable them to excel in these areas.

These networks have found extensive application across various domains and real-world applications. One such application is computer vision, where feedforward networks are utilized for tasks such as object detection, image recognition, and facial recognition. These networks can learn to identify patterns and distinguish between different objects or individuals, demonstrating impressive accuracy and reliability.

Natural language processing is another domain where feedforward neural networks have made significant contributions. By leveraging their pattern recognition capabilities, these networks can process and analyze large volumes of text data, enabling tasks such as sentiment analysis, language translation, and text generation.

Furthermore, feedforward neural networks are utilized in recommender systems, which are widely employed in e-commerce and content platforms. These systems learn from user data and preferences to provide personalized recommendations that enhance the user experience and drive engagement.

Overall, feedforward neural networks are a versatile and valuable tool in the field of artificial intelligence. Their simple yet powerful architecture, combined with their proficiency in pattern recognition and classification tasks, makes them indispensable in a wide range of real-world applications.

| Application | Description |
|---|---|
| Computer Vision | Used for object detection, image recognition, and facial recognition |
| Natural Language Processing | Facilitates sentiment analysis, language translation, and text generation |
| Recommender Systems | Provides personalized recommendations based on user preferences |

Conclusion

Feedforward neural networks are one of the foundational architectures of AI and play a fundamental role in machine learning. Understanding the core mechanics of the forward function is valuable for professionals and enthusiasts alike. This function transforms input data into predictions or classifications. In feedforward networks it does so in a strictly one-directional flow, which is what distinguishes them from recurrent networks.

Mathematical operations and activation functions are key components of the forward function. By comprehending these workings, individuals can unlock new levels of understanding and explore the wide range of applications that feedforward neural networks offer. From pattern recognition to complex decision-making processes, these networks have proven their versatility and effectiveness.

As AI continues to advance, feedforward neural networks will remain a vital tool. Their ability to process and analyze data makes them indispensable in various industries, including computer vision, natural language processing, and recommender systems. By harnessing the power of feedforward networks, AI can continue to revolutionize the way we live and work.

FAQ

What is the significance of the forward function in feedforward neural networks?

The forward function is how a neural network generates predictions or classifications from input data. It transforms the input through weighted connections, biases, and activation functions. Once the network has been trained, this is what produces predictions on new, unseen data.

How does the forward function work in feedforward neural networks?

The forward function represents the pathway through which data flows from the input layer, through the hidden layers, to the output layer in a unidirectional flow. It involves feeding the input data into the network, transforming it through weighted connections, biases, and activation functions in each layer, and producing the desired output.

What is the difference between feedforward and recurrent neural networks?

Feedforward neural networks have a strict unidirectional flow of data, whereas recurrent neural networks contain loops that let earlier outputs influence later computations. This makes feedforward networks suitable for tasks that don’t require feedback loops, while recurrent networks suit sequential or time-dependent data.

What is the architecture of feedforward neural networks?

Feedforward neural networks consist of an input layer, one or more hidden layers, and an output layer. The input layer receives data, the hidden layers process the information, and the output layer produces the final result. These networks excel in tasks like pattern recognition and classification and have been extensively used in various real-world applications.

What are the applications of feedforward neural networks?

Feedforward neural networks have a wide range of applications, including computer vision, natural language processing, and recommender systems. Their ability to learn complex relationships through activation functions and hidden layers makes them versatile and robust tools in the field of AI.