Few-shot learning enables artificial intelligence (AI) models to adapt to new tasks using only a handful of labeled examples. This allows models to perform well in tasks where data availability is limited, making it particularly valuable in domains such as clinical natural language processing (NLP).

In our increasingly data-driven world, the ability to learn and adapt quickly is crucial. Traditional supervised learning methods require a large amount of labeled data for training, which is often impractical or hard to obtain. With few-shot learning, neural networks can achieve strong results using only a small set of labeled examples per class.

So how does few-shot learning work? The process involves organizing the dataset into a support set and a query set. The model is then trained to learn a generalized representation or update rule through meta-learning. Deep neural networks are utilized for feature extraction, allowing the model to quickly adapt its parameters based on the support set of each meta-task.

Once trained, the model can be transferred and deployed to new tasks or classes by providing a small support set specific to the target task. This flexibility and adaptability make few-shot learning neural networks ideal for learning new tasks efficiently, even with minimal data.

In NLP, there are various approaches to few-shot learning. Popular metric-based methods include Siamese Networks, Prototypical Networks, and Matching Networks, each with its own advantages and applications. Transfer learning, prompting, and latent text embeddings are also widely used, either on their own or in combination with these methods.

Few-shot learning differs from zero-shot learning in what the model receives for a new task. Zero-shot learning asks a model to recognize classes for which it has seen no labeled examples, while few-shot learning supplies a handful of labeled examples per class that the model can use to adapt to the task.

In short, few-shot learning lets neural networks adapt to new tasks and perform well even with minimal labeled data. That flexibility makes it a strong fit for any setting where labeled data is scarce.

What is Few-shot Learning?

Few-shot learning is a specialized approach within supervised learning that offers a solution for training models with a limited number of labeled examples. Unlike traditional supervised learning that heavily relies on abundant labeled data, few-shot learning directly addresses the challenge of limited data availability. By leveraging a support set to create training episodes, models can achieve remarkable performance outcomes even when working with constrained data availability. This methodology proves particularly valuable in domains like clinical NLP, where acquiring extensive labeled datasets can be a challenging task.

The Need for Few-shot Learning

In supervised learning, models are trained using large amounts of labeled data to accurately classify and predict outcomes. However, in real-world scenarios, there are instances where obtaining a significant volume of labeled data is not feasible. For example, in clinical NLP, where privacy concerns and time constraints limit access to labeled datasets, few-shot learning becomes an effective alternative. By enabling models to learn from a small number of labeled examples, few-shot learning provides a practical solution for addressing the limited data availability challenge in various fields.

“Few-shot learning allows models to excel in tasks where data availability is restricted, such as in clinical natural language processing (NLP).” – Dr. Julia Robertson, Clinical NLP Expert

Achieving Performance with Limited Data

With few-shot learning, models are trained using a support set that consists of a few labeled examples per class. During the training process, these models learn to generalize from the support set and adapt to new tasks or classes efficiently. This adaptation is achieved through meta-learning techniques, which use deep neural networks for feature extraction and parameter adaptation. Training models in this manner enables them to perform well even with limited labeled data.

In the context of clinical NLP, few-shot learning techniques allow models to understand and interpret medical texts with a minimal number of labeled examples. This significantly reduces the manual effort required for extensive data labeling, making it an invaluable tool for healthcare professionals and researchers.

Benefits of Few-shot Learning in Clinical NLP

The applications of few-shot learning in clinical NLP are manifold:

- Enhanced efficiency: Few-shot learning enables healthcare professionals to quickly adapt models to new medical tasks with limited labeled data, reducing the time and resources required for manual annotation.

- Improved accuracy: By leveraging a small support set, few-shot learning allows models to capture the nuances of clinical language and make accurate predictions, even without an extensive dataset.

- Domain-specific understanding: Clinical NLP deals with specialized medical terminology and unique language patterns. Few-shot learning allows models to acquire domain-specific knowledge and contextual understanding to provide meaningful insights.

How does Few-shot Learning work?

Few-shot learning enables models to quickly adapt and generalize to new tasks or classes, even with limited labeled data. The process involves several key steps: dataset preparation, model training, inference and evaluation, and transfer and generalization.

Dataset Preparation

Dataset preparation is crucial in few-shot learning. The dataset is organized into two main subsets: the support set and the query set. The support set consists of a small number of labeled examples per class, which serves as the foundation for model training. The query set contains further examples from the same classes. Their labels are withheld from the model while it makes predictions and are then used to score those predictions.
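The split into support and query sets can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function name `sample_episode`, its parameters (`n_way` classes, `k_shot` support examples, `n_query` query examples per class), and the toy clinical-style data are all assumptions made for the example.

```python
import random

def sample_episode(examples_by_class, n_way, k_shot, n_query, rng):
    """Sample one N-way K-shot episode as (support, query) lists of (text, label)."""
    classes = rng.sample(sorted(examples_by_class), n_way)
    support, query = [], []
    for label in classes:
        # Draw support and query examples together so the two sets never overlap.
        picked = rng.sample(examples_by_class[label], k_shot + n_query)
        support += [(text, label) for text in picked[:k_shot]]
        query += [(text, label) for text in picked[k_shot:]]
    return support, query

# Made-up toy data (hypothetical): class name -> example texts.
toy_data = {
    label: [f"{label} note {i}" for i in range(10)]
    for label in ("diagnosis", "medication", "procedure")
}
support, query = sample_episode(
    toy_data, n_way=2, k_shot=3, n_query=2, rng=random.Random(0)
)
print(len(support), len(query))  # 6 4
```

Each call produces one small classification task. Sampling many such episodes, each with a different subset of classes, is what gives meta-training its variety of tasks.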

Model Training

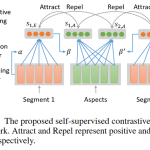

During model training, deep neural networks are utilized for feature extraction. The model learns a generalized representation or update rule through a process called meta-learning. In meta-training, the model adapts its parameters based on the support set of each meta-task, and its predictions on that task’s query set provide the training signal. This enables it to quickly learn and generalize from a limited amount of labeled data and improves its ability to perform well on new, unseen tasks or classes.
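The text does not name a specific meta-learning algorithm, so the following sketch assumes one common choice: a first-order approximation in the style of MAML. To keep it readable, it uses a one-parameter model `y_hat = w * x` instead of a deep network. The function names and learning rates are illustrative assumptions.

```python
def grad_mse(w, batch):
    """Gradient of mean squared error for the one-parameter model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)

def adapt(w, support, inner_lr):
    """Inner loop: one gradient step on the support set gives task-specific weights."""
    return w - inner_lr * grad_mse(w, support)

def meta_step(w, episodes, inner_lr, outer_lr):
    """Outer loop (first-order): move the shared initialization using
    query-set gradients evaluated at the adapted weights."""
    meta_grad = 0.0
    for support, query in episodes:
        adapted = adapt(w, support, inner_lr)
        meta_grad += grad_mse(adapted, query)
    return w - outer_lr * meta_grad / len(episodes)

# One toy episode: support and query both follow y = 2 * x.
episodes = [([(1.0, 2.0)], [(1.0, 2.0)])]
print(meta_step(0.0, episodes, inner_lr=0.25, outer_lr=0.25))  # 0.5
```

The design point is the two nested updates: the support set drives a fast, per-task adaptation, while the query set decides how the shared starting point should change so that future adaptations work better.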

Inference and Evaluation

After training, the few-shot learning model is evaluated on the query set to assess its performance and generalization capabilities. The model makes predictions on the query examples without seeing their labels, and those predictions are then compared against the held-out labels using metrics such as accuracy or F1 score. This evaluation helps determine the model’s effectiveness in adapting and generalizing to new tasks or classes.
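As a rough illustration of the metrics mentioned above, the sketch below computes accuracy and macro-averaged F1 from held-out query labels and model predictions. The macro average is an assumption here, since the text does not say which F1 variant is meant, and the label lists are made up.

```python
def accuracy(y_true, y_pred):
    """Fraction of query examples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    scores = []
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)

y_true = ["a", "a", "b", "b"]  # held-out query labels (toy values)
y_pred = ["a", "b", "b", "b"]  # model predictions (toy values)
print(accuracy(y_true, y_pred))            # 0.75
print(round(macro_f1(y_true, y_pred), 3))  # 0.733
```

In practice these scores are usually averaged over many sampled episodes, because a single small query set gives a noisy estimate.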

Transfer and Generalization

Once the few-shot learning model is trained and evaluated, it can be transferred and deployed to new tasks or classes. By providing a small support set specific to the target task, the model can quickly adapt and make accurate predictions. This transferability and generalization ability are key strengths of few-shot learning, as they reduce the need for large amounts of labeled data for each individual task or class.

Few-shot Learning Approaches in NLP

In the field of Natural Language Processing (NLP), there are several approaches to few-shot learning that have gained popularity. These approaches enable NLP models to perform well in tasks with limited labeled data, making them invaluable in various applications.

Siamese Networks

Siamese Networks are one such approach used in few-shot learning. They pass two inputs through the same encoder with shared weights and compare the resulting embeddings to estimate how likely the two examples are to belong to the same class. Because they learn a similarity metric between pairs of inputs rather than a fixed set of classes, Siamese Networks can make accurate predictions even with minimal labeled data in NLP tasks.
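A minimal sketch of the pairwise idea follows. It assumes a single shared linear layer as the encoder and a contrastive loss as the training objective. Both are common choices but are not specified in the text, and the names `encode`, `contrastive_loss`, and `margin` are illustrative.

```python
import math

def encode(x, weights):
    """Shared encoder: the same weights embed both inputs (one linear layer here)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def euclidean(a, b):
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))

def contrastive_loss(x1, x2, same_class, weights, margin=1.0):
    """Pull same-class pairs together; push different-class pairs at least `margin` apart."""
    distance = euclidean(encode(x1, weights), encode(x2, weights))
    if same_class:
        return distance ** 2
    return max(0.0, margin - distance) ** 2

identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for learned encoder weights
print(contrastive_loss([0.0, 0.0], [3.0, 4.0], True, identity))              # 25.0
print(contrastive_loss([0.0, 0.0], [3.0, 4.0], False, identity, margin=6.0)) # 1.0
```

Training would adjust `weights` to reduce this loss over many pairs. At inference time, a small distance between a query and a support example suggests that they share a class.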

Prototypical Networks

Prototypical Networks represent each class by a prototype, computed by averaging the embeddings of that class’s support examples. A query instance is then classified by measuring its distance to each prototype and choosing the nearest one, enabling efficient classification in NLP tasks.
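The prototype computation is simple enough to show directly. The sketch below assumes the embeddings have already been produced by some encoder (the two-dimensional vectors are made up) and uses squared Euclidean distance, a common choice for this method.

```python
from collections import defaultdict

def class_prototypes(support):
    """Average the support embeddings of each class into one prototype vector."""
    grouped = defaultdict(list)
    for embedding, label in support:
        grouped[label].append(embedding)
    return {
        label: [sum(dim) / len(vectors) for dim in zip(*vectors)]
        for label, vectors in grouped.items()
    }

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def classify(query_embedding, prototypes):
    """Assign the query to the class whose prototype is nearest."""
    return min(
        prototypes,
        key=lambda label: squared_distance(query_embedding, prototypes[label]),
    )

# Toy embeddings (hypothetical encoder outputs) with their class labels.
support = [
    ([0.0, 0.0], "neg"), ([0.2, 0.0], "neg"),
    ([1.0, 1.0], "pos"), ([0.8, 1.0], "pos"),
]
prototypes = class_prototypes(support)
print(classify([0.7, 0.9], prototypes))  # pos
print(classify([0.0, 0.1], prototypes))  # neg
```

Adding a new class at deployment time only requires embedding its few support examples and averaging them. No retraining of the classifier is needed.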

Matching Networks

Matching Networks are another approach that has been successful in few-shot learning for NLP. They predict the label of each query example as a weighted sum of the support set labels, where the weights come from an attention mechanism over the similarity between the query and each support example. This approach allows models to leverage the knowledge acquired from the support set and make accurate predictions with limited labeled data.
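The weighted sum over support labels can be sketched as follows. The example assumes precomputed embeddings and uses a softmax over cosine similarities as the weighting. The embedding values and the function name `matching_predict` are made up for illustration.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def matching_predict(query_embedding, support):
    """Return label probabilities as an attention-weighted sum of support labels."""
    sims = [cosine(query_embedding, emb) for emb, _ in support]
    peak = max(sims)  # subtract the max for a numerically stable softmax
    exps = [math.exp(s - peak) for s in sims]
    total = sum(exps)
    probs = {}
    for weight, (_, label) in zip(exps, support):
        probs[label] = probs.get(label, 0.0) + weight / total
    return probs

# Toy embeddings (hypothetical encoder outputs) with their class labels.
support = [([1.0, 0.0], "a"), ([0.9, 0.1], "a"), ([0.0, 1.0], "b")]
probs = matching_predict([1.0, 0.1], support)
print(max(probs, key=probs.get))  # a
```

Unlike the prototype approach, every support example contributes individually here, so one very similar example can dominate the prediction.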

Transfer Learning

Transfer Learning is an effective technique in few-shot learning for NLP. It involves pretraining a model on a large dataset (in NLP, often unlabeled text) and then fine-tuning it with a smaller labeled dataset for a specific task. By transferring the knowledge learned from the pretraining phase, models can quickly adapt to new tasks with minimal labeled data.
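One lightweight variant of this idea keeps the pretrained encoder frozen and trains only a small classification head on its output features. The sketch below shows that variant, not full fine-tuning of all weights. The feature vectors stand in for the outputs of a hypothetical pretrained encoder, and the hyperparameters are arbitrary.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(features, labels, epochs=200, lr=0.5):
    """Fit a logistic-regression head on frozen features with batch gradient descent."""
    dim, n = len(features[0]), len(features)
    weights, bias = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, labels):
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            grad_w = [g + error * xi for g, xi in zip(grad_w, x)]
            grad_b += error
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias

def predict(x, weights, bias):
    return int(sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) >= 0.5)

# Made-up features, standing in for a frozen pretrained encoder's outputs.
features = [[1.0, 0.1], [0.9, 0.2], [0.1, 1.0], [0.2, 0.8]]
labels = [1, 1, 0, 0]
weights, bias = train_head(features, labels)
print(predict([0.8, 0.1], weights, bias), predict([0.1, 0.9], weights, bias))  # 1 0
```

Because only the head's few parameters are trained, a handful of labeled examples can be enough, provided the pretrained features already separate the classes reasonably well.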

Prompting

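Prompting is a technique where models are provided with explicit instructions or prompts to guide their responses in few-shot learning for NLP. In the few-shot setting, the prompt typically includes a handful of labeled examples alongside the instruction, so a pretrained language model can infer the task without any parameter updates. Well-designed prompts help models generalize to unseen instances and improve their performance even with limited labeled data.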
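A few-shot prompt is ultimately just a carefully formatted string. The sketch below shows one plausible layout. The `Text:` / `Label:` template, the function name, and the example sentences are assumptions, and the call to an actual language model is deliberately left out.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Format an instruction, labeled demonstrations, and the query into one prompt."""
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Text: {text}", f"Label: {label}", ""]
    # Leave the final label empty so the model completes it.
    lines += [f"Text: {query}", "Label:"]
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Classify each sentence as 'symptom' or 'medication'.",
    [
        ("Patient reports persistent headache.", "symptom"),
        ("Prescribed ibuprofen 400 mg.", "medication"),
    ],
    "Complains of shortness of breath.",
)
print(prompt)
```

The choice and order of demonstrations can noticeably affect results, so it is worth evaluating a few prompt variants on held-out examples.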

Latent Text Embeddings

Latent text embeddings involve representing textual data in a continuous vector space. These embeddings capture the semantic meaning of words and sentences, allowing models to understand contextual information even with limited labeled examples. Latent text embeddings have been widely used in few-shot learning for NLP to enhance the representation and understanding of text data.

By utilizing these various approaches in few-shot learning, NLP models can overcome the challenges posed by limited labeled data and achieve impressive performance even in tasks with minimal examples.

Few-shot Learning in NLP vs Zero-shot Learning

Few-shot learning in NLP and zero-shot learning are two innovative methodologies in machine learning that offer unique advantages.

Few-shot learning focuses on making accurate predictions with limited data samples, providing the capability to learn new tasks efficiently. This approach empowers models to adapt and generalize to new domains, showcasing flexibility and contextual understanding.

On the other hand, zero-shot learning enables models to recognize classes that have never been encountered during training. Because no labeled examples of those classes are available, the model typically relies on knowledge transfer through auxiliary information, such as class names, descriptions, or attributes, to identify new concepts.

Both few-shot learning and zero-shot learning contribute to the sophistication of AI models by expanding their capabilities beyond traditional supervised learning approaches. With few-shot learning, models excel in tasks with limited data availability, while zero-shot learning broadens the scope of recognition and understanding.

Narrow AI vs General AI

Narrow AI and General AI are two distinct approaches within the field of artificial intelligence. While both aim to mimic human intelligence, they differ in terms of their scope and capabilities.

Narrow AI, also known as specialized AI, is designed to excel at specific tasks or domains. These AI systems possess a high degree of expertise and are focused on performing well-defined tasks with exceptional precision and efficiency. They are trained to excel in a particular area, such as image recognition, speech synthesis, or natural language processing.

Voice assistants like Amazon’s Alexa or Apple’s Siri are examples of Narrow AI. They are optimized to understand and respond to specific voice commands, providing users with relevant information or performing specific tasks.

General AI takes a more holistic approach, striving to replicate human-level intelligence across a wide range of tasks and domains. It aims to possess a comprehensive understanding of the world and exhibit the ability to adapt, learn, and reason like a human being.

General AI goes beyond specialized functions and seeks to tackle complex and open-ended problems. It aims to possess a deep contextual understanding, enabling it to engage in natural conversations, learn from experiences, and exhibit human-like creativity and problem-solving abilities.

While Narrow AI is highly specialized and excels in performing a specific task, General AI strives to achieve human-level intelligence and exhibit a broader range of capabilities.

It is important to note that the development of General AI is still a subject of ongoing research and remains a significant challenge. While significant progress has been made in specialized areas of AI, achieving human-level intelligence across a wide range of tasks and domains is a complex endeavor.

In conclusion, Narrow AI and General AI represent different approaches to artificial intelligence. Narrow AI focuses on specialized tasks, leveraging its expertise to excel in specific domains, while General AI aims to replicate human-level intelligence for a broader range of tasks.

Data Labeling and Tools

Data labeling, the process of annotating data samples with their corresponding labels, plays a crucial role in machine learning. This labeled data is essential for training and evaluating machine learning models, allowing them to learn patterns and make accurate predictions. However, the labeling process can be time-consuming and labor-intensive.

Fortunately, there are various data labeling tools available to streamline this process. These labeling tools offer a range of functionalities, including annotation, collaboration, and quality control. Annotation features enable data annotators to mark and label relevant information on various types of data, such as images, text, or audio.

Collaboration features allow multiple annotators to work together on the same dataset, ensuring consistency and accuracy in the labeling process. Quality control features help assess and improve the quality of labeled data, allowing for reliable training of machine learning models. These tools are designed to improve efficiency, reduce human error, and ensure the production of high-quality training data.

FAQ

What are Few-shot Learning Neural Networks?

Few-shot Learning Neural Networks are a type of artificial intelligence (AI) model that can quickly adapt and learn new tasks with minimal data. They excel in situations where data availability is restricted, such as in clinical natural language processing (NLP).

What is Few-shot Learning?

Few-shot Learning is a specialized approach within supervised learning that trains models with a minimal number of labeled examples. It addresses the challenge of limited data availability and leverages a support set and a query set to enable models to excel even with constrained data.

How does Few-shot Learning work?

Few-shot Learning works by organizing the dataset into a support set and a query set. The model is trained through meta-learning, which involves adapting its parameters based on the support set of each meta-task. After training, the model’s generalization performance is evaluated on the query set. It can then be transferred and deployed to new tasks or classes by providing a small support set specific to the target task.

What are some Few-shot Learning approaches in NLP?

There are various approaches to Few-shot Learning in NLP. Some popular methods include Siamese Networks, Prototypical Networks, Matching Networks, Transfer Learning, Prompting, and the use of Latent text embeddings.

How does Few-shot Learning in NLP differ from Zero-shot Learning?

Few-shot Learning in NLP focuses on making accurate predictions with limited data samples and allows models to adapt and learn new tasks efficiently. Zero-shot Learning, on the other hand, provides no labeled examples of the new classes at all. The model must recognize them through knowledge transfer, typically using auxiliary information such as class names or descriptions.

What is the difference between Narrow AI and General AI?

Narrow AI refers to specialized artificial intelligence systems designed for specific tasks or domains, while General AI aims to replicate human-level intelligence and possess a more comprehensive understanding and capability to perform a wide range of tasks.

What is data labeling and what tools are available?

Data labeling is the process of annotating data samples with their corresponding labels. It is an essential aspect of machine learning. There are various data labeling tools available that provide functionalities such as annotation, collaboration, and quality control to streamline the labeling process and ensure accurate and reliable training data for machine learning models.