Hypernetworks, also known as hypernets, are an increasingly influential technique in deep learning. A hypernetwork is a neural network that generates the weights of another neural network, called the target network. Producing weights this way, instead of learning each of them directly, can make the target network more flexible, adaptable, and parameter-efficient.

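In a traditional neural network, weights are learned directly and stay fixed once training ends. A hypernetwork instead takes an input that conditions the weights, such as information about the structure of the weights and the architecture of the target network, a task embedding, or the data itself. From this input it generates the weights for each layer of the target network, so those weights can change whenever the hypernetwork's input changes.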

The applications of hypernetworks in deep learning are broad. They have been applied to continual learning, transfer learning, weight pruning, uncertainty quantification, and reinforcement learning. They have also been used in ensemble learning, multitasking, neural architecture search, Bayesian neural networks, generative models, hyperparameter optimization, and more.

While hypernetworks have shown promising results, challenges and open questions remain. Their design and training must address initialization, stability, and computational complexity. Further advances in hypernetworks could also improve the theoretical understanding and uncertainty quantification of deep neural networks.

In short, hypernetworks are a powerful deep learning technique. Because they generate the weights of target networks, they can offer greater flexibility, adaptability, and faster training. They have diverse applications and open new possibilities for designing and training neural networks.

What are Hypernetworks and How Do They Work?

A hypernetwork is a neural network whose job is to produce the weights of a target network. In a standard neural network, every weight is a trainable parameter that stays fixed once training ends. A hypernetwork instead outputs the weights or parameters for each layer of the target network, which gives the overall model more flexibility and adaptability.

Here’s how hypernetworks work:

- The hypernetwork receives an input that conditions the weights it should produce, for example information about the structure of the weights and the architecture of the target network, a task embedding, or the input data itself.

- Using these inputs, the hypernetwork generates the weights for each layer of the target network.

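- The target network uses the generated weights in its computations during training and inference. During training, the loss gradient flows through the generated weights back into the hypernetwork's parameters, which are the parameters that are actually learned.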
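The three steps above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a reference implementation. The hypernetwork is a single linear map, and `LinearHypernetwork` and its `generate` method are hypothetical names. The embedding `z` is an arbitrary vector standing in for whatever conditions the weights, and the target network is one ReLU layer.

```python
import random

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

class LinearHypernetwork:
    """Hypothetical minimal hypernetwork: one linear map from an embedding
    to the flattened weights and biases of a single target layer."""

    def __init__(self, embed_dim, in_dim, out_dim, seed=0):
        rng = random.Random(seed)
        self.in_dim, self.out_dim = in_dim, out_dim
        n_out = out_dim * in_dim + out_dim  # target weights + target biases
        # H holds the only trainable parameters in the whole system.
        self.H = [[rng.gauss(0.0, 0.1) for _ in range(embed_dim)]
                  for _ in range(n_out)]

    def generate(self, z):
        """Step 1-2: map the conditioning input z to (W, b) for the target layer."""
        flat = matvec(self.H, z)
        n_w = self.out_dim * self.in_dim
        W = [flat[r * self.in_dim:(r + 1) * self.in_dim] for r in range(self.out_dim)]
        b = flat[n_w:]
        return W, b

def target_forward(x, W, b):
    """Step 3: the target layer (linear + ReLU) runs on the generated weights."""
    return [max(0.0, s + bi) for s, bi in zip(matvec(W, x), b)]

hyper = LinearHypernetwork(embed_dim=4, in_dim=3, out_dim=2)
z = [0.5, -1.0, 0.25, 2.0]  # hypothetical conditioning embedding
W, b = hyper.generate(z)
y = target_forward([1.0, 2.0, 3.0], W, b)
print(len(W), len(W[0]), len(b), y)
```

In a real training loop, the loss computed on `y` would be differentiated with respect to `hyper.H`, for example with an autodiff framework. The target layer owns no trainable parameters of its own.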

This approach to weight generation sets hypernetworks apart from traditional neural networks. Because the weights are generated from the hypernetwork's input, hypernetworks enable dynamic architectures, soft weight sharing, and data-adaptive deep neural networks (DNNs). In the data-adaptive case, the target network's weights are adjusted to each input sample, which can lead to more efficient and effective models.

Hypernetworks can also support uncertainty quantification and parameter efficiency. If the hypernetwork takes random noise as part of its input, each noise sample yields a different set of target weights. The spread of the resulting predictions then indicates how confident the model is. In addition, a small hypernetwork can generate the weights of a much larger target network, which reduces the total number of trainable parameters.
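The sketch below covers the noise-conditioned case. It assumes a linear hypernetwork that maps a Gaussian noise vector to the weights of a one-unit linear model. `generate_weights`, `mu`, and `H` are hypothetical, and in practice `mu` and `H` would be trained. Each noise sample yields a different weight set, and the standard deviation of the resulting predictions serves as an uncertainty estimate.

```python
import random
import statistics

def generate_weights(H, mu, noise):
    """Hypothetical noise-conditioned hypernetwork: w = mu + H @ noise."""
    return [m + sum(h * n for h, n in zip(row, noise)) for m, row in zip(mu, H)]

def predict(weights, x):
    """Target model: a single linear unit, y = w . x."""
    return sum(w * xi for w, xi in zip(weights, x))

def predictive_mean_std(H, mu, x, noise_dim, n_samples=200, seed=0):
    """Draw many weight sets from the hypernetwork and summarize how much
    the target model's predictions disagree with each other."""
    rng = random.Random(seed)
    preds = []
    for _ in range(n_samples):
        noise = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
        preds.append(predict(generate_weights(H, mu, noise), x))
    return statistics.mean(preds), statistics.stdev(preds)

# Hypothetical parameters: 3 target weights, 2-dimensional noise.
H = [[0.3, 0.0], [0.0, 0.3], [0.1, 0.1]]
mu = [1.0, -0.5, 2.0]

mean, std = predictive_mean_std(H, mu, [1.0, 2.0, 0.5], noise_dim=2)
mean0, std0 = predictive_mean_std(H, mu, [0.0, 0.0, 0.0], noise_dim=2)
```

For an all-zero input, every sampled weight set gives the same prediction, so the estimated uncertainty is exactly zero. Inputs that align with the noisy weight directions get a larger spread.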

“Hypernetworks revolutionize the weight generation process in neural networks, introducing flexibility, adaptability, and efficiency.”

Benefits of Hypernetworks in Neural Networks

Hypernetworks offer several benefits in the field of neural networks:

- Flexibility: Hypernetworks allow for the creation of dynamic architectures, enabling neural networks to adapt and change their structure during training or inference. This flexibility is particularly valuable in tasks where the input distribution changes over time or when handling complex and varying data.

- Soft Weight Sharing: A single hypernetwork can generate weights for multiple related tasks, so the tasks share the hypernetwork's parameters rather than identical weights. This approach improves parameter efficiency and enables knowledge transfer between tasks.

- Data-Adaptive DNNs: Hypernetworks generate weights customized to the input data, resulting in data-adaptive DNNs. This adaptability improves the model’s ability to capture and represent complex patterns in the data.

- Uncertainty Quantification: When the hypernetwork is stochastic, for example conditioned on random noise, sampling several weight sets provides a means to quantify the uncertainty of the model's predictions. This uncertainty estimation is crucial in applications where decision-making relies on the confidence of the model's outputs.

- Parameter Efficiency: A hypernetwork can have far fewer trainable parameters than the target network it generates, which compresses the model. The saving is in parameters rather than computation, because the full set of target weights still has to be generated before the target network runs.
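The following sketch makes the parameter-efficiency point concrete. It counts the parameters of a fully connected target network with layers 784-256-256-10, then counts the parameters of a hypothetical chunk-wise hypernetwork that generates those weights 1,000 at a time. The chunk size, embedding size, and hidden width are illustrative choices, not recommendations.

```python
import math

def mlp_param_count(layer_sizes):
    """Weights plus biases of a fully connected network with these layer sizes."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

def chunked_hypernet_param_count(n_target, chunk_size, embed_dim, hidden):
    """Trainable parameters of a hypothetical chunk-wise hypernetwork:
    one learned embedding per chunk plus a shared two-layer MLP
    (embed_dim -> hidden -> chunk_size) that turns each embedding into a chunk."""
    n_chunks = math.ceil(n_target / chunk_size)
    embeddings = n_chunks * embed_dim
    mlp = (embed_dim * hidden + hidden) + (hidden * chunk_size + chunk_size)
    return embeddings + mlp

target = mlp_param_count([784, 256, 256, 10])
hyper = chunked_hypernet_param_count(target, chunk_size=1000, embed_dim=8, hidden=64)
print(target, hyper)  # 269322 67736
```

Here the hypernetwork has roughly a quarter as many trainable parameters as the target. The generated vector (270 chunks of 1,000, or 270,000 values) is slightly longer than needed, and the surplus is discarded. The target still runs its full computation with all 269,322 weights, so the saving is in parameters, not in compute.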

Example Use Case: Weight Generation in Image Classification

Let’s consider an example of applying hypernetworks in image classification. In this scenario, a hypernetwork can generate the weights for a convolutional neural network (CNN) that performs the image classification task.

| Component | Traditional CNN | Hypernetwork-based CNN |
|---|---|---|
| Weight Generation | Learned directly; fixed after training | Generated by the hypernetwork |
| Flexibility | Static architecture | Can support dynamic architectures |
| Weight Sharing | Each layer has independent weights | Soft sharing through common hypernetwork parameters |
| Parameter Efficiency | Every weight is a trainable parameter | Trainable parameters can be fewer than the weights generated |

In this example, the hypernetwork generates the CNN's weights, and it can also support dynamic architectures in which the network's structure changes. Because related layers or tasks draw their weights from the same hypernetwork, their weights are softly shared. This can reduce the number of trainable parameters the classification task requires.

Overall, hypernetworks have the potential to enhance neural networks by changing how their weights are produced. They enable dynamic architectures, soft weight sharing, and data-adaptive DNNs, which can improve performance and flexibility across deep learning applications.
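To illustrate the table above, here is a sketch in the spirit of static hypernetworks for CNNs. One shared linear hypernetwork maps a small per-layer embedding to a 3x3 convolution kernel. The sketch assumes single-channel layers and untrained, randomly initialized parameters. `make_hypernet` and `kernel_from_embedding` are hypothetical names. A real model would generate multi-channel kernels and train `H` and the embeddings jointly.

```python
import random

KERNEL = 3  # size of the square kernels the hypernetwork emits

def make_hypernet(embed_dim, seed=0):
    """Hypothetical static hypernetwork: a linear map (9 x embed_dim) from a
    layer embedding to a flattened 3x3 kernel, shared by every layer."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.2) for _ in range(embed_dim)]
            for _ in range(KERNEL * KERNEL)]

def kernel_from_embedding(H, z):
    """Generate one layer's 3x3 kernel from its embedding z."""
    flat = [sum(h * zi for h, zi in zip(row, z)) for row in H]
    return [flat[r * KERNEL:(r + 1) * KERNEL] for r in range(KERNEL)]

def conv2d_valid(img, k):
    """Single-channel 'valid' 2-D cross-correlation, as computed by CNN layers."""
    n = len(k)
    out_h, out_w = len(img) - n + 1, len(img[0]) - n + 1
    return [[sum(img[i + a][j + b] * k[a][b] for a in range(n) for b in range(n))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(m):
    return [[max(0.0, v) for v in row] for row in m]

H = make_hypernet(embed_dim=4)
# One small learned embedding per conv layer (values here are arbitrary).
layer_embeddings = [[1.0, 0.0, 0.5, -0.5], [0.0, 1.0, -0.5, 0.5]]
x = [[float((i * 7 + j) % 5) for j in range(8)] for i in range(8)]  # toy 8x8 image
for z in layer_embeddings:
    x = relu(conv2d_valid(x, kernel_from_embedding(H, z)))
print(len(x), len(x[0]))  # 4 4  (8x8 -> 6x6 -> 4x4)
```

Adding another layer only adds one 4-value embedding. The 36 hypernetwork parameters are shared by all layers.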

Applications of Hypernetworks in Deep Learning

Hypernetworks have shown promising results in a wide range of deep learning problem settings. They have been successfully applied in various applications, including:

- Continual Learning: Hypernetworks help models learn continually from a stream of data while reducing catastrophic forgetting of previously learned information. This is especially useful in scenarios where new data arrives over time.

- Causal Inference: Hypernetworks facilitate inferring causal relationships from observational data, helping to identify hidden causal factors that contribute to specific outcomes.

- Transfer Learning: Hypernetworks allow for the transfer of knowledge learned from one task to another, reducing the need for extensive retraining and improving the performance of the target network in new domains.

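- Weight Pruning: Hypernetworks can generate weights for many candidate pruned architectures, so pruned variants can be evaluated without training each one from scratch. This aids weight pruning techniques, reducing model size and improving efficiency.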

- Uncertainty Quantification: Hypernetworks can estimate uncertainty in predictions, providing valuable insights about the confidence of the model in its outputs.
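A common way for hypernetworks to reduce forgetting uses a task-conditioned setup. Each task has an embedding. While the model trains on a new task, a regularizer keeps the weights generated for earlier task embeddings close to what a frozen snapshot of the hypernetwork produced. The sketch below shows a simplified version of that output regularizer with a linear hypernetwork. The function names and `beta` are hypothetical.

```python
def hypernet(H, e):
    """Hypothetical linear hypernetwork: task embedding -> flattened target weights."""
    return [sum(h * x for h, x in zip(row, e)) for row in H]

def output_regularizer(H_current, H_snapshot, old_task_embeddings, beta):
    """Penalize drift of the weights generated for earlier tasks.

    H_snapshot is a frozen copy of the hypernetwork taken before training on
    the new task. The targets are recomputed from it, so no data from earlier
    tasks is needed."""
    if not old_task_embeddings:
        return 0.0
    total = 0.0
    for e in old_task_embeddings:
        old = hypernet(H_snapshot, e)
        new = hypernet(H_current, e)
        total += sum((a - b) ** 2 for a, b in zip(old, new))
    return beta * total / len(old_task_embeddings)

H_snapshot = [[1.0, 0.0], [0.0, 1.0]]
H_current = [[1.0, 0.1], [0.0, 1.0]]   # drifted while training the new task
old_tasks = [[1.0, 0.0], [0.0, 1.0]]   # embeddings of two earlier tasks
reg = output_regularizer(H_current, H_snapshot, old_tasks, beta=1.0)
```

The full objective for the new task would be its task loss plus this term. Only the old task embeddings and a snapshot of the hypernetwork need to be kept. No data from earlier tasks is replayed.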

In addition to the above applications, hypernetworks have been successfully employed in zero-shot learning, natural language processing, and reinforcement learning. Their versatility extends to solving problems in ensemble learning, multitasking, neural architecture search, Bayesian neural networks, generative models, hyperparameter optimization, information sharing, and adversarial defense.

Across these settings, hypernetworks address challenges specific to each problem, often improving performance or flexibility.

Summary of Hypernetwork Applications

| Application | Description |
|---|---|
| Continual Learning | Learn from a stream of data while limiting forgetting |
| Causal Inference | Infer causal relationships from observational data |
| Transfer Learning | Transfer knowledge from one task to another |
| Weight Pruning | Generate weights for pruned architectures to reduce model size |
| Uncertainty Quantification | Estimate uncertainty in predictions |
| Zero-shot Learning | Recognize unseen classes without labeled training examples |
| Natural Language Processing | Enhance language understanding and generation tasks |
| Reinforcement Learning | Optimize agent behavior in dynamic environments |
| Ensemble Learning | Combine predictions from multiple models |
| Neural Architecture Search | Automate the search for optimal network architectures |
| Bayesian Neural Networks | Incorporate Bayesian inference into neural networks |
| Generative Models | Create new samples from learned distributions |
| Hyperparameter Optimization | Find hyperparameters that maximize model performance |
| Information Sharing | Share knowledge across related tasks or models |
| Adversarial Defense | Protect models against adversarial attacks |

The applications of hypernetworks span many domains and help deep learning models tackle complex problems with improved performance and flexibility.

Design Criteria and Categorization of Hypernetworks

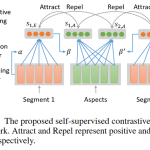

Hypernetworks can be categorized by five design criteria: inputs, outputs, variability of inputs, variability of outputs, and the architecture of the hypernetwork. Together, these criteria determine how a hypernet generates weights for the target network.

The first criterion is the input. A hypernetwork can be task-conditioned (its input is a task embedding), data-conditioned (its input is the data sample or features of it), or noise-conditioned (its input is random noise). Embeddings can also encode the structure of the weights and the architecture of the target network, such as which layer or chunk is being generated. Conditioning on this information lets the hypernetwork tailor the weights to the target network's requirements and adapt to different tasks and problem domains.

The second criterion is the output, that is, how the hypernetwork emits the target weights. It can produce all weights at once, one layer at a time, one component (such as a neuron or channel) at a time, or in fixed-size chunks that are concatenated. The design of the output layer also controls the scale and distribution of the generated weights, which must suit the target network.

The variability of the inputs and outputs determines how much the generated weights can change. With static inputs, such as a fixed learned embedding per task, the hypernetwork produces the same weights for that task every time. With dynamic inputs, such as the data sample, the weights change from one input to the next. Likewise, static outputs have a fixed size for a fixed target architecture, while dynamic outputs allow the target architecture itself to vary. This variability allows for greater adaptability and customization of the generated weights, enhancing the performance of the target network in different scenarios.
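Chunk-wise output can be sketched as follows. The sketch assumes a linear hypernetwork `H` that maps each learned chunk embedding to `chunk_size` weights, and all names are hypothetical. The chunks are concatenated, trimmed to the target's size, and reshaped.

```python
import math
import random

def chunkwise_generate(H, chunk_embeddings, n_target):
    """Hypothetical chunk-wise hypernetwork: the same linear map H turns each
    chunk embedding into chunk_size weights. Chunks are concatenated and the
    surplus at the end is discarded."""
    flat = []
    for c in chunk_embeddings:
        flat.extend(sum(h * x for h, x in zip(row, c)) for row in H)
    return flat[:n_target]

def reshape(flat, rows, cols):
    return [flat[r * cols:(r + 1) * cols] for r in range(rows)]

chunk_size, embed_dim = 4, 3
rng = random.Random(0)
H = [[rng.gauss(0.0, 0.1) for _ in range(embed_dim)] for _ in range(chunk_size)]

# Target layer of shape 5 x 3 = 15 weights -> ceil(15 / 4) = 4 chunks.
n_target = 5 * 3
n_chunks = math.ceil(n_target / chunk_size)
chunk_embeddings = [[rng.gauss(0.0, 1.0) for _ in range(embed_dim)] for _ in range(n_chunks)]
W = reshape(chunkwise_generate(H, chunk_embeddings, n_target), 5, 3)

# A larger target reuses the same H; it only needs more chunk embeddings.
n_big = 7 * 3
big_chunks = [[rng.gauss(0.0, 1.0) for _ in range(embed_dim)]
              for _ in range(math.ceil(n_big / chunk_size))]
W_big = reshape(chunkwise_generate(H, big_chunks, n_big), 7, 3)
```

Because `H` is reused for every chunk, a larger target layer needs only more chunk embeddings, which is one way to obtain variable-size outputs.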

The architecture of hypernetworks is another design criterion that affects the weight generation process. Hypernetworks can have different architectures, ranging from simple feedforward architectures to more complex recurrent or convolutional architectures. The choice of architecture determines the connectivity patterns within the hypernetwork and can impact the expressiveness and capacity of the weights that it generates.

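| Design Criterion | Description |
|---|---|
| Inputs | What conditions the weights: a task embedding, the data, or noise, possibly encoding the structure of the weights and the architecture of the target network |
| Outputs | How the target weights are emitted: all at once, per layer, per component, or in chunks |
| Variability of inputs | Whether inputs are static (fixed per task) or dynamic (varying with the data) |
| Variability of outputs | Whether the target architecture, and so the output size, is fixed or can vary |
| Architecture | The hypernetwork's own structure (e.g., feedforward, convolutional, recurrent), which affects its connectivity and expressiveness |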

Hypernetworks also support soft weight sharing. Target networks for multiple related tasks draw their weights from the same hypernetwork, so they share its parameters even though the generated weights differ from task to task. This approach promotes reuse and facilitates transfer learning, and it avoids storing a separate full set of weights for every task.
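A toy calculation shows soft weight sharing at work. Assume a linear hypernetwork `H` (hypothetical) that maps a 3-dimensional task embedding to a 2-value weight vector, and take one gradient step on a squared-error loss for task A only. The gradient of ||H e_A - t||^2 with respect to H is 2 (H e_A - t) e_A^T. Therefore the weights generated for another task B move in proportion to the overlap e_A · e_B of the two embeddings.

```python
def generate(H, e):
    """Hypothetical linear hypernetwork shared by all tasks."""
    return [sum(h * x for h, x in zip(row, e)) for row in H]

def sgd_step_on_task(H, e, target, lr):
    """One gradient step on L = ||H e - target||^2 with respect to H,
    using dL/dH = 2 (H e - target) e^T."""
    residual = [w - t for w, t in zip(generate(H, e), target)]
    return [[h - lr * 2.0 * r * x for h, x in zip(row, e)]
            for row, r in zip(H, residual)]

H = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]  # shared parameters: 3-dim embedding -> 2 weights
e_a = [1.0, 0.0, 1.0]  # task A embedding
e_b = [1.0, 1.0, 0.0]  # task B embedding, overlaps with A in the first coordinate
e_c = [0.0, 1.0, 0.0]  # task C embedding, orthogonal to A

H_new = sgd_step_on_task(H, e_a, target=[1.0, -1.0], lr=0.1)
w_a, w_b, w_c = generate(H_new, e_a), generate(H_new, e_b), generate(H_new, e_c)
```

Training only task A moves task A's weights to [0.4, -0.4]. Task B's weights move to [0.2, -0.2], half as far, because e_A · e_B = 1 while e_A · e_A = 2. Task C's weights stay at zero. The learned embeddings therefore control how strongly tasks share.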

An interesting aspect of hypernetworks is their ability to generate weights for networks with dynamic architectures. In these cases, the structure of the target network changes during training or inference, allowing the network to adapt to different input conditions. Dynamic architectures can be beneficial in scenarios where the complexity of the problem varies or when the available data changes over time.

Moreover, hypernetworks can be designed to be data-adaptive. By considering the input data during the weight generation process, hypernetworks can generate weights that are customized to the specific input samples. This approach enhances the versatility and performance of the target network, as the generated weights are tailored to the characteristics of the input data.
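A data-adaptive layer can be sketched by feeding the sample itself to the hypernetwork. In this minimal, hypothetical example, a linear hypernetwork `H` maps the input `x` to a weight matrix `W(x)`, and the target layer then applies `W(x)` to the same `x`. Real systems usually condition on a compact feature summary of the input rather than on the raw sample.

```python
def data_conditioned_forward(x, H, in_dim, out_dim):
    """Hypothetical data-conditioned hypernetwork layer.

    The hypernetwork H maps the sample x to flattened weights,
    which are reshaped into W(x) and applied to the same x."""
    flat = [sum(h * xi for h, xi in zip(row, x)) for row in H]  # H x
    W = [flat[r * in_dim:(r + 1) * in_dim] for r in range(out_dim)]
    y = [sum(w * xi for w, xi in zip(row, x)) for row in W]     # W(x) x
    return y, W

H = [[1.0, 0.0], [0.0, 1.0]]  # maps a 2-dim input to a 1 x 2 weight matrix
y1, W1 = data_conditioned_forward([1.0, 2.0], H, in_dim=2, out_dim=1)
y2, W2 = data_conditioned_forward([3.0, 0.0], H, in_dim=2, out_dim=1)
```

With this `H`, each sample gets its own weights, and the layer computes x[0]**2 + x[1]**2: a quadratic function built from two linear maps. This multiplicative interaction between the generated weights and the input is what makes the layer data-adaptive.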

Types of Hypernetworks

Based on the design criteria discussed, hypernetworks can be categorized into several types:

- Fixed-Input Hypernetworks

- Variable-Input Hypernetworks

- Fixed-Output Hypernetworks

- Variable-Output Hypernetworks

- Fixed-Input and Output Hypernetworks

- Variable-Input and Output Hypernetworks

Each type of hypernetwork has different characteristics and implications for weight generation, and they can be applied to various problem settings based on their capabilities.

Challenges and Future Directions of Hypernetworks

While hypernetworks have shown promising results, there are still challenges and areas for future exploration. In order to fully harness the potential of hypernetworks, several key challenges need to be addressed:

Initialization

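One challenge lies in developing effective initialization methods for hypernetworks. Standard schemes such as Xavier or He initialization are designed for weights that are themselves trainable parameters. Applied to a hypernetwork's own layers, these schemes do not guarantee that the generated target weights have a suitable scale, which can lead to vanishing or exploding gradients in the target network. Research is needed to determine the most suitable initialization strategies for hypernetworks, taking their unique characteristics into account.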
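A simplified variance calculation shows where the scale problem comes from. It is an illustrative assumption, not a specific published scheme. Assume a linear hypernetwork emits a target weight vector w = He, where H ∈ ℝ^{n×d} has i.i.d. zero-mean entries with variance σ_H². Assume also that the embedding e is independent of H, with i.i.d. zero-mean entries of variance σ_e². Cross terms vanish because all factors are independent and zero-mean. Matching the He-initialization variance 2/m for a ReLU target layer with fan-in m then gives:

```latex
\operatorname{Var}(w_k)
  = \sum_{j=1}^{d} \mathbb{E}\!\left[H_{kj}^2\right]\mathbb{E}\!\left[e_j^2\right]
  = d\,\sigma_H^2\,\sigma_e^2
\qquad\Longrightarrow\qquad
d\,\sigma_H^2\,\sigma_e^2 = \frac{2}{m}
\;\;\Longleftrightarrow\;\;
\sigma_H^2 = \frac{2}{m\,d\,\sigma_e^2}.
```

Suppose instead the hypernetwork's output layer is He-initialized for its own fan-in d, so that σ_H² = 2/d. Then Var(w_k) = 2σ_e², independent of m. With σ_e = 1 and m = 512, the generated weights have variance 2 instead of 2/512 ≈ 0.0039, about 22.6 times too large in standard deviation. Hypernetwork-specific schemes such as hyperfan-in and hyperfan-out initialization follow a similar variance-matching idea.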

Stability

Ensuring the stability of hypernetworks during training is another challenge. Hypernetworks have the potential to introduce instability due to the additional complexity of generating weights for the target network. Developing regularization techniques and training algorithms that promote stability and prevent overfitting will contribute to the successful implementation of hypernetworks in various applications.

Complexity Concerns

Hypernetworks introduce additional computational complexity compared to traditional neural networks. Addressing this challenge involves optimizing the computational efficiency of hypernetworks without compromising their performance. Techniques such as weight sharing, sparsity, and low-rank factorization can be explored to reduce the complexity of hypernetworks while preserving their effectiveness.
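Low-rank factorization shrinks what the hypernetwork must emit. Instead of producing every entry of an m × n weight matrix, the hypernetwork can emit two factors U (m × r) and V (n × r) and set W = U V^T. The sketch assumes this scheme, and `low_rank_weight` and `output_sizes` are hypothetical helpers.

```python
def low_rank_weight(u_flat, v_flat, rows, cols, rank):
    """Assemble W = U V^T from the two flattened factors a hypernetwork would emit."""
    U = [u_flat[i * rank:(i + 1) * rank] for i in range(rows)]
    V = [v_flat[j * rank:(j + 1) * rank] for j in range(cols)]
    return [[sum(U[i][k] * V[j][k] for k in range(rank)) for j in range(cols)]
            for i in range(rows)]

def output_sizes(rows, cols, rank):
    """Number of values the hypernetwork emits: full matrix vs. factors."""
    return rows * cols, rank * (rows + cols)

# Rank-1 example: U = [1, 2]^T, V = [3, 4, 5]^T.
W = low_rank_weight([1.0, 2.0], [3.0, 4.0, 5.0], rows=2, cols=3, rank=1)
full, factored = output_sizes(256, 256, 8)
```

For a 256 × 256 layer at rank 8, the hypernetwork emits 4,096 values instead of 65,536. The cost is that W is restricted to rank at most 8.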

In addition to these challenges, there are several exciting future directions for hypernetwork research:

Enhancing Theoretical Understanding

Advancing the theoretical understanding of hypernetworks is an important direction for future research. This includes investigating the mathematical properties, convergence behavior, and expressive power of hypernetworks. A deeper theoretical understanding will pave the way for more efficient and effective design principles and training algorithms.

Uncertainty Quantification in Deep Neural Networks

Hypernetworks offer a unique opportunity to enhance uncertainty quantification in deep neural networks. When a hypernetwork generates a distribution over the target network's weights rather than a single set, the spread of the resulting predictions indicates how confident and reliable those predictions are. This can enable more robust decision-making and improve the interpretability of deep learning models.

Addressing Open Problems in Domains

Further advancements in hypernetworks can address open problems in various domains. For example, in domain adaptation, hypernetworks can facilitate the transfer of knowledge across different domains by generating task-specific models. In adversarial defense, hypernetworks can be utilized to create more resilient and robust models that are resistant to adversarial attacks. In neural architecture search, hypernetworks can aid in the automated design of high-performing network architectures.

By tackling these challenges and exploring these future directions, hypernetworks have the potential to revolutionize the field of deep learning, providing more flexibility, better performance, and novel solutions to open problems.

| Challenges | Future Directions |
|---|---|
| Initialization | Enhancing Theoretical Understanding |
| Stability | Uncertainty Quantification in Deep Neural Networks |
| Complexity Concerns | Addressing Open Problems in Domains |

Conclusion

Hypernetworks are an exciting advancement in deep learning. By generating weights for target networks, hypernetworks offer greater flexibility, adaptability, and faster training. Their applications span many domains, including healthcare, natural language processing, and computer vision, which shows their potential to transform these fields.

Despite the challenges and areas for future exploration, hypernetworks provide a new way to design and train neural networks. They open up possibilities for further advancements in deep learning, addressing existing limitations and pushing the boundaries of what is possible in AI technology.

As researchers continue to explore hypernetworks, their impact is likely to grow. They have already shown promising results in various problem settings, offering improved performance and flexibility. With ongoing advancements and refinements, hypernetworks could reshape the landscape of deep learning and pave the way for more innovative and powerful AI systems.

FAQ

What are hypernetworks?

Hypernetworks, also known as hypernets, are a type of neural network that generates weights for another neural network, referred to as the target network.

How do hypernetworks work?

A hypernetwork takes an input that conditions the weights, such as information about the structure of the weights and architecture of the target network, a task embedding, or the data itself. It then generates the weights for each layer of the target network.

What are the applications of hypernetworks in deep learning?

Hypernetworks have various applications in deep learning, including continual learning, transfer learning, weight pruning, uncertainty quantification, and reinforcement learning.

How are hypernetworks categorized?

Hypernetworks can be categorized by five design criteria: inputs, outputs, variability of inputs, variability of outputs, and the architecture of the hypernetwork.

What are the challenges and future directions of hypernetworks?

Some of the challenges and areas for future exploration in hypernetworks include initialization, stability, complexity concerns, and enhancing the theoretical understanding and uncertainty quantification of deep neural networks.

What are the benefits of using hypernetworks?

Hypernetworks can offer greater flexibility, adaptability, faster training, and model compression compared to standard neural networks.