Neural networks have transformed artificial intelligence (AI) and deep learning, enabling advances in many domains. One notable development in this area is the Capsule Network, which offers a new way to structure neural networks. Built on the foundation of Convolutional Neural Networks (CNNs), Capsule Networks aim to address limitations of traditional CNNs in complex computer vision tasks.

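Proposed by Geoffrey Hinton and colleagues (the best-known formulation is “Dynamic Routing Between Capsules” by Sabour, Frosst, and Hinton, 2017), Capsule Networks introduce an architecture designed to preserve spatial information and to model the relationships between object parts more explicitly than CNNs do. The original paper reported state-of-the-art accuracy on MNIST at the time and considerably better results than a CNN baseline on highly overlapping digits. This article explores how Capsule Networks work and their potential for AI, neural networks, and deep learning.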

Limitations of CNNs

While Convolutional Neural Networks (CNNs) have revolutionized image recognition and computer vision tasks, they are not without their limitations. Understanding these limitations is crucial for researchers and practitioners seeking to push the boundaries of AI and improve the accuracy and performance of computer vision systems.

Spatial Information

One of the key limitations of CNNs is that they discard spatial information. Pooling operations, such as max-pooling, are commonly used in CNN architectures to downsample feature maps and keep the strongest activations. However, downsampling throws away the precise positions at which features were detected, and with them information about an object’s parts and how they are arranged within the image. As a result, CNNs struggle with tasks that require detailed spatial understanding, such as accurately identifying the location and orientation of object parts.
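The following sketch illustrates how max-pooling discards arrangement. It assumes NumPy and a hypothetical helper `max_pool_2x2`. Two toy feature maps contain the same four activations, spread to the corners in one map and clustered in the center in the other, yet both pool to the same result.

```python
import numpy as np

def max_pool_2x2(x: np.ndarray) -> np.ndarray:
    """Non-overlapping 2x2 max-pooling with stride 2 (height and width must be even)."""
    h, w = x.shape
    # Split the map into 2x2 blocks and keep only the largest value in each block.
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

# Toy 4x4 feature maps from the same (hypothetical) part detector.
a = np.zeros((4, 4))
a[[0, 0, 3, 3], [0, 3, 0, 3]] = 1.0   # activations spread out to the four corners
b = np.zeros((4, 4))
b[[1, 1, 2, 2], [1, 2, 1, 2]] = 1.0   # activations clustered in the center

print(max_pool_2x2(a))  # a 2x2 array of ones
print(max_pool_2x2(b))  # the same 2x2 array of ones
```

Both maps pool to identical outputs, so the layer after pooling cannot tell whether the detected features were far apart or adjacent.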

Viewpoint Variance

CNNs also face challenges when it comes to recognizing objects from different viewpoints. Weight sharing makes convolution translation-equivariant (a shifted input produces a correspondingly shifted feature map), but CNNs have no built-in mechanism for rotation, scale, or changes in viewpoint, so they must learn each appearance of an object from the training data. For example, a CNN trained to recognize a dog in an upright position may struggle to identify the same dog when it is lying down or viewed from a different angle. This viewpoint variance problem limits the applicability of CNNs in scenarios where objects can look very different depending on their orientation.
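To make this concrete, here is a small NumPy sketch. The helper `circular_corr2d` is hypothetical, and wrap-around borders are used only so that shifts are exact. Shifting the input shifts the feature map by the same amount, but rotating the input does not simply rotate the output of a fixed, non-symmetric filter.

```python
import numpy as np

def circular_corr2d(x: np.ndarray, k: np.ndarray) -> np.ndarray:
    """2-D cross-correlation with wrap-around borders; output has the same shape as x."""
    out = np.zeros(x.shape, dtype=float)
    for a in range(k.shape[0]):
        for b in range(k.shape[1]):
            # np.roll(..., shift=(-a, -b)) places x[(i + a) % H, (j + b) % W] at (i, j)
            out += k[a, b] * np.roll(x, shift=(-a, -b), axis=(0, 1))
    return out

rng = np.random.default_rng(0)
x = rng.random((6, 6))          # toy single-channel "image"
k = np.array([[1.0, -1.0]])     # horizontal-gradient filter (not rotationally symmetric)

# Translation: shifting the input shifts the feature map by the same amount.
shift_ok = np.allclose(circular_corr2d(np.roll(x, (1, 2), axis=(0, 1)), k),
                       np.roll(circular_corr2d(x, k), (1, 2), axis=(0, 1)))

# Rotation: rotating the input does not just rotate the feature map.
rot_ok = np.allclose(circular_corr2d(np.rot90(x), k),
                     np.rot90(circular_corr2d(x, k)))

print(shift_ok, rot_ok)  # True False
```

A CNN therefore needs separately learned filters, or augmented training data, to cope with each orientation of an object.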

Part-Whole Problem

Another limitation of CNNs is their difficulty in representing the relationships between the parts of an object and the object as a whole, known as the Part-Whole problem. CNNs typically extract features from local regions and then aggregate them to recognize the object as a whole. However, this aggregation does not explicitly encode the spatial dependencies and hierarchical relationships between the parts. A commonly cited consequence is that a CNN may respond strongly to an image containing eyes, a nose, and a mouth even when those parts are scrambled into an arrangement that is not a face. As a result, CNNs may struggle with tasks that require precise object segmentation or understanding of complex structures.

“CNNs have paved the way for significant advancements in computer vision, but their limitations in preserving spatial information, handling viewpoint variance, and solving the Part-Whole problem are well-documented.”

Recognizing these limitations, researchers have been exploring alternative approaches to address these challenges and enhance the performance of computer vision systems.

| Limitations of CNNs | Solutions |
|---|---|
| Spatial Information | **Capsule Networks** preserve spatial relationships by incorporating **pose-sensitive** capsules. |
| Viewpoint Variance | **Data augmentation** techniques and **viewpoint-invariant** architectures can improve generalization. |
| Part-Whole Problem | **Graph-based models** and **attention mechanisms** enable better understanding of object structures. |

Researchers and engineers are actively exploring new approaches, such as Capsule Networks, to overcome these limitations and advance the field of computer vision. By capturing spatial relationships, addressing viewpoint variance, and modeling complex object structures, these new architectures hold promise for improving the accuracy and robustness of image recognition systems.

Examples Where CNNs Struggle

CNNs, while powerful for many computer vision tasks, have limitations that can hinder accurate recognition and understanding in certain scenarios.

Hidden Parts

One significant limitation is that CNNs can struggle to recognize objects whose parts are hidden. For instance, a CNN might fail to identify a dog standing behind a fence that partially obscures its body. Because CNNs do not explicitly preserve the spatial arrangement of the parts they do see, they have difficulty inferring the whole object and its context from an incomplete view.

New Viewpoints

CNNs also face challenges when presented with objects from new angles or poses. Imagine a cat lying down instead of sitting upright: a CNN may misclassify it because of the change in pose and viewpoint. This limitation stems from CNNs’ difficulty in relating the spatial arrangement of object parts across different perspectives.

Deformations

Another instance where CNNs struggle is deformation, as in facial expressions. When facial features deform, for example when a face changes expression, CNNs may fail to capture the new spatial relationships between those features. This limits their ability to accurately identify and interpret different facial expressions.

“CNNs are remarkable in many ways, but their inability to handle hidden parts, new viewpoints, and deformations remains a challenge in computer vision tasks.” – Dr. Jasmine Lee, Computer Vision Expert

To illustrate the limitations discussed above, consider the following table:

| Scenario | CNN Performance |
|---|---|
| Dog hidden behind a fence | Failure to recognize the dog due to obscured body parts |
| Cat lying down instead of upright | Misclassification or failure to recognize the cat |
| Facial expressions with altered features | Difficulty in recognizing and interpreting altered facial expressions |

The table and image showcase examples of scenarios where CNNs struggle. These challenges highlight the need for alternative approaches, such as Capsule Networks, which aim to address the limitations posed by CNNs and improve the understanding and recognition of object parts and relationships in computer vision tasks.

What are Capsule Networks?

Capsule Networks are a neural network architecture that builds upon the design of Convolutional Neural Networks (CNNs) and introduces the concept of capsules. A capsule is a group of neurons whose output is a vector rather than a single scalar: the length of the vector represents the probability that a particular entity (an object or object part) is present, and its orientation encodes the entity’s instantiation parameters, such as its pose. With this representation, Capsule Networks aim to address the limitations of CNNs and to better capture the spatial relationships within objects.

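The key concept behind Capsule Networks is equivariance: when an object in the input moves, rotates, or otherwise changes pose, the capsule outputs that describe it change in a corresponding way instead of discarding that information. Invariance, by contrast, means an output stays the same regardless of such changes. CNNs that rely on pooling lose spatial information as features are downsampled layer by layer, whereas Capsule Networks aim to preserve and use it: a capsule’s vector length (presence) can stay constant while its orientation (pose) follows the object. By representing how object parts relate to one another, Capsule Networks aim to build a deeper understanding of the structure and arrangement of objects.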
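A toy illustration of equivariance versus invariance, assuming a hypothetical two-dimensional capsule whose vector length is the presence probability and whose direction is the orientation of the detected part. This is not how a trained capsule computes its output; it only shows the target behavior: rotating the input rotates the vector (equivariance) while its length stays the same (invariance).

```python
import numpy as np

def rotation(theta: float) -> np.ndarray:
    """2-D rotation matrix for an angle theta in radians."""
    return np.array([[np.cos(theta), -np.sin(theta)],
                     [np.sin(theta),  np.cos(theta)]])

def toy_capsule(presence: float, angle: float) -> np.ndarray:
    """Hypothetical capsule output: length = presence, direction = part orientation."""
    return presence * np.array([np.cos(angle), np.sin(angle)])

v = toy_capsule(0.9, 0.3)                  # part detected at orientation 0.3 rad
v_rot = toy_capsule(0.9, 0.3 + np.pi / 4)  # same part after rotating the input by 45 degrees

print(np.allclose(rotation(np.pi / 4) @ v, v_rot))      # True: pose rotates with the input
print(np.linalg.norm(v), np.linalg.norm(v_rot))         # both 0.9: presence is unchanged
```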

To improve object recognition and understanding, Capsule Networks incorporate various techniques:

- Dynamic Routing: Lower-level capsules pass their output to higher-level capsules through an iterative process called routing-by-agreement. Each lower-level capsule predicts the output of every higher-level capsule, and it sends more of its output to the higher-level capsules whose actual outputs agree with its predictions. This builds a representation of the object’s part-whole hierarchy.

- Squashing Functions: Capsules apply a non-linear squashing function to their output vectors, which scales each vector’s length into the range [0, 1) while preserving its direction. The length can then serve as a probability or confidence measure, aiding in object classification.

- Margin Loss Functions: Capsule Networks are trained with a margin loss on the lengths of the instantiation vectors of the class capsules (one capsule per digit class in the original MNIST experiments). The loss pushes a capsule’s length above an upper threshold when its class is present and below a lower threshold when it is absent, which improves classification accuracy and lets the network recognize several digits in the same image.
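In the original formulation, a lower-level capsule does not send its raw output upward. It first multiplies its output u_i by a learned transformation matrix W_ij for each higher-level capsule j, producing a prediction vector û_{j|i}, its “vote” for that capsule’s output. A minimal NumPy sketch with random stand-in weights; the capsule counts are arbitrary, while the 8-D lower and 16-D upper capsule sizes follow the MNIST architecture in the original paper.

```python
import numpy as np

rng = np.random.default_rng(0)
num_lower, num_upper = 6, 3     # hypothetical capsule counts
dim_lower, dim_upper = 8, 16    # 8-D lower and 16-D upper capsules, as in the MNIST model

u = rng.normal(size=(num_lower, dim_lower))                        # lower-capsule outputs u_i
W = rng.normal(size=(num_lower, num_upper, dim_upper, dim_lower))  # stand-ins for learned W_ij

# u_hat[i, j] = W[i, j] @ u[i]: capsule i's prediction for the output of capsule j
u_hat = np.einsum("ijdk,ik->ijd", W, u)
print(u_hat.shape)  # (6, 3, 16)
```

The transformation matrices are where the part-whole geometry is learned; routing then decides how strongly each vote counts.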

Capsule Networks offer a promising alternative to traditional CNN architectures and have shown potential in computer vision tasks, particularly image recognition. By capturing details about object parts and their spatial relationships, Capsule Networks push the boundaries of neural network models, opening up new possibilities in the field of AI.

Image Alt Tag: Capsule Networks Neural Network Architecture

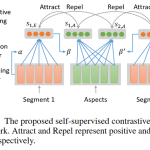

Dynamic Routing and Coupling Coefficients

In Capsule Networks, dynamic routing controls the flow of information between lower-level and higher-level capsules. It is an iterative procedure that runs during each forward pass and routes the output vectors of lower-level capsules mainly to the higher-level capsules that agree with them, resulting in a coherent representation of the object being analyzed.

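At the heart of dynamic routing are coupling coefficients: scalar values that determine the strength of the connection between each lower-level capsule and each higher-level capsule. For each lower-level capsule, the coupling coefficients to all higher-level capsules sum to 1 (they are obtained with a softmax over routing logits), so they set what share of that capsule’s output goes to each higher-level capsule. Rather than being fixed learned weights, they are recomputed on every forward pass according to how well each lower-level capsule’s prediction agrees with each higher-level capsule’s output. The learned transformation matrices that produce those predictions are what allow the network to capture the spatial hierarchies and relationships between object parts.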
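A short NumPy sketch of computing coupling coefficients from routing logits b_ij with a softmax over the higher-level capsules. The function name and the logit values are illustrative assumptions.

```python
import numpy as np

def coupling_coefficients(b: np.ndarray) -> np.ndarray:
    """Routing softmax: for each lower capsule i (row), normalize logits b_ij over upper capsules j."""
    e = np.exp(b - b.max(axis=1, keepdims=True))  # subtract the row max for numerical stability
    return e / e.sum(axis=1, keepdims=True)

b = np.zeros((4, 3))          # 4 lower capsules, 3 upper capsules; logits start at zero
c = coupling_coefficients(b)  # every entry is 1/3: no preference yet

b[0] = [2.0, 0.0, 0.0]        # suppose capsule 0's votes agreed most with upper capsule 0
c = coupling_coefficients(b)  # row 0 now sends most of its output to upper capsule 0
print(np.round(c, 3))
```

Each row still sums to 1, so strengthening one connection necessarily weakens the others for that lower-level capsule.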

To illustrate this concept further, consider an image recognition task where a Capsule Network is tasked with identifying a dog. Lower-level capsules that detect features such as the snout, ears, and tail each predict the pose of the whole dog. When those predictions agree, the coupling coefficients between these capsules and the higher-level capsule for the dog grow, so the dog capsule receives most of their output and captures both the presence of the dog and the arrangement of its features.
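The dog example can be sketched as routing-by-agreement in NumPy. This is a minimal version of the procedure described in the original paper under toy assumptions: 2-D prediction vectors, three part capsules (“snout”, “ear”, “tail”) and two candidate wholes (“dog”, “cat”). All three parts predict the same dog pose but disagree about the cat, so routing shifts their coupling toward the dog capsule.

```python
import numpy as np

def squash(s, axis=-1, eps=1e-9):
    """Scale each vector's length into [0, 1) while keeping its direction."""
    sq_norm = np.sum(s ** 2, axis=axis, keepdims=True)
    return (sq_norm / (1.0 + sq_norm)) * s / np.sqrt(sq_norm + eps)

def route(u_hat, num_iters=3):
    """Routing-by-agreement. u_hat has shape (num_lower, num_upper, dim)."""
    b = np.zeros(u_hat.shape[:2])                  # routing logits b_ij start at zero
    for _ in range(num_iters):
        c = np.exp(b - b.max(axis=1, keepdims=True))
        c /= c.sum(axis=1, keepdims=True)          # coupling coefficients: softmax over j
        s = np.einsum("ij,ijd->jd", c, u_hat)      # weighted sum of votes per upper capsule
        v = squash(s)                              # upper-capsule outputs
        b = b + np.einsum("ijd,jd->ij", u_hat, v)  # agreement: dot product of vote and output
    return v, c

# Toy 2-D votes from three part capsules for two candidate wholes: [dog, cat].
u_hat = np.array([
    [[2.0, 0.0], [0.0, 2.0]],    # snout
    [[2.0, 0.0], [0.0, -2.0]],   # ear
    [[2.0, 0.0], [-2.0, 0.0]],   # tail
])
v, c = route(u_hat)
print(np.round(c, 2))                          # each row puts most weight on the dog column
print(np.round(np.linalg.norm(v, axis=1), 2))  # dog capsule is much longer than cat capsule
```

After three iterations every part sends most of its output to the dog capsule, whose vector length ends up close to 1 while the cat capsule’s stays near 0.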

By combining dynamic routing with coupling coefficients, Capsule Networks are designed to capture complex spatial relationships within objects. This targets limitations of traditional CNNs, such as difficulty recognizing objects from different viewpoints and accurately representing the relationships between the parts of an object, also known as the Part-Whole problem. Through this architecture, Capsule Networks bring a new dimension to neural network models, advancing the field of AI and deep learning.

An Insight from Geoffrey Hinton

“Dynamic routing and coupling coefficients are essential components of Capsule Networks, allowing for the dynamic flow of information between capsules. This adaptive routing mechanism and the determination of the coupling coefficients contribute to the network’s ability to capture spatial hierarchies and relationships between object parts, revolutionizing the field of computer vision.” – Geoffrey Hinton

Squashing Function and Margin Loss Function

In Capsule Networks, the squashing function plays a crucial role in transforming the output vectors of capsules. This non-linear function preserves the direction of each vector while scaling its length into the range [0, 1): short vectors shrink to almost zero, and long vectors to a length slightly below 1. By doing so, the squashing function allows the length of the vector to serve as a probability or confidence measure.
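In the original paper, capsule j first sums the prediction vectors of the capsules below it, weighted by the coupling coefficients, and then squashes the result:

```latex
\hat{\mathbf{u}}_{j|i} = \mathbf{W}_{ij}\,\mathbf{u}_i, \qquad
\mathbf{s}_j = \sum_i c_{ij}\,\hat{\mathbf{u}}_{j|i}, \qquad
\mathbf{v}_j = \frac{\lVert \mathbf{s}_j \rVert^2}{1 + \lVert \mathbf{s}_j \rVert^2}\,
               \frac{\mathbf{s}_j}{\lVert \mathbf{s}_j \rVert}
```

Here u_i is the output of lower-level capsule i, W_ij is a learned transformation matrix, c_ij is a coupling coefficient, and the length of v_j equals the first factor, which always lies in [0, 1).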

The squashing function is particularly important in Capsule Networks as it enables the network to understand the presence and pose of object parts. By scaling the vectors between 0 and 1, the network can assign higher probabilities to object parts present in an image, enhancing its ability to recognize different components and their spatial relationships.
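A minimal NumPy version of the squashing function. The `eps` term is an assumption added here to avoid division by zero for an all-zero vector.

```python
import numpy as np

def squash(s: np.ndarray, axis: int = -1, eps: float = 1e-9) -> np.ndarray:
    """Shrink vector length into [0, 1) while keeping its direction."""
    sq_norm = np.sum(s ** 2, axis=axis, keepdims=True)
    scale = sq_norm / (1.0 + sq_norm)
    # eps keeps the division finite when s is the zero vector
    return scale * s / np.sqrt(sq_norm + eps)

short_vec = squash(np.array([0.1, 0.0]))  # length 0.01 / 1.01, about 0.0099
long_vec = squash(np.array([3.0, 4.0]))   # length 25 / 26, about 0.96, direction [0.6, 0.8]
print(np.linalg.norm(short_vec), np.linalg.norm(long_vec))
```

A weak detection therefore gets a near-zero length (low confidence), while a strong one approaches but never reaches 1.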

The squashing function should not be confused with the softmax function. Softmax normalizes a set of values so that they sum to 1, and in Capsule Networks it is used inside dynamic routing to compute the coupling coefficients. Squashing, by contrast, acts on each capsule’s vector independently, so several capsules can have long vectors at the same time, for example when an image contains more than one object.
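The contrast between squashing and a softmax shows up when two class capsules are both strongly active, for example with two overlapping digits. A NumPy sketch with hypothetical pre-squash vectors: the squashed lengths are computed per capsule and can both be close to 1, while a softmax over the same capsules must sum to 1.

```python
import numpy as np

# Hypothetical pre-squash vectors s_j for two class capsules; both are long.
s = np.array([[3.0, 4.0],    # ||s|| = 5
              [0.0, 4.0]])   # ||s|| = 4
norms = np.linalg.norm(s, axis=1)

# The length of squash(s_j) is ||s_j||^2 / (1 + ||s_j||^2), computed per capsule.
squashed_lengths = norms ** 2 / (1.0 + norms ** 2)  # about [0.962, 0.941]

# A softmax over the same capsules makes them compete and forces a sum of 1.
e = np.exp(norms - norms.max())
softmax_scores = e / e.sum()                        # about [0.731, 0.269]

print(squashed_lengths, softmax_scores)
```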

Another essential component of Capsule Networks is the margin loss function. Introduced with Capsule Networks, this loss trains the lengths of the instantiation vectors of the class capsules, one capsule for each possible digit class in the original MNIST experiments.

The margin loss function aids in training the network by penalizing two kinds of error for each class capsule: a present class whose vector is too short, and an absent class whose vector is too long. It does this with two fixed thresholds, an upper margin for present classes and a lower margin for absent ones, rather than by comparing the correct class directly against the incorrect ones. By adjusting the lengths of the instantiation vectors accordingly, the network refines its recognition of different digits and improves its classification accuracy.
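A NumPy sketch of the margin loss for a batch of capsule lengths. It uses the per-class form from the original paper, L_k = T_k max(0, m⁺ − ‖v_k‖)² + λ(1 − T_k) max(0, ‖v_k‖ − m⁻)², summed over classes, where T_k is 1 if class k is present and 0 otherwise. The defaults m⁺ = 0.9, m⁻ = 0.1, and λ = 0.5 follow the paper; the function name and argument layout are assumptions.

```python
import numpy as np

def margin_loss(lengths, targets, m_pos=0.9, m_neg=0.1, lam=0.5):
    """Margin loss averaged over a batch.

    lengths: (batch, num_classes) lengths of the class-capsule vectors
    targets: (batch, num_classes) 1 if the class is present, else 0
    """
    present = targets * np.maximum(0.0, m_pos - lengths) ** 2              # too short when present
    absent = lam * (1.0 - targets) * np.maximum(0.0, lengths - m_neg) ** 2  # too long when absent
    return np.sum(present + absent, axis=1).mean()

targets = np.array([[1.0, 0.0, 0.0]])
print(margin_loss(np.array([[0.95, 0.05, 0.00]]), targets))  # 0.0: all thresholds met
print(margin_loss(np.array([[0.95, 0.05, 0.30]]), targets))  # about 0.02: absent class 2 too long
print(margin_loss(np.array([[0.50, 0.05, 0.00]]), targets))  # about 0.16: present class 0 too short
```

The factor λ down-weights errors on absent classes, which the paper uses so that early training does not shrink the lengths of all class capsules.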

The margin loss function is effective for training Capsule Networks because it scores the presence and absence of each digit separately, which also allows several digits to be recognized in one image. Combined with dynamic routing and the squashing function, it was used to achieve state-of-the-art results on MNIST at the time the original paper was published.

Example of Margin Loss Values During Capsule Network Training:

| Training Iteration | Loss Value |
|---|---|
| 1 | 0.6 |
| 2 | 0.4 |
| 3 | 0.2 |
| 4 | 0.1 |

The table above illustrates the loss values during the training of a Capsule Network. As training progresses, the loss decreases, indicating that the network is fitting the training data better; whether it has also become more accurate at classifying digits should be confirmed on held-out data.

Conclusion

Capsule Networks offer a new approach to neural network models in deep learning and artificial intelligence. By addressing limitations of Convolutional Neural Networks (CNNs) and introducing capsules, dynamic routing, and squashing functions, Capsule Networks aim to improve how networks understand the spatial relationships between object parts.

These ideas have produced strong results, such as state-of-the-art accuracy on MNIST when the original paper was published, making Capsule Networks a promising direction for computer vision tasks such as image recognition and, potentially, object detection. Their architecture and routing mechanism are designed to capture more detailed and contextual information about objects, with the aim of higher precision and reliability.

Furthermore, the potential of Capsule Networks extends beyond their current capabilities. Ongoing research and advancements in this field hold promise for further optimization and the development of sophisticated neural network models. As we continue to explore and leverage the power of Capsule Networks, we open up new possibilities for enhancing AI applications and unlocking new frontiers in deep learning.

FAQ

What are Capsule Networks?

Capsule Networks are neural networks that build on the design of CNNs by introducing capsules: groups of neurons that output vectors whose length represents the probability that an entity is present and whose orientation encodes its pose and other instantiation parameters. Capsule Networks aim for equivariance, meaning that their outputs change in step with changes in an object’s pose instead of discarding that information. They use dynamic routing, squashing functions, and margin loss functions to enhance the network’s understanding of object parts and their arrangement.

How do Capsule Networks address the limitations of CNNs?

Capsule Networks address the limitations of CNNs by preserving spatial information and modeling relationships between object parts. Unlike CNNs, which lose spatial information as pooling layers downsample their feature maps, Capsule Networks use capsules to preserve detailed information about an object’s pose and presence. By incorporating dynamic routing, squashing functions, and margin loss functions, Capsule Networks aim to improve the understanding of spatial relationships between object parts and to achieve higher accuracy.

What are the limitations of CNNs?

CNNs have limitations in preserving spatial information, as pooling operations like max-pooling discard valuable information about an object’s parts and their arrangement. CNNs also struggle with recognizing objects from different viewpoints and representing relationships between different parts of an object, known as the Part-Whole problem. These limitations can lead to confusion and errors in image recognition.

In what scenarios do CNNs struggle?

CNNs struggle in various scenarios, such as when parts of an object are hidden or when the object is viewed from new angles or poses. For example, CNNs may fail to recognize a dog with its body hidden behind a fence or a cat that is lying down instead of upright. Additionally, CNNs can struggle with facial expressions, because they do not capture how the spatial relationships between facial features change when those features deform.

What is dynamic routing and how does it work in Capsule Networks?

Dynamic routing is a key component of Capsule Networks that lets lower-level capsules route their output vectors to the most appropriate higher-level capsules. It relies on coupling coefficients, scalar values that determine the strength of the connection between capsules. The coupling coefficients set how much of each lower-level capsule’s output is sent to each higher-level capsule, and they are refined over several routing iterations according to how well the capsules’ outputs agree, helping the network represent spatial hierarchies.

What is the squashing function in Capsule Networks?

The squashing function is a non-linear function applied to the output vectors of capsules in Capsule Networks. It preserves the direction of each vector while scaling its length into the range [0, 1), so the length of the vector can serve as a probability or confidence measure for the presence of the entity the capsule represents.

What is the margin loss function in Capsule Networks?

The margin loss function is a loss function introduced with Capsule Networks. It trains the lengths of the instantiation vectors of the class capsules, one per possible digit class in the original MNIST experiments: a capsule’s vector should be long when its digit is present and short when it is absent. By shaping the lengths of these vectors, the margin loss enhances the network’s ability to distinguish between different classes.

How do Capsule Networks improve neural network models?

Capsule Networks offer a new dimension in neural network models by addressing limitations of CNNs and incorporating capsules, dynamic routing, and squashing functions. With their architecture and routing mechanisms, Capsule Networks aim to improve the understanding of spatial relationships between object parts and to achieve higher accuracy. Further research could make them increasingly valuable for deep learning and AI applications, particularly in computer vision tasks like image recognition and object detection.