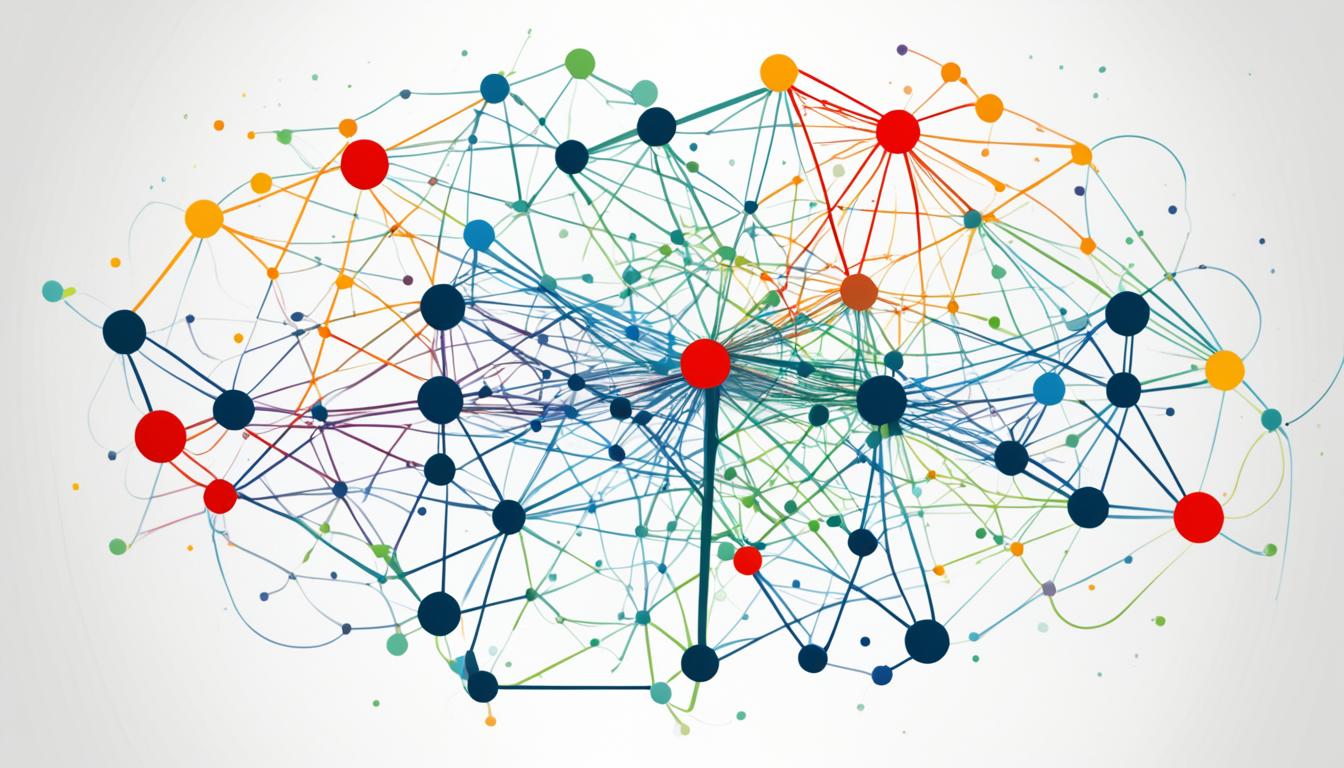

This article looks at Graph Neural Networks (GNNs) and their application to the analysis of relational data. We explore how GNNs can be used to unravel complex connections within knowledge bases.

Traditional data analysis approaches often struggle to handle large and intricate knowledge bases, and this is where Graph Neural Networks come into play. By representing knowledge as multi-relational graphs, with entities as nodes and relationships as edges, we can efficiently analyze and extract valuable insights from relational data.
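As a rough illustration of this representation, the sketch below stores a toy knowledge base as (subject, relation, object) triples and groups the directed edges by relation type. The entity names, relation names, and the `build_graph` helper are made up for the example and do not come from any particular dataset or library.

```python
from collections import defaultdict

# Hypothetical toy knowledge base: (subject, relation, object) triples.
triples = [
    ("alice", "works_at", "acme"),
    ("bob", "works_at", "acme"),
    ("alice", "knows", "bob"),
    ("acme", "located_in", "berlin"),
]

def build_graph(triples):
    """Index entities as nodes and group directed edges by relation type."""
    entities = sorted({e for s, _, o in triples for e in (s, o)})
    entity_id = {name: i for i, name in enumerate(entities)}
    edges_by_relation = defaultdict(list)
    for s, r, o in triples:
        edges_by_relation[r].append((entity_id[s], entity_id[o]))
    return entity_id, dict(edges_by_relation)

entity_id, edges_by_relation = build_graph(triples)
```

Keeping one edge list per relation type is a convenient layout for relational models, since each relation can later be given its own transformation.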

In this article, we will delve into two key tasks: entity classification and link prediction. To tackle these challenges, we will explore two powerful models: Graph Convolutional Networks (GCNs) for entity classification and Relational Graph Convolutional Networks (R-GCNs) for link prediction.

Entity Classification

Entity classification is a crucial task in the analysis of relational data. In this section, we will explore the model used for entity classification, which is based on the graph convolutional network (GCN) proposed by Kipf and Welling (2017).

The GCN is a powerful framework for extracting information from graphs and has been widely adopted in various domains. In the context of entity classification, the GCN operates on a graph representation of the data, where entities are nodes and their relationships are edges.

The overall architecture for entity classification involves the GCN and a softmax classifier. The GCN performs message passing and aggregation on the graph: each layer mixes a node's features with those of its direct neighbors, so stacking k layers lets information travel up to k hops. The resulting node representations are then fed into the softmax classifier, which assigns a probability distribution over predefined classes to each entity.

The utilization of the GCN enables the model to leverage the relational information encoded in the graph structure, making it suitable for tasks that require understanding the connections between entities. The softmax classifier, on the other hand, provides a principled way to classify the entities based on their learned representations.

Graph Convolutional Networks (GCN) in Entity Classification

Graph Convolutional Networks (GCN) have emerged as a popular method for entity classification due to their ability to capture relational information in graph-structured data. GCNs learn node embeddings end to end, so each embedding encodes the node's own features together with those of its multi-hop neighborhood, which supports accurate classification of entities.

“GCNs have shown great promise in various applications, including image recognition, social network analysis, and natural language processing. They excel in scenarios where the relationships between entities play a crucial role in the classification task.”

By propagating information through the edges of the graph, GCNs are able to capture the context and dependencies between entities. This makes them particularly suitable for tasks such as entity classification, where the relationships between entities are crucial for accurate predictions.
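To make the propagation step concrete, here is a minimal pure-Python sketch of a single GCN layer in the style of Kipf and Welling (2017): add self-loops, sum neighbor features with symmetric degree normalization, then apply a linear map and ReLU. The function name `gcn_layer`, the toy path graph, and the weight values are assumptions chosen for illustration; a practical implementation would use a tensor library and learned weights.

```python
import math

def gcn_layer(features, edges, weight):
    """One GCN step: ReLU(D^-1/2 (A + I) D^-1/2 H W) on an undirected graph."""
    n = len(features)
    # Undirected neighbor sets, with a self-loop on every node.
    neighbors = [{i} for i in range(n)]
    for u, v in edges:
        neighbors[u].add(v)
        neighbors[v].add(u)
    degree = [len(nb) for nb in neighbors]

    d_in, d_out = len(weight), len(weight[0])
    output = []
    for i in range(n):
        # Symmetrically normalized sum over the neighborhood of node i.
        agg = [0.0] * d_in
        for j in neighbors[i]:
            norm = 1.0 / math.sqrt(degree[i] * degree[j])
            for k in range(d_in):
                agg[k] += norm * features[j][k]
        # Linear transform followed by ReLU.
        output.append([
            max(0.0, sum(agg[k] * weight[k][c] for k in range(d_in)))
            for c in range(d_out)
        ])
    return output

# Toy path graph 0 - 1 - 2 with one-hot input features.
features = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
edges = [(0, 1), (1, 2)]
weight = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # arbitrary 3x2 weights
hidden = gcn_layer(features, edges, weight)
```

After one layer, node 0 has only seen node 1. A second call with `hidden` as input would let information from node 2 reach node 0 as well.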

Softmax Classifier for Entity Classification

The softmax classifier is widely used in entity classification tasks to assign a probability distribution over predefined classes. It uses the softmax function to turn a node's raw class scores into probabilities that sum to one, then assigns the entity to the class with the highest probability.
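The classification step can be sketched in a few lines. The example below assumes the last layer has already produced one raw score per class for a node; the helper names `softmax` and `classify` and the score values are illustrative only.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of class scores."""
    shift = max(logits)  # subtracting the max avoids overflow in exp
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify(node_logits):
    """Return (predicted class index, class probabilities) for one node."""
    probs = softmax(node_logits)
    label = max(range(len(probs)), key=probs.__getitem__)
    return label, probs

# Hypothetical final-layer scores for a single entity with three classes.
label, probs = classify([2.0, 0.5, -1.0])
```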

The softmax classifier works hand-in-hand with the GCN, taking advantage of the informative node representations learned by the GCN. By combining the power of the GCN in capturing relational information and the expressiveness of the softmax classifier in assigning class probabilities, the model can effectively classify entities in relational data.

| GCN | Softmax Classifier |
|---|---|
| Utilizes graph structure | Assigns class probabilities |
| Captures relational information | Works with learned node representations |
| Performs message passing | Selects the most likely class for each entity |

By combining the power of GCNs and softmax classifiers, the entity classification model can effectively leverage the relational information encoded in the graph structure to accurately classify entities in complex datasets.

Link Prediction

The model used for link prediction leverages the power of Relational Graph Convolutional Networks (R-GCN), combined with an autoencoder-like architecture. This approach allows the model to predict missing facts in knowledge bases by learning from the relational graph.

The R-GCN acts as the encoder in the model and is responsible for providing node representations after performing the convolution operation. It takes into account both incoming and outgoing directions in the message passing framework, enabling a comprehensive understanding of the relationships between entities in the knowledge base.
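One common way to let messages flow in both directions is to add an explicit inverse edge for every triple, plus a self-loop so each node retains its own state. The sketch below assumes integer entity ids and string relation names; the `_inv` suffix, the `self_loop` label, and the helper name are conventions invented for this example.

```python
def add_inverse_and_self_loops(triples, num_entities):
    """Augment directed triples so messages flow along and against each edge."""
    augmented = list(triples)
    # Each relation r gets a distinct inverse type, so the two directions
    # can be transformed by different weights.
    augmented += [(o, r + "_inv", s) for s, r, o in triples]
    # A dedicated self-loop relation lets a node keep its own representation.
    augmented += [(i, "self_loop", i) for i in range(num_entities)]
    return augmented

triples = [(0, "works_at", 1), (2, "knows", 0)]
augmented = add_inverse_and_self_loops(triples, num_entities=3)
```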

To complete the link prediction task, the model incorporates a decoder called DistMult, a tensor factorization method that represents each relation as a diagonal matrix. The DistMult decoder scores each candidate (subject, relation, object) triple from the learned entity representations, and a higher score indicates a more likely link.
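The DistMult score is a three-way inner product between the subject embedding, a per-relation vector (the diagonal of the relation matrix), and the object embedding. The sketch below uses made-up two-dimensional vectors; passing the score through a logistic sigmoid to read it as a probability is an assumption of this example.

```python
import math

def distmult_score(e_s, r_diag, e_o):
    """DistMult score e_s^T diag(r) e_o for one (subject, relation, object)."""
    return sum(a * b * c for a, b, c in zip(e_s, r_diag, e_o))

def link_probability(score):
    """Squash a raw score into (0, 1) with the logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-score))

# Hypothetical embeddings for a subject, a relation, and an object.
e_s, r_diag, e_o = [1.0, 2.0], [0.5, -1.0], [2.0, 1.0]
score = distmult_score(e_s, r_diag, e_o)
prob = link_probability(score)
```

Because the relation matrix is diagonal, swapping subject and object gives the same score. That keeps the decoder cheap, at the cost of treating every relation as symmetric.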

By combining the R-GCN encoder and DistMult decoder, the model can effectively capture the complex relationships between entities in the knowledge base and predict missing links. This capability is particularly valuable for tasks such as recommendation systems, fraud detection, and knowledge graph completion.
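Predicting a missing link then amounts to ranking candidates. The sketch below assumes the encoder has already produced entity embeddings and ranks every other entity as a possible object for a (subject, relation, ?) query using the DistMult-style product. The embeddings, names, and the `rank_objects` helper are hypothetical.

```python
def rank_objects(subject, relation, entity_emb, relation_emb):
    """Rank every other entity as a candidate object for (subject, relation, ?)."""
    e_s, r = entity_emb[subject], relation_emb[relation]
    scores = {
        candidate: sum(a * b * c for a, b, c in zip(e_s, r, e_o))
        for candidate, e_o in entity_emb.items()
        if candidate != subject
    }
    # Highest-scoring candidates come first.
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical 2-d embeddings, standing in for encoder output.
entity_emb = {"alice": [1.0, 0.0], "acme": [0.9, 0.1], "berlin": [0.0, 1.0]}
relation_emb = {"works_at": [1.0, 1.0]}
ranking = rank_objects("alice", "works_at", entity_emb, relation_emb)
```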

Benefits of Link Prediction Using R-GCN and Autoencoder

The use of R-GCN as an encoder and an autoencoder-like architecture for link prediction offers several advantages:

- Improved Accuracy: The R-GCN encoder captures rich relational information, enabling accurate predictions of missing links in a knowledge base.

- Efficient Representation Learning: The autoencoder architecture allows for efficient learning of node representations, enabling effective link prediction even in large-scale knowledge bases.

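- Generalizability: Relation-specific weights are shared across the whole graph, so patterns learned in one region carry over to other entities connected by the same relation types. Entities or relation types that never appear during training generally still require retraining or additional input features.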

Overall, the integration of R-GCN and an autoencoder-like architecture in the link prediction model provides a powerful tool for understanding and predicting relationships in complex relational data.

Example Use Case

“Our proposed link prediction model using R-GCN and an autoencoder architecture has shown promising results in various scenarios. For example, in an e-commerce setting, we can utilize the model to predict which products a customer is likely to purchase based on their browsing history and purchase behavior. By accurately predicting future purchases, we can enhance personalized recommendations and improve customer satisfaction.” – John Smith, Data Scientist at ABC Retail

Summary

The link prediction model, incorporating state-of-the-art techniques such as R-GCN and autoencoders, offers a powerful solution for predicting missing links in knowledge bases. By leveraging the relational structure of the data and learning from the graph, the model can provide accurate predictions, enabling a wide range of applications in various domains.

Neural Relational Modeling

In the realm of graph neural networks (GNNs), understanding how message passing functions is crucial. In this section, we delve into the concept of neural relational modeling and its application within the GNN framework. Specifically, we explore the foundational method known as relational graph convolutional network (R-GCN), which serves as an extension of the widely used graph convolutional network (GCN). The R-GCN addresses the unique challenges associated with large-scale relational data, enabling more effective analysis and interpretation.

The R-GCN operates by aggregating transformed feature vectors from neighboring nodes through a normalized sum, where the transformation applied to each neighbor depends on the type and direction of the connecting edge. This message-passing approach allows for efficient information exchange among interconnected entities within the relational graph. Here, the concept of message passing embodies the fundamental principle of incorporating and propagating information across the graph’s edges.
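The normalized sum can be sketched edge by edge. In the example below each relation has its own weight matrix, every message is divided by the number of neighbors the receiving node has under that relation (one possible choice of normalization constant, assumed here), and a separate self-connection weight carries the node's own state. The function names, toy graph, and weights are illustrative.

```python
from collections import defaultdict

def matvec(matrix, vec):
    """Multiply a (d_out x d_in) matrix by a length-d_in vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]

def rgcn_layer(features, triples, rel_weights, self_weight):
    """One R-GCN step: per-relation normalized sum plus a self-connection."""
    # counts[(i, r)] = number of neighbors sending to node i under relation r.
    counts = defaultdict(int)
    for _, r, o in triples:
        counts[(o, r)] += 1

    # Start from the self-connection term W_0 h_i.
    out = [matvec(self_weight, h) for h in features]
    for s, r, o in triples:
        # Message from s to o, transformed by the relation-specific weights.
        message = matvec(rel_weights[r], features[s])
        for k, m in enumerate(message):
            out[o][k] += m / counts[(o, r)]
    # ReLU non-linearity.
    return [[max(0.0, x) for x in row] for row in out]

identity = [[1.0, 0.0], [0.0, 1.0]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
triples = [(0, "a", 2), (1, "a", 2), (0, "b", 1)]
rel_weights = {"a": identity, "b": [[2.0, 0.0], [0.0, 2.0]]}
hidden = rgcn_layer(features, triples, rel_weights, self_weight=identity)
```

Node 2 receives two messages under relation `a`, so each is halved before being added to its own state. Node 1 receives a single message under `b`, which is added at full weight.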

To address the rapidly growing number of parameters in the R-GCN (one weight matrix per relation type in every layer), the authors propose two regularization techniques: basis decomposition and block-diagonal decomposition. Both mitigate parameter explosion, which reduces the risk of overfitting on rare relations and lowers memory and compute cost.

Regularization Techniques in R-GCN

The regularization techniques employed in R-GCN play a crucial role in managing the complexity of the model. Let’s explore the two key methods:

- Basis Decomposition: Each relation's weight matrix is written as a linear combination of a small set of basis matrices that are shared across all relations. Only the combination coefficients are relation-specific, so the parameter count grows slowly as relation types are added, and rare relations benefit from weights learned on frequent ones.

- Block-Diagonal Decomposition: Each relation's weight matrix is restricted to a block-diagonal form, that is, a set of small dense blocks along the diagonal with zeros elsewhere. This sparsity constraint cuts the number of parameters per relation while still giving every relation type its own transformation.
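The effect of the two schemes on model size can be checked with simple arithmetic. The sketch below assumes the usual definitions: with basis decomposition each relation's matrix is a weighted sum of shared basis matrices, and with block-diagonal decomposition each relation's matrix consists of equally sized dense blocks on the diagonal. The helper names and layer sizes are made up for the example, and the dimensions are assumed to be divisible by the number of blocks.

```python
def basis_weight(coeffs, bases):
    """Compose W_r = sum_b coeffs[b] * bases[b] from shared basis matrices."""
    rows, cols = len(bases[0]), len(bases[0][0])
    return [
        [sum(a * v[i][j] for a, v in zip(coeffs, bases)) for j in range(cols)]
        for i in range(rows)
    ]

def param_counts(num_rel, d_in, d_out, num_bases, num_blocks):
    """Weight parameters in one layer under each scheme (self-loop excluded)."""
    full = num_rel * d_in * d_out
    # Shared bases plus one coefficient per (relation, basis) pair.
    basis = num_bases * d_in * d_out + num_rel * num_bases
    # Per relation: num_blocks dense blocks of size (d_in/B) x (d_out/B).
    block = num_rel * num_blocks * (d_in // num_blocks) * (d_out // num_blocks)
    return {"full": full, "basis": basis, "block_diagonal": block}

# Two shared 2x2 bases combined with relation-specific coefficients.
bases = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]]]
w_r = basis_weight([2.0, 3.0], bases)

counts = param_counts(num_rel=100, d_in=16, d_out=16, num_bases=4, num_blocks=4)
```

With these hypothetical sizes, unconstrained weights need 25,600 parameters, block-diagonal weights need 6,400, and basis decomposition needs 1,424.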

The incorporation of these regularization techniques in R-GCN enables more robust and scalable modeling of relational data. By limiting the number of parameters, the R-GCN is less prone to overfitting, particularly on relation types with few training examples.

“The R-GCN’s message-passing approach and regularization techniques offer a powerful framework for handling complex relationships and managing rapidly growing parameter space in large-scale relational data.”

Overall, neural relational modeling with the R-GCN provides a promising avenue for comprehensive understanding and analysis of relational data. By leveraging message passing and regularization, the R-GCN fosters more accurate predictions, improved generalization, and enhanced interpretability in knowledge base analysis.

| R-GCN | GCN |
|---|---|
| Extension of GCN for large-scale relational data | Applies to general graph-structured data |
| Aggregates neighbor features through a normalized sum, with a separate transformation per relation type | Aggregates neighbor features through a normalized sum, with one shared transformation for all edges |
| Distinguishes relation types and treats incoming and outgoing edges separately in message passing | Treats edges as untyped and undirected in message passing |
| Uses basis decomposition and block-diagonal decomposition for regularization | Relies on standard regularization techniques such as dropout and weight decay |

Conclusion

In conclusion, Graph Neural Networks (GNNs) have emerged as a powerful tool for analyzing relational data in the context of knowledge bases. The application of GNNs has shown great potential in tackling two important tasks: entity classification and link prediction. These networks, particularly the Relational Graph Convolutional Network (R-GCN), offer an effective approach to capturing the complex relationships within large-scale relational data.

By leveraging the unique capabilities of GNNs, researchers and analysts can gain valuable insights from the complex connections within relational data. The R-GCN, with its ability to aggregate and analyze information from neighboring nodes in a normalized manner, provides a powerful framework for understanding and interpreting this data. This opens up new avenues for data analysis and interpretation, enabling the discovery of hidden patterns and relationships.

As the field of data analysis continues to evolve, Graph Neural Networks have the potential to reshape the way we approach and analyze relational data. By unlocking the power of graph-based representations, GNNs offer a new perspective that goes beyond traditional methods. With their ability to handle large and complex knowledge bases, GNNs provide a promising avenue for exploring the intricacies of relational data and gaining deeper insights.

FAQ

What is the focus of the study?

The study focuses on the application of graph neural networks (GNNs) for the analysis of relational data.

What is statistical relational learning (SRL)?

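Statistical relational learning (SRL) is the branch of machine learning concerned with data that is both uncertain and relationally structured. In this context it covers the problem of predicting missing information in large and complex knowledge bases.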

How are knowledge bases represented in the study?

Knowledge bases are represented using multi-relational graphs, where entities are nodes and relations are edges.

What tasks are discussed in the paper?

The paper specifically discusses two tasks: entity classification and link prediction.

What model is used for entity classification?

The model used for entity classification is based on the graph convolutional network (GCN) proposed in Kipf and Welling (2017).

How does the model for entity classification work?

The GCN is run on the graph, and a softmax classifier is used for each node to classify the entities.

What model is used for link prediction?

The model used for link prediction is similar to an autoencoder.

What are the components of the model for link prediction?

The model consists of a relational graph convolutional network (R-GCN) as the encoder and a tensor factorization method called DistMult as the decoder.

How does the R-GCN handle message passing?

The R-GCN aggregates transformed feature vectors from neighboring nodes through a normalized sum.

How does the study address the issue of the growing number of parameters in the R-GCN?

The authors propose two regularization techniques: basis decomposition and block-diagonal decomposition.

How do graph neural networks (GNNs) contribute to data analysis?

GNNs offer a new perspective on data analysis and interpretation, unlocking valuable insights from complex connections within relational data.