Generative models learn the patterns in their training data and use them to produce new samples, which makes them one of the most active areas of artificial intelligence and machine learning. Two standout architectures, Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), have transformed the field. GANs consist of a generator network and a discriminator network locked in a continual duel that pushes the generator toward realistic data samples. VAEs, on the other hand, use an encoder network and a decoder network built around a probabilistic latent space from which new data is generated. These models have numerous real-world applications, such as art generation, data augmentation, anomaly detection, drug discovery, and image super-resolution. However, ethical concerns such as deepfake generation and privacy violations must be addressed.

Variational Autoencoders (VAEs) are probabilistic models that excel in generative modeling. They consist of an encoder network, which maps input data into a probabilistic latent space, and a decoder network, which reconstructs the original data from the latent space. Because VAEs treat the latent space as a probability distribution, new data can be generated by sampling from that distribution and decoding the result. VAEs have applications in image generation, anomaly detection, data augmentation, and feature learning. Implementing one involves building the encoder and decoder networks plus a sampling step that draws latent vectors from the encoder's output distribution. Practical challenges include limited representation capacity and balancing the reconstruction loss against the KL divergence term.

Generative Adversarial Networks (GANs)

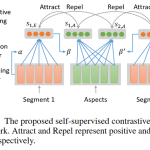

Generative Adversarial Networks (GANs), introduced by Ian Goodfellow and colleagues in 2014, have revolutionized the field of generative models. GANs consist of a generator network and a discriminator network, working in tandem through an adversarial training process to produce realistic data samples. The generator creates new data, while the discriminator tries to distinguish real samples from fake ones.

Through a competitive interplay, GANs refine their abilities over time. The generator strives to generate data that is indistinguishable from real samples, while the discriminator becomes increasingly skilled at identifying fake data. This adversarial training dynamic drives GANs towards achieving remarkable results in various domains.
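The duel is usually formalized with binary cross-entropy losses. The minimal sketch below, in plain Python, assumes the discriminator outputs the probability that its input is real; the function names are hypothetical, and the generator term uses the common "non-saturating" form suggested in the original GAN paper rather than the literal minimax objective.

```python
import math

EPS = 1e-12  # keeps log() finite if a probability is exactly 0 or 1

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator, averaged over a batch.

    d_real: discriminator probabilities for real samples (target is 1).
    d_fake: discriminator probabilities for generated samples (target is 0).
    """
    real_term = sum(-math.log(max(p, EPS)) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(max(1.0 - p, EPS)) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator believes fakes are real."""
    return sum(-math.log(max(p, EPS)) for p in d_fake) / len(d_fake)

# A confident, correct discriminator has a low loss; a fooled one has a high loss.
print(discriminator_loss([0.99], [0.01]), discriminator_loss([0.5], [0.5]))
print(generator_loss([0.1]), generator_loss([0.9]))
```

In a training loop the two networks alternate: the discriminator takes a step to lower `discriminator_loss`, then the generator takes a step to lower `generator_loss` on a fresh batch of fakes.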

“GANs are an elegant framework for generating new data. By leveraging the adversarial interplay between the generator and discriminator networks, GANs excel at producing high-quality outputs in diverse applications.”

One of the prominent applications of GANs lies in art generation. These models can create stunning paintings, images, and even music compositions. GANs are also a powerful technique for data augmentation in machine learning. By generating synthetic samples, GANs increase the diversity and size of training datasets, which can improve model generalization.

GANs also demonstrate great potential in anomaly detection. By learning what “normal” data looks like, GANs can identify deviations and flag anomalous instances. In the domain of drug discovery, GANs assist in generating novel molecular structures, aiding chemists in navigating and exploring chemical spaces.

Furthermore, GANs contribute to image super-resolution by enhancing the resolution and quality of images. This has significant implications in medical imaging and surveillance, enabling clearer and more detailed visualizations for accurate analysis.

Challenges and Considerations

While GANs offer tremendous potential, several challenges must be addressed for their effective implementation. One common issue is mode collapse, where the generator produces limited and repetitive outputs, failing to cover the full diversity of the data distribution. Researchers have developed mitigations such as minibatch discrimination, alternative objectives like the Wasserstein loss, and adding noise to the discriminator's inputs during training.
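One rough way to notice mode collapse is to measure how much generated samples vary within a batch. The sketch below averages the per-feature standard deviation across a batch; it resembles the minibatch standard-deviation feature used in Progressive GAN, but here it is only a hypothetical diagnostic, and any threshold you compare it against would have to be chosen per dataset.

```python
import statistics

def mean_feature_std(batch):
    """Average, over features, of the standard deviation across a batch.

    batch: list of samples, each a list of floats of the same length.
    A value near 0 means the samples are nearly identical, a possible
    symptom of mode collapse.
    """
    n_features = len(batch[0])
    stds = [
        statistics.pstdev([sample[j] for sample in batch])
        for j in range(n_features)
    ]
    return sum(stds) / n_features

collapsed = [[1.0, 2.0]] * 8                            # every sample identical
diverse = [[float(i), float(-i)] for i in range(8)]     # samples spread out
print(mean_feature_std(collapsed), mean_feature_std(diverse))
```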

Another challenge is non-convergence, where the generator and discriminator networks fail to reach an equilibrium during training. Adjusting learning rates (for example, using different rates for the two networks) and modifying the network architecture can help alleviate this problem.
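Non-convergence already shows up in a two-variable toy game, a standard textbook example rather than a real GAN: player x minimizes f(x, y) = x * y, player y maximizes it, and the only equilibrium is (0, 0). The sketch compares simultaneous gradient steps with alternating ones; the function names and step sizes are illustrative assumptions.

```python
import math

# Toy zero-sum game: x ("generator") minimizes f(x, y) = x * y,
# y ("discriminator") maximizes it. df/dx = y and df/dy = x.

def simultaneous_updates(x, y, lr, steps):
    """Both players step from the same old values (gradient descent-ascent)."""
    for _ in range(steps):
        x, y = x - lr * y, y + lr * x
    return math.hypot(x, y)  # distance from the equilibrium (0, 0)

def alternating_updates(x, y, lr, steps):
    """The maximizer responds to the minimizer's freshly updated value."""
    for _ in range(steps):
        x = x - lr * y
        y = y + lr * x
    return math.hypot(x, y)

start = math.hypot(1.0, 1.0)
print(start)
print(simultaneous_updates(1.0, 1.0, 0.1, 1000))
print(alternating_updates(1.0, 1.0, 0.1, 1000))
```

With simultaneous updates the distance from equilibrium grows by a factor of sqrt(1 + lr**2) every step, so the players spiral outward; a smaller learning rate only slows the spiral. Alternating updates stay on a bounded orbit but still never settle at (0, 0), which is why GAN stabilization work targets the training dynamics and not just the step size.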

It’s crucial to strike a balance between the generator’s creativity and the discriminator’s discernment. Ensuring that the generator is capable of producing diverse and realistic outputs while maintaining an effective discriminator is vital for successful GAN applications.

Exploring and overcoming these challenges will unlock the full potential of GANs as a powerful tool for generative modeling.

Comparison Table: GANs and VAEs

Let’s compare the key characteristics of GANs and Variational Autoencoders (VAEs) to understand their differences:

| Characteristic | GANs | VAEs |
|---|---|---|
| Architecture | Generator-Discriminator | Encoder-Decoder |
| Loss Function | Adversarial loss | Reconstruction loss + KL divergence |
| Latent Space | Implicit | Probabilistic |
| Output Quality | Highly realistic | Less realistic but more diverse |
| Applications | Art generation, data augmentation, anomaly detection, drug discovery, image super-resolution | Image generation, anomaly detection, data augmentation, feature learning |

Variational Autoencoders (VAEs)

Introduced by Kingma and Welling in 2013, Variational Autoencoders (VAEs) are probabilistic models that have revolutionized generative modeling. VAEs consist of two essential components: an encoder network and a decoder network. The encoder network is responsible for mapping input data into a latent space, whereas the decoder network reconstructs the original data from the latent space.

What sets VAEs apart is their treatment of the latent space as a probability distribution, enabling the generation of new data by sampling from this distribution. Compared with a standard autoencoder, which maps each input to a single fixed point, this probabilistic treatment yields a smoother latent space that can be sampled to produce new data.
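Sampling is usually implemented with the reparameterization trick from the original VAE paper: the encoder predicts a mean and a log-variance for each latent dimension, and randomness enters only through standard-normal noise, so gradients can flow through the mean and variance. This is a minimal plain-Python sketch; the name `sample_latent` and the log-variance parameterization are assumptions, and a real model would do the same arithmetic on tensors inside a deep learning framework.

```python
import math
import random

def sample_latent(mu, log_var, rng=None):
    """Draw z ~ N(mu, diag(exp(log_var))) as z = mu + sigma * eps.

    mu, log_var: per-dimension outputs of a (hypothetical) encoder.
    eps ~ N(0, 1) is the only source of randomness.
    """
    rng = rng or random.Random()
    return [
        m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)  # sigma = exp(log_var / 2)
        for m, lv in zip(mu, log_var)
    ]

# Encoding-style sampling around a data point's latent distribution:
z = sample_latent([2.0, -1.0], [math.log(0.25), math.log(0.25)], random.Random(0))
# Generating brand-new data: sample from the prior N(0, I), then decode.
z_new = sample_latent([0.0, 0.0], [0.0, 0.0], random.Random(1))
print(z, z_new)
```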

The applications of VAEs are diverse and impactful. One notable application is image generation: by sampling points from the latent space and decoding them, VAEs can create novel images, although their samples tend to be blurrier than those produced by GANs.

Beyond just image generation, VAEs are also highly effective in anomaly detection. Through the representation of normal data patterns within the latent space, VAEs can identify anomalies by measuring the discrepancy between the reconstructed and original data. This capability enables VAEs to detect abnormal data points and patterns in various domains, including fraud detection and cybersecurity.
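The reconstruction-error idea can be sketched as follows. The reconstructions are assumed to come from a VAE trained only on normal data (encode, then decode); choosing the threshold as a high quantile of the errors on normal data is one common choice and an assumption here, as are the function names.

```python
def reconstruction_errors(originals, reconstructions):
    """Per-sample mean squared error between inputs and their reconstructions."""
    return [
        sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)
        for x, x_hat in zip(originals, reconstructions)
    ]

def fit_threshold(normal_errors, quantile=0.99):
    """Pick an error level that almost all normal samples stay under."""
    ranked = sorted(normal_errors)
    index = min(int(quantile * len(ranked)), len(ranked) - 1)
    return ranked[index]

def flag_anomalies(errors, threshold):
    """True where a sample reconstructs worse than normal data does."""
    return [e > threshold for e in errors]

# Normal data reconstructs well; the second test point does not.
normal_x = [[0.0, 0.0], [1.0, 1.0], [0.5, 0.5]]
normal_rec = [[0.1, 0.0], [1.0, 0.9], [0.5, 0.5]]
threshold = fit_threshold(reconstruction_errors(normal_x, normal_rec))
test_x = [[0.2, 0.2], [5.0, -5.0]]
test_rec = [[0.2, 0.2], [0.0, 0.0]]
print(flag_anomalies(reconstruction_errors(test_x, test_rec), threshold))
```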

Data augmentation is another valuable application of VAEs. By navigating the latent space, VAEs can generate new data samples that preserve the essential characteristics of the original data. This approach helps to augment training datasets and improve the generalization performance of machine learning models.
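Two common ways to "navigate" the latent space for augmentation are small random perturbations around an encoded point and linear interpolation between two encoded points. The sketch below only produces the latent vectors; each one would then be passed through a trained decoder, which is assumed and not shown. The names and the `scale` hyperparameter are hypothetical.

```python
import random

def perturb_latent(z, scale=0.1, rng=None):
    """Jitter a latent vector with Gaussian noise; decode it to get a variant."""
    rng = rng or random.Random()
    return [v + scale * rng.gauss(0.0, 1.0) for v in z]

def interpolate(z_a, z_b, steps):
    """Evenly spaced points from z_a to z_b, endpoints included (steps >= 2)."""
    return [
        [(1 - t) * a + t * b for a, b in zip(z_a, z_b)]
        for t in (i / (steps - 1) for i in range(steps))
    ]

variants = [perturb_latent([0.3, -0.7], rng=random.Random(i)) for i in range(3)]
path = interpolate([0.0, 0.0], [1.0, 2.0], 3)
print(variants)
print(path)
```

Perturbation keeps new samples close to an existing example, while interpolation produces blends of two examples; how far to move before the decoded output stops looking like real data depends on the model and is best checked visually.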

VAEs also contribute to feature learning. By extracting meaningful and compact representations in the latent space, VAEs enable learning high-level features that capture important patterns and variations in the data.

Implementing VAEs involves the construction of both the encoder and decoder networks. These networks are trained using a combination of reconstruction loss, which measures the similarity between the original and reconstructed data, and KL divergence, which regularizes the distribution of the latent space. Balancing these two components is crucial for achieving optimal performance in VAEs.
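The two loss terms can be written out directly. This sketch assumes a diagonal Gaussian encoder and a standard-normal prior, for which the KL divergence has a closed form; using summed squared error as the reconstruction term is an assumption (binary cross-entropy is also common for pixel values in [0, 1]). The `beta` weight follows the beta-VAE idea and is one explicit way to express the balance described above; `beta=1` gives the standard VAE objective.

```python
import math

def vae_loss(x, x_hat, mu, log_var, beta=1.0):
    """Return (total, reconstruction, kl) for a single example.

    x, x_hat: input and its reconstruction (lists of floats).
    mu, log_var: encoder outputs describing q(z|x) = N(mu, diag(exp(log_var))).
    """
    reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    # Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) )
    kl = -0.5 * sum(1 + lv - m ** 2 - math.exp(lv) for m, lv in zip(mu, log_var))
    return reconstruction + beta * kl, reconstruction, kl

# Perfect reconstruction with q(z|x) equal to the prior: both terms are zero.
print(vae_loss([1.0, 2.0], [1.0, 2.0], [0.0, 0.0], [0.0, 0.0]))
# Shifting the latent mean away from 0 is penalized by the KL term.
print(vae_loss([0.0], [0.0], [1.0], [0.0], beta=4.0))
```

Raising `beta` pushes the latent space closer to the prior (smoother sampling, blurrier reconstructions); lowering it favors reconstruction fidelity.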

Advantages of VAEs

VAEs offer several advantages compared to other generative models:

- Probabilistic approach enables the generation of new data by sampling from the latent space.

- Effective in image generation, anomaly detection, data augmentation, and feature learning.

- Flexible and expressive modeling through the treatment of the latent space as a probability distribution.

- Applicable to a wide range of domains, including computer vision, natural language processing, and drug discovery.

Disadvantages and Challenges of VAEs

Despite their strengths, VAEs also face certain challenges:

- Limited representation capacity of the latent space, which can restrict the modeling capabilities of VAEs.

- Difficulty in balancing the reconstruction loss and KL divergence term, impacting the quality of the generated data.

To overcome these challenges, ongoing research and advancements focus on enhancing the representation capacity of the latent space and developing innovative training strategies for VAEs.

Overall, Variational Autoencoders (VAEs) have emerged as a powerful tool in generative modeling, offering unique advantages and capabilities. Their ability to generate new data, detect anomalies, augment datasets, and learn meaningful features has made them instrumental in various fields of AI and machine learning.

Generative AI Applications

Generative models, including GANs and VAEs, have revolutionized various industries with their wide-ranging applications. These models have proven to be versatile and powerful tools for art generation, data augmentation, anomaly detection, drug discovery, and image super-resolution.

Art Generation:

GANs have demonstrated their ability to create breathtaking and realistic paintings and images. By training on extensive datasets, GANs learn the intricate details and styles of various artworks, enabling them to produce original pieces with remarkable craftsmanship.

Data Augmentation:

In the realm of machine learning, data augmentation plays a crucial role in improving model generalization. GANs offer a valuable approach to this process by generating synthetic data that closely resembles the real data distribution. This technique expands the training dataset, helping models learn different variations of the input data and improving their performance.
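A minimal sketch of mixing GAN output into a training set, assuming a trained generator is available as a callable that maps a noise vector to a sample. The function name, `synthetic_ratio`, and `latent_dim` are hypothetical; for supervised tasks the synthetic samples would also need labels, for example from a conditional GAN or a separate generator per class.

```python
import random

def augment_with_synthetic(real, generator, synthetic_ratio=0.5, latent_dim=8, rng=None):
    """Return the real samples plus generated ones, shuffled together.

    generator: hypothetical trained model, called as generator(noise_vector).
    synthetic_ratio: number of synthetic samples per real sample.
    """
    rng = rng or random.Random()
    n_synthetic = int(len(real) * synthetic_ratio)
    synthetic = [
        generator([rng.gauss(0.0, 1.0) for _ in range(latent_dim)])
        for _ in range(n_synthetic)
    ]
    combined = list(real) + synthetic
    rng.shuffle(combined)
    return combined

# Stand-in "generator" for illustration only.
toy_generator = lambda z: [sum(z)]
real = [[float(i)] for i in range(10)]
augmented = augment_with_synthetic(real, toy_generator, 0.5, 4, random.Random(0))
print(len(augmented))
```

Keeping the synthetic share modest is a cautious default: if the generator has collapsed onto a few modes, a large ratio would skew the training distribution instead of enriching it.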

Anomaly Detection:

GANs excel in identifying anomalies by learning what “normal” data looks like. By being trained on extensive datasets comprising normal data samples, GANs can detect anomalous instances or outliers that deviate from the learned data distribution. This ability makes them valuable tools in various domains, including fraud detection, cybersecurity, and quality control.

Drug Discovery:

VAEs have emerged as powerful allies in the field of drug discovery. By leveraging their generative capabilities, VAEs aid in generating novel molecular structures with desired properties. These models navigate chemical spaces, exploring potential configurations and helping researchers propose candidate compounds that can then be tested for efficacy and safety.

Image Super-Resolution:

The ability to enhance image resolution has crucial applications in medical imaging, surveillance, and various other fields. GANs have proven to be exceptional tools in this area by generating high-resolution images from low-resolution counterparts. This advancement significantly improves the quality and clarity of visuals, enabling better analysis, diagnosis, and decision-making.
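GAN-based super-resolution typically trains the generator with a content loss that keeps the output close to the true high-resolution image, plus a small adversarial term that rewards realistic detail. The sketch below is a simplification under stated assumptions: images are flattened lists of pixel values, the content term is plain pixel MSE (SRGAN itself used a VGG feature-space loss), and `adv_weight` is a hypothetical hyperparameter.

```python
import math

def sr_generator_loss(sr, hr, d_sr, adv_weight=1e-3):
    """Content term (pixel MSE) plus a weighted adversarial term.

    sr: flattened super-resolved image produced by the generator.
    hr: flattened ground-truth high-resolution image.
    d_sr: discriminator's probability that sr is a real high-res image.
    """
    content = sum((a - b) ** 2 for a, b in zip(sr, hr)) / len(hr)
    adversarial = -math.log(max(d_sr, 1e-12))  # non-saturating GAN term
    return content + adv_weight * adversarial

hr = [0.0, 1.0, 0.5, 0.25]
blurry = [0.2, 0.8, 0.5, 0.3]
print(sr_generator_loss(blurry, hr, d_sr=0.1))
print(sr_generator_loss(blurry, hr, d_sr=0.9))
```

The small weight keeps the adversarial term from overriding fidelity to the ground truth, which matters in domains like medical imaging where invented detail would be harmful.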

Generative models have transformed the way we approach numerous tasks across several industries. From creating awe-inspiring art to aiding in drug discovery and anomaly detection, these models continue to push the boundaries of what is possible in the realm of AI and machine learning. The image below exemplifies the power of generative models in producing visually stunning art.

As we delve deeper into the capabilities and applications of generative AI, it is essential to remain cognizant of ethical considerations and potential challenges. Nevertheless, there is no denying the immense potential of generative models in shaping the future and driving innovation in various fields.

Conclusion

Generative models, such as Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs), are revolutionizing the field of AI and machine learning. These models offer immense potential in creating new data, artistic creations, and even simulated realities. However, it is vital that we approach the use of these models with responsibility and address the ethical implications they pose, including the risks associated with deepfake generation and privacy violations.

As we delve deeper into the realm of generative AI, it becomes crucial to understand the inner workings of models like VAEs and GANs, as well as their diverse applications. Through ongoing advancements, we can continue to harness the power of generative models to shape the future of AI and drive innovation across various domains.

With their ability to push the boundaries of what is possible, VAEs, GANs, and other generative models will continue to open new doors for AI and machine learning. By balancing progress with ethical care, we can ensure that these models contribute positively, empower human creativity, and create new opportunities for growth in the world of artificial intelligence.

FAQ

What are Generative Adversarial Networks (GANs)?

GANs are generative models consisting of a generator network and a discriminator network. The generator creates data samples, while the discriminator distinguishes between real and fake samples through adversarial training.

What are Variational Autoencoders (VAEs)?

VAEs are probabilistic generative models composed of an encoder network and a decoder network. The encoder maps input data into a latent space, and the decoder reconstructs the original data. Because the latent space is modeled as a probability distribution, new data can be generated by sampling from it and decoding the result.

What are the applications of GANs?

GANs have a wide range of applications, including art generation, data augmentation, anomaly detection, drug discovery, and image super-resolution.

What are the applications of VAEs?

VAEs are utilized in image generation, anomaly detection, data augmentation, and feature learning.

What are the challenges associated with GAN implementation?

Challenges in GAN implementation include mode collapse and non-convergence, which need to be addressed for effective utilization.

What are the challenges associated with VAE implementation?

Challenges in VAE implementation include limited representation capacity and the need to balance the reconstruction loss and KL divergence term.