Adversarial neural networks, best known through Generative Adversarial Networks (GANs), play a significant role in AI security and neural network training. A GAN uses adversarial training to learn to generate new samples with the same statistical characteristics as its training data.

In this article, we examine the training dynamics of adversarial neural networks. We explore challenges that arise during training, such as mode collapse, in which the generator fails to reproduce the full diversity of modes in the target probability distribution. We also discuss recent research that offers insights into the learning dynamics and the conditions that lead to mode collapse.

Read on for an overview of adversarial neural networks and their impact on AI security and neural network training.

The Basics of Adversarial Neural Networks

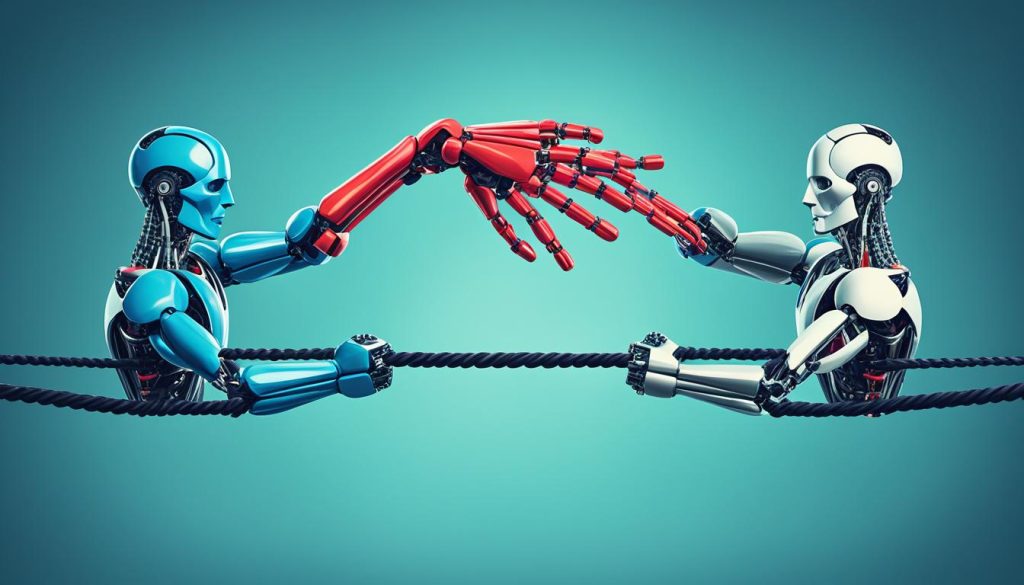

Adversarial neural networks, or GANs, combine a generative model and a discriminative model in a single framework. This combination lets them learn complex, high-dimensional data distributions such as natural images. A GAN consists of two neural networks, a generator and a discriminator, that compete with each other.

The generator transforms random noise into candidate samples, implicitly learning a probability distribution that approximates the training data. The discriminator tries to classify whether a given sample comes from the training set or from the generator. The constant friction between the two improves the generator's samples over time. The discriminator is trained to tell real data from fake, and the generator is trained to maximize the probability that the discriminator mistakes its outputs for real data.
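The two objectives are usually written as binary cross-entropy losses on the discriminator's outputs. The following minimal pure-Python sketch computes them from lists of discriminator probabilities; the function names are hypothetical. It also shows the non-saturating generator loss recommended in the original GAN paper, which gives the generator a stronger signal when the discriminator confidently rejects its samples.

```python
import math
from typing import Sequence

EPS = 1e-12  # guards against log(0) when the discriminator saturates


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Binary cross-entropy: D should output 1 on real data and 0 on generated data."""
    real_term = -sum(math.log(max(p, EPS)) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(max(1.0 - p, EPS)) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss_minimax(d_fake: Sequence[float]) -> float:
    """Original minimax form: the generator minimizes E[log(1 - D(G(z)))]."""
    return sum(math.log(max(1.0 - p, EPS)) for p in d_fake) / len(d_fake)


def generator_loss_nonsaturating(d_fake: Sequence[float]) -> float:
    """Non-saturating form: the generator minimizes -E[log D(G(z))],
    i.e. it maximizes the probability that D labels its samples as real."""
    return -sum(math.log(max(p, EPS)) for p in d_fake) / len(d_fake)


# When D confidently rejects the fakes (D(G(z)) = 0.01), log(1 - D) saturates:
# its gradient w.r.t. D's logit is -D(G(z)), about -0.01, whereas the
# non-saturating loss has gradient -(1 - D(G(z))), about -0.99.
print(generator_loss_minimax([0.01]))        # log(0.99), close to 0
print(generator_loss_nonsaturating([0.01]))  # -log(0.01), about 4.6
```

When every discriminator output is 0.5, the discriminator loss equals 2 log 2. That is the value at the equilibrium where the discriminator can only guess.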

This approach allows GANs to generate new samples that closely resemble the training data and to capture intricate patterns in it. The generative model (the generator) focuses on producing synthetic data, while the discriminative model (the discriminator) focuses on accurately classifying samples as real or generated.

GANs have been widely applied in fields such as computer vision, natural language processing, and data synthesis. By combining generative and discriminative models, they provide a versatile framework for generative modeling and for downstream uses such as data augmentation.

Generator and Discriminator Competition

The competition between the generator and discriminator is a key aspect of GANs. The generator strives to produce realistic samples that fool the discriminator, while the discriminator aims to distinguish real data from generated data. This competition drives both networks to improve. As the discriminator gets better at spotting generated samples, the generator must produce more realistic ones to keep fooling it.

The adversarial nature of GANs encourages the generator to learn the underlying data distribution and to produce synthetic samples that blend seamlessly with real data. This lets GANs model distributions in high-dimensional spaces, such as images, where traditional density-estimation techniques may struggle.

Generative Models for Data Classification

One of the main strengths of GANs is their ability to generate synthetic data that closely resembles the training data. This characteristic makes GANs valuable for data classification tasks where labeled data might be limited or expensive to acquire.

The generator network in a GAN learns to produce samples whose statistical characteristics are similar to those of the training data. It can therefore generate additional training examples that augment the dataset. An augmented dataset can improve a classifier's ability to generalize and make accurate predictions on unseen data, provided the synthetic samples are realistic and diverse enough.
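The following minimal sketch shows the augmentation idea. It tops up a rare class with synthetic samples until every class is as large as the largest one. Here `toy_generator` is a hypothetical stand-in for a trained class-conditional generator; it just draws from a Gaussian around a fixed center. The names `balance_with_generator` and `Example` are illustrative assumptions, not a library API.

```python
import random
from collections import Counter
from typing import Callable, List, Tuple

Example = Tuple[List[float], int]  # (feature vector, class label)


def balance_with_generator(
    real: List[Example],
    generate: Callable[[int, random.Random], List[float]],
    rng: random.Random,
) -> List[Example]:
    """Top up every class to the size of the largest class with synthetic samples."""
    counts = Counter(label for _, label in real)
    target = max(counts.values())
    augmented = list(real)  # keep all real examples first
    for label, count in counts.items():
        for _ in range(target - count):
            augmented.append((generate(label, rng), label))
    return augmented


def toy_generator(label: int, rng: random.Random) -> List[float]:
    """Hypothetical stand-in for a trained class-conditional generator."""
    center = 1.0 if label == 1 else -1.0
    return [rng.gauss(center, 0.1), rng.gauss(center, 0.1)]


rng = random.Random(0)
real = [([-1.0, -1.0], 0)] * 10 + [([1.0, 1.0], 1)] * 2  # class 1 is rare
augmented = balance_with_generator(real, toy_generator, rng)
counts = Counter(label for _, label in augmented)
print(counts[0], counts[1])  # 10 10
```

The real examples are kept unchanged at the front of the list. This makes it easy to evaluate on held-out real data only, rather than on synthetic samples.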

Furthermore, GANs can generate synthetic data where acquiring real data is challenging or infeasible, as in medical imaging or rare-event simulation. These generative capabilities make GANs a versatile tool for data classification across many domains.

The Friction-Based Architecture of GANs

The power of GANs lies in the friction between the generator and discriminator networks. The generator tries to fool the discriminator by maximizing the probability that its outputs are classified as real. The discriminator, in turn, guides the generator. Its feedback, in practice the gradients of its output, tells the generator how to make its samples more realistic.

GANs can be viewed through the lens of game theory, with the generator and discriminator acting as players who each try to maximize their own outcome. If training reaches a Nash equilibrium, the generator has captured the data distribution. The discriminator can then do no better than guessing, assigning probability 1/2 to every input.
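For reference, the game is usually written as the minimax objective from the original GAN paper (Goodfellow et al., 2014). Here $p_z$ is the noise distribution fed to the generator and $p_g$ is the distribution of generated samples:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

For a fixed generator, the best discriminator is

$$
D^*_G(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)},
$$

and substituting it back gives

$$
V(D^*_G, G) = -\log 4 + 2\,\mathrm{JSD}\big(p_{\text{data}} \,\|\, p_g\big).
$$

The Jensen-Shannon divergence is zero only when $p_g = p_{\text{data}}$, so the generator's global optimum is reached exactly when it matches the data distribution. At that point $D^*_G(x) = 1/2$ everywhere, which is the equilibrium described above.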

Game Theory and GANs

In the context of GANs, game theory provides a framework for understanding the dynamics between the generator and discriminator. Like players in a game, each network adjusts its strategy to improve its outcome. In the original formulation the game is zero-sum: the discriminator maximizes the same objective that the generator minimizes.

The generator plays the role of the “attacker” in the game, trying to generate synthetic data samples that are indistinguishable from real data. The discriminator, on the other hand, acts as the “defender” and tries to correctly classify whether a given sample is real or fake.

This interaction between the generator and discriminator creates a competitive, adversarial environment in which each player improves its strategy over time. Ideally, over many iterations of training and feedback, a GAN converges toward a state where the generator produces high-quality synthetic data. That data captures the underlying data distribution and fools the discriminator. In practice, this convergence is not guaranteed: training can oscillate or end in mode collapse.

“In GANs, the friction-based architecture between the generator and discriminator creates a competitive and adversarial environment, similar to players in a game, maximizing their outcomes.” – Researcher

Friction-Based Architecture and Data Generation

The friction-based architecture of GANs plays a crucial role in the process of data generation. The constant competition between the generator and discriminator forces the generator to continuously improve and produce data that resembles the real distribution.

Through this friction-based interaction, the generator learns to exploit the weaknesses of the discriminator, producing samples that are difficult to distinguish from real data. As a result, well-trained GANs can generate highly realistic and diverse data. This makes them valuable tools in applications such as image synthesis, text generation, and voice conversion.

GANs and Real-World Applications

The friction-based architecture of GANs has paved the way for numerous real-world applications. GANs have been used in AI security to generate adversarial examples and test the robustness of AI systems against potential attacks. They have also found applications in data augmentation, where synthetic data generated by GANs is used to supplement training datasets and improve the performance of machine learning models.

Moreover, GANs have expanded into creative domains such as art and media, enabling artists to generate realistic paintings, alter images, and even create entirely new artwork based on existing styles. The high-quality and diverse outputs of GANs make them powerful tools for creativity and innovation.

Understanding Mode Collapse in GANs

Mode collapse is a common training failure in Generative Adversarial Networks (GANs). The generator fails to reproduce the full diversity of modes present in the training data, producing samples that cover only some of the variations in the target probability distribution, sometimes only one. Understanding the dynamics of mode collapse is crucial for improving adversarial training and ensuring the diversity of generated samples.

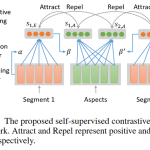
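A common way to make "missing modes" concrete is a synthetic benchmark: a mixture of Gaussians arranged on a ring, with a count of how many mixture components receive generated samples. The following minimal sketch illustrates this style of diagnostic. The ring layout, the 3-standard-deviation threshold, and the `min_count` cutoff are illustrative assumptions, and the "generators" are just hand-written sample lists.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def ring_modes(k: int = 8, radius: float = 2.0) -> List[Point]:
    """Centers of a k-component Gaussian mixture arranged on a circle."""
    return [
        (radius * math.cos(2 * math.pi * i / k), radius * math.sin(2 * math.pi * i / k))
        for i in range(k)
    ]


def modes_covered(samples: Sequence[Point], modes: Sequence[Point],
                  threshold: float, min_count: int) -> int:
    """Count modes that received at least `min_count` samples within `threshold`."""
    hits = [0] * len(modes)
    for x, y in samples:
        dists = [math.hypot(x - mx, y - my) for mx, my in modes]
        nearest = min(range(len(modes)), key=lambda j: dists[j])
        if dists[nearest] <= threshold:
            hits[nearest] += 1
    return sum(1 for h in hits if h >= min_count)


rng = random.Random(0)
sigma = 0.02
modes = ring_modes()
# A healthy generator spreads its samples over every mode...
healthy = [(mx + rng.gauss(0, sigma), my + rng.gauss(0, sigma))
           for mx, my in modes for _ in range(100)]
# ...while a collapsed one puts all of its samples on a single mode.
collapsed = [(modes[0][0] + rng.gauss(0, sigma), modes[0][1] + rng.gauss(0, sigma))
             for _ in range(800)]
print(modes_covered(healthy, modes, 3 * sigma, 10))    # 8
print(modes_covered(collapsed, modes, 3 * sigma, 10))  # 1
```

Both sample sets have the same size and each individual sample looks realistic. Only the coverage count reveals that the second set is missing seven of the eight modes.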

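A recent study proposes an effective model that sheds light on the conditions under which mode collapse occurs. In this model, the generator is replaced by a collection of particles in the output space, coupled by an effective kernel that summarizes how the generator network ties its outputs together. Experiments that vary this kernel reveal a mode-collapse transition. The shape of that transition can be related to the type of discriminator through the frequency principle, the tendency of neural networks to learn the low-frequency components of a target function before the high-frequency ones.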

These findings clarify how the interplay between the generator and discriminator affects mode collapse. By studying the generator's effective kernel, the researchers uncover the dynamics and conditions that lead to this training failure. These insights pave the way for strategies that mitigate mode collapse and improve the overall performance of GANs.
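The following is a deliberately crude toy inspired by the particle picture; it is not the study's model. The trained discriminator is replaced by a fixed, hand-written critic whose gradient pulls each sample toward the nearer of two modes at -2 and +2. The generator's effective kernel is replaced by a Gaussian kernel whose bandwidth we vary, with weights normalized per particle. All names and parameter values are hypothetical. Each particle moves along a kernel-weighted average of every particle's gradient, so the kernel controls how strongly the samples are tied together.

```python
import math
from typing import List

# Hypothetical toy, not the study's model: fixed critic, Gaussian kernel.
MODES = (-2.0, 2.0)  # two target modes on a line


def critic_grad(x: float) -> float:
    """Gradient of a fixed toy critic score -(x - m)**2, m = nearest mode."""
    m = MODES[1] if x >= 0 else MODES[0]
    return -2.0 * (x - m)


def step(particles: List[float], bandwidth: float, lr: float) -> List[float]:
    """Move each particle along a kernel-weighted average of all particles' gradients."""
    new = []
    for xi in particles:
        weights = [math.exp(-((xi - xj) ** 2) / (2 * bandwidth ** 2)) for xj in particles]
        drift = sum(w * critic_grad(xj) for w, xj in zip(weights, particles)) / sum(weights)
        new.append(xi + lr * drift)
    return new


def simulate(bandwidth: float, n: int = 12, steps: int = 300, lr: float = 0.1) -> List[float]:
    """Start n particles evenly spaced in [-0.5, 1.0] and run the coupled dynamics."""
    particles = [-0.5 + 1.5 * k / (n - 1) for k in range(n)]
    for _ in range(steps):
        particles = step(particles, bandwidth, lr)
    return particles


def count_modes(particles: List[float], tol: float = 0.5) -> int:
    """Number of modes with at least one particle within `tol`."""
    return sum(1 for m in MODES if any(abs(x - m) <= tol for x in particles))


print(count_modes(simulate(bandwidth=0.01)))   # narrow kernel: 2
print(count_modes(simulate(bandwidth=100.0)))  # wide kernel: 1 (collapse)
```

With a narrow kernel, each particle follows its own gradient, and the cloud splits between both modes. With a very wide kernel, every particle receives almost the same averaged update, so the cloud moves nearly rigidly and settles on one mode.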

Benefits of Understanding Mode Collapse

Gaining a deeper understanding of mode collapse in GANs brings several benefits to the field of adversarial training. By comprehending the dynamics that contribute to mode collapse, researchers and practitioners can:

- Develop more effective regularization techniques to prevent mode collapse;

- Enhance the diversity and quality of generated samples;

- Improve the stability and reliability of GAN training;

- Capture a broader range of variation in real-world data;

- Advance the performance of GANs in various applications.

These benefits underscore the importance of studying mode collapse and its associated learning dynamics in GANs. This knowledge is instrumental in advancing the field and overcoming the challenges associated with training these powerful generative models.

“Understanding the conditions under which mode collapse occurs provides valuable insights into the training dynamics of GANs. By unraveling the interplay between the generator and discriminator, we can develop strategies to improve the diversity and quality of generated samples.”

The Role of Gradient Regularizers in GAN Convergence

Gradient regularizers, penalty terms on gradient norms added to the training objective, can play a vital role in the convergence of Generative Adversarial Networks (GANs) and the avoidance of mode collapse. By constraining the learning dynamics, they can stabilize GAN training and improve its convergence.

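The study finds that gradient regularizers of intermediate strength can yield convergence through critical damping of the generator dynamics. In the language of damped oscillators, a regularizer that is too weak leaves the dynamics underdamped, so they oscillate. One that is too strong overdamps them, so they creep slowly toward equilibrium. Critical damping sits between the two. By carefully controlling the regularizer's strength, we can help prevent mode collapse and make training more stable and effective.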
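Critical damping can be seen in a much smaller system than the study's model. The following sketch swaps in the "Dirac-GAN" toy model of Mescheder et al. (2018). The data is a point mass at 0, the generator is a point mass at `theta`, and the discriminator is linear, `D(x) = psi * x`. An R1-style penalty `(gamma / 2) * psi**2` is added to the discriminator's objective. Linearizing around the equilibrium gives the characteristic equation λ² + γλ + 1/4 = 0, so in this particular parameterization γ = 1 is critical damping. The function names and step sizes are illustrative choices.

```python
import math


def f_prime(t: float) -> float:
    """Derivative of f(t) = -log(1 + exp(-t)), which equals 1 / (1 + exp(t))."""
    return 1.0 / (1.0 + math.exp(t))


def dirac_gan_distance(gamma: float, dt: float = 0.01, t_end: float = 30.0) -> float:
    """Euler-integrate the Dirac-GAN gradient flow with an R1-style penalty.

    Objective L(theta, psi) = f(psi * theta) + f(0); the generator descends L,
    the discriminator ascends L - (gamma / 2) * psi**2.
    Returns the distance of (theta, psi) from the equilibrium (0, 0) at t_end.
    """
    theta, psi = 1.0, 0.0
    for _ in range(round(t_end / dt)):
        g = f_prime(psi * theta)
        d_theta = -psi * g               # generator: gradient descent on L
        d_psi = theta * g - gamma * psi  # discriminator: ascent on penalized L
        theta, psi = theta + dt * d_theta, psi + dt * d_psi
    return math.hypot(theta, psi)


for gamma in (0.0, 0.1, 1.0, 10.0):
    print(gamma, dirac_gan_distance(gamma))
```

Without the penalty (γ = 0), the parameters circle the equilibrium at a distance of about 1 and never converge. With a weak penalty (γ = 0.1), they spiral inward slowly. With a strong penalty (γ = 10), the dynamics are overdamped and creep toward the equilibrium. The intermediate value γ = 1 gets closest by far.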

Understanding the role of gradient regularizers in GAN convergence provides valuable insights into improving the overall performance of GANs. By leveraging gradient regularizers, we can guide the learning dynamics of the generator and discriminator networks, leading to better convergence and more diverse and realistic generated samples.

Controlling Convergence with Gradient Regularizers

When it comes to GAN training, the dynamics between the generator and discriminator networks are critical. The generator aims to fool the discriminator by generating data that appears real, while the discriminator strives to accurately distinguish between real and generated data.

“Gradient regularizers act as additional constraints on the learning dynamics of GANs, helping to stabilize the training process and avoid undesirable outcomes like mode collapse.”

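Gradient regularizers can be seen as tools to control the learning dynamics and guide the generator toward more diverse and realistic samples. Common choices, such as the R1 penalty and the WGAN-GP gradient penalty, act on the discriminator: they penalize the norm of its gradient with respect to its input. This keeps the discriminator smooth, so the signal it passes to the generator does not change abruptly. The result is less drastic parameter updates and more stable convergence.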

Gradient regularizers are thus a form of regularization tailored to adversarial training. They add a term to the training objective that promotes more balanced, controlled learning dynamics. This can help avoid mode collapse and improve the quality and diversity of generated samples.
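The following sketch computes two widely used gradient penalties for an arbitrary discriminator function. R1 penalizes the squared gradient norm on real samples. The WGAN-GP penalty pushes the gradient norm toward 1; in WGAN-GP it is evaluated at random interpolates between real and generated samples, which the caller would supply as `points`. Real frameworks obtain these gradients by automatic differentiation. Central finite differences are used here only to keep the sketch dependency-free, and `toy_d` is a hypothetical linear discriminator.

```python
import math
from typing import Callable, List, Sequence

Discriminator = Callable[[List[float]], float]


def input_gradient(d: Discriminator, x: Sequence[float], h: float = 1e-5) -> List[float]:
    """Central finite-difference estimate of the gradient of d at x."""
    grad = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += h
        down[i] -= h
        grad.append((d(up) - d(down)) / (2 * h))
    return grad


def r1_penalty(d: Discriminator, real_batch: Sequence[Sequence[float]], gamma: float) -> float:
    """R1: (gamma / 2) * mean squared input-gradient norm on real samples."""
    sq = [sum(g * g for g in input_gradient(d, x)) for x in real_batch]
    return 0.5 * gamma * sum(sq) / len(sq)


def gradient_penalty(d: Discriminator, points: Sequence[Sequence[float]], weight: float) -> float:
    """WGAN-GP style: weight * mean (||grad d|| - 1)^2 at the supplied points."""
    norms = [math.sqrt(sum(g * g for g in input_gradient(d, x))) for x in points]
    return weight * sum((n - 1.0) ** 2 for n in norms) / len(norms)


def toy_d(x: List[float]) -> float:
    """Hypothetical linear discriminator with input-gradient norm 5 everywhere."""
    return 3.0 * x[0] + 4.0 * x[1]


batch = [[0.0, 0.0], [1.0, 2.0]]
print(r1_penalty(toy_d, batch, gamma=2.0))          # about 25.0
print(gradient_penalty(toy_d, batch, weight=10.0))  # about 160.0
```

Either penalty is added to the discriminator's loss. Its weight (`gamma` or `weight`) is the regularizer strength whose intermediate values the section above discusses.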

The Impact of Gradient Regularizers on GAN Performance

The effectiveness of gradient regularizers can be assessed through empirical evaluations and experiments. Regularizers of intermediate strength often give the best results. They strike a balance between too little damping, which leaves training oscillating, and too much, which slows convergence.

The introduction of gradient regularizers can prevent undesired behaviors in the training process, such as oscillations, instability, and mode collapse. By controlling the learning dynamics, we can steer the GAN training towards improved convergence and more reliable generation of samples that reflect the true diversity of the underlying data distribution.

Real-World Applications of Adversarial Neural Networks

Adversarial neural networks offer immense potential in various domains, with real-world applications that span from AI security to data generation. These applications leverage the unique capabilities of Generative Adversarial Networks (GANs) to address critical challenges and push the boundaries of innovation.

AI Security

One significant application of GANs is in AI security. GANs are used to generate adversarial examples, which are carefully crafted inputs designed to deceive AI systems and expose vulnerabilities. By testing the robustness of AI models against these adversarial attacks, researchers can identify weaknesses in the systems and develop robust defenses. This plays a crucial role in safeguarding sensitive data and protecting AI systems from potential threats.
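To make "adversarial example" concrete, the following sketch uses the Fast Gradient Sign Method (FGSM, Goodfellow et al., 2015) on a toy logistic-regression classifier with made-up weights. FGSM is a gradient-based attack, not a GAN. GAN-based approaches instead train a generator to produce such perturbations, but the goal is the same: a small, bounded change to the input that flips the model's prediction.

```python
import math
from typing import List, Sequence


def predict_proba(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Probability of class 1 under a logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def fgsm(w: Sequence[float], b: float, x: Sequence[float], y: int, eps: float) -> List[float]:
    """Step each feature by eps in the direction that increases the
    cross-entropy loss for the true label y (sign of the input gradient)."""
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]  # d(loss)/dx for logistic regression
    return [xi + eps * (1.0 if g > 0 else -1.0 if g < 0 else 0.0) for xi, g in zip(x, grad)]


w, b = [1.0, -2.0, 0.5], 0.0  # hypothetical trained weights
x = [0.5, 0.1, 0.2]
x_adv = fgsm(w, b, x, y=1, eps=0.2)
print(predict_proba(w, b, x) > 0.5)      # True: classified as class 1
print(predict_proba(w, b, x_adv) > 0.5)  # False: the perturbation flips the label
```

No feature changes by more than `eps`, yet the prediction flips. Robustness testing searches for exactly this kind of weakness.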

Data Generation

GANs are widely employed in data generation tasks, offering a powerful tool for creating synthetic data. This is particularly useful in scenarios where the availability of labeled training data is limited. GANs can generate realistic and diverse samples that closely resemble the original data distribution, providing additional training examples to enhance the performance of machine learning algorithms. Additionally, GANs have been utilized for generating art, media, and other creative content, showcasing their versatility beyond traditional AI applications.

Real-World Applications Summary Table

| Application | Field |
|---|---|
| AI Security | Cybersecurity, AI systems |
| Data Generation | Machine learning, computer vision, art |

Table: Summary of real-world applications of adversarial neural networks

Future Developments and Challenges in Adversarial Neural Networks

The field of adversarial neural networks is continuously evolving, driven by ongoing research and the quest to address challenges and advance the understanding and capabilities of Generative Adversarial Networks (GANs). Researchers are exploring various avenues for future developments, which may involve investigating novel architectures, refining training algorithms, and finding effective methods to mitigate mode collapse.

One key challenge that researchers are actively working on is improving stability during training. GANs are notoriously difficult to train and often suffer from mode collapse or unstable, oscillating dynamics. By developing robust training algorithms and new techniques, researchers aim to make the training of adversarial neural networks more stable and reliable.

Another challenge is related to scalability and computational resources. As the complexity and size of datasets continue to grow, there is a need to optimize GANs for efficient and scalable training. Researchers are exploring techniques to reduce computational requirements and improve scalability, enabling the application of adversarial neural networks in larger and more complex real-world scenarios.

Moreover, utilizing GANs for more specific application domains presents another challenge. While GANs have demonstrated their effectiveness in various fields, including image generation and data augmentation, further research is required to adapt and optimize these networks for specific applications. This involves tailoring the architecture and training strategies to meet the unique requirements of each domain.

The continuous advancements in adversarial neural networks hold great potential for shaping the future of AI and machine learning. The ongoing research efforts, coupled with the exploration of novel architectures and the development of improved training algorithms, are paving the way for exciting developments in the field. These advancements have the potential to enhance the capabilities of GANs, drive innovation in AI, and unlock new possibilities for various industries.

Conclusion

Adversarial neural networks, particularly Generative Adversarial Networks (GANs), offer a powerful framework for data generation and AI security. Studying their training dynamics yields insights into improving adversarial training and generating diverse, realistic samples. By understanding phenomena such as mode collapse and the effect of gradient regularizers, researchers can improve the learning process and overcome training challenges.

GANs have real-world applications in various fields. In AI security, they are used to generate adversarial examples, enabling the testing and improvement of AI systems’ robustness against potential attacks. GANs also play a crucial role in data generation tasks, such as augmenting training datasets or creating art and media with their ability to generate realistic and diverse samples.

As research on adversarial neural networks continues, work on stability, scalability, and application-specific use is paving the way for an exciting future in this field. With ongoing exploration of novel architectures, better training algorithms, and methods to mitigate mode collapse, adversarial neural networks hold great potential for shaping the future of AI and machine learning.

FAQ

What are adversarial neural networks?

Adversarial neural networks, best known through GANs, are a class of machine-learning models that pit a generative network against a discriminative network. Through this competition, the generator learns to produce realistic samples from complex, high-dimensional data distributions.

How do GANs work?

GANs consist of a generator and a discriminator neural network. The generator transforms random noise into samples that approximate the training data distribution, while the discriminator tries to classify whether a sample comes from the original training set or from the generator. The constant competition between them pushes the generator to produce increasingly realistic samples.

What is mode collapse in GANs?

Mode collapse is a training failure where the generator fails to reproduce the full diversity of modes in the training data, resulting in limited diversity in the generated samples.

How can mode collapse be prevented?

Understanding the training dynamics and using gradient regularizers can help avoid mode collapse. Gradient regularizers add constraints on the learning dynamics that can improve convergence and help prevent mode collapse, particularly when their strength is tuned to an intermediate value.

What are the real-world applications of adversarial neural networks?

Adversarial neural networks have practical uses in various domains, including AI security, where they are used to test the robustness of AI systems against potential attacks. They are also employed in data generation tasks, such as generating synthetic data for training or creating art and media.

What are the future developments and challenges in adversarial neural networks?

Ongoing research in adversarial neural networks focuses on addressing challenges and advancing the understanding and capabilities of GANs. Future developments may involve exploring novel architectures, improving training algorithms, and finding ways to mitigate mode collapse. Challenges include stability during training, scalability, and utilization in specific application domains.