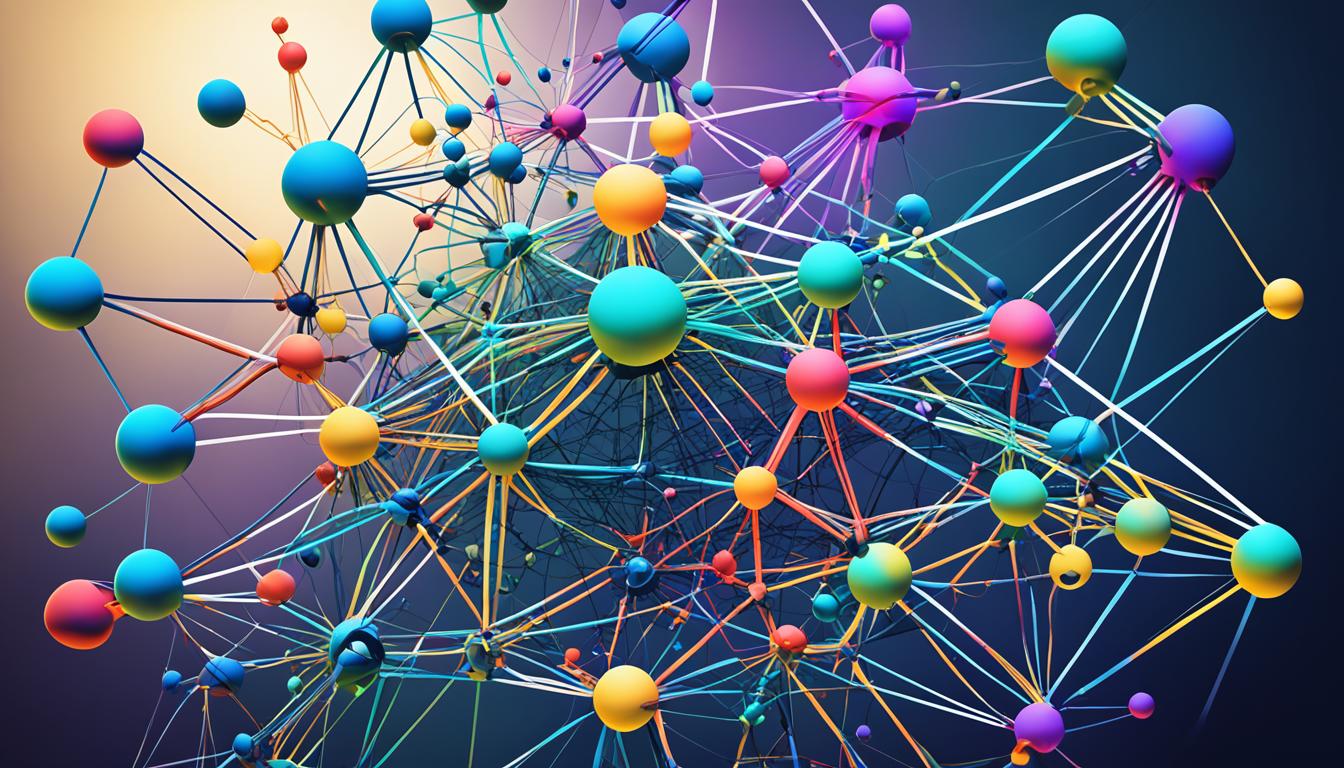

This article covers Radial Basis Function (RBF) networks, a type of feedforward neural network used for function approximation, with applications in artificial intelligence (AI) and pattern recognition. RBF networks are known for their ability to model complex nonlinear relationships.

Unlike networks built on sigmoid or ReLU units, RBF networks use radial basis functions as activation functions: each hidden unit responds to how far an input lies from a center. The network approximates a complex function by combining radial basis functions centered at specific data points. This approach makes RBF networks useful in AI, pattern recognition, regression analysis, and data clustering.

In this article, we will explore the structure and training process of RBF networks, their applications in domains such as image recognition and speech recognition, and the advantages they offer over other neural networks. By the end, you will understand how RBF networks work and where they fit in AI and pattern recognition.

Understanding Radial Basis Functions

Radial Basis Functions (RBFs) are the building blocks of RBF networks. The networks use them as activation functions, which introduces nonlinearity and makes it possible to model complex relationships. Unlike activation functions such as sigmoid or ReLU, which act on a weighted sum of the inputs, an RBF responds to the distance between the input and a center, so each unit is active only in its own region of the input space.

The key idea behind RBF networks is to approximate the desired output by composing weighted combinations of radial basis functions centered at specific data points. Each radial basis function has its own center, which corresponds to a particular data point. The width of the function determines its extent of influence on the output.
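As a concrete illustration of a center and a width, here is a minimal sketch that assumes the Gaussian form, one common choice of radial basis function (the article does not commit to a specific one). The name `gaussian_rbf` and the example values are illustrative only.

```python
import numpy as np

def gaussian_rbf(x, center, width):
    """Gaussian RBF (an assumed choice): depends only on the distance ||x - center||."""
    x = np.asarray(x, dtype=float)
    center = np.asarray(center, dtype=float)
    sq_dist = np.sum((x - center) ** 2)
    # The response is 1 at the center and decays toward 0 as x moves away.
    return float(np.exp(-sq_dist / (2.0 * width ** 2)))

center = [0.0, 0.0]
at_center = gaussian_rbf([0.0, 0.0], center, width=1.0)
nearby = gaussian_rbf([0.5, 0.0], center, width=1.0)
far_away = gaussian_rbf([5.0, 0.0], center, width=1.0)
```

A larger `width` makes the function decay more slowly, so the same center influences a wider region of the input space.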

RBF networks are particularly effective when the data contains intricate nonlinear interactions. RBFs allow such relationships to be represented accurately, which makes the networks suitable for tasks such as pattern recognition and regression analysis.

The Flexibility of RBFs

One of the main advantages of using radial basis functions as activation functions is their ability to capture nonlinear patterns in data. This flexibility enables RBF networks to model complex relationships that would be difficult to represent using linear activation functions.

By using RBFs, neural networks can accurately approximate intricate functions and perform tasks such as pattern recognition with high accuracy. RBFs provide the necessary nonlinearity to capture the nuances and complexities present in real-world data.

Advantages in Network Modeling

Radial basis functions offer several advantages in network modeling. Their ability to introduce nonlinearity allows for more accurate representation of complex relationships, leading to improved performance in tasks such as pattern recognition and regression analysis.

Furthermore, the localized nature of RBFs enables networks to handle outliers and noise effectively. Instead of relying on a single global model, RBF networks use localized models centered around specific data points. This allows for robustness in the presence of noisy or outlier data.

RBF networks, with their utilization of radial basis functions, provide a powerful tool for modeling nonlinear relationships. These networks excel in capturing complex patterns and relationships in data, making them invaluable in fields such as image recognition, speech recognition, and data clustering.

Architecture and Training of RBF Networks

The architecture of an RBF neural network is composed of three layers: the input layer, the hidden layer, and the output layer. These layers work together to process the input data and generate the desired output. Let’s take a closer look at each layer and its function in the network.

Input Layer

The input layer is responsible for receiving the input data that the network will process. It serves as the starting point of the network and provides a way for external data to enter the network. The input layer does not perform any calculations or transformations on the data; its sole purpose is to pass the input data to the next layer, which is the hidden layer.

Hidden Layer

The hidden layer is the core of the RBF network. It applies radial basis functions to the input data, transforming it into a new representation with one feature per hidden neuron (higher-dimensional than the input whenever there are more neurons than input features). Each neuron in the hidden layer represents a radial basis function, with its center corresponding to a specific data point. The width of the radial basis function determines its extent of influence on the output. Each neuron’s activation depends on the distance between the input and that neuron’s center.
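The hidden layer computation can be sketched as a matrix of activations, one row per input and one column per hidden neuron. This is a minimal sketch assuming Gaussian basis functions and a single width shared by all neurons; `hidden_activations` is a hypothetical helper name, not part of any library.

```python
import numpy as np

def hidden_activations(X, centers, width):
    """Return H with H[i, j] = activation of hidden neuron j for input i."""
    X = np.asarray(X, dtype=float)              # shape (n_samples, n_features)
    centers = np.asarray(centers, dtype=float)  # shape (n_centers, n_features)
    # Pairwise squared distances between every input and every center.
    diff = X[:, None, :] - centers[None, :, :]
    sq_dist = np.sum(diff ** 2, axis=2)
    # Assumed Gaussian form with one shared width.
    return np.exp(-sq_dist / (2.0 * width ** 2))

X = np.array([[0.0, 0.0], [1.0, 1.0], [3.0, 3.0]])
centers = np.array([[0.0, 0.0], [3.0, 3.0]])
H = hidden_activations(X, centers, width=1.0)   # shape (3, 2)
```

Here the middle input `[1.0, 1.0]` activates the first neuron more strongly than the second because it lies closer to the first center.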

Output Layer

The output layer combines the responses of the hidden layer neurons to produce the final output of the RBF network. It takes the weighted sum of the hidden layer’s neuron activations as input and applies additional transformations, if necessary, to generate the network’s output. The output layer’s configuration and activation function depend on the specific task the RBF network is designed to perform, such as classification or regression.
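Putting the layers together, a forward pass might look like the sketch below. It assumes Gaussian hidden units with a shared width and a plain linear output (a weighted sum plus an optional bias), which suits regression; the function name `rbf_forward` and all numeric values are illustrative assumptions.

```python
import numpy as np

def rbf_forward(X, centers, width, weights, bias=0.0):
    """Forward pass: inputs -> Gaussian hidden activations -> weighted sum."""
    X = np.asarray(X, dtype=float)
    # Hidden layer: one Gaussian activation per (input, center) pair.
    sq_dist = np.sum((X[:, None, :] - centers[None, :, :]) ** 2, axis=2)
    H = np.exp(-sq_dist / (2.0 * width ** 2))
    # Output layer: linear combination of hidden activations.
    return H @ weights + bias

centers = np.array([[0.0], [2.0]])
weights = np.array([1.0, -1.0])
X = np.array([[0.0], [1.0], [2.0]])
y = rbf_forward(X, centers, width=1.0, weights=weights)
```

With these symmetric centers and opposite weights, the input midway between the two centers produces an output of zero. For classification, one common option is to give the output layer one column of weights per class and pick the class with the largest output.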

To train an RBF neural network, two important steps need to be performed: center selection and weight determination.

Center Selection

Center selection involves identifying representative data points from the training data and using them as the centers for the radial basis functions in the hidden layer. The selection of these centers plays a crucial role in the performance of the RBF network. Various algorithms and techniques, such as the k-means algorithm, can be employed to determine the optimal centers.
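As a rough sketch of k-means-based center selection, the following implements a bare-bones version of Lloyd's algorithm in NumPy. The function name `kmeans_centers`, the iteration cap, and the toy data are assumptions for illustration; in practice a library implementation such as scikit-learn's `KMeans` would usually be preferable.

```python
import numpy as np

def kmeans_centers(X, k, n_iter=50, seed=0):
    """Pick k RBF centers with a minimal k-means (Lloyd's algorithm)."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    # Start from k distinct training points chosen at random.
    centers = X[rng.choice(len(X), size=k, replace=False)].copy()
    for _ in range(n_iter):
        # Assign every point to its nearest center.
        sq_dist = np.sum((X[:, None, :] - centers[None, :, :]) ** 2, axis=2)
        labels = np.argmin(sq_dist, axis=1)
        # Move each center to the mean of its assigned points.
        new_centers = centers.copy()
        for j in range(k):
            members = X[labels == j]
            if len(members) > 0:  # keep the old center if a cluster is empty
                new_centers[j] = members.mean(axis=0)
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return centers

# Toy data: two well-separated groups of three points each.
X = np.array([[0.0, 0.0], [0.2, 0.0], [0.0, 0.2],
              [5.0, 5.0], [5.2, 5.0], [5.0, 5.2]])
centers = kmeans_centers(X, k=2)
```

On this toy data the two centers settle on the means of the two groups. Note that k-means yields cluster means, which need not coincide with actual training points.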

Weight Determination

Weight determination aims to optimize the weights associated with each radial basis function in the hidden layer. These weights determine the importance or contribution of each radial basis function to the network’s overall output. The process involves minimizing the difference between the network’s predicted output and the target output through techniques like gradient descent or least squares estimation.
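Once the centers and width are fixed, the output is linear in the weights, so least squares estimation reduces to an ordinary linear least squares problem. The sketch below assumes Gaussian basis functions, a shared width, evenly spaced centers, and a sine curve as a stand-in target; the helper names are hypothetical.

```python
import numpy as np

def design_matrix(X, centers, width):
    """Hidden-layer activations for all inputs (assumed Gaussian RBFs)."""
    sq_dist = np.sum((X[:, None, :] - centers[None, :, :]) ** 2, axis=2)
    return np.exp(-sq_dist / (2.0 * width ** 2))

def fit_output_weights(X, y, centers, width):
    """Least squares estimate of the output weights for fixed centers."""
    H = design_matrix(X, centers, width)
    # Solve min_w ||H w - y||^2.
    weights, *_ = np.linalg.lstsq(H, y, rcond=None)
    return weights

# Illustrative target: one period of a sine wave.
X = np.linspace(0.0, 2.0 * np.pi, 40).reshape(-1, 1)
y = np.sin(X[:, 0])
centers = np.linspace(0.0, 2.0 * np.pi, 8).reshape(-1, 1)

weights = fit_output_weights(X, y, centers, width=1.0)
y_hat = design_matrix(X, centers, width=1.0) @ weights
max_error = float(np.max(np.abs(y_hat - y)))
```

Gradient descent is the alternative when the centers or widths are to be tuned together with the weights, since the problem is then no longer linear.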

By optimizing the center selection and weight determination processes, RBF neural networks can effectively model complex relationships, approximate nonlinear functions, and provide accurate predictions in various domains.

Next, we will explore the applications of RBF networks and see how they are utilized in real-world scenarios.

Applications of RBF Networks

RBF networks are highly versatile and find applications in various domains. One of their main strengths lies in pattern recognition, where they excel at identifying complex and nonlinear patterns. Their ability to capture intricate relationships in data makes them effective in classifying and recognizing patterns with high accuracy. RBF networks have been successfully applied in a wide range of fields, including:

- Image recognition: RBF networks are widely used in image recognition tasks, enabling the identification and categorization of objects, shapes, and patterns within images. Their ability to model nonlinearity allows for robust recognition even in complex visual scenes.

- Speech recognition: The nonlinear modeling capabilities of RBF networks make them well-suited for speech recognition applications. They can accurately identify and transcribe spoken words and phrases, enabling advancements in voice-controlled technologies and natural language processing.

- Handwritten character recognition: RBF networks are commonly employed in handwriting recognition systems, allowing the identification and interpretation of handwritten characters. This has applications in digitizing handwritten documents, signature verification, and automated form processing.

- Regression analysis: RBF networks also find use in regression analysis tasks, where the goal is to model and predict continuous variables based on input data. Their ability to approximate complex nonlinear relationships makes them effective at generating accurate predictions and insights.

- Data clustering: RBF networks are closely tied to clustering, because their centers are often chosen by a clustering algorithm such as k-means, so the hidden units group data points based on their similarity. This is valuable in unsupervised learning tasks such as data clustering and anomaly detection, where the goal is to identify patterns or outliers in large datasets.

By leveraging the power of radial basis functions and their ability to model nonlinear relationships, RBF networks have become essential tools in various domains, providing accurate pattern recognition, regression analysis, and clustering capabilities.

Advantages of RBF Networks

Radial Basis Function (RBF) networks offer several advantages compared to other types of neural networks. Their ability to model nonlinear relationships makes them well-suited for domains where linear models fall short. By capturing the intricate nonlinear interactions found in real-world problems, RBF networks can provide strong performance and accurate predictions.

One notable advantage of RBF networks is the reduced number of parameters required compared to deep neural networks. This reduction not only simplifies the network architecture but also mitigates the risk of overfitting. Fewer parameters contribute to enhanced generalization capabilities, enabling RBF networks to adapt well to new, unseen data.
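To make the parameter comparison concrete, here is a small counting sketch. The layer sizes are arbitrary assumptions chosen only for illustration, and the outcome depends entirely on them: an RBF network with many centers can easily have more parameters than a small multilayer network.

```python
def rbf_param_count(n_inputs, n_centers, n_outputs):
    """Parameters of an RBF network with one width per center and output biases."""
    center_coords = n_centers * n_inputs
    widths = n_centers
    output_weights = (n_centers + 1) * n_outputs  # +1 for the bias term
    return center_coords + widths + output_weights

def mlp_param_count(layer_sizes):
    """Parameters of a fully connected network with a bias in every layer."""
    return sum((n_in + 1) * n_out
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))

# Hypothetical sizes: 10 inputs, 1 output.
rbf_total = rbf_param_count(n_inputs=10, n_centers=20, n_outputs=1)  # 200 + 20 + 21 = 241
mlp_total = mlp_param_count([10, 64, 64, 1])                         # 704 + 4160 + 65 = 4929
```

If the centers and widths are fixed in advance rather than learned, only the output weights (21 in this example) are actually fitted to the targets.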

RBF networks are also adept at handling outliers and noise in the input data. Because each radial basis function is localized, an input far from every center produces only weak activations, and a noisy data point mainly affects the units whose centers lie near it. This attribute enhances the network’s resilience to outliers and noise, resulting in more robust and reliable performance.

“The advantages of RBF networks lie in their ability to effectively model complex and nonlinear relationships, leading to superior performance, fewer parameters, and robustness against outliers and noise.”

RBF networks have demonstrated their effectiveness in various applications, spanning pattern recognition, regression analysis, data clustering, image recognition, speech recognition, and handwritten character recognition. Across these domains, RBF networks consistently deliver accurate results by capturing intricate and non-obvious patterns in the data.

By leveraging the power of nonlinear relationships, RBF networks continue to advance the fields of artificial intelligence and machine learning. These networks unlock new possibilities for solving complex real-world problems and ultimately contribute to the advancement of AI technology as a whole.

Advantages of RBF Networks Summary:

| Advantages | Explanation |
|---|---|
| Modeling Nonlinear Relationships | RBF networks excel at capturing intricate nonlinear interactions, enabling superior performance in complex domains. |
| Superior Performance | By accurately approximating complex functions, RBF networks consistently deliver high-quality results in various applications. |
| Fewer Parameters | The reduced parameter count simplifies network architecture and enhances generalization capabilities, reducing overfitting risks. |
| Handling Outliers and Noise | The localized nature of radial basis functions enables effective handling of outliers and noise, resulting in more robust performance. |

Conclusion

Radial Basis Function networks have proven to be a valuable tool for modeling complex nonlinear relationships in various domains. Their ability to approximate nonlinear functions by composing weighted combinations of radial basis functions allows for more accurate predictions and pattern recognition. These capabilities have contributed to progress in the field of artificial intelligence.

RBF networks continue to find applications in a wide range of fields, including image and speech recognition, data clustering, and regression analysis. Their versatility and effectiveness in handling complex patterns make them a popular choice for solving real-world problems. Moreover, the capabilities of RBF networks are expected to advance further as researchers and practitioners continue to explore their potential.

As the field of artificial intelligence progresses, the importance of nonlinear function approximation and pattern recognition becomes increasingly evident. RBF networks hold promise for solving increasingly intricate problems and unlocking the power of nonlinearity in machine learning. With ongoing research and development, RBF networks are poised to contribute significantly to advancements in AI and its applications in the years to come.

FAQ

What are RBF networks?

RBF networks, or Radial Basis Function networks, are a type of feedforward neural network that excels at modeling nonlinear relationships.

How do RBF networks introduce nonlinearity in network modeling?

RBF networks utilize radial basis functions as activation functions, which provide greater flexibility in capturing nonlinear patterns in data.

What is the architecture of an RBF neural network?

The architecture of an RBF neural network consists of three layers: the input layer, the hidden layer (where radial basis functions are applied), and the output layer.

How are RBF networks trained?

Training an RBF neural network involves two steps. Center selection identifies representative data points to serve as centers for the radial basis functions. Weight determination then optimizes the weights to minimize the difference between the predicted output and the target output.

What are the applications of RBF networks?

RBF networks find applications in pattern recognition, regression analysis, and data clustering, and have been successfully applied in image recognition, speech recognition, and handwritten character recognition.

What are the advantages of RBF networks?

RBF networks offer superior performance in domains with intricate nonlinear interactions, have fewer parameters compared to deep neural networks, and handle outliers and noise effectively.

How do RBF networks contribute to AI?

RBF networks are a valuable tool for modeling complex nonlinear relationships, allowing for more accurate predictions and pattern recognition in various domains.