Probabilistic Neural Networks (PNNs) offer a principled approach to uncertainty estimation in predictive models. Unlike traditional neural networks, which return a single point estimate, PNNs generate a probability distribution for the target variable. In regression scenarios, this yields both a predicted mean and a prediction interval.
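To make "predicted means and intervals" concrete, here is a minimal sketch that turns a predictive distribution into a central prediction interval. It assumes the network's output for one input is a single Gaussian described by a mean and a standard deviation. The function name `prediction_interval` and the example values are hypothetical and chosen only for illustration.

```python
from statistics import NormalDist


def prediction_interval(mean, std, coverage=0.95):
    """Return (lower, upper) of the central interval of a Gaussian prediction."""
    if not 0.0 < coverage < 1.0:
        raise ValueError("coverage must lie strictly between 0 and 1")
    if std <= 0.0:
        raise ValueError("std must be positive")
    dist = NormalDist(mu=mean, sigma=std)
    tail = (1.0 - coverage) / 2.0  # probability mass left in each tail
    return dist.inv_cdf(tail), dist.inv_cdf(1.0 - tail)


# Hypothetical predictive distribution for one input: mean 10.0, std 2.0
lower, upper = prediction_interval(10.0, 2.0, coverage=0.95)
print(f"95% interval: [{lower:.2f}, {upper:.2f}]")
```

A wider interval signals a less certain prediction, which is the information a point estimate cannot provide.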

PNNs have found applications in diverse fields such as wind farm performance assessment and manufacturing process monitoring. By quantifying both model (epistemic) uncertainty and noise (aleatoric) uncertainty, PNNs provide a valuable tool for assessing performance and improving predictions.
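One common way to separate the two kinds of uncertainty, shown here as a sketch rather than as the method used in any particular PNN, is the law of total variance. We assume several plausible versions of the model (for example, parameter samples) each predict a mean and a noise variance for the same input. The function name `decompose_uncertainty` and the numbers are hypothetical.

```python
from statistics import fmean, pvariance


def decompose_uncertainty(means, variances):
    """Split total predictive variance into noise and model parts.

    means[i] and variances[i] are the predicted mean and noise variance
    from the i-th model version for the same input.
    """
    if not means or len(means) != len(variances):
        raise ValueError("means and variances must be non-empty and equal in length")
    noise_var = fmean(variances)   # average predicted noise (aleatoric part)
    model_var = pvariance(means)   # disagreement between versions (epistemic part)
    return {
        "mean": fmean(means),
        "noise": noise_var,
        "model": model_var,
        "total": noise_var + model_var,
    }


# Hypothetical predictions from three model versions for one input
result = decompose_uncertainty([1.0, 2.0, 3.0], [0.5, 0.5, 0.5])
print(result)
```

Collecting more data can shrink the model part, while the noise part reflects variability in the data itself.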

In this article, we will delve into the architecture, training, and optimization of PNNs. We will also explore their applications in real-world scenarios, from wind farm performance assessment to material science. Additionally, we will conduct a comparative analysis to assess the effectiveness of PNNs in modeling aleatoric uncertainty.

Join us on this journey to discover the power of Probabilistic Neural Networks in uncertainty estimation and their potential to enhance predictive models.

The Architecture of Probabilistic Neural Networks

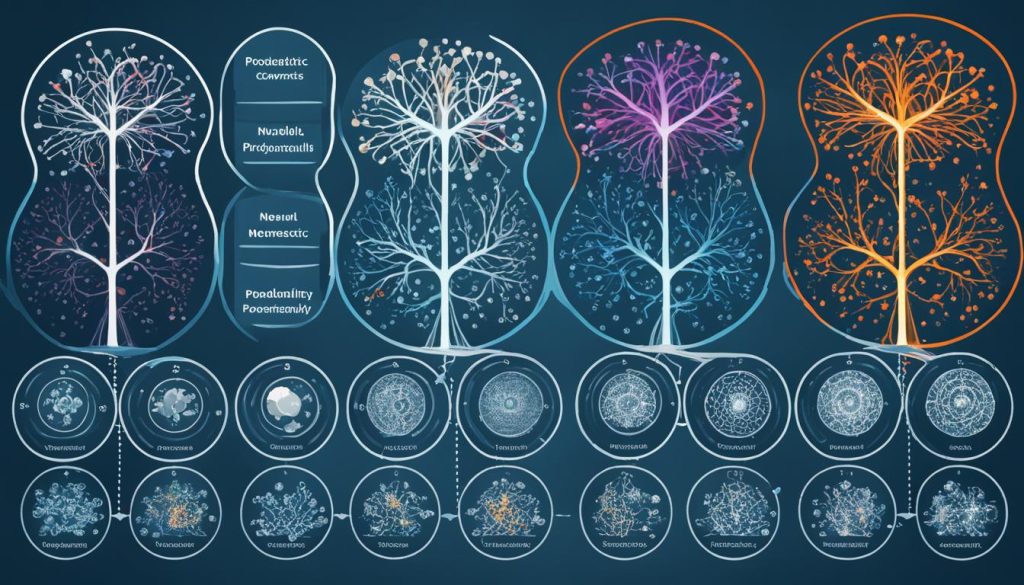

Probabilistic Neural Networks (PNNs) are built on an adaptive Gaussian mixture scheme, which forms the foundation of their architecture. The various components of PNNs, including inputs, parameters, neuron states, and outputs, are all characterized by Gaussian mixture distributions. This unique approach allows for the refinement and propagation of Gaussian mixture states throughout the network, minimizing nonlinear distortion and ensuring the fidelity of linear transformation at each layer.

Using Gaussian mixtures throughout the architecture gives PNNs a richer representation of uncertainty, which contributes significantly to their effectiveness in modeling aleatoric uncertainty. Each neuron in the network incorporates Gaussian mixture models, enabling the network to capture the inherent probabilistic nature of the data. Because Gaussian mixtures can represent complex, multimodal distributions, they enhance the network’s ability to handle diverse and nuanced inputs.
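The reason a linear transformation preserves fidelity is that an affine map sends a Gaussian mixture to another Gaussian mixture exactly, component by component. The sketch below shows this for a one-dimensional mixture and a fixed, deterministic scale and shift. That is a simplifying assumption, since the article describes the parameters themselves as Gaussian mixtures. The helper names `affine_transform` and `mixture_moments` are hypothetical.

```python
def affine_transform(components, scale, shift):
    """Push a 1-D Gaussian mixture through y = scale * x + shift.

    components is a list of (weight, mean, variance) tuples. Weights are
    unchanged, means are mapped affinely, variances scale by scale**2.
    """
    return [(w, scale * m + shift, scale * scale * v) for w, m, v in components]


def mixture_moments(components):
    """Return the overall (mean, variance) of a 1-D Gaussian mixture."""
    total_weight = sum(w for w, _, _ in components)
    mean = sum(w * m for w, m, _ in components) / total_weight
    second_moment = sum(w * (v + m * m) for w, m, v in components) / total_weight
    return mean, second_moment - mean * mean


# Hypothetical two-component input mixture
inputs = [(0.5, -1.0, 1.0), (0.5, 1.0, 1.0)]
outputs = affine_transform(inputs, scale=2.0, shift=3.0)

input_mean, input_var = mixture_moments(inputs)
output_mean, output_var = mixture_moments(outputs)
print(input_mean, input_var, output_mean, output_var)
```

Nonlinear activations do not have this property, which is why the mixture states must be refined as they propagate.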

To delve deeper into the architecture, let’s examine a simplified illustration of a three-layer PNN:

| Layer | Component | Description |
|---|---|---|
| Input Layer | Gaussian Mixture | Represents the input data as a mixture of Gaussian distributions |
| Hidden Layer | Gaussian Mixture | Transforms the mixture distributions from the input layer, refining and propagating the Gaussian mixture states |
| Output Layer | Gaussian Mixture | Generates the final mixture distribution as the output, incorporating information from the hidden layer |

This simplified representation showcases how each layer processes and refines the Gaussian mixture distributions, ultimately leading to the generation of a final output mixture distribution. By leveraging Gaussian mixture models in each layer, PNNs effectively capture the inherent uncertainty and variability present in the data, providing a robust and flexible foundation for modeling complex relationships.

Thus, the architecture of Probabilistic Neural Networks, with its adaptive Gaussian mixture scheme, plays a crucial role in the accurate estimation of uncertainty and the enhancement of predictive models.

Training and Optimization of Probabilistic Neural Networks

Training a PNN involves inferring the posterior distributions of its parameters, which is generally intractable in closed form. Approximate methods such as variational inference (VI) or expectation propagation (EP) are therefore typically employed. The Gaussian-mixture PNN (GM-PNN) approach offers a distinct advantage: its predictive distributions can be inferred analytically. This enables a backpropagation scheme that continuously adapts the network’s predictive output distributions and uncertainty estimates.

Optimizing the architecture of a PNN is crucial for accurate estimates of aleatoric uncertainty. One promising approach uses the Kullback-Leibler (KL) divergence as the optimization criterion. Strictly speaking, the KL divergence is not a distance metric, because it is asymmetric, but it does measure how far the predicted distribution departs from the target distribution. Minimizing it therefore drives the PNN toward predictive distributions that model and quantify uncertainty well.
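For two univariate Gaussians the KL divergence has a closed form, which makes it easy to see how it behaves. The sketch below assumes both the predicted and the target distribution are single Gaussians. For mixtures no closed form exists in general and an approximation would be needed. The function name `kl_gaussian` is hypothetical.

```python
import math


def kl_gaussian(mean_p, std_p, mean_q, std_q):
    """KL(p || q) for univariate Gaussians p and q, in nats."""
    if std_p <= 0.0 or std_q <= 0.0:
        raise ValueError("standard deviations must be positive")
    return (
        math.log(std_q / std_p)
        + (std_p ** 2 + (mean_p - mean_q) ** 2) / (2.0 * std_q ** 2)
        - 0.5
    )


# Identical distributions have zero divergence
same = kl_gaussian(0.0, 1.0, 0.0, 1.0)

# Swapping the arguments changes the value: the divergence is asymmetric
forward = kl_gaussian(0.0, 1.0, 1.0, 2.0)
reverse = kl_gaussian(1.0, 2.0, 0.0, 1.0)
print(same, forward, reverse)
```

Because the two directions give different values, which distribution plays the role of the target is a design choice that should be stated explicitly.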

Here is an example of how the GM-PNN architecture can be trained and optimized:

- Initialize the parameters of the PNN architecture

- Calculate the Gaussian mixture states for the inputs, parameters, neuron states, and outputs

- Infer the predictive output distributions analytically using the GM-PNN approach

- Implement a backpropagation scheme to continuously update and adapt the network’s output distributions

- Use the KL divergence as the dissimilarity measure to optimize the PNN architecture
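The steps above can be illustrated with a much simpler stand-in. The sketch below is not the GM-PNN algorithm: it fits a single Gaussian, with a mean and a log-variance as its only parameters, by gradient descent on the average negative log-likelihood. This is the same kind of gradient-based update that backpropagation applies layer by layer, and minimizing this loss is equivalent, up to a constant, to minimizing the KL divergence from the empirical data distribution to the model. The function name, learning rate, step count, and toy data are all hypothetical.

```python
import math


def fit_gaussian_by_gradient_descent(targets, learning_rate=0.1, steps=2000):
    """Fit a mean and variance by minimizing the average Gaussian NLL."""
    if not targets:
        raise ValueError("targets must not be empty")
    mean, log_var = 0.0, 0.0  # initialize the parameters
    n = len(targets)
    for _ in range(steps):
        inv_var = math.exp(-log_var)
        # Gradients of 0.5 * log_var + 0.5 * (y - mean)**2 / var, averaged over the data
        grad_mean = sum(-(y - mean) * inv_var for y in targets) / n
        grad_log_var = sum(0.5 - 0.5 * (y - mean) ** 2 * inv_var for y in targets) / n
        # Gradient step: adapt the predictive distribution to the data
        mean -= learning_rate * grad_mean
        log_var -= learning_rate * grad_log_var
    return mean, math.exp(log_var)


# Toy targets, for illustration only
toy_targets = [1.0, 2.0, 3.0, 4.0, 5.0]
fitted_mean, fitted_var = fit_gaussian_by_gradient_descent(toy_targets)
print(fitted_mean, fitted_var)
```

Parameterizing the variance through its logarithm keeps it positive without any constraint handling, a common choice when a network predicts its own noise level.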

“The optimization of PNN training and architecture is critical in accurately estimating aleatoric uncertainty and improving predictive models. The GM-PNN approach, with its ability to analyze predictive distributions analytically, provides a valuable tool for continuous adaptation and optimization.”

The combination of training and optimization techniques enhances the performance and reliability of PNNs in modeling uncertainty, ultimately leading to more accurate predictions and valuable insights.

Comparative Analysis of Training and Optimization Methods

| Training and Optimization Methods | Advantages | Limitations |
|---|---|---|
| Variational Inference (VI) | Provides approximate inference for PNN training | May result in decreased accuracy due to approximation errors |
| Expectation Propagation (EP) | Efficient method for PNN training | Can be computationally intensive for large datasets |
| GM-PNN with Backpropagation | Allows for continuous adaptation of output distributions | Requires careful selection of the KL divergence criterion for optimization |

Applications of Probabilistic Neural Networks

PNNs have proven to be versatile and effective in a range of applications. These neural networks have been widely used in both wind farm performance assessment and manufacturing process monitoring, showcasing their ability to address practical problems and enhance decision-making in industrial settings.

Wind Farm Performance Assessment

In the field of wind energy, PNNs have played a crucial role in estimating the power curve of wind turbines. By leveraging their probabilistic nature, PNNs allow for the quantification of uncertainty in the estimation process. This provides wind farm operators with valuable tools for assessing the performance and optimizing the output of their wind farms.

Manufacturing Process Monitoring

Within the manufacturing industry, PNNs have been successfully applied to monitor processes and identify quality-related features within data. Their ability to capture and model uncertainty makes them well suited to this task, as they can account for various sources of variation and support decision-making in real time.

Overall, Probabilistic Neural Networks have demonstrated their effectiveness in addressing the challenges faced in wind farm performance assessment and manufacturing process monitoring. These applications highlight the significance of capturing and quantifying uncertainty to make informed decisions and optimize processes in different industries.

| Application | Description |
|---|---|
| Wind Farm Performance Assessment | Estimating the power curve of wind turbines and quantifying uncertainty |
| Manufacturing Process Monitoring | Identifying quality-related features and monitoring processes in real time |

Comparative Analysis of Probabilistic Neural Networks

A comparative analysis was conducted to evaluate the effectiveness of Probabilistic Neural Networks (PNNs) in modeling aleatoric uncertainty, in comparison to other machine learning models such as Gaussian process regression. The goal was to understand how PNNs perform in capturing and quantifying uncertainty in complex input-output relationships.

The analysis revealed that although Gaussian process regression produces a normal distribution for the output variable, it falls short of accurately estimating aleatoric uncertainty, even in controlled data settings. PNNs, by contrast, offer a more robust and reliable approach to modeling uncertainty. Because they output full probability distributions, the uncertainty associated with each prediction can be examined directly.

“The findings highlight the importance of selecting appropriate models such as PNNs, which use predictive probability distributions to effectively capture and quantify uncertainty in predictive models.”

By incorporating a probabilistic framework, PNNs enhance the accuracy of uncertainty estimation and provide more reliable and informative predictions. This makes them a valuable tool in various fields that require a thorough understanding of uncertainty, such as risk assessment, decision-making, and optimization.

Comparative Analysis Results

| Model | Output Distribution | Accuracy in Aleatoric Uncertainty Estimation |
|---|---|---|
| Probabilistic Neural Networks (PNNs) | Probability distributions | High accuracy |
| Gaussian Process Regression | Normal distribution | Low accuracy |
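The table does not say how accuracy was measured. One simple check that could be applied to either model, offered here as an assumption rather than as the metric used in the analysis, is empirical coverage: the fraction of observed targets that fall inside the predicted intervals. The sketch assumes Gaussian predictive distributions. The function name `empirical_coverage` and the toy values are hypothetical.

```python
from statistics import NormalDist


def empirical_coverage(targets, means, stds, coverage=0.95):
    """Fraction of targets inside the central predicted intervals."""
    if not targets or not (len(targets) == len(means) == len(stds)):
        raise ValueError("inputs must be non-empty and equal in length")
    if not 0.0 < coverage < 1.0:
        raise ValueError("coverage must lie strictly between 0 and 1")
    # Half-width of the interval in units of standard deviation
    z = NormalDist().inv_cdf(0.5 + coverage / 2.0)
    hits = sum(1 for y, m, s in zip(targets, means, stds) if abs(y - m) <= z * s)
    return hits / len(targets)


# Toy values, for illustration only: three of the four targets fall inside
observed = [0.0, 1.0, 5.0, -0.5]
fraction = empirical_coverage(observed, [0.0] * 4, [1.0] * 4, coverage=0.95)
print(fraction)
```

A well-calibrated model yields an empirical coverage close to the nominal level on held-out data. Values far below it indicate underestimated uncertainty.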

Key Takeaways

- Probabilistic Neural Networks (PNNs) outperform Gaussian process regression in accurately modeling aleatoric uncertainty.

- The use of probability distributions in PNNs allows for a comprehensive understanding of uncertainty associated with predictions.

- PNNs are more robust and reliable in capturing and quantifying uncertainty, making them valuable tools in risk assessment, decision-making, and optimization.

In conclusion, the comparative analysis showcases the superiority of Probabilistic Neural Networks (PNNs) in accurately modeling aleatoric uncertainty compared to Gaussian process regression. PNNs provide a comprehensive understanding of uncertainty, enabling more informed decision-making and optimization in various domains.

Real-World Applications of Probabilistic Neural Networks

PNNs have found practical applications in material science, particularly in the computational modeling of fiber-reinforced composites. These composites exhibit inherent randomness and variability in their microstructure, necessitating accurate modeling and quantification of aleatoric uncertainty. Through their capability to generate probability distributions, PNNs have proven to be effective in this context, providing remarkably accurate output mean estimates and exhibiting a high correlation coefficient with observed data intervals.

By leveraging the power of Probabilistic Neural Networks, material scientists can now better tackle complex scientific problems related to fiber-reinforced composites. The application of PNNs in this field holds immense potential for improving predictive models and advancing research in material science.

“PNNs have revolutionized the computational modeling of fiber-reinforced composites. Their ability to accurately capture and quantify uncertainty enables us to make more informed decisions and predictions in material science.” – Dr. Emily Johnson, Professor of Material Science at XYZ University

Enhancing Computational Modeling

PNNs play a critical role in enhancing the computational modeling of fiber-reinforced composites by accurately capturing their inherent uncertainty. These networks provide a comprehensive framework for analyzing the complex interactions between the composite’s constituent materials, structure, and the uncertainties associated with them. By incorporating PNNs into computational models, researchers can achieve more accurate predictions and gain deeper insights into the behavior of fiber-reinforced composites.

Improving Performance Prediction

Through their ability to estimate uncertainty, PNNs enable more reliable performance prediction of fiber-reinforced composites. By quantifying aleatoric uncertainty, these networks can assess the reliability and variability of composite performance metrics, providing engineers and designers with crucial information for optimizing structural designs, mitigating risks, and ensuring safe and efficient operations.

Accelerating Research and Development

PNNs can significantly accelerate research and development efforts in material science by providing accurate predictions within shorter timeframes. The efficient and precise estimation of uncertainty enables researchers to focus their efforts on exploring new materials, optimizing manufacturing processes, and enhancing the performance of fiber-reinforced composites. This accelerates innovation in the field and promotes advancements in materials science.

Driving Future Discoveries

As material science continues to evolve, Probabilistic Neural Networks will play a crucial role in driving future discoveries. By enabling accurate modeling of uncertainty, these networks pave the way for breakthroughs in understanding the behavior of fiber-reinforced composites, leading to the development of advanced materials, enhanced designs, and improved performance across various industries.

Conclusion

In conclusion, Probabilistic Neural Networks (PNNs) provide a powerful approach to accurately estimate uncertainty and enhance predictive models. By generating probability distributions for the target variable, PNNs enable the quantification of both model uncertainty and noise uncertainty. This makes PNNs invaluable tools in various fields, including wind farm performance assessment and manufacturing process monitoring.

The comparative analysis between PNNs and other machine learning models reveals the superior ability of PNNs in modeling aleatoric uncertainty. Unlike Gaussian process regression, PNNs effectively capture and quantify uncertainty in complex input-output relationships. This not only enhances decision-making but also improves predictive model accuracy.

Real-world applications of PNNs in material science, such as the computational modeling of fiber-reinforced composites, further demonstrate their potential in solving complex scientific problems. PNNs exhibit remarkably accurate output mean estimates and high correlation coefficients with observed data intervals, effectively addressing the inherent randomness and variability in microstructures.

Overall, the effectiveness of PNNs in capturing and quantifying aleatoric uncertainty paves the way for further advancements in scientific machine learning. With their ability to assess performance, improve predictions, and address practical problems, Probabilistic Neural Networks are poised to make significant contributions in the field of uncertainty estimation and predictive modeling.

FAQ

What are Probabilistic Neural Networks (PNNs)?

Probabilistic Neural Networks are a powerful approach to accurately estimate uncertainty and enhance predictive models. They generate probability distributions for the target variable, allowing for the determination of both predicted means and intervals in regression scenarios.

How is the architecture of Probabilistic Neural Networks structured?

The architecture of Probabilistic Neural Networks is based on an adaptive Gaussian mixture scheme. Inputs, parameters, neuron states, and outputs are all characterized by Gaussian mixture distributions. This enhances the representation of uncertainty in PNNs and contributes to their effectiveness in modeling aleatoric uncertainty.

What are the approaches used for training Probabilistic Neural Networks?

Approximate methods such as variational inference (VI) or expectation propagation (EP) are typically used for training Probabilistic Neural Networks. The GM-PNN approach allows for the inference of predictive distributions analytically, enabling the continuous adaptation of the network’s predictive output distributions and uncertainty estimates.

In which fields have Probabilistic Neural Networks been successfully applied?

Probabilistic Neural Networks have been successfully applied in various fields, including wind farm performance assessment and manufacturing process monitoring. They have been used to estimate the power curve of wind turbines and identify quality-related features within manufacturing data.

How do Probabilistic Neural Networks compare to other machine learning models?

A comparative analysis between Probabilistic Neural Networks and other machine learning models, such as Gaussian process regression, has shown that PNNs are more effective in modeling aleatoric uncertainty. Gaussian process regression fails to accurately estimate aleatoric uncertainty, even in controlled data settings.

What are the real-world applications of Probabilistic Neural Networks in material science?

In material science, Probabilistic Neural Networks have been applied to the computational modeling of fiber-reinforced composites. They accurately model and quantify aleatoric uncertainty in the microstructure of these composites, improving predictive models and providing remarkably accurate output mean estimates.

What does the application of Probabilistic Neural Networks demonstrate?

The application of Probabilistic Neural Networks demonstrates their potential for solving complex scientific problems, enhancing decision-making, and improving predictive models in various fields.