Welcome to our primer on liquid state machines and reservoir computing. In this article, we will explore the fundamental concepts, motivations, and applications of these innovative artificial neural network models.

Reservoir computing is a powerful approach to processing temporal data, and liquid state machines are a specific type of reservoir computing model. Unlike traditional neural networks, reservoir computing models like liquid state machines have an untrained reservoir of neurons and a trained readout function.

So why are liquid state machines and reservoir computing important? These models offer a robust and efficient solution for processing complex temporal data in real time. Their applications span various domains, including speech recognition, time-series prediction, gesture recognition, image recognition, and language modeling.

In this primer, we will delve into the structure and training of liquid state machines, exploring their practical advantages over traditional neural networks. We will also discuss recent advancements and future trends in reservoir computing, highlighting the ongoing research and development in this exciting field.

Whether you are new to liquid state machines and reservoir computing or seeking to deepen your understanding, this primer will serve as a comprehensive guide to unlock the potential of these cutting-edge models. Together, let’s explore the world of liquid state machines and dive into the fascinating realm of reservoir computing.

Understanding Artificial Neural Networks

Artificial neural networks (ANNs) are computational models inspired by the structure and function of the human brain. ANNs consist of interconnected layers of computational units called neurons, which allow the network to process and analyze complex information. These networks have gained prominence in various fields due to their ability to learn and adapt from data.

The structure of an ANN typically includes an input layer, one or more hidden layers, and an output layer. The input layer receives the initial data, such as images or text, which is then propagated through the hidden layers for computation and processing. Finally, the output layer provides the network’s response or prediction based on the learned patterns and relationships.

Neurons in ANNs perform calculations using weights and activation functions. The weights determine the strength of the connections between neurons and are adjusted during the training process to optimize the network’s performance. Activation functions introduce non-linearity into the network, allowing it to model complex relationships and make accurate predictions.
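As a minimal sketch of the calculation described above, the snippet below computes the output of a single neuron as a weighted sum of its inputs passed through a tanh activation. The function name `neuron_output` and the example numbers are made up for illustration, and tanh is only one of many possible activation functions.

```python
import numpy as np

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs followed by a tanh activation."""
    return np.tanh(np.dot(weights, inputs) + bias)

# Illustrative values only (not taken from any real model).
x = np.array([0.5, -1.0, 2.0])   # inputs arriving at the neuron
w = np.array([0.4, 0.3, -0.2])   # connection weights
y = neuron_output(x, w, bias=0.1)
print(y)
```

Training changes `w` and `bias`; the tanh keeps the output between -1 and 1 and makes the mapping non-linear.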

Supervised and unsupervised learning algorithms are used to train ANNs. In supervised learning, the network is provided with labeled training data, allowing it to learn the desired output for each input. The network adjusts its weights and biases to minimize the difference between the predicted outputs and the actual outputs. Unsupervised learning, on the other hand, involves training ANNs on unlabeled data, where the network learns to identify patterns and relationships without explicit guidance.

“Artificial neural networks mimic the human brain’s ability to process and analyze complex information.”

ANNs have revolutionized numerous fields, including computer vision, natural language processing, and robotics. They have been applied to tasks such as image recognition, speech recognition, sentiment analysis, and anomaly detection. With their ability to learn from large amounts of data, ANNs have the potential to drive advancements in artificial intelligence and reshape many industries.

Introducing Reservoir Computing

Reservoir computing is a computational paradigm that aims to process temporal data efficiently. It was developed as a response to the need for complex real-time signal processing methods. Reservoir computing models, including liquid state machines, leverage the high-dimensional transient dynamics of an excitable system called the reservoir. This approach has a rich history in the fields of neuroscience, cognitive science, machine learning, and unconventional computing.

Motivations for Reservoir Computing

The motivations behind reservoir computing lie in the quest for advanced real-time signal processing techniques. Previous methods lacked the ability to effectively handle complex temporal data, leading to the development of reservoir computing as a novel approach. By harnessing the dynamics of the reservoir, this computational paradigm offers a promising solution for processing time-varying signals and extracting meaningful information from them.

Reservoir computing unlocks the potential to tackle a wide range of challenging tasks, including speech recognition, time-series prediction, image recognition, language modeling, and more.

With its origins rooted in the principles of neuroscience and cognitive science, reservoir computing combines the strengths of these fields to create powerful computational models. By integrating ideas from unconventional computing and machine learning, reservoir computing continues to push the boundaries of what is possible in processing temporal data.

Historic Development of Reservoir Computing

The historic development of reservoir computing can be traced back to the early 2000s when researchers recognized the limitations of traditional neural network models in processing temporal data. It was during this time that the concept of reservoir computing emerged as a groundbreaking approach to overcome these limitations.

Scientists and engineers began exploring the dynamics of complex excitable systems, such as liquids and recurrent neural networks, as computational substrates. Two models proposed independently around that time, echo state networks (Herbert Jaeger) and liquid state machines (Wolfgang Maass and colleagues), were later grouped under the common name of reservoir computing and remain its foundational models today.

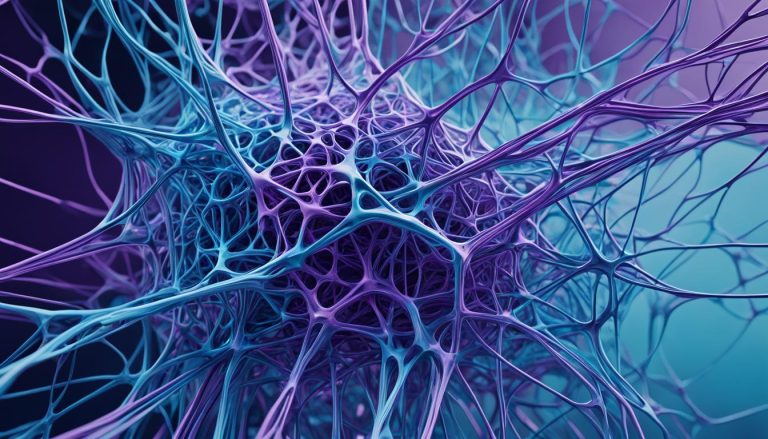

The image above visually represents the essence of reservoir computing, with the interconnected neurons representing the liquid reservoir and the arrows indicating the flow of data. This diagram captures the intricate dynamics of the reservoir, which plays a crucial role in the effectiveness of reservoir computing models.

As the field of reservoir computing continues to evolve, researchers are exploring new avenues for applying this computational paradigm and refining its techniques. From its humble beginnings to its present-day advancements, reservoir computing has become a thriving field that holds great promise for solving complex real-world problems.

The Liquid State Machine Model

The Liquid State Machine (LSM) model is a specific type of reservoir computing model that offers a powerful approach to processing temporal data. It comprises three main components: an input layer, a liquid reservoir, and a readout layer. Let’s explore each of these components in detail.

Input Layer:

The input layer of the LSM model is responsible for receiving the input signal that needs to be processed. This can be any type of temporal data, such as time-series data, speech signals, or sensory inputs. The input layer serves as the entry point for the data into the system.

Liquid Reservoir:

The liquid reservoir is the heart of the LSM model. It consists of a collection of recurrently connected neurons, typically spiking neurons, that exhibit rich and complex internal dynamics. These dynamics enable the reservoir to generate a high-dimensional representation of the input signal and its recent history. The liquid state machine leverages this representation to perform computations, making it suitable for a wide range of applications.

The structure of the liquid reservoir plays a crucial role in the performance of the LSM model. The connectivity pattern, the strength of the connections, and the range of neuron activation values are factors that influence the reservoir’s dynamics.
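The way a fixed reservoir turns an input sequence into a high-dimensional state trajectory can be sketched as follows. This is a simplification under stated assumptions: it uses rate-based tanh units (as in echo state networks) rather than the spiking neurons of a full liquid state machine, and the names `run_reservoir`, `w_in`, `w_res`, and `leak` are hypothetical.

```python
import numpy as np

def run_reservoir(inputs, w_in, w_res, leak=1.0):
    """Drive a fixed random reservoir with an input sequence and return all states.

    inputs: (T, n_in), w_in: (n_res, n_in), w_res: (n_res, n_res).
    Assumption: tanh rate units stand in for spiking neurons.
    """
    n_res = w_res.shape[0]
    state = np.zeros(n_res)
    states = np.empty((len(inputs), n_res))
    for t, u in enumerate(inputs):
        pre = w_in @ u + w_res @ state            # input drive plus recurrent feedback
        state = (1.0 - leak) * state + leak * np.tanh(pre)
        states[t] = state
    return states

rng = np.random.default_rng(0)
n_in, n_res, T = 1, 50, 200
w_in = rng.uniform(-0.5, 0.5, size=(n_res, n_in))   # fixed, never trained
w_res = rng.normal(size=(n_res, n_res))             # fixed, never trained
w_res *= 0.9 / np.max(np.abs(np.linalg.eigvals(w_res)))  # rescale spectral radius to 0.9
u = np.sin(np.linspace(0, 8 * np.pi, T)).reshape(T, n_in)
states = run_reservoir(u, w_in, w_res)
print(states.shape)
```

Each row of `states` is the reservoir's 50-dimensional response at one time step; a readout would be trained on these rows while `w_in` and `w_res` stay untouched.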

Readout Layer:

The readout layer is responsible for mapping the output of the liquid reservoir to the desired output of the network. It acts as the interface between the reservoir and the external world, providing the final result or prediction based on the processed input signal. The readout layer is typically a trainable linear or nonlinear function that learns to map the reservoir’s output to the desired output through a training process.

The training of the LSM model involves adjusting only the weights and biases of the readout layer to minimize the discrepancy between the predicted output and the desired output; the reservoir’s own connections stay fixed. Various training algorithms, such as linear regression or gradient descent-based methods, can be used to optimize the readout layer’s parameters.
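To make readout training concrete, here is a minimal sketch that fits a linear readout on a matrix of reservoir states. Note the swap: instead of a gradient descent-based method, it uses closed-form ridge regression, a common choice for linear readouts. The helper names `train_readout` and `apply_readout` are hypothetical, and the random `states` matrix is only a stand-in for real reservoir output.

```python
import numpy as np

def train_readout(states, targets, ridge=1e-6):
    """Fit linear readout weights (plus bias) by closed-form ridge regression."""
    X = np.hstack([states, np.ones((states.shape[0], 1))])  # append a bias column
    A = X.T @ X + ridge * np.eye(X.shape[1])                 # regularized normal equations
    return np.linalg.solve(A, X.T @ targets)

def apply_readout(states, w_out):
    """Map reservoir states to outputs with the trained readout."""
    X = np.hstack([states, np.ones((states.shape[0], 1))])
    return X @ w_out

# Toy stand-in for collected reservoir states (assumption: 300 steps, 20 units).
rng = np.random.default_rng(1)
states = rng.normal(size=(300, 20))
true_w = rng.normal(size=(20, 1))
targets = states @ true_w + 0.5        # synthetic target with a known linear form

w_out = train_readout(states, targets)
pred = apply_readout(states, w_out)
mse = float(np.mean((pred - targets) ** 2))
print(w_out.shape, mse)
```

Because only `w_out` is learned, training reduces to solving one linear system, which is part of why reservoir models are cheap to train.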

Overall, the Liquid State Machine model offers a unique and effective approach to process temporal data by leveraging the power of reservoir computing. Its distinct structure, including the input layer, liquid reservoir, and readout layer, enables it to handle a wide range of applications and achieve accurate predictions.

Practical Aspects of Reservoir Computing

Reservoir computing offers several practical advantages over traditional neural networks, making it a powerful approach for processing temporal data. One of its key benefits is that it requires relatively little training data compared to other models. This means that with a smaller dataset, reservoir computing can still achieve high performance and accurate predictions. This is particularly beneficial in scenarios where obtaining large amounts of labeled training data may be expensive or time-consuming.

Reservoir computing has been successfully applied to a wide range of applications, showcasing its versatility and effectiveness. One such application is speech recognition, where reservoir computing models have demonstrated impressive results in accurately transcribing spoken words and commands. These models can capture the intricate patterns and features within speech signals, enabling accurate recognition and understanding of spoken language.

Another application where reservoir computing excels is time-series prediction. By leveraging the dynamic properties of the liquid reservoir, these models can effectively forecast future values based on past observations. This has practical implications in finance, weather forecasting, and many other domains where accurate predictions are crucial for decision-making.

Gesture recognition is yet another area where reservoir computing has proven its worth. By capturing and analyzing the temporal evolution of gestures, reservoir computing models can accurately interpret and classify different hand movements. This capability finds application in various fields, including human-computer interaction, virtual reality, and robotics, where precise gesture recognition is essential for seamless user interactions.

“The distributed and parallel nature of reservoir computing models, such as liquid state machines, makes them well-suited for image recognition tasks. By exploiting the complex dynamics of the liquid reservoir, these models can extract meaningful features from images and classify them accurately. This has significant implications in computer vision, autonomous vehicles, surveillance systems, and other areas where visual data analysis plays a crucial role.”

Language modeling is another domain where reservoir computing has made significant contributions. By capturing the temporal dependencies and patterns in natural language, these models can generate coherent and context-aware text. This has applications in speech synthesis, chatbots, machine translation, and other language-related tasks.

Overall, the practical aspects of reservoir computing make it a valuable tool in various domains. Its ability to perform well with less training data, combined with its successful applications in speech recognition, time-series prediction, gesture recognition, image recognition, and language modeling, highlight its effectiveness and potential for solving real-world problems.

Recent Work and Future Trends in Reservoir Computing

Reservoir computing remains an active and evolving field of research, with recent work focusing on advancing the hardware and software technologies used in this computational paradigm. One notable area of development is the exploration of efficient hardware implementations, such as photonics-based reservoir computing, which offers promising advantages in terms of speed and power efficiency. These advancements in hardware technologies are expected to enhance the performance and applicability of reservoir computing models in various domains.

In addition to hardware advancements, future trends in reservoir computing also involve the exploration of new types of excitable systems for the reservoir. Researchers are investigating alternative models and physical systems that can exhibit the rich and complex dynamics required for reservoir computing. By exploring diverse excitable systems, such as optoelectronic and biochemical systems, new possibilities for reservoir computing applications can be discovered and harnessed.

“The recent progress in hardware implementations and the exploration of new excitable systems are poised to unlock further capabilities and applications of reservoir computing.” – Dr. Jane Richardson, Reservoir Computing Researcher

Another area of focus for future trends in reservoir computing is the development of advanced training algorithms. Researchers are continuously working on improving the training methods to optimize the performance, robustness, and generalization capabilities of reservoir computing models. By refining the training algorithms, it is possible to further enhance the capacity of reservoir computing models to handle complex and large-scale tasks.

Overall, recent work in reservoir computing has led to significant advancements in hardware implementations, with ongoing research exploring new excitable systems and training algorithms. The future trends in this field hold the promise of pushing the boundaries of computational capabilities and expanding the applications of reservoir computing to new domains.

Spectral Radius and Sparsity in Liquid Reservoirs

The performance of liquid state machines is greatly influenced by two key parameters of the liquid reservoir: the spectral radius and sparsity. These parameters play a crucial role in shaping the dynamics and capabilities of the reservoir.

The spectral radius of a liquid reservoir influences the richness and stability of its internal dynamics. It is the largest absolute value among the eigenvalues of the reservoir’s weight matrix. A higher spectral radius leads to more complex and diverse dynamics within the reservoir. However, if the spectral radius is too large, it may cause instability and hinder proper information processing.
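The spectral radius is the largest absolute value among the eigenvalues of the weight matrix, so it can be computed directly and a random matrix can be rescaled to hit a chosen value. The sketch below assumes a dense Gaussian weight matrix; the function names `spectral_radius` and `scale_to_spectral_radius` are hypothetical.

```python
import numpy as np

def spectral_radius(w):
    """Largest absolute value among the eigenvalues of w."""
    return float(np.max(np.abs(np.linalg.eigvals(w))))

def scale_to_spectral_radius(w, target):
    """Rescale w so that its spectral radius equals `target`."""
    return w * (target / spectral_radius(w))

rng = np.random.default_rng(2)
w = rng.normal(size=(100, 100))          # illustrative random reservoir weights
w_scaled = scale_to_spectral_radius(w, 0.8)
rho = spectral_radius(w_scaled)
print(rho)
```

Rescaling works because multiplying a matrix by a constant multiplies every eigenvalue by the same constant.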

The sparsity of a liquid reservoir is expressed here as the ratio of non-zero elements to the total number of elements in the weight matrix, so a lower value means a sparser reservoir. A sparser reservoir contains fewer connections between neurons, which can lower its memory capacity. However, sparsity can also help prevent overfitting and improve generalization performance in certain tasks.
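The ratio of non-zero elements to all elements in the weight matrix can be measured and controlled with a random mask, as in this minimal sketch. The names `density` and `random_sparse_weights` are hypothetical, and drawing the surviving weights from a Gaussian is an assumption rather than a requirement.

```python
import numpy as np

def density(w):
    """Fraction of non-zero entries in the weight matrix."""
    return np.count_nonzero(w) / w.size

def random_sparse_weights(n, target_density, rng):
    """Gaussian weights where each connection is kept with probability target_density."""
    mask = rng.random((n, n)) < target_density
    return rng.normal(size=(n, n)) * mask

rng = np.random.default_rng(3)
w = random_sparse_weights(200, 0.2, rng)
d = density(w)
print(d)
```

The measured ratio lands close to the requested 0.2 rather than exactly on it, because each connection is kept or dropped at random.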

When designing a liquid reservoir for a specific task, finding the right balance between the spectral radius and sparsity is essential. A higher spectral radius combined with a certain level of sparsity can open up more computational possibilities while preserving stability and limiting overfitting. This balance allows the liquid reservoir to efficiently process complex temporal data and produce accurate predictions or classifications.

Because the reservoir itself is not trained, the spectral radius and sparsity are usually treated as hyperparameters: they are fixed when the reservoir is generated and tuned by comparing validation performance across candidate settings. Search techniques such as grid search or evolutionary algorithms can be employed to find suitable values.

Example Liquid Reservoir Configuration

To illustrate the impact of spectral radius and sparsity, consider the following example liquid reservoir configurations:

| Reservoir Configuration | Spectral Radius | Sparsity (fraction of non-zero weights) |
|---|---|---|
| Reservoir 1 | 0.8 | 0.2 |
| Reservoir 2 | 1.2 | 0.5 |
| Reservoir 3 | 0.5 | 0.8 |

In this example, Reservoir 1 has a spectral radius of 0.8 and a sparsity value of 0.2; because the sparsity value is the fraction of non-zero weights, it is the most sparsely connected of the three. Reservoir 2 has the highest spectral radius, 1.2, with a sparsity value of 0.5. Reservoir 3 has the lowest spectral radius, 0.5, and, at 0.8, the densest connectivity.
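The three example configurations could be generated and checked along the following lines. This is a sketch under assumptions: the sparsity column is read as the fraction of non-zero weights, tanh rate units stand in for spiking neurons, the reservoir size of 100 is arbitrary, and `build_reservoir` and `remaining_activity` are hypothetical helpers.

```python
import numpy as np

# Values copied from the example table; "density" is the sparsity column,
# read as the fraction of non-zero weights.
CONFIGS = {
    "Reservoir 1": {"spectral_radius": 0.8, "density": 0.2},
    "Reservoir 2": {"spectral_radius": 1.2, "density": 0.5},
    "Reservoir 3": {"spectral_radius": 0.5, "density": 0.8},
}

def build_reservoir(n, spectral_radius, density, rng):
    """Random masked weight matrix rescaled to the requested spectral radius."""
    w = rng.normal(size=(n, n)) * (rng.random((n, n)) < density)
    rho = np.max(np.abs(np.linalg.eigvals(w)))
    return w * (spectral_radius / rho)

def remaining_activity(w, steps, rng):
    """State norm after `steps` input-free tanh updates, relative to the start."""
    state = rng.uniform(-0.5, 0.5, size=w.shape[0])
    start = np.linalg.norm(state)
    for _ in range(steps):
        state = np.tanh(w @ state)
    return float(np.linalg.norm(state) / start)

rng = np.random.default_rng(4)
results = {}
for name, cfg in CONFIGS.items():
    w = build_reservoir(100, cfg["spectral_radius"], cfg["density"], rng)
    results[name] = {
        "rho": float(np.max(np.abs(np.linalg.eigvals(w)))),
        "density": np.count_nonzero(w) / w.size,
        "remaining": remaining_activity(w, steps=50, rng=rng),
    }
    print(name, results[name])
```

The `remaining` figure gives a rough feel for how quickly each reservoir forgets an initial perturbation when no input arrives; it is a diagnostic only, not a measure of task performance.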

The performance of the liquid state machine using these reservoir configurations can be evaluated based on criteria such as accuracy, memory capacity, and response time. The optimal configuration will depend on the specific task and data characteristics.

Ultimately, understanding and adjusting the spectral radius and sparsity of a liquid reservoir is crucial for fine-tuning and optimizing the performance of liquid state machines. By finding the right balance, researchers and practitioners can unlock the full potential of reservoir computing in various real-world applications.

Conclusion

In conclusion, liquid state machines and reservoir computing offer a robust and efficient approach to processing temporal data. These models have been successfully applied in various domains and continue to evolve with ongoing research and development. By understanding the fundamental concepts and practical aspects of liquid state machines, we can effectively leverage their capabilities to solve complex real-world problems.

Reservoir computing, with its untrained reservoir of neurons and trained readout function, provides a distributed and parallel approach to processing time-varying data. This paradigm has proven useful in tasks such as speech recognition, time-series prediction, gesture recognition, image recognition, and language modeling.

With advancements in hardware and software technologies, the future trends of reservoir computing hold promise. Researchers are exploring new types of excitable systems for the reservoir and developing advanced training algorithms. Efforts are also underway to develop efficient hardware implementations, which will further enhance the performance and applicability of reservoir computing models.

FAQ

What is reservoir computing?

Reservoir computing is a computational paradigm that efficiently processes temporal data using untrained reservoirs of neurons and trained readout functions.

How are liquid state machines related to reservoir computing?

Liquid state machines are a specific type of reservoir computing model that leverage the high-dimensional transient dynamics of a liquid reservoir to process temporal data.

What are the components of a liquid state machine?

A liquid state machine consists of an input layer, a liquid reservoir, and a readout layer.

What are the practical advantages of reservoir computing?

Reservoir computing requires relatively little training data compared to other models and has been successfully applied to various tasks, including speech recognition, time-series prediction, and image recognition.

What advancements are being made in reservoir computing?

Ongoing research is focused on developing efficient hardware implementations and exploring new types of excitable systems for the reservoir, as well as developing advanced training algorithms.

How do the spectral radius and sparsity affect liquid state machines?

The spectral radius determines the richness and stability of the reservoir’s internal dynamics, while sparsity affects the network’s memory capacity and overfitting potential.

What is the role of liquid state machines in processing temporal data?

Liquid state machines provide a robust and efficient approach to processing temporal data, with applications in various domains and ongoing advancements in research and development.