Multi-task Learning Neural Networks have emerged as a promising way to improve efficiency and performance in Artificial Intelligence (AI). By training a single shared machine learning model to perform multiple tasks simultaneously, Multi-task Learning (MTL) aims to improve data efficiency, speed up model convergence, and reduce overfitting. Shared representations let knowledge transfer between related tasks, which can improve overall performance.

In this article, we explore the concept of Multi-task Learning Neural Networks and their impact on learning efficiency. We cover the benefits, optimization methods, practical applications, and when Multi-task Learning is the right choice.

What is a Multi-Task Learning model?

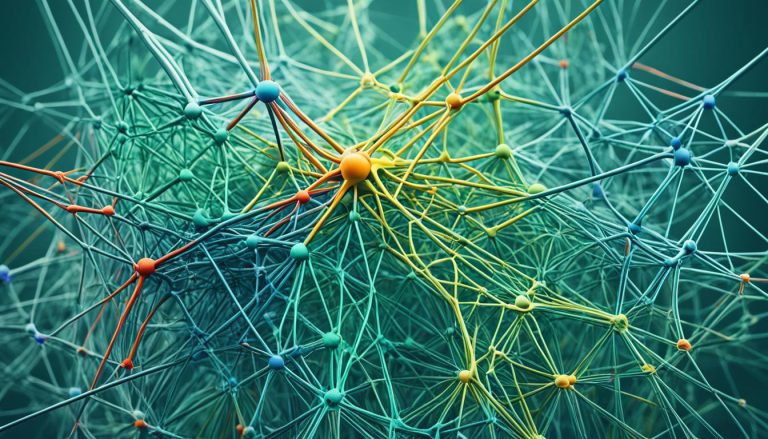

A Multi-Task Learning model refers to a single machine learning model that can handle multiple tasks simultaneously. This model utilizes shared representations in its hidden layers, enabling the transfer of knowledge and information between tasks. By learning transferable skills, similar to how humans learn, Multi-Task Learning models can leverage existing knowledge to improve performance on new tasks. This approach allows for faster model convergence, improved data efficiency, and reduced computational costs compared to training separate models for each individual task.

“A Multi-Task Learning model enables the transfer of knowledge and information between tasks, thanks to its shared representations.”

Traditional machine learning algorithms focus on solving one task at a time. However, Multi-Task Learning models break this limitation by jointly training on multiple related tasks. This joint training exploits the connections and commonalities between tasks, resulting in shared representations that capture essential features across tasks. These shared representations act as a source of knowledge transfer, allowing the model to leverage what it has learned from one task to improve its performance on other related tasks.

Transferable Skills and Improved Performance

Multi-Task Learning models learn transferable skills: generalizable knowledge and patterns that apply to more than one task. With these skills, a Multi-Task Learning model can outperform single-task models when faced with new or unseen tasks, provided those tasks are related to the ones it was trained on.

For example, suppose we have a Multi-Task Learning model trained on both image classification and object detection tasks. The model can learn to identify general features like edges, textures, and shapes that are transferable across both tasks. When presented with a new image classification task, the model can leverage these shared representations to make accurate predictions even with limited training data. This transfer of knowledge leads to faster convergence, improved efficiency in learning, and enhanced performance on multiple tasks.

Moreover, shared representations help Multi-Task Learning models avoid overfitting on individual tasks. Because the shared layers must serve every task, and joint training exposes them to a more diverse set of examples, they tend toward robust, general representations rather than features that fit one task's noise. This results in improved generalization and reduced task-specific bias in the model.

Efficiency and Computational Savings

Multi-Task Learning models offer significant efficiency advantages over training separate models for each task. By sharing parameters and representations, these models reduce the overall computational cost required for training and inference. This is particularly valuable in scenarios where computational resources are limited.

Training separate models for each task requires redundant computation as each model independently learns task-specific features and representations. In contrast, Multi-Task Learning models learn shared representations that capture common information between tasks. This enables the model to leverage existing knowledge and avoid redundant computation, resulting in improved data efficiency and reduced training time.
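As a rough illustration of the savings, the sketch below counts weights and biases for one fully connected network shared across tasks versus one full network per task. The layer sizes and the three-task setup are made-up numbers chosen for illustration, not figures from any benchmark.

```python
def dense_params(sizes):
    """Count weights and biases in a fully connected stack with the given layer sizes."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))

# Hypothetical sizes: 128 input features, two hidden layers, 10 outputs per task.
trunk = [128, 256, 256]   # hidden layers (shared in the multi-task case)
head = [256, 10]          # task-specific output layer
num_tasks = 3

# One complete network per task: the trunk is duplicated for every task.
separate = num_tasks * (dense_params(trunk) + dense_params(head))
# One shared trunk plus a small head per task.
shared = dense_params(trunk) + num_tasks * dense_params(head)

print(separate, shared)
```

With these assumed sizes, the shared model holds roughly a third of the parameters of the three separate models, because the trunk is stored and updated only once.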

Furthermore, deploying a Multi-Task Learning model requires managing a single model instead of maintaining and updating multiple individual models. This leads to operational efficiency and simplifies the deployment process in real-world applications.

When should Multi-Task Learning be used?

Multi-Task Learning (MTL) is a powerful technique that can greatly benefit certain types of problems. It is particularly effective when the tasks being solved are related and have an inherent correlation. By jointly training a model on multiple related tasks, MTL offers several potential advantages.

**Joint training** of multiple tasks in MTL can lead to improved **prediction accuracy**. By leveraging shared representations and transferring knowledge between tasks, the model can learn more robust and generalized features that enhance its predictive capabilities. This allows for a more comprehensive understanding of the underlying relationships and dependencies among the tasks.

Another significant advantage of MTL is **data efficiency**. When multiple tasks are learned simultaneously, the model can use shared information and extract common patterns across different datasets. As a result, it can often reach good performance even with limited labeled training data for each individual task. MTL can reduce the need to collect and label large amounts of task-specific data, saving time and cost.

MTL also contributes to **reduced training time**. Instead of training separate models for each task, MTL trains all tasks jointly in a single model. This avoids redundant computation and redundant model parameters, which can lead to faster convergence and a shorter overall training run. Sharing hidden layers and model parameters across tasks promotes information transfer and improves learning efficiency.

However, it is essential to approach MTL with caution. The **quality of predictions** may suffer if the tasks have conflicting objectives or if one task dominates the learning process. Conflicting objectives can undermine the effectiveness of the shared representations, leading to suboptimal performance on individual tasks. Additionally, if one task is significantly easier or more straightforward than the others, it may overshadow the learning of other tasks, negatively impacting the overall performance of the model.

Therefore, before deciding to implement MTL, careful consideration should be given to the relationship between the tasks and their compatibility. Evaluating the interdependencies, synergies, and potential conflicts will help determine whether MTL is the appropriate approach for the given problem and lead to the desired improvements in prediction accuracy and data efficiency.

Optimization Methods for Multi-Task Learning

Multi-Task Learning (MTL) employs various optimization methods to maximize its effectiveness in training neural networks to perform multiple tasks simultaneously. These methods help in achieving efficient learning and improving overall model performance.

Loss Construction

Loss construction plays a crucial role in Multi-Task Learning. It involves balancing the individual loss functions for each task using different weighting schemes. By assigning different weights to each task’s loss function, the model can prioritize certain tasks and adjust the overall optimization process accordingly. This approach ensures that each task contributes appropriately to the shared learning process, enhancing the model’s performance on all tasks.
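A minimal sketch of the simplest weighting scheme, a fixed weighted sum of per-task losses, is shown below. The task names, loss values, and weights are hypothetical, and fixed weights are only one option; schemes that adapt the weights during training are not shown.

```python
def combine_losses(task_losses, task_weights):
    """Weighted sum of per-task losses; both arguments are dicts keyed by task name."""
    missing = set(task_losses) - set(task_weights)
    if missing:
        raise KeyError(f"no weight given for tasks: {sorted(missing)}")
    return sum(task_weights[name] * loss for name, loss in task_losses.items())

# Hypothetical per-task losses from one training step.
losses = {"classification": 0.9, "depth": 4.0}
# Down-weight the depth loss so its larger scale does not dominate the update.
weights = {"classification": 1.0, "depth": 0.1}

total = combine_losses(losses, weights)
```

The single scalar `total` is what the optimizer would minimize, so the weights directly control how much each task's gradient influences the shared parameters.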

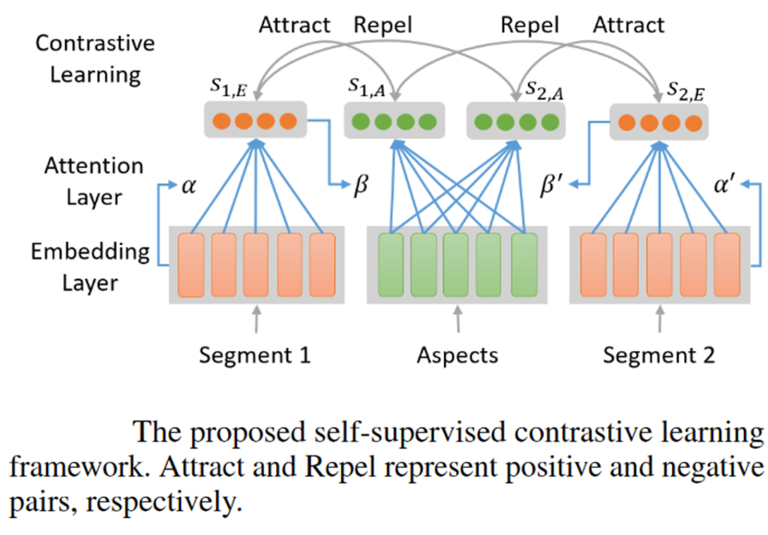

Hard Parameter Sharing

In Multi-Task Learning, hard parameter sharing is a technique where the hidden layers of the neural network are shared among all tasks, while each task keeps its own output layers. Sharing the hidden layers allows the model to learn the underlying representations common to all tasks. The shared layers capture features and patterns that are transferable across tasks, enabling the model to leverage this shared knowledge to improve performance on individual tasks.
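The structure can be sketched in plain Python as one shared hidden layer feeding two task-specific output layers. The layer sizes, task names, and random initialization are assumptions for illustration; a real model would use a deep learning framework and trained weights.

```python
import random

def linear(x, weights, bias):
    """Apply a dense layer: one output value per row of `weights`."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def relu(x):
    return [max(0.0, v) for v in x]

def init_layer(n_in, n_out, rng):
    """Random weights and zero biases for a dense layer (untrained, illustration only)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

rng = random.Random(0)
shared = init_layer(4, 8, rng)              # hidden layer used by every task
heads = {                                   # one output layer per task
    "classification": init_layer(8, 3, rng),
    "regression": init_layer(8, 1, rng),
}

def forward(x):
    hidden = relu(linear(x, *shared))       # computed once, reused by all heads
    return {task: linear(hidden, *params) for task, params in heads.items()}

outputs = forward([0.2, -1.0, 0.5, 0.3])
```

During training, gradients from every task's loss would flow back into `shared`, while each entry in `heads` is updated only by its own task.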

Soft Parameter Sharing

Soft parameter sharing is another optimization method used in Multi-Task Learning. Here each task has its own model with its own parameters, and a regularization term penalizes the distance between the corresponding parameters of the different models. The penalty pulls the models toward similar weights, which encourages them to learn representations that are common to multiple tasks while still allowing task-specific differences. By doing so, the models become more robust and generalize better across tasks, utilizing the shared knowledge effectively.
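One common form of this regularizer is a squared L2 distance between matching parameters, sketched below for two models. The weight values and the `strength` coefficient are hypothetical, and the squared L2 distance is an assumed choice of distance measure.

```python
def soft_sharing_penalty(params_a, params_b, strength):
    """Squared L2 distance between corresponding parameters, scaled by `strength`."""
    if len(params_a) != len(params_b):
        raise ValueError("the two models must have matching parameter shapes")
    return strength * sum((a - b) ** 2 for a, b in zip(params_a, params_b))

# Hypothetical flattened weights of the same layer in two task-specific models.
task_a_weights = [0.5, -1.0, 2.0]
task_b_weights = [0.0, -1.0, 1.0]

# This term would be added to the sum of both task losses during training.
penalty = soft_sharing_penalty(task_a_weights, task_b_weights, strength=0.1)
```

A larger `strength` pushes the two models closer together; in the limit of a very large value the setup behaves much like hard parameter sharing for that layer.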

Data Sampling

Data sampling techniques play a crucial role in Multi-Task Learning, especially when dealing with imbalanced data distributions across tasks. These techniques ensure that each task receives appropriate training samples, accounting for the differences in data sizes and distributions. By carefully sampling data from each task, the model can learn from all tasks equally, effectively leveraging the available information to improve performance.
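One way to handle differing dataset sizes is temperature-based sampling, where a task is drawn with probability proportional to its dataset size raised to `1 / temperature`. The sketch below assumes that scheme; the dataset sizes, task names, and temperature values are hypothetical.

```python
import random

def sampling_probs(dataset_sizes, temperature=1.0):
    """Per-task sampling probabilities proportional to size ** (1 / temperature)."""
    scaled = {task: n ** (1.0 / temperature) for task, n in dataset_sizes.items()}
    total = sum(scaled.values())
    return {task: s / total for task, s in scaled.items()}

sizes = {"large_task": 10_000, "small_task": 100}   # hypothetical dataset sizes

# temperature=1 samples in proportion to size; higher values flatten the distribution.
proportional = sampling_probs(sizes, temperature=1.0)
flattened = sampling_probs(sizes, temperature=2.0)

# Choose which task each of the next 8 batches is drawn from.
rng = random.Random(0)
tasks = list(flattened)
batch_tasks = rng.choices(tasks, weights=[flattened[t] for t in tasks], k=8)
```

With these assumed sizes, the small task is sampled about 1% of the time under proportional sampling and about 9% of the time at `temperature=2.0`, so it is seen more often without being sampled as frequently as the large task.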

Task Scheduling

Intelligent task scheduling methods determine which tasks to train on in each epoch of Multi-Task Learning. By carefully selecting the tasks to train on at different stages, the model can optimize the learning process. Task scheduling helps balance the training of different tasks, ensuring that important tasks receive sufficient attention and resources during training. This approach improves the model’s ability to generalize across tasks and makes the learning process more efficient.
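The simplest case is a fixed schedule, sketched below, in which each task is trained at a set interval of epochs. The task names and intervals are hypothetical, and adaptive schedulers that react to training progress are not shown.

```python
def tasks_for_epoch(epoch, every_n_epochs):
    """Return the tasks to train in `epoch`; a task with interval n runs when epoch % n == 0."""
    return [task for task, n in every_n_epochs.items() if epoch % n == 0]

# Hypothetical schedule: the main task trains every epoch, the auxiliary one every third.
schedule = {"main_task": 1, "auxiliary_task": 3}

plan = [tasks_for_epoch(epoch, schedule) for epoch in range(4)]
```

Here `plan` lists both tasks for epochs 0 and 3 and only `main_task` for epochs 1 and 2, so the main task receives most of the training budget while the auxiliary task still contributes periodically.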

By employing these optimization methods, Multi-Task Learning allows for efficient and effective joint training of multiple tasks. The combination of loss construction, hard parameter sharing, soft parameter sharing, data sampling, and task scheduling contributes to improved performance, enhanced data efficiency, and better utilization of shared knowledge across tasks.

Practical Applications of Multi-Task Learning

Multi-Task Learning (MTL) has emerged as a powerful technique with a wide range of practical applications in the fields of computer vision and natural language processing. By leveraging the benefits of shared representations, MTL models have demonstrated significant improvements in performance and efficiency across multiple related tasks.

Computer Vision

In computer vision, MTL has been successfully employed in various tasks, including:

- Object Detection: MTL models can jointly learn to classify objects and localize them with bounding boxes from shared features, improving accuracy and reducing computational costs.

- Image Segmentation: By utilizing shared representations, MTL models can accurately segment different regions in an image, enabling more precise analysis and understanding of visual content.

- Depth Estimation: MTL models can predict depth information from 2D images, enhancing the understanding of spatial relationships and enabling applications such as augmented reality and autonomous driving.

Through shared representations, MTL models in computer vision can effectively leverage knowledge learned from multiple tasks to enhance performance and generalization capabilities.

Natural Language Processing

Multi-Task Learning has also shown significant value in the field of natural language processing, where it has been applied to tasks such as:

- Machine Translation: MTL models can learn from multiple translation tasks, improving the quality and accuracy of translation across different language pairs.

- Sentiment Analysis: By jointly training on different sentiment analysis tasks, MTL models can capture a broader understanding of human emotions and sentiment, leading to more nuanced and accurate sentiment analysis results.

- Language Understanding: MTL models can benefit from shared knowledge acquired from various language-related tasks, facilitating better comprehension, question answering, and document classification.

Multi-Task Learning in natural language processing enables models to acquire a deeper understanding of language by leveraging the shared information learned from diverse linguistic tasks.

In summary, Multi-Task Learning offers practical applications and performance improvements in computer vision and natural language processing domains. By effectively utilizing shared representations, MTL models exhibit enhanced performance, increased efficiency, and improved generalization capabilities in a diverse range of tasks.

Conclusion

Multi-Task Learning Neural Networks offer a promising approach to enhancing efficiency and performance in AI systems. By jointly optimizing multiple tasks, Multi-Task Learning enables the transfer of knowledge and information between related tasks, leading to improved decision-making and model performance.

The shared representations and transferable skills learned by Multi-Task Learning models contribute to faster convergence, improved data efficiency, and reduced computational costs. By leveraging the similarities and relationships between tasks, Multi-Task Learning enhances the learning process, allowing AI systems to achieve better results in less time.

Integrating Multi-Task Learning into the architecture of AI systems can offer substantial benefits when the tasks are related and compatible. It not only improves efficiency and performance but also allows AI models to learn from a broader range of experiences and tasks, which enhances the model’s ability to generalize and adapt to new challenges.

FAQ

What is Multi-Task Learning?

Multi-Task Learning is a technique in Artificial Intelligence that trains a single shared machine learning model to perform multiple tasks simultaneously.

How does a Multi-Task Learning model work?

A Multi-Task Learning model is a single machine learning model that can handle multiple tasks at once by utilizing shared representations in its hidden layers.

When should Multi-Task Learning be used?

Multi-Task Learning is most effective when the tasks being solved are related and have some inherent correlation.

What are the optimization methods for Multi-Task Learning?

Optimization methods for Multi-Task Learning include loss construction, hard parameter sharing, soft parameter sharing, data sampling, and task scheduling.

What are the practical applications of Multi-Task Learning?

Multi-Task Learning has been applied in computer vision and natural language processing, among other fields.