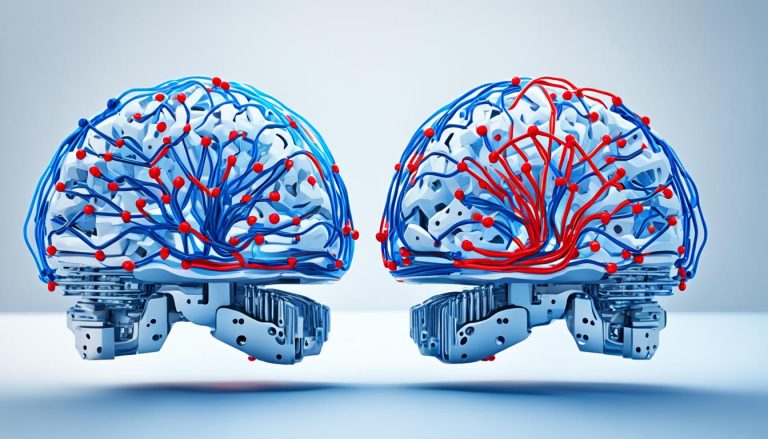

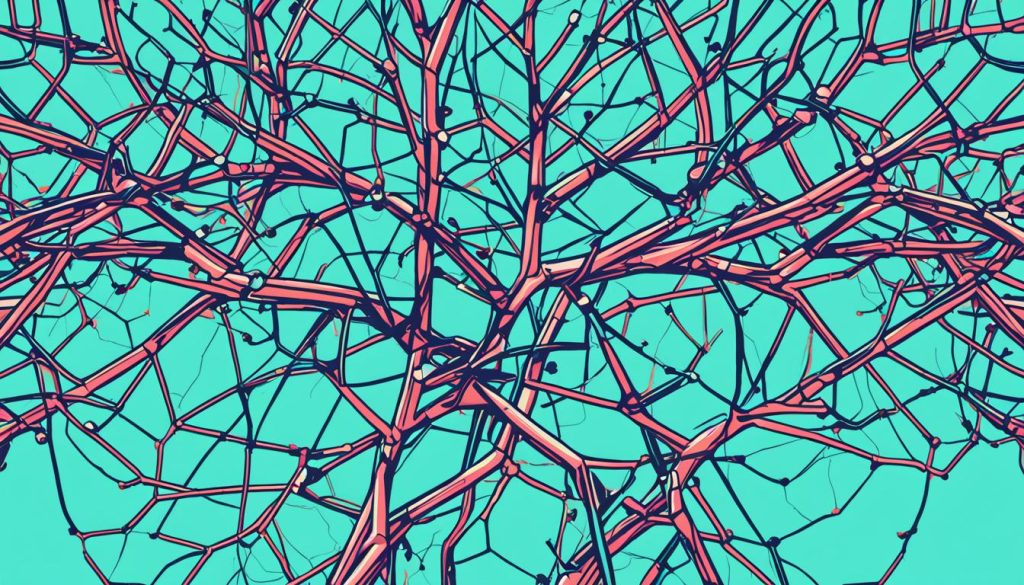

Neural network pruning is a crucial technique in optimizing AI models for efficiency, speed, and resource usage. By selectively removing unnecessary connections, weights, or neurons, pruning streamlines neural networks, leading to smaller, faster, and more energy-efficient models.

As deep learning models grow more complex, the computational cost of training and inference rises sharply. Pruning addresses this by reducing a model’s size and complexity, often with little or no loss in accuracy once the pruned network has been fine-tuned.

Removing redundant connections, weights, or neurons lowers both the amount of computation and the memory footprint. This makes pruned networks well suited to real-time inference and to deployment on low-power devices such as smartphones.

There are different approaches to pruning, such as weight pruning and neuron pruning, each with its own advantages and challenges. A typical pruning process ranks weights or neurons by an importance score, removes the least important ones, and then fine-tunes the network to recover any lost accuracy.
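The rank, remove, and fine-tune cycle can be sketched on a toy problem. The example below is only an illustration under stated assumptions: it uses a linear model in place of a neural network, treats each weight as the unit being ranked, uses weight magnitude as the importance score, and drops half of the surviving weights per round. The data, the `train` helper, and all hyperparameters are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data (hypothetical): 10 input features, only the first 3 matter.
X = rng.normal(size=(200, 10))
true_w = np.zeros(10)
true_w[:3] = [2.0, -3.0, 1.5]
y = X @ true_w + 0.01 * rng.normal(size=200)


def train(w, mask, steps=200, lr=0.1):
    """Gradient descent on mean squared error; pruned weights stay at zero."""
    for _ in range(steps):
        grad = 2 * X.T @ (X @ w - y) / len(y)
        w = (w - lr * grad) * mask
    return w


mask = np.ones(10)
w = train(np.zeros(10), mask)  # initial dense training

for _ in range(2):  # two prune / fine-tune rounds
    alive = np.flatnonzero(mask)
    # Rank surviving weights by magnitude (assumed importance score)
    # and drop the weakest half, rounded down.
    n_drop = len(alive) // 2
    drop = alive[np.argsort(np.abs(w[alive]))[:n_drop]]
    mask[drop] = 0.0
    w = train(w * mask, mask)  # fine-tune the survivors

print("surviving weights:", np.flatnonzero(mask))
print("final MSE:", np.mean((X @ w - y) ** 2))
```

Pruning in several small rounds, with fine-tuning in between, gives the remaining weights a chance to compensate before the next cut. Real networks follow the same loop with a proper training framework and a validation set to decide when to stop.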

Pruning can also help generalization: a smaller model has less capacity to overfit, which in some cases improves performance on unseen data. As the field of AI continues to advance, pruning remains an essential tool for building efficient models.

The Importance of Neural Network Pruning

As deep learning models continue to grow in size and complexity, the computational requirements for training and inference skyrocket. This presents a significant challenge in terms of memory usage, computational efficiency, and energy consumption. However, there is a powerful technique that can address these issues and optimize neural networks: neural network pruning.

Neural network pruning selectively removes unnecessary parts of the network while aiming to preserve its accuracy. By eliminating redundant connections, weights, or neurons, pruning can reduce the model’s memory footprint, decrease computational requirements, and lower energy consumption. This leads to more efficient neural networks and enables faster inference and deployment on resource-constrained devices, such as smartphones and IoT devices.

Another benefit of neural network pruning is that it can act as a form of regularization, which helps prevent overfitting. With fewer parameters, the model is less complex and less able to memorize noise and outliers in the training data. When pruning is not too aggressive, this can improve generalization, allowing the model to make accurate predictions on unseen data.

Neural network pruning offers a unique balance between model complexity and computational efficiency, making it an essential technique in the field of AI optimization.

With the importance of computational efficiency and performance optimization in AI, neural network pruning is becoming increasingly crucial. It allows for the creation of smaller, faster, and more energy-efficient models, making them suitable for real-time inference and deployment on edge devices. Pruning not only improves the efficiency of neural networks but also enhances their ability to generalize and make accurate predictions. As the field of AI continues to evolve, the role of neural network pruning in achieving optimal performance cannot be overstated.

By leveraging the benefits of neural network pruning, AI practitioners can unlock the full potential of their models while ensuring computational efficiency and performance optimization.

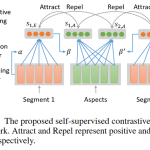

Techniques of Neural Network Pruning

Reducing the size and complexity of neural network models can be achieved through various pruning techniques. These techniques provide effective methods for optimizing AI models and improving their efficiency. Let’s explore the different pruning techniques and their benefits.

1. Weight Pruning

Weight pruning removes individual connections by setting their weights to zero, most commonly those with the smallest magnitude. Because such weights usually contribute little to the output, the network can become much sparser with minimal impact on accuracy. The zeroed weights reduce memory footprint once the model is stored in a sparse format, and they reduce computation when the runtime or hardware can skip zeros.
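A minimal sketch of magnitude-based weight pruning, assuming the weights of one layer are available as a NumPy array. The function name `magnitude_prune` and the `sparsity` parameter are illustrative choices, not part of any particular library.

```python
import numpy as np


def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude entries of `weights`.

    `sparsity` is the fraction of weights to remove, in [0, 1).
    Returns the pruned copy and a boolean mask of surviving weights.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(round(sparsity * weights.size))  # number of weights to zero
    flat_mask = np.ones(weights.size, dtype=bool)
    if k > 0:
        # Flat indices of the k smallest |w| (order among them is arbitrary).
        drop = np.argpartition(np.abs(weights).ravel(), k - 1)[:k]
        flat_mask[drop] = False
    mask = flat_mask.reshape(weights.shape)
    return np.where(mask, weights, 0.0), mask


W = np.array([[0.9, -0.05, 0.3],
              [0.01, -0.7, 0.2]])
pruned, mask = magnitude_prune(W, sparsity=0.5)
print(pruned)
# [[ 0.9  0.   0.3]
#  [ 0.  -0.7  0. ]]
```

The mask is returned alongside the pruned weights so that it can be reapplied after each update during fine-tuning, which keeps removed connections at zero.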

2. Neuron Pruning

Neuron pruning takes a more aggressive approach by removing entire neurons together with all of their incoming and outgoing connections. Each removed neuron shrinks the weight matrices on both sides of it, so the result is a smaller dense model that runs faster without any special sparse support. This makes neuron pruning especially effective for resource-constrained applications.
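The following sketch shows what removing hidden neurons means for the weight matrices of a two-layer fully connected network. It assumes a layout of `W1` with shape (hidden, inputs) and `W2` with shape (outputs, hidden), and it scores neurons by the L2 norm of their incoming weights, which is only one of several possible importance criteria. The helper `prune_neurons` is hypothetical.

```python
import numpy as np


def prune_neurons(W1, b1, W2, keep):
    """Keep the `keep` highest-scoring hidden neurons of a two-layer MLP.

    W1: (hidden, inputs), b1: (hidden,), W2: (outputs, hidden).
    """
    if not 1 <= keep <= W1.shape[0]:
        raise ValueError("keep must be between 1 and the number of hidden neurons")
    # Assumed importance score: L2 norm of each neuron's incoming weights.
    scores = np.linalg.norm(W1, axis=1)
    # Indices of the surviving neurons, restored to their original order.
    kept = np.sort(np.argsort(scores)[-keep:])
    # Drop rows of W1 and b1 (incoming side) and columns of W2 (outgoing side).
    return W1[kept], b1[kept], W2[:, kept], kept


W1 = np.array([[1.0, 2.0],
               [0.01, 0.02],
               [0.5, -0.5],
               [0.0, 0.1]])
b1 = np.zeros(4)
W2 = np.ones((3, 4))

W1_p, b1_p, W2_p, kept = prune_neurons(W1, b1, W2, keep=2)
print(kept)                    # [0 2]
print(W1_p.shape, W2_p.shape)  # (2, 2) (3, 2)
```

Both layers become physically smaller, which is why this kind of pruning speeds up ordinary dense matrix multiplication.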

3. Structured Pruning

Structured pruning removes whole groups of parameters rather than individual weights. For example, in convolutional layers, entire filters can be removed, which also removes the matching input channels of the following layer. Because the pruned layers stay dense and simply become narrower, the model keeps a regular structure that standard hardware and libraries execute efficiently, while still achieving a significant size reduction.
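As a rough illustration of filter pruning, the sketch below ranks the filters of one convolutional layer by their L1 norm and keeps the strongest ones. It assumes weights stored as (out_channels, in_channels, height, width) and a directly following convolution whose input channels must be trimmed to match. The function `prune_filters` and the L1 criterion are assumptions made for this example.

```python
import numpy as np


def prune_filters(conv_w, next_conv_w, keep):
    """Keep the `keep` filters of `conv_w` with the largest L1 norm.

    conv_w:      (out_channels, in_channels, kH, kW)
    next_conv_w: (next_out, out_channels, kH, kW), the following layer
    """
    if not 1 <= keep <= conv_w.shape[0]:
        raise ValueError("keep must be between 1 and the number of filters")
    l1 = np.abs(conv_w).sum(axis=(1, 2, 3))  # one score per filter
    kept = np.sort(np.argsort(l1)[-keep:])   # surviving filters, original order
    # Remove filters here and the matching input channels downstream.
    return conv_w[kept], next_conv_w[:, kept], kept


rng = np.random.default_rng(0)
conv_w = rng.normal(size=(8, 3, 3, 3))
conv_w[[1, 5]] *= 0.01                        # make two filters nearly zero
next_conv_w = rng.normal(size=(16, 8, 3, 3))

conv_p, next_p, kept = prune_filters(conv_w, next_conv_w, keep=6)
print(kept)                        # [0 2 3 4 6 7]
print(conv_p.shape, next_p.shape)  # (6, 3, 3, 3) (16, 6, 3, 3)
```

In a real network, any layer between the two convolutions that has per-channel parameters, such as batch normalization, would need the same channels removed.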

4. Sparse Pruning

Sparse pruning targets a chosen level of sparsity, that is, a chosen fraction of weights equal to zero. A sparse weight matrix can be stored compactly by keeping only its nonzero values and their positions, and multiplications by zero can be skipped, so both memory use and computation can drop. The gains depend on the sparsity level and on how well the hardware and libraries handle sparse operations. Sparse pruning strikes a balance between model size reduction and preserving crucial connections.
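To make the storage argument concrete, the sketch below prunes a matrix to roughly 90% sparsity, stores it in a simple coordinate (COO) layout, and compares byte counts. The helpers `sparsity`, `to_coo`, and `coo_matvec` are written for this example only; production code would normally rely on a sparse matrix library instead.

```python
import numpy as np


def sparsity(weights):
    """Fraction of entries that are exactly zero."""
    return 1.0 - np.count_nonzero(weights) / weights.size


def to_coo(weights):
    """Store only nonzero values plus their row and column indices."""
    rows, cols = np.nonzero(weights)
    return rows.astype(np.int32), cols.astype(np.int32), weights[rows, cols]


def coo_matvec(rows, cols, vals, x, n_rows):
    """Compute y = W @ x while touching only the nonzero entries of W."""
    y = np.zeros(n_rows, dtype=vals.dtype)
    np.add.at(y, rows, vals * x[cols])  # accumulate per-row contributions
    return y


rng = np.random.default_rng(0)
W = rng.normal(size=(256, 256)).astype(np.float32)
threshold = np.quantile(np.abs(W), 0.9)   # target: about 90% zeros
W[np.abs(W) < threshold] = 0.0

rows, cols, vals = to_coo(W)
dense_bytes = W.nbytes
coo_bytes = rows.nbytes + cols.nbytes + vals.nbytes
x = rng.normal(size=256).astype(np.float32)

print(f"sparsity: {sparsity(W):.3f}")
print(f"dense: {dense_bytes} bytes, COO: {coo_bytes} bytes")
print("results match:", np.allclose(coo_matvec(rows, cols, vals, x, 256), W @ x, atol=1e-4))
```

Each stored nonzero costs an index pair in addition to its value, so this layout only pays off above a certain sparsity level. With 32-bit values and indices, the break-even point for COO is around two thirds zeros.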

Each pruning technique offers unique advantages and considerations, providing a range of options for optimizing neural network models. The choice of pruning technique depends on the specific requirements of the application and the underlying hardware architecture.

By utilizing these pruning techniques, AI developers can create compact, efficient, and high-performance models that are well-suited for deployment in various scenarios.


Pruning for Improved Model Generalization

Pruning can improve the generalization capability of neural networks by reducing overfitting. When selective pruning lowers the complexity of the model, the network becomes less prone to memorizing noise and outliers in the training data. This regularization effect encourages the network to rely on the most relevant features, which can lead to better generalization and more accurate predictions on unseen data.

One of the key advantages of pruning for generalization is its ability to remove unnecessary connections, weights, or neurons that may contribute to overfitting. By pruning these less important elements, the network can prioritize the most useful information, allowing it to generalize better to new examples. This process helps prevent the network from becoming too specific to the training data, making it more robust and adaptable in real-world scenarios.

Furthermore, pruning enables a balance between model complexity and efficiency. By reducing the number of parameters and connections within the network, pruning enhances computational efficiency, making neural networks more suitable for deployment on resource-constrained devices. This optimization not only improves performance but also reduces memory footprint and energy consumption.

“Pruning has proven to be an effective technique for enhancing model generalization and preventing overfitting. By removing unnecessary components, pruning allows the network to focus on extracting the most relevant features, leading to improved performance on unseen data.” – Dr. Jane Mitchell, AI Researcher at DeepTech Labs

Overall, pruning for generalization is a critical step in the optimization of neural network models. It enhances the network’s ability to generalize from the training data to unseen data, preventing overfitting and improving overall performance. By striking a balance between complexity and efficiency, pruning not only enables better generalization but also enhances computational efficiency, making it a valuable technique in the field of AI.

Conclusion

Neural network pruning is a vital technique in optimizing AI models for efficiency, speed, and resource usage. By selectively removing unnecessary connections, weights, or neurons, pruning enables the creation of smaller, faster, and more energy-efficient neural networks. These optimized networks are well-suited for real-time inference and deployment on edge devices. The importance of neural network pruning in achieving optimal AI efficiency cannot be overstated.

One of the key benefits of pruning is its ability to reduce computational requirements, memory footprint, and energy consumption. This makes it an invaluable technique for deploying AI models on low-power devices like smartphones. Pruning also plays a crucial role in enhancing model generalization by preventing overfitting and improving performance on unseen data. By reducing the complexity of the model, pruning enables the network to make accurate predictions on new data, leading to better overall performance.

As the field of AI continues to advance, the need for optimization becomes increasingly important. Neural network pruning offers a powerful solution in this regard. By implementing pruning techniques, AI practitioners can create efficient, streamlined models that balance complexity with performance. With its ability to create smaller, faster, and more resource-efficient neural networks, pruning is an essential tool for harnessing the full potential of AI.

FAQ

What is neural network pruning?

Neural network pruning is a method of reducing the size and complexity of neural networks to improve their efficiency and performance. It involves removing unnecessary connections, weights, or neurons to streamline models for optimal speed and resource usage.

Why is neural network pruning important?

Neural network pruning is important because it helps optimize AI models for efficiency, speed, and resource usage. By selectively removing unnecessary parts of the network, pruning reduces computational requirements, memory footprint, and energy consumption, making neural networks suitable for real-time inference and deployment on edge devices.

What are the different techniques of neural network pruning?

There are various techniques for neural network pruning, including weight pruning, neuron pruning, structured pruning, and sparse pruning. Each technique has its advantages and considerations, and the choice depends on the specific requirements of the application and hardware architecture.

How does neural network pruning improve model generalization?

Pruning has a regularization effect on neural networks, helping prevent overfitting and improve the model’s ability to generalize from the training data to unseen data. By reducing the model’s complexity, pruning enhances its ability to make accurate predictions on unseen data and improves overall performance.

What is the conclusion regarding neural network pruning?

Neural network pruning is a vital technique in optimizing AI models for efficiency. It enables the creation of smaller, faster, and more energy-efficient neural networks, making them suitable for real-time inference and deployment on edge devices. Pruning also improves model generalization and prevents overfitting, leading to better performance on unseen data.