Welcome to our article on Transformer Neural Networks and their impact on sequence-to-sequence learning in the field of AI. With their advanced natural language processing capabilities, transformers have reshaped the landscape of sequence tasks, from language processing to bioinformatics.

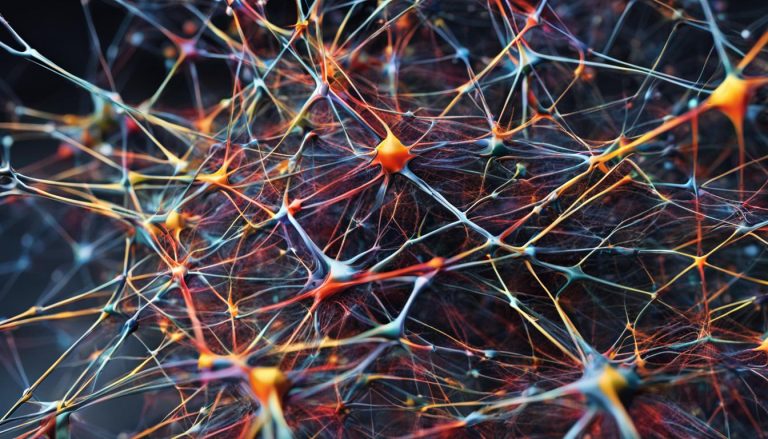

Transformers, built on the foundation of self-attention, have emerged as a game-changer for sequence tasks. Unlike traditional models, which process elements one at a time, transformers process all elements simultaneously, capturing intricate relationships and long-range dependencies. This approach has propelled transformers to the forefront of natural language processing, computer vision, speech recognition, and beyond.

However, it’s essential to note that the power of transformers comes with its challenges. The parallel processing demands of transformers require significant computational resources, and the complexity of their architecture can hinder interpretability. Nonetheless, ongoing research aims to optimize efficiency and interpretability, paving the way for the future development of transformers.

In this article, we look at applications of transformers in natural language processing, computer vision, and speech recognition, as well as their expanding presence in fields such as protein structure prediction, drug discovery, and music generation.

Join us on this exciting journey as we explore the impressive capabilities, challenges, and the bright future that lies ahead for transformers in revolutionizing sequence-to-sequence learning and the world of AI.

The Power of Transformers in Natural Language Processing

Transformers have revolutionized Natural Language Processing (NLP) by enabling breakthroughs in a variety of tasks, from translation and summarization to question answering (QA) and sentiment analysis. Their ability to capture linguistic nuance and long-range context has made them indispensable in these areas.

When it comes to translation, transformers excel in delivering accurate and context-aware results. By leveraging their self-attention mechanism, they can understand the entire sentence to ensure the translated output maintains fluency and meaning. This has significantly advanced machine translation systems across different languages, making communication more accessible and efficient.

Summarization, another key NLP task, involves condensing lengthy texts into concise and informative summaries. Transformers have shown remarkable prowess in summarization, extracting key information and conveying it effectively. Their ability to capture both local and global dependencies allows for a more comprehensive understanding of the source text, resulting in high-quality summaries.

“Transformers have proven to be a game-changer in the field of NLP. Their ability to grasp the intricacies of language and consider long-range context has paved the way for more accurate and powerful models.” – Dr. Hannah Chen, NLP Researcher.

Question answering systems powered by transformers have also seen significant advancements. By comprehending the context and the question itself, transformers can provide precise and relevant answers, mimicking human-like comprehension. This has led to improvements in various QA applications, including customer support chatbots and intelligent search engines.

Sentiment analysis, which involves determining the emotional tone of a piece of text, has greatly benefited from transformer models. Transformers can effectively capture the sentiment expressed in text by considering the surrounding words and sentences, allowing for more accurate sentiment classification. Businesses can leverage this technology to understand customer feedback, monitor brand reputation, and make data-driven decisions.

The power of transformers in NLP lies in their ability to capture and encode long-range context, allowing them to comprehend the subtle nuances of language. By capturing the dependencies between words across the entire sequence, transformers enable more accurate and context-aware language understanding. This makes them pivotal in driving advancements in Natural Language Processing.

Long-Range Context in Transformers

Long-range context refers to the ability of transformers to consider dependencies and relationships between words that are more distant from each other within a sentence or document. Unlike traditional NLP models that rely on fixed window sizes or local context, transformers can capture dependencies across the entire input sequence through self-attention mechanisms.

Self-attention allows each word to attend to all other words in the sequence, assigning importance based on the relevance and context. This flexible and dynamic attention mechanism enables transformers to capture long-range relationships, resulting in a more comprehensive understanding of the text.
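To make the mechanism concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It rests on simplifying assumptions: the queries, keys, and values are the input vectors themselves (a trained transformer would first apply learned projections), and the two-dimensional embeddings are made up for illustration.

```python
import math

def softmax(scores):
    """Turn a list of raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with identity projections.

    Assumption: queries, keys, and values are the input vectors themselves;
    a trained transformer would apply learned projection matrices first.
    """
    dim = len(vectors[0])
    weights, outputs = [], []
    for query in vectors:
        # Score this position against every position in the sequence.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        row = softmax(scores)
        weights.append(row)
        # The output is a weighted average of all value vectors.
        outputs.append([sum(w * v[i] for w, v in zip(row, vectors))
                        for i in range(dim)])
    return outputs, weights

# Hypothetical 2-dimensional embeddings for a three-token sequence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)
```

Each row of `weights` sums to 1 and describes how strongly one position draws on every other position, which is the "importance" described above.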

By considering long-range context, transformers avoid the limitations imposed by fixed window sizes and can capture dependencies that span beyond a few words. This is particularly advantageous for tasks that require a deep understanding of the input, such as language translation, summarization, and sentiment analysis.
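The contrast with a fixed window can be illustrated with a small sketch. The helper `visible_positions` and the example sentence are hypothetical; the point is only to show which positions a word can draw on under each approach.

```python
def visible_positions(seq_len, position, window=None):
    """Return the positions that `position` can draw on.

    window=None models full self-attention; an integer models a
    fixed-window approach that sees `window` neighbours on each side.
    """
    if window is None:
        return list(range(seq_len))
    start = max(0, position - window)
    stop = min(seq_len, position + window + 1)
    return list(range(start, stop))

sentence = "the keys that I left on the kitchen table are missing".split()
verb = sentence.index("are")      # the verb whose subject we want to find
subject = sentence.index("keys")  # the subject, eight words earlier

local = visible_positions(len(sentence), verb, window=2)
full = visible_positions(len(sentence), verb)

print(subject in local)  # the subject falls outside the two-word window
print(subject in full)   # full attention can reach it
```

With a window of two words, the verb "are" never sees its subject "keys"; with full attention, every position is available.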

With their ability to capture linguistic nuance and long-range context, transformers have emerged as champions in Natural Language Processing. Their transformative capabilities have revolutionized the field, empowering researchers and practitioners to tackle complex language tasks with greater accuracy and efficiency.

Transformers in Computer Vision

Transformers, originally popularized for natural language processing tasks, have also made significant advancements in the field of computer vision. With their attention mechanisms, transformers have proven to be effective in various visual tasks, including image captioning and object detection.

Enhanced Understanding of Visual Scenes

Transformers excel at understanding not just individual objects in images but also the complex interactions within visual scenes. Their attention mechanisms allow them to capture long-range dependencies and intricate relationships between different elements within the image.

Transformers take computer vision to a new level by going beyond simple object detection. They have the ability to comprehend the context and relationships within an image, leading to more accurate and detailed results.

Image Captioning with Transformers

Image captioning, the process of generating textual descriptions for images automatically, has been greatly improved by the use of transformers. By leveraging the attention mechanisms in transformers, models can generate captions that not only describe the objects in the image but also incorporate the relationships and interactions between them.

Transformers provide a more nuanced and contextually aware approach to image captioning, resulting in captions that are more accurate and informative. This is particularly beneficial in applications such as assistive technologies for the visually impaired and content summarization.

Object Detection at Scale

Object detection, a fundamental task in computer vision, involves identifying and localizing objects within an image. Transformers have shown remarkable capabilities in this area, leveraging their attention mechanisms to detect objects even in complex and cluttered scenes.

Thanks to transformers, object detection algorithms can better understand the spatial relationships between objects, leading to more precise and reliable results. This has significant implications in practical applications like autonomous vehicles, surveillance systems, and image analysis.

A Look into the Future

Transformers have opened new possibilities and advancements in computer vision. As research continues to push the boundaries, we can anticipate further enhancements in accuracy, speed, and scalability. The combination of transformers with other state-of-the-art techniques is set to revolutionize computer vision applications and drive innovation in the field.

The Power of Transformers in Computer Vision

| Computer Vision Tasks | Benefits of Transformers |
|---|---|
| Image Captioning | Improved context comprehension; more accurate and informative captions |
| Object Detection | Enhanced spatial understanding; precise localization of objects |

Transformers in Speech Recognition and Synthesis

Transformers have shown enormous promise in the fields of speech recognition and synthesis, revolutionizing the way we interact with spoken language. The ability of transformers to capture spoken language nuances effectively has made them invaluable in these areas, offering significant advancements in the accuracy and naturalness of speech-related applications.

In speech recognition, transformers are employed to convert spoken words into written text with impressive precision. By leveraging their powerful self-attention mechanism, transformers can process audio input at various temporal resolutions and analyze it holistically, capturing both local details and global context. This comprehensive approach enables transformers to accurately transcribe spoken language, even in challenging scenarios where multiple speakers are present or there is background noise.

Furthermore, transformers have also made significant contributions to the field of speech synthesis, enabling the generation of lifelike speech. By leveraging their ability to capture intricate relationships between different elements of the input, transformers produce synthesized speech that exhibits exceptionally natural prosody, intonation, and rhythm. Whether it’s for virtual assistants, audiobooks, or voiceovers, the utilization of transformers in speech synthesis has greatly enhanced the user experience, making interactions with technology more intuitive and human-like.

One notable advantage of transformers in speech recognition and synthesis is their ability to handle diverse languages and accents. The comprehensive modeling of language nuances within the transformer’s architecture allows it to adapt seamlessly to different linguistic patterns and variations, ensuring accurate and contextually appropriate results across a wide range of languages and dialects.

“The integration of transformers into speech recognition and synthesis has brought forth groundbreaking advancements in the field. The ability to capture spoken language nuances effectively paves the way for more accurate and natural interactions between humans and technology.”

Transformers in speech recognition and synthesis hold immense potential for applications in various industries. They can play a crucial role in improving transcription services, voice assistants, voice-controlled applications, and automated customer service systems. Additionally, they can aid in the development of assistive technologies for individuals with speech impairments, enabling them to communicate more efficiently and independently.

Advantages of Transformers in Speech Recognition and Synthesis

- Accurate transcription of spoken language, even in complex scenarios

- Generation of lifelike and natural-sounding speech

- Ability to handle diverse languages and accents

- Improved user experience in voice-controlled applications and virtual assistants

- Potential for assistive technologies for individuals with speech impairments

The application of transformers in speech recognition and synthesis continues to drive advancements in the field, attracting significant attention from researchers and industry professionals alike. Ongoing research and development aim to address the challenges associated with resource-intensive parallel processing and enhance the interpretability of transformer models, further expanding their capabilities and unleashing their full potential in transforming spoken language processing.

| Advantages | Challenges |
|---|---|
| Accurate transcription of spoken language | Resource-intensive parallel processing |
| Natural-sounding speech synthesis | Complexity hindering interpretability |
| Ability to handle diverse languages and accents | |
| Improved user experience in voice-controlled applications | |
| Potential for assistive technologies | |

Transformers Beyond Seq2Seq: Diverse Applications

The power of transformers extends far beyond their traditional role in sequence-to-sequence (Seq2Seq) learning. These innovative models have found diverse applications in domains ranging from biology to music. Let’s explore some of these exciting use cases where transformers have showcased their versatility and adaptability.

Protein Structure Prediction

One remarkable application of transformers is in protein structure prediction. By leveraging their ability to capture intricate relationships within protein sequences, transformers enable accurate modeling and prediction of protein structures. This breakthrough has significant implications for drug design and development, as understanding protein structures is crucial for identifying potential targets and designing effective therapeutic interventions.

Drug Discovery

In the realm of drug discovery, transformers have emerged as powerful tools for analyzing and predicting the interactions between small molecules and proteins. Their ability to process and understand complex molecular features enables more efficient screening of potential drug candidates. By leveraging transformers in drug discovery, researchers can accelerate the identification of novel compounds and improve the efficiency of the drug development process.

Music Generation

Transformers have also made their mark in the realm of music generation. By analyzing patterns and sequences within existing musical compositions, transformers can generate new melodies, harmonies, and musical expressions. This ability to create original pieces of music holds immense potential for the music industry, opening new avenues for creativity and exploration.

Transformers have ventured beyond traditional sequence tasks and found applications in diverse domains, such as protein structure prediction, drug discovery, and music generation. This showcases their versatility and adaptability across different fields.

The versatility of transformers extends beyond the examples mentioned above. Their unique architecture and capabilities have sparked interest and research in various other areas, including natural language understanding, recommendation systems, and time series analysis. As transformers continue to evolve, their potential applications are only limited by our imagination.

Examples of Transformers Beyond Seq2Seq

| Domain | Application |
|---|---|
| Biology | Protein structure prediction |
| Pharmaceuticals | Drug discovery |
| Musical Composition | Music generation |

As we explore the versatility of transformers, it becomes evident that these models are not confined to one particular field. Their ability to capture complex relationships and patterns combined with their adaptability make them valuable assets across diverse domains. The future of transformers promises continued innovation and groundbreaking applications, making them a transformative force in the world of AI.

Challenges and Future of Transformers

While transformers offer transformative capabilities, they also come with challenges. Let’s explore the challenges of transformers, including their parallel processing demands, complexity, and future developments in this field.

Parallel Processing Demands

Transformers, with their attention-based architecture, process information efficiently by considering all elements simultaneously. However, this parallel processing capability comes with a trade-off: the demand for significant computational resources. Because self-attention relates every element to every other element, the number of calculations grows rapidly as sequences get longer, and this can become a bottleneck that limits where transformers can be used.
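A back-of-the-envelope sketch shows how quickly this cost grows. It assumes standard (dense) self-attention, where each position is scored against every other position, and 4-byte scores; the function names and the sequence lengths are illustrative choices, not measurements from a real model.

```python
def attention_score_count(seq_len, num_heads=1, num_layers=1):
    """Pairwise attention scores computed in one forward pass."""
    return seq_len * seq_len * num_heads * num_layers

def score_memory_mib(seq_len, bytes_per_score=4):
    """Memory for one seq_len x seq_len score matrix, in MiB."""
    return seq_len * seq_len * bytes_per_score / (1024 ** 2)

for seq_len in (512, 1024, 2048):
    print(seq_len, attention_score_count(seq_len), score_memory_mib(seq_len))
```

Under these assumptions, doubling the sequence length quadruples both the number of scores and the memory needed to hold them.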

To overcome this challenge, ongoing research focuses on optimizing the efficiency of transformers. Strategies like model compression, parameter sharing, and distributed computing aim to reduce the computational demands without compromising the power of transformers. These advancements will ensure that transformers can be effectively used in various applications, even on resource-constrained devices.
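As one illustration of model compression, the sketch below applies symmetric 8-bit quantization to a handful of weights. This is only one of several compression techniques and is not necessarily the one a given system uses; the weight values are made up.

```python
def quantize(weights, bits=8):
    """Map floats to signed integers using one shared scale factor."""
    max_int = 2 ** (bits - 1) - 1  # 127 for 8 bits
    largest = max(abs(w) for w in weights)
    scale = largest / max_int if largest else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(codes, scale):
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]

weights = [0.42, -1.27, 0.05, 0.9]  # made-up values for illustration
codes, scale = quantize(weights)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight is stored as a small integer instead of a 32-bit float, at the cost of a rounding error of at most half the scale factor.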

Complexity and Interpretability

The architecture of transformers can be highly complex, with many layers and a very large number of parameters. While this complexity enables their impressive performance in tasks like natural language processing and computer vision, it can also make them challenging to interpret. Understanding how transformers make specific decisions or extract meaning from the input data can be a non-trivial task.

Researchers are actively working on developing interpretability techniques for transformers. By analyzing attention weights and feature importance, they aim to shed light on the inner workings of transformers and make their decisions more transparent. These efforts will not only enhance our understanding of transformers but also ensure their responsible and ethical use in critical applications.
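A simple form of attention-weight analysis is to ask, for each token, which other tokens it attends to most. The sketch below uses a hypothetical helper `top_attended` and hand-written weights rather than values from a real model.

```python
def top_attended(tokens, attention, k=1):
    """For each token, list the k tokens it attends to most strongly."""
    report = []
    for token, row in zip(tokens, attention):
        ranked = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        report.append((token, [(tokens[j], row[j]) for j in ranked[:k]]))
    return report

tokens = ["the", "cat", "sat"]
# Hypothetical attention weights (each row sums to 1), not from a real model.
attention = [
    [0.6, 0.3, 0.1],
    [0.2, 0.5, 0.3],
    [0.1, 0.7, 0.2],
]
report = top_attended(tokens, attention)
for token, strongest in report:
    print(token, strongest)
```

Here "sat" attends most strongly to "cat". Summaries like this are a starting point for inspection; high attention weight alone does not prove that a token drove the model's decision.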

Future Developments

The future of transformers holds exciting possibilities. Researchers are continually pushing the boundaries of transformer models, exploring novel architectures, and experimenting with different attention mechanisms. These advancements aim to further improve the performance, efficiency, and interpretability of transformers.

Additionally, the incorporation of transformers into new domains and applications is an area of active research. From healthcare to finance, transformers are poised to revolutionize various industries by tackling complex problems and delivering state-of-the-art results.

As advancements in transformer technology continue, we can expect increased attention to overcoming challenges, further refining their capabilities, and enabling their seamless integration into our daily lives.

| Challenges | Potential Solutions |
|---|---|
| Parallel processing demands | Model compression, parameter sharing, distributed computing |
| Complexity and interpretability | Interpretability techniques, analyzing attention weights, feature importance |
| Future developments | Exploring novel architectures, experimenting with different attention mechanisms |

Conclusion

Transformers have revolutionized sequence-to-sequence learning in the field of AI, providing unparalleled natural language processing capabilities and finding applications in various domains. Built on the foundation of self-attention, these models have reshaped the landscape of sequence tasks, capturing intricate relationships and long-range dependencies.

Throughout this article, we explored the power of transformers in natural language processing, computer vision, speech recognition, and beyond. In tasks such as translation, summarization, question answering, and sentiment analysis, transformers have excelled at capturing linguistic nuance and long-range context, making them indispensable in these areas.

While transformers offer transformative capabilities, they also come with their own set of challenges. Their parallel processing demands significant computational resources, and the complexity of their architecture can hinder interpretability. However, ongoing research and development aim to optimize efficiency and interpretability, ensuring that transformers continue to evolve and improve in the future.

Looking ahead, as research progresses and new advancements are made, transformers hold the potential to further revolutionize the field of AI. With their ability to process all elements simultaneously and their versatility in diverse applications, transformers are expected to keep improving the effectiveness and efficiency of sequence-to-sequence learning.

FAQ

What are Transformers?

Transformers are neural networks built on self-attention that are revolutionizing sequence-to-sequence learning in AI. They excel at capturing complex relationships and long-range dependencies.

In which fields do Transformers shine?

Transformers shine in natural language processing tasks such as translation, summarization, question answering, and sentiment analysis. They also show promise in computer vision and speech recognition tasks.

What are some applications of Transformers in computer vision?

Transformers are used for tasks like image captioning and object detection in computer vision. Their attention mechanisms allow them to understand interactions within scenes.

How do Transformers contribute to speech recognition and synthesis?

Transformers are effective in converting spoken words to text and generating lifelike speech. They capture spoken language nuances accurately, enhancing their performance in these areas.

Are Transformers only applicable to sequence tasks?

No, Transformers have ventured beyond traditional sequence tasks. They have found applications in diverse domains such as protein structure prediction, drug discovery, and music generation, highlighting their versatility.

What are the challenges faced by Transformers?

Transformers demand significant computational resources for parallel processing, and their complex architecture can hinder interpretability. However, ongoing research aims to optimize their efficiency and interpretability.

What is the future of Transformers?

As research progresses, Transformers are expected to continue evolving and transforming the field of AI, further enhancing their capabilities and addressing the challenges they currently face.